It is not inconceivable, but probably also not very likely, that the datacenter business at GPU juggernaut Nvidia could at some point in the next one, two, or three years equal the size of its core and foundational gaming business. It is hard to tell based on current trends, and it all depends on how you extrapolate the two revenue streams from their current points and slopes and reconcile that against the longer-term data for the past six years.

The datacenter business at Nvidia was much smaller than the company’s OEM and IP businesses only a few years ago, and on par with its automotive segment until 2017. That was when GPU-accelerated HPC first really took off after a decade of heavy investment by the company, and also when various kinds of machine learning had matured enough to go into production at many of the hyperscalers and to be deployed as a compute engine on the large public clouds. Only four years ago, HPC represented about two-thirds of the accelerated compute sales for Nvidia’s datacenter products, with the remainder largely dominated by early AI systems, mostly for machine learning training but also for some inference and for experimentation with hybrid HPC-AI workloads. Now, with fiscal 2020 having come to a close in January, we infer from what Nvidia is saying about hyperscalers that AI probably represents well north of half of the datacenter revenue stream.

That datacenter group doesn’t just sell raw GPUs aimed at HPC, AI, and other workloads, but also finished accelerator cards, server subsystems that include processors and networking, and complete, finished DGX-1 and DGX-2 systems. Those GPU sales span the “Pascal,” “Volta,” and “Turing” generations of Tesla accelerator cards, which come in two key form factors and address several markets: the P100 and V100 are aimed at HPC, machine learning training, and database acceleration, while the “Turing” T4 is aimed at machine learning inference, virtual desktop infrastructure, and other kinds of visualization workloads. It is not clear how large the DGX systems business, and the related HGX raw subsystem business, has become, but we suspect that it is substantial even if it falls short of requiring its own line in the Nvidia financials. If the DGX and HGX business were just 25 percent of the roughly $3 billion in annual datacenter group revenues, it would be generating around $750 million a year. For perspective, that is larger than the best year supercomputer maker Cray ever had as an independent company (2015, when it had $725 million in sales). At 20 percent, Nvidia’s full systems business would rake in about $600 million, a little less than Cray did the following year and about a third more than what Cray did in 2018.
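The DGX/HGX bracket is plain share-of-revenue arithmetic. Here is a minimal Python sketch of it, assuming the full fiscal 2020 datacenter figure of $2.98 billion cited later in this story is the base. The 20 percent and 25 percent shares are our hypothetical brackets, not Nvidia disclosures, and the function name is ours.

```python
# Hypothetical bracket for Nvidia's DGX/HGX systems revenue (figures in $ billions).
# Assumption: the base is full fiscal 2020 datacenter revenue of $2.98 billion.
DATACENTER_FY2020 = 2.98
CRAY_BEST_YEAR = 0.725  # Cray's 2015 revenue, its best year as an independent company


def systems_revenue(share: float, datacenter_revenue: float = DATACENTER_FY2020) -> float:
    """Revenue implied if DGX/HGX systems were `share` of datacenter sales."""
    return share * datacenter_revenue


for share in (0.20, 0.25):
    revenue = systems_revenue(share)
    comparison = "above" if revenue > CRAY_BEST_YEAR else "below"
    print(f"{share:.0%} share -> ${revenue * 1000:.0f} million ({comparison} Cray's best year)")
```

This prints $596 million for the 20 percent case and $745 million for the 25 percent case, which round to the $600 million and $750 million figures above.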

Now, all of the other GPUs that are not inside of DGX-1 and DGX-2 systems end up in other systems, and if you count the value of those systems, they are probably driving on the order of $6 billion to $7 billion a year in server sales, which is roughly 8 percent to 10 percent of the value of the server market.

This is significant.

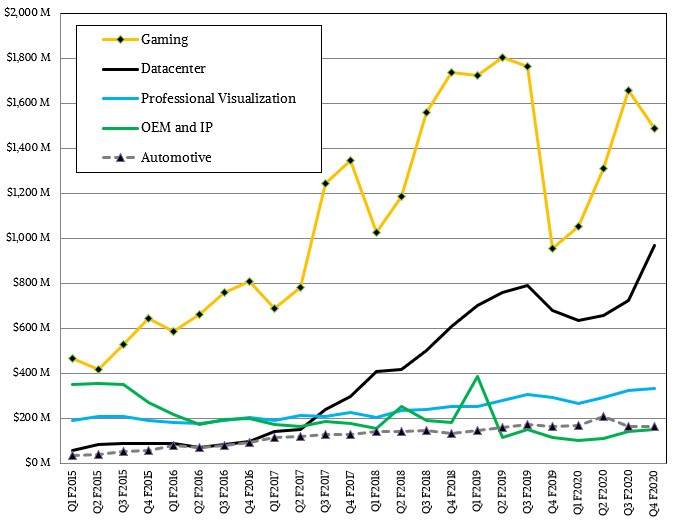

Sales of gaming GPUs and datacenter products, as the chart below shows, tanked a little more than a year ago, which was bad for Nvidia, obviously:

Prior to that, averaging out the wiggles and wobbles a little, both businesses were running at the same slope up and to the right, with gaming showing more sawtoothing and a much less smooth curve than the datacenter business. The tough thing to reckon is how the curves for both might look going forward. The trends are not yet predictable. As we have pointed out many times, particularly when analyzing Cray’s financials when it was an independent company, the HPC business has always been choppy, and the combination of HPC and AI customers is equally choppy. Sometimes the up and down waves cancel each other out in a quarter or across a year, and sometimes they add up to big highs or crater to new lows.

Depending on how you think the gaming and datacenter units at Nvidia will do in the next three years, they could average out to parallel tracks on a slightly less steep curve than the one they followed between fiscal 2017 and fiscal 2019. Suppose the gaming business stays on its gradually flattening curve (and with Intel coming into the discrete GPU business and AMD getting better, there is no reason to believe Nvidia won’t feel pressure on its gaming revenues). If the datacenter business can ride up the AI-enhanced natural language processing and recommendation engine businesses that drove revenues in the fourth quarter, and its growth turns exponential along a steeper curve, then within a year or so the two cross at around $1.8 billion per quarter. If the datacenter curve is a little less steep, then they cross in three years somewhere near $2 billion per quarter. If gaming stays flat and datacenter follows that less steep growth curve, they cross at around $1.5 billion in about two years.
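Each of these scenarios amounts to compounding two quarterly revenue streams until one overtakes the other. The Python sketch below is hypothetical: it assumes constant quarterly growth rates, which real, choppy revenue streams never follow, and the function and its inputs are ours rather than Nvidia’s. The datacenter starting point of $968 million is the fourth quarter fiscal 2020 figure; the gaming starting point of $1.5 billion is an assumption inferred from the flat-gaming scenario crossing at about that level, and the 6 percent quarterly datacenter growth rate is purely illustrative.

```python
from typing import Optional, Tuple


def quarters_to_cross(
    dc_start: float,
    gaming_start: float,
    dc_growth: float,
    gaming_growth: float,
    max_quarters: int = 40,
) -> Optional[Tuple[int, float, float]]:
    """Compound both revenue streams quarter by quarter at constant rates (a
    simplifying assumption) and return (quarter, datacenter, gaming) for the
    first quarter in which datacenter revenue reaches gaming revenue, or None
    if that does not happen within max_quarters."""
    dc, gaming = dc_start, gaming_start
    for quarter in range(max_quarters + 1):
        if dc >= gaming:
            return quarter, dc, gaming
        dc *= 1 + dc_growth
        gaming *= 1 + gaming_growth
    return None


# Hypothetical flat-gaming scenario ($ billions per quarter): datacenter grows
# 6 percent a quarter from $0.968B while gaming holds at an assumed $1.5B.
result = quarters_to_cross(0.968, 1.5, dc_growth=0.06, gaming_growth=0.0)
if result is not None:
    quarter, dc, gaming = result
    print(f"cross after {quarter} quarters at about ${dc:.2f}B vs ${gaming:.2f}B")
```

With those assumed inputs, the lines cross after eight quarters, or about two years, in the neighborhood of the flat-gaming scenario. Nudging either growth rate by a point or two moves the crossover by several quarters, which is why the scenarios above diverge so much.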

All of this comes down to one thing: Accelerated computing is in the datacenter to stay, and at some point, within the next few years, Nvidia’s datacenter business will be as large as the gaming GPU business.

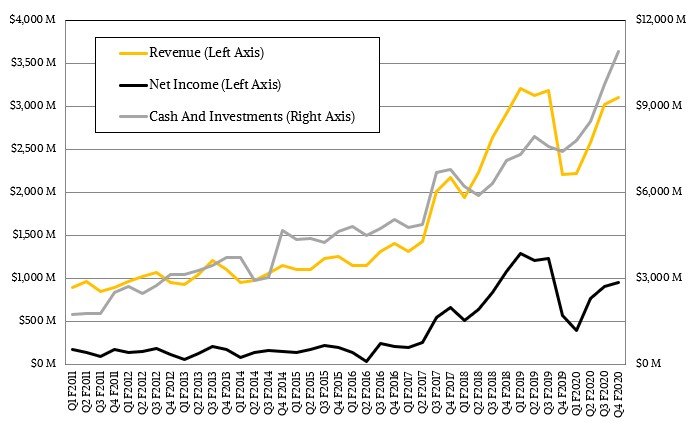

In the fourth quarter of fiscal 2020, Nvidia’s sales overall rose by 40.8 percent to $3.11 billion, and net income was up by a very smart 67.5 percent to $950 million. That left Nvidia with $10.9 billion in cash and investments, more than enough dough to pay the $6.9 billion it has offered to acquire Mellanox Technologies – a deal that was announced nearly a year ago and that is still being held up by the Chinese antitrust authorities.
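The growth rates also let you back out the year-ago quarter, since a figure that rose by g percent to X started at X / (1 + g/100). Here is a quick Python sketch using only the numbers reported above. The helper name is ours, and the derived prior-year values are arithmetic, not figures quoted from Nvidia’s release.

```python
def prior_period(current: float, growth_pct: float) -> float:
    """Back out the prior-period value from a current value and its percentage growth."""
    return current / (1 + growth_pct / 100)


revenue_q4_fy2019 = prior_period(3.11, 40.8)     # $ billions
net_income_q4_fy2019 = prior_period(950, 67.5)   # $ millions
cash_after_mellanox = 10.9 - 6.9                 # $ billions left after a $6.9B payment

print(f"Q4 FY2019 revenue: ~${revenue_q4_fy2019:.2f}B")
print(f"Q4 FY2019 net income: ~${net_income_q4_fy2019:.0f}M")
print(f"Cash and investments left after Mellanox: ~${cash_after_mellanox:.1f}B")
```

This works out to roughly $2.21 billion in revenue and $567 million in net income a year earlier, with about $4 billion in cash and investments remaining if the full Mellanox price came out of the pile.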

The gaming business, on which Nvidia’s manufacturing volume and a substantial portion of its research and development budget rest, rose by 56.3 percent against a weak year-ago quarter. Back then, Nvidia was working through the massive bump of inventory that had built up in the PC channel at the same time that sales of GPUs to cryptocurrency miners dried up completely, as the miners moved to ASICs and then the cryptocurrency market itself tanked. (Those ideas are not related or correlated.)

The datacenter business at Nvidia rose by 42.6 percent to $968 million, but for the full year, the datacenter business was up only 2 percent to $2.98 billion. On a conference call with Wall Street analysts going over the numbers, Colette Kress, Nvidia’s chief financial officer, said that hyperscalers (which we presume also includes the major public cloud builders) accounted for “a little tad bit below 50 percent” of sales. Call it $465 million in Q4 fiscal 2020 if you set it at 48 percent, and that grew by about 35 percent sequentially from Q3 fiscal 2020 ended in October. The vast majority of that hyperscaler revenue, we think, is for AI workloads, and given that enterprises and HPC centers are also doing AI workloads with GPUs, then AI is well north of half of the datacenter revenues. How much, we can’t be sure. It depends on how you allocate AI on hybrid systems.
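The hyperscaler estimate is a simple share calculation, and the roughly 35 percent sequential growth lets you back out the third quarter. Here is a Python sketch. The 48 percent share is our own reading of Kress’s comment, and the implied third quarter and fiscal 2019 values are derived arithmetic rather than reported figures.

```python
DATACENTER_Q4_FY2020 = 968  # $ millions, reported datacenter revenue
HYPERSCALER_SHARE = 0.48    # our reading of "a little tad bit below 50 percent"
SEQUENTIAL_GROWTH = 0.35    # approximate quarter-on-quarter growth in hyperscaler sales

hyperscaler_q4 = DATACENTER_Q4_FY2020 * HYPERSCALER_SHARE
hyperscaler_q3 = hyperscaler_q4 / (1 + SEQUENTIAL_GROWTH)
datacenter_fy2019 = 2.98 / 1.02  # $ billions, backing out the 2 percent annual growth

print(f"Hyperscaler sales, Q4 FY2020: ~${hyperscaler_q4:.0f}M")
print(f"Implied hyperscaler sales, Q3 FY2020: ~${hyperscaler_q3:.0f}M")
print(f"Implied datacenter revenue, FY2019: ~${datacenter_fy2019:.2f}B")
```

That puts hyperscaler sales at about $465 million in the fourth quarter, up from roughly $344 million in the third, against a full fiscal 2019 datacenter base of about $2.92 billion.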

When asked about the current attach rate of GPUs on servers, neither Kress nor Nvidia co-founder and chief executive officer Jensen Huang gave a direct answer. By our estimates, only somewhere between 1 percent and 2 percent of the servers in the worldwide installed base of around 40 million to 45 million machines have GPU acceleration, and assuming an average of three or four GPUs per accelerated machine, that gives you somewhere between 1.2 million and 3.6 million GPUs. (There are a lot of variable inputs there, which shows how a change in one factor can really change the size of the installed base.) But in many cases, those GPUs represent somewhere between 90 percent and 95 percent of the aggregate compute of the machines they are installed in. If you do the math on that, and if the average GPU speedup over the CPUs is somewhere around 10X, then the GPUs represent somewhere between 15 percent and 30 percent of the floating point and integer performance of all of the machines in the world. If the speedup averages 3X, as we have seen on some HPC workloads, then the share of the aggregate performance that comes from the GPUs in the installed server base is between 4 percent and 10 percent. These are guesses in the absence of real data, just to bracket the possibilities.
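The installed base bracket multiplies three uncertain inputs, so its endpoints come from pairing all of the low values and all of the high values. Here is a minimal Python sketch that enumerates every combination of the inputs given above to show the full spread. It computes only the GPU count, since the share-of-performance figures depend on further assumptions about how the speedup is applied; the function name is ours.

```python
from itertools import product

# Bracketing inputs from our estimates (none of these are Nvidia figures).
SERVER_COUNTS = (40e6, 45e6)   # worldwide installed base of servers
ATTACH_RATES = (0.01, 0.02)    # fraction of servers with GPU acceleration
GPUS_PER_SERVER = (3, 4)       # average GPUs per accelerated server


def gpu_installed_base(servers: float, attach_rate: float, gpus_per_server: int) -> float:
    """Number of GPUs implied by a server count, attach rate, and GPUs per machine."""
    return servers * attach_rate * gpus_per_server


# Evaluate all eight combinations of low and high inputs.
estimates = [
    gpu_installed_base(s, a, g)
    for s, a, g in product(SERVER_COUNTS, ATTACH_RATES, GPUS_PER_SERVER)
]
print(f"GPU installed base: {min(estimates) / 1e6:.1f}M to {max(estimates) / 1e6:.1f}M")
```

The minimum and maximum land at 1.2 million and 3.6 million GPUs, and the six combinations in between show how much the estimate swings when any single input moves.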

Huang did not give out any numbers like this, but he did explain why he thought the potential market for application acceleration in the datacenter was quite large.

“I believe that every query on the Internet will be accelerated someday,” Huang explained.

“And at the very core of it, almost all queries will have some natural language understanding component to it. Almost all queries will have to sort through and make a recommendation from the trillions of possibilities, filter it down, and recommend a handful of answers to your queries. Whether it’s shopping or movies or just asking locations or even asking a question, the number of the possibilities of all the answers – versus what the best answer is – needs to be filtered down. And that filtering process is called recommendation. That recommendation system is really complex and deep learning is going to be involved in all that. That’s the first thing: I believe that every query will be accelerated. The second is, as you know, CPU scaling has really slowed and there’s just no two ways about it. It’s not a marketing thing. It’s a physics thing. And the ability for CPUs to continue to scale without increasing cost or increasing power has ended.”

But the potential for the GPU is not so much that it is a very good parallel processing engine with high-speed memory and lots of bandwidth, but more that the GPU and its CUDA environment are programmable.

“Our approach for acceleration is fundamentally different from an accelerator,” Huang continued. “Notice we never say accelerator, we say accelerated computing. And the reason for that is because we believe that a software-defined datacenter will have all kinds of different AIs. The AIs will continue to evolve, the models will continue to evolve and get larger, and a software-defined datacenter needs to be programmable. It is one of the reasons why we have been so successful. And if you go back and think about all the questions that have been asked of me over the last three or four years around this area, the consistency of the answer has to do with the programmability of architecture, the richness of the software, the difficulties of the compilers, the ever-growing size of the models, the diversity of the models, and the advances that these models are creating. And so we’re seeing the beginning of a new computing era. A fixed function accelerator is simply not the right answer.”

Nvidia did not give a lot of detail on sales within the datacenter group, but did say that shipments of the Tesla T4 accelerators were up by 4X year on year as the public cloud providers put T4s into their infrastructure for inference applications. As it turns out, the Tesla T4 and V100 both set records for shipments and revenues in the quarter.

As for the Mellanox deal, Nvidia said that it is working with the Chinese authorities to get their approval, and it expects that the deal will close “in the first part of calendar 2020.”


With Nvidia being just shy of $1B in the Datacenter and assuming 20% in DGX/HGX, your suggestion of $6-7B in total server sales means that the remaining $800M is only at an average 11-13% share of wallet on their systems. Especially given that the average attach rate is likely around or higher than two, with an AUR of maybe somewhere around $3-4K, I am convinced that the average share of wallet on the systems is much higher. But even if that means that the $800M drives “only” $3-4B, it’s still very impressive and a trend I don’t see stopping anytime soon.