These are challenging times for Intel. Long – and still – the dominant chip maker in the datacenter with its battle-tested Xeon processors, the company is now seeing challenges everywhere.

There is a growing cast of competitors – from a re-energized AMD and IBM to Arm and partners like Cavium (now part of Marvell) and Ampere (headed by former Intel president Renee James) – ongoing struggles to get its 10 nanometer processors out into the market and the search for a new CEO to replace Brian Krzanich and lead the company into this more tumultuous future.

But Intel, which has more than 95 percent of the market for server processors, has shown over the past week that it has no intention of giving up any of its ground, at least not without a fight.

AMD for more than a year has been aggressively been pushing its way back into the space behind the strength of its “Naples” Epyc processors based on the company’s “Zen” microarchitecture. The chips have been adopted by the top server OEMs, including Dell EMC, Hewlett Packard Enterprise and Lenovo, as well as a growing cadre of cloud providers, such as Microsoft Azure, Baidu and, most recently, Oracle Cloud and Amazon Web Services, the largest of the Super Seven hyperscalers.

Last week in San Francisco, AMD unveiled details of the next generation of its microarchitecture, Zen 2, which will be found in the upcoming 7 nanometer “Rome” chip, due out sometime next year. As we at The Next Platform laid out, the chip maker also talked about a roadmap that includes “Milan,” a 7+ nanometer processor that will be based on the Zen 3 microarchitecture and will come out in 2020 or early 2021, with more coming after that. To add to the pressure, AMD also introduced its Radeon Instinct MI60 GPUs that will come out this quarter and are designed to challenge Nvidia in the increasingly important accelerator space.

It all has the vibe of more than a decade ago, with AMD running out with Opteron, beating Intel to the punch with 64-bit X86 processors sporting multiple cores and gaining ground in the datacenter until its own missteps and Intel’s resources beat back the challenge. Now AMD is preparing to roll out the first 7 nanometer datacenter chip within 12 months and Intel is won’t launch its 10 nanometer “Ice Lake” Xeons in volume until 2020.

However, two days before AMD’s San Francisco soiree, Intel announced the Xeon “Cascade Lake Advanced Performance” processor, a 48-core behemoth that is comprised of two 24-core 14 nanometer chips that – like Epyc – have Intel moving away from the monolithic design of chips past and instead is embracing a multicore module (MCM) that leverages one of the three UltraPath Interconnect (UPI) links to hook the two physical chips together to create what essentially is a 48-core socket with 12 memory controllers. (AMD does this in its Epyc 7000 chips by using Ryzen chiplets, two memory controllers and PCI-Express 3.0 interconnect lanes to create an Infinity Fabric to looks like a 32-core chip with eight memory controllers. “Rome” will present 64 cores, an enhanced Infinity Fabric, and PCI-Express 4.0.)

Intel will be talking about Cascade Lake AP again this week at the SC18 supercomputing show in Dallas, including a new benchmark, performance numbers with HPC and AI applications against current Epyc chips in two-socket systems and how the chip will fit in with the evolving HPC world.

“In the past, compute falls into the scalar and then the vector, and there now are new models of parallelism around spatial or data-driven parallelism that we are aggressively working towards in capturing and expressing in hardware,” Rajeeb Hazra, corporate vice president and general manager of Intel’s Enterprise and Government Business Group, said during a press conference before the opening of the supercomputer show. “For this community, it’s been all about double-precision and floating-point operations – some single precision – and of course the expanded world of HPC and AI requires a lot more than just those. It requires numerics and precision capabilities against those numerics that expand the needs for efficient computing.”

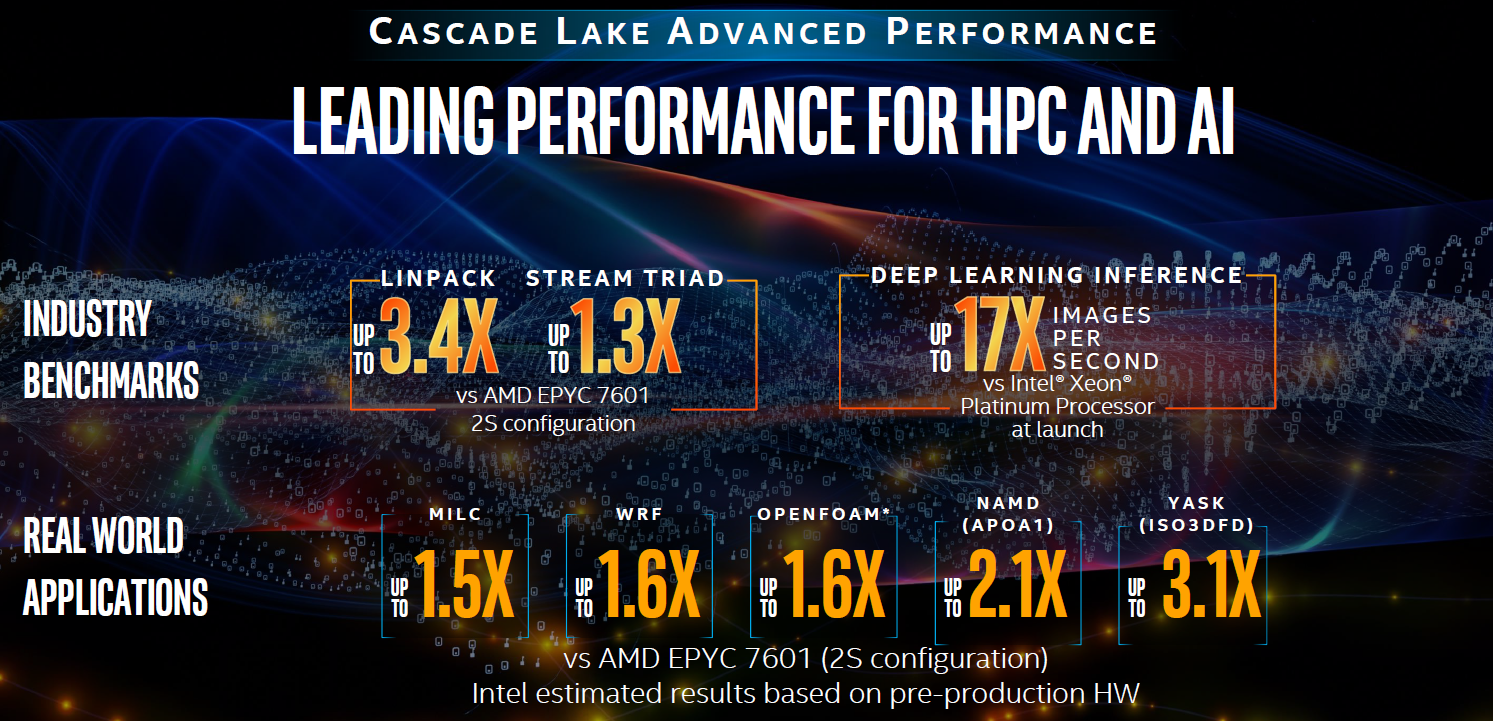

As we outlined here, Intel already indicated that Cascade Lake AP delivered up to 3.4X the performance of AMD’s Epyc 7601 in a two-socket configuration in the Linpack benchmark – which is used in the Top500 list of the world’s fastest supercomputers – and up to 1.3X in the STREAM Triad benchmark, used as a benchmark for memory bandwidth for such HPC workloads like simulation and modeling.

Hazra said that in the ResNet-50 deep learning inference benchmark – which measures image recognition for AI systems – the Cascade Lake AP chip was up to 17X better in processing images per second then the current “Skylake” Xeon Platinum chip.

In a range of applications in various industries that leverage HPC modeling and simulation, the chip, which will come out next year, also bested the Epyc 7601, including up to 1.5X the performance in the MILC applications in quantum chromodynamics, up to 1.6X in WRF, used for weather and atmospheric research, and up to 1.6X in OpenFoam, an open source application for fluid dynamics.

With the NAMD application, another application for fluid dynamics, this one for biological research, the performance gain was up to 2.1X, while for YASK – a stencil benchmark for partial differential applications – it was up to 3.1X.

Hazra said the MCM design – from the 12 memory controllers to the interconnect and architecture – optimizes the chip for HPC workloads, driving performance and density while driving down costs and facilitating “a multi-chip package without losing performance or introducing sarcastic behavior.”

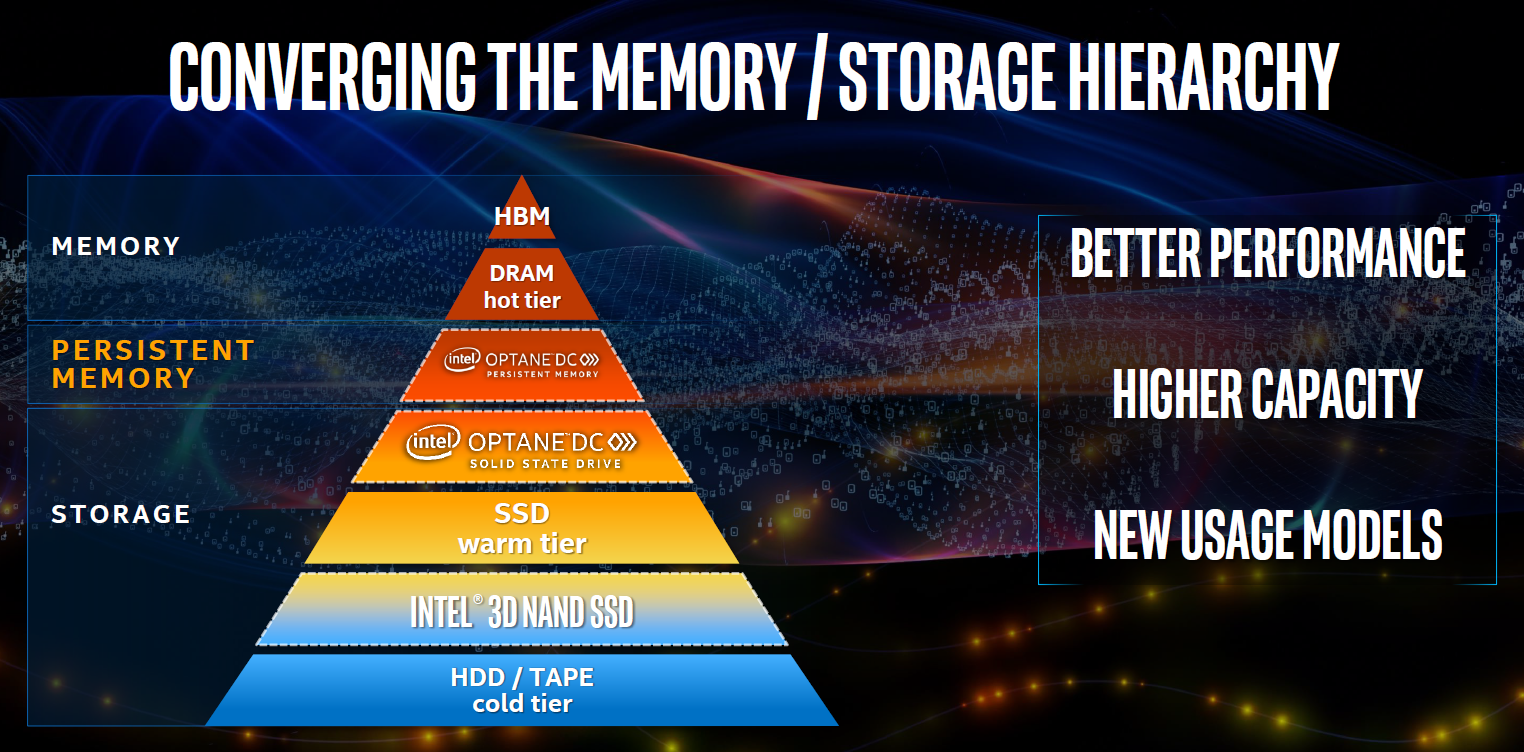

Another topic at SC18 will be Intel’s Optane DC persistent memory, a nonvolatile memory that fits in the datacenter memory hierarchy between DRAM and flash and disk that keeps data intact when power is lost, driving availability and scalability at costs lower than DRAM. At a time when data is no longer housed only in storage systems in the core datacenter but also in the cloud and elsewhere, “the challenge from a systems perspective that is trying to crunch a lot of data very quickly is this continuity has created a fair amount of burden everywhere from the application being aware of what’s in memory vs. needing to pull from storage to performance balance for a lot of things that need to be martialed between memory and storage very effectively and efficiency and, of course, system design as well,” Hazra said.

“What we have done is the blurring of those lines between memory and storage,” he said. “This persistent memory is one that fits in that gap and now starts to make what is essentially a storage-class device look like a part of memory hierarchy or an extension of the known memory hierarchy beyond DRAM. It also makes, from storage standpoint, another layer of storage that is both persistent but closer in performance and can be used via memory semantics in the platform.”

In this way, “storage gets more capable extending its reach closer to where the compute is and memory gets to be more effective in higher capacities and better performance as memory. The critical thing about this is not just better performance and higher capacity but the great new usage models such as a blurring or convergence of the memory hierarchy that can be done.”

The Frontera supercomputer at the Texas Advanced Computing Center (TACC) in Austin will be the first adopter of the technology, Hazra said. He was pressed about whether systems powered by processors from other vendors will be able to leverage Optane DC, but said that currently only those using Xeons will work with the persistent memory.

Hazra also was asked about AMD’s upcoming Rome chip with the Zen 2 microarchitecure, saying that the company as confident in the “unparalleled assets” that are driving the evolution of Intel’s products, both hardware and software.

“Those assets are not just around cores and frequencies and things like that of the past, but around how we put a diverse set of IP together, how we actually enable standards in the ecosystem … and harness the energy from both hardware and software out there on those platforms,” he said. “So, yes, in summary, I’d say we are going to be extremely competitive and drive to this next phase of converged computing for HPC and AI with the innovations we have planned and I’m very confident that that will happen in a way that our customers can continue to bank on us for the leading technologies and solutions they need to run their businesses.”

what do you mean vs. Epyc 7601 2 socket? Do you mean that one 48-core Cascade Lake AP is 3.4x better in Linpack than 2 (TWO) 7601s (64 cores total)?