If you really want to know what is going on in the HPC market, you have to be careful about using the Top 500 rankings of “supercomputers” as a yardstick. The twice-annual rankings are always useful – any test on any cluster of reasonable size provides some insight – but this is by no means a ranking of systems that are doing traditional HPC simulation and modeling. Many systems at hyperscalers and cloud builders are now being partitioned and tested, and they are changing the nature and character of the rankings.

For many years now, Gilad Shainer, vice president of marketing at Mellanox Technologies, has peeled apart the Top 500 rankings to pull out the real HPC systems from those machines that are doing other kinds of work in their day jobs and that do not normally have anything to do with Linpack or the MPI protocol. Mellanox is well suited to try to do this analysis, since it has a strong position in true HPC networking with its InfiniBand line as well as great insight into hyperscaler and cloud builder technology because it is the dominant supplier of server adapter cards in these areas and it also sells some InfiniBand and even more Ethernet switches to some of these companies.

Bringing in these hyperscale and cloud systems obviously skews the data hugely, particularly since these companies have millions of servers, with as many as 100,000 machines in a single datacenter. In theory, they could run Linpack across their massive and relatively low-latency Clos networks at a scale that even the largest HPC centers in the world, whose systems top out at under 10,000 nodes these days, cannot hope to match.

Let’s do some math. The largest non-HPC, CPU-only system that is based on Intel’s fairly new “Broadwell” Xeon E5-2600 v4 processor and that also uses an Ethernet interconnect is made by Lenovo. It is ranked number 105 on the list, with 57,600 cores delivering 1.65 petaflops sustained against 2.12 petaflops peak; this machine uses 40 Gb/sec Ethernet, which hyperscalers and cloud builders are replacing with 100 Gb/sec Ethernet these days. It is based on a perfectly standard 20-core E5-2673 v4 processor, with two sockets in each box, so that works out to 1,440 nodes to deliver that 1.65 petaflops. For the sake of argument, let’s call this a perfectly bog standard hyperscaler or cloud builder machine.
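The node count and Linpack efficiency follow directly from the figures above. Here is a minimal Python sketch of that arithmetic. The 2.3 GHz base clock and the 16 double-precision flops per core per cycle (two AVX2 FMA units) are assumptions that the text does not state; they were chosen because they reproduce the listed 2.12 petaflops peak.

```python
# Back-of-envelope arithmetic for the Lenovo system ranked No. 105 (June 2018).
# Assumptions, not from the article: 2.3 GHz base clock and 16 DP flops per
# cycle per core for a Broadwell core with two 256-bit AVX2 FMA units.

TOTAL_CORES = 57_600
CORES_PER_SOCKET = 20          # Xeon E5-2673 v4
SOCKETS_PER_NODE = 2
RMAX_PF = 1.65                 # sustained Linpack, petaflops
RPEAK_PF = 2.12                # listed peak, petaflops

ASSUMED_CLOCK_GHZ = 2.3        # assumption
ASSUMED_FLOPS_PER_CYCLE = 16   # assumption: 2 FMA units x 4 doubles x 2 ops


def node_count(total_cores: int) -> int:
    """Nodes implied by the core count for two-socket, 20-core boxes."""
    return total_cores // (CORES_PER_SOCKET * SOCKETS_PER_NODE)


def modeled_peak_pf(nodes: int) -> float:
    """Peak = nodes x sockets x cores x GHz x flops/cycle, in petaflops."""
    gflops = (nodes * SOCKETS_PER_NODE * CORES_PER_SOCKET
              * ASSUMED_CLOCK_GHZ * ASSUMED_FLOPS_PER_CYCLE)
    return gflops / 1e6  # gigaflops -> petaflops


nodes = node_count(TOTAL_CORES)
print(f"nodes: {nodes}")
print(f"Linpack efficiency: {RMAX_PF / RPEAK_PF:.1%}")
print(f"sustained per node: {RMAX_PF * 1e3 / nodes:.3f} teraflops")
print(f"modeled peak: {modeled_peak_pf(nodes):.2f} petaflops")
```

The modeled peak landing on the listed 2.12 petaflops is a consistency check on the assumed clock, not a confirmation of it. The roughly 78 percent ratio of sustained to peak performance is the Linpack efficiency of this Ethernet-connected machine.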

One hyperscaler datacenter with 100,000 servers akin to the machine above is probably somewhere between 60 petaflops and 70 petaflops, just to give you a sense of scale. These hyperscalers and cloud builders have dozens of regions, each with many datacenters – and we are not counting the vast GPU-accelerated farms they use for machine learning training and sometimes inference, which we believe have equally large concentrations of computing power. These hyperscaler and cloudy concentrations of CPU-only computing power may not be as compute dense as their GPU-accelerated systems, but they are vast nonetheless. It is not absurd to figure that, collectively across their systems, the Super 8 hyperscalers and cloud builders – Google, Amazon, Microsoft, and Facebook in the United States and Baidu, Alibaba, Tencent, and JD.com or China Mobile in China, depending on who you want to rank fourth in that country – have many exaflops of double precision computing power installed. Several of them, all by themselves, might have in excess of an exaflops.
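To connect the exaflops claim to the per-node figure from the Lenovo machine, here is a small Python sketch. It assumes perfectly linear Linpack scaling, which is an idealization that no real fleet or network would deliver, so the node counts it returns are lower bounds.

```python
import math

# Figures from the No. 105 system described above.
RMAX_PF = 1.65   # sustained Linpack, petaflops
NODES = 1_440    # two-socket, 20-core nodes


def nodes_needed(target_pf: float) -> int:
    """Minimum node count to reach target_pf, assuming perfectly linear scaling."""
    per_node_pf = RMAX_PF / NODES
    return math.ceil(target_pf / per_node_pf)


print(nodes_needed(1_000))  # one exaflops = 1,000 petaflops; about 873,000 nodes
```

At roughly 1.15 teraflops sustained per node, an exaflops of Linpack would take on the order of 873,000 such nodes even under ideal scaling. That is a count within reach only of operators running millions of servers.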

It is important to remember who has invested more in infrastructure, and what could be brought to bear on some big HPC problems if someone really wanted to.

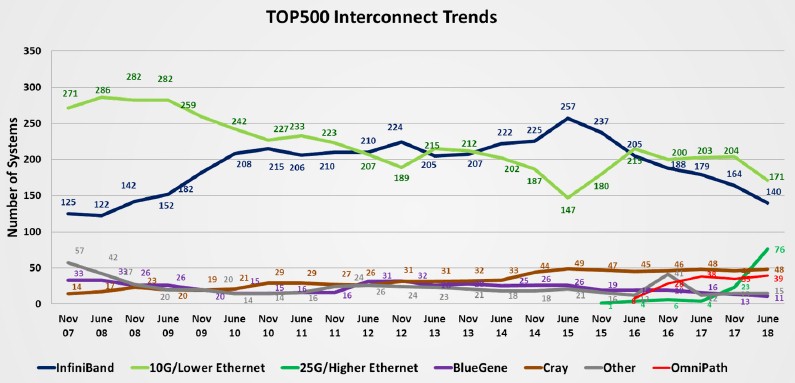

With that in mind, here is the Top 500 networking trendline over time:

If you looked at this chart and thought it was a dataset culled from just traditional HPC centers, you would draw some wrong conclusions from the data. Take a look at that jump in Ethernet-connected systems with speeds in excess of 25 Gb/sec. There are 76 of them in the June 2018 Top 500 rankings, four times as many as a year ago and double the count of only seven months ago on the November 2017 list. That is a really big jump in Ethernet connectivity. The only issue – and Mellanox knows because its ConnectX adapters are used in all of these machines – is that only one of those 76 machines is actually running HPC applications. The others are partitions of hyperscale and cloud datacenters, plus a few software development firms that, for reasons of their own, ran the Linpack benchmark on slices of their clusters.

As you can see from the full dataset, Cray’s various “Gemini” XT and “Aries” XC interconnects have collectively been holding steady on the list, and Intel’s Omni-Path InfiniBand-ish networking rose quickly in the past few years and has also reached a kind of steady state. The BlueGene interconnect from IBM is gradually declining as the BlueGene machines reach the end of their life and are replaced. Over time, 10 Gb/sec Ethernet will continue to decline, and it seems likely that InfiniBand has reached a kind of equilibrium if the number of non-HPC machines stays at roughly half of the list. (The June 2018 list had 253 true HPC machines, by Shainer’s reckoning, compared to 247 non-HPC machines.)

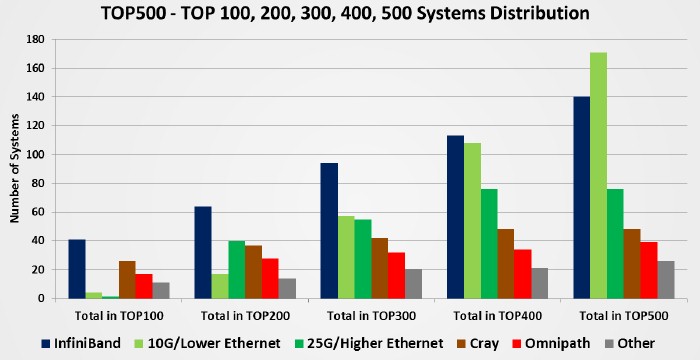

Drilling down into the June 2018 list, here is a very interesting breakdown of the distribution of various networking technologies deployed across the list in groupings of a hundred from the full list down to the Top 100. There really are differences in terms of usage and industry sector based on this segmentation. The Top 100 machines are almost always real HPC systems, but the mix dilutes as you expand the dataset by the next hundred and so on. Take a look:

With the full list, you would conclude that Mellanox InfiniBand utterly dominates the Top 100, has a very big portion of the next 200 machines, and sees more modest adoption in the bottom 200. You would also conclude that Ethernet, across all speeds, starts dominating outside of the Top 200, and that beyond the Top 250 or so Ethernet has more share than InfiniBand, even if you count Mellanox InfiniBand and Intel Omni-Path together. Cray’s share comes mostly from the Top 200.
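The segmentation in these charts amounts to computing each interconnect’s share within the Top N, for N = 100, 200, and so on up to 500, optionally after dropping the non-HPC systems. Below is a minimal Python sketch under that reading. The `Entry` record, the `is_hpc` flag, and the toy entries are hypothetical illustrations, not Top 500 data, and the sketch is not Mellanox’s actual method.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Entry:
    rank: int           # position on the list, 1 = fastest
    interconnect: str   # e.g. "InfiniBand", "Ethernet"
    is_hpc: bool        # hypothetical flag standing in for the HPC/non-HPC call


def shares_by_top_n(entries: list[Entry], step: int = 100,
                    hpc_only: bool = False) -> dict[int, dict[str, float]]:
    """Fraction of systems using each interconnect within the Top N, N = step, 2*step, ..."""
    pool = [e for e in entries if e.is_hpc] if hpc_only else list(entries)
    max_rank = max(e.rank for e in entries)
    result: dict[int, dict[str, float]] = {}
    for n in range(step, max_rank + 1, step):
        # Buckets are cumulative and keyed on the original list rank.
        subset = [e for e in pool if e.rank <= n]
        counts = Counter(e.interconnect for e in subset)
        result[n] = {name: c / len(subset) for name, c in counts.items()} if subset else {}
    return result


# Toy entries for illustration only; these are not Top 500 data.
toy = [
    Entry(1, "InfiniBand", True),
    Entry(2, "Cray", True),
    Entry(3, "InfiniBand", True),
    Entry(4, "Ethernet", False),
    Entry(5, "Ethernet", False),
    Entry(6, "Omni-Path", True),
]

print(shares_by_top_n(toy, step=2))
print(shares_by_top_n(toy, step=2, hpc_only=True))
```

This sketch keys the buckets on the original rank rather than re-ranking the HPC-only subset, so the Top 100 of both views covers the same slice of the list. That is one design choice among several, and the article does not say which one was used.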

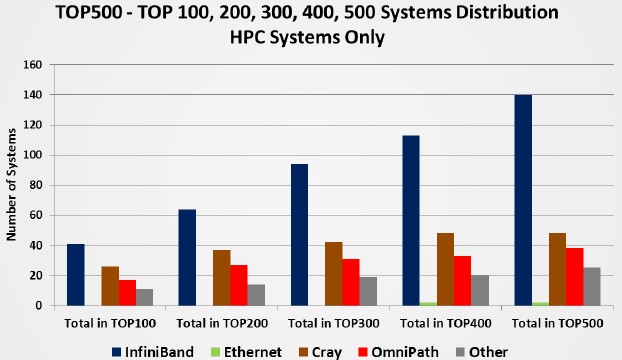

Now, look what happens when you peel out the non-HPC systems:

Ethernet is essentially non-existent, with just a handful of machines, and InfiniBand has a 55.3 percent share of interconnects on HPC systems, at 140 out of the 253 HPC-only machines. If the rise of hyperscalers and cloud builders on the list had not pushed out real HPC machines, we think InfiniBand’s share would be considerably higher. If you add InfiniBand and Omni-Path together (which we think is warranted given their heritage and the fact that both interconnects support the OpenFabrics InfiniBand drivers for applications), then even on the June list, with all of that non-HPC iron pushing out real HPC machines, the combination has a 70.7 percent share of HPC interconnects. Cray has just a hair under a 19 percent share, and other interconnects (including homegrown ones as well as the BlueGene 3D torus) have a 10.3 percent share. We will concede that Ethernet might actually have a larger share if the next 247 real HPC machines were added to the list, but it would not dominate the way the list as currently presented suggests.

Having said all of this, we are still perplexed that Ethernet running the RDMA over Converged Ethernet (RoCE) protocol is not more popular among real HPC sites. The difference has to come down to latency. InfiniBand and Omni-Path come in somewhere below 100 nanoseconds for a port-to-port hop, while the best Ethernet without RoCE is maybe 350 nanoseconds to 450 nanoseconds, and with RoCE it gets to around 250 nanoseconds.
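Per-hop differences compound, because a message crossing a multi-tier Clos fabric passes through several switches. The rough Python sketch below multiplies the per-hop figures quoted above by an assumed five switch hops (leaf, spine, core, spine, leaf). The hop count is an assumption, 100 nanoseconds is used as an upper bound for InfiniBand and Omni-Path, and NIC, host software, and cable delays are ignored.

```python
# Illustrative only: per-hop switch latencies from the ranges quoted in the text,
# multiplied by an assumed hop count. NIC, host stack, and cable delays are ignored.
PER_HOP_NS = {
    "InfiniBand / Omni-Path (upper bound)": (100, 100),
    "Ethernet with RoCE": (250, 250),
    "Ethernet without RoCE": (350, 450),
}

ASSUMED_HOPS = 5  # assumption: leaf -> spine -> core -> spine -> leaf


def path_latency_ns(per_hop: tuple[int, int], hops: int) -> tuple[int, int]:
    """Low and high switch latency for a path with the given number of hops."""
    low, high = per_hop
    return low * hops, high * hops


for fabric, per_hop in PER_HOP_NS.items():
    low, high = path_latency_ns(per_hop, ASSUMED_HOPS)
    print(f"{fabric}: {low}-{high} ns across {ASSUMED_HOPS} switch hops")
```

Under these assumptions, the switch contribution is at most 500 nanoseconds for InfiniBand and Omni-Path, about 1.25 microseconds for RoCE, and 1.75 to 2.25 microseconds for Ethernet without RoCE, before any host-side overhead is added.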

That brings us to the geographic breakdown of the current Top 500 list, and what happens when we remove those non-HPC systems from it. On the full Top 500 list, the United States reached an all-time low of 124 systems, down from 145 six months earlier, for a 24.8 percent share, while China raised its count from 202 on the November 2017 list to 206 on the June 2018 list, for a 41.2 percent share. Many of the US and Chinese machines, however, sit at hyperscale and cloud sites, and that reflects the geopolitical campaign these countries have been waging for a number of years: trying to dominate the list with systems from these industries.

Focusing in on the true HPC systems, however, presents a very different picture. As best as Shainer can figure, the United States had 85 true systems on the HPC-only segment of the list, giving it a 33.6 percent share. China had only 29 real HPC machines on the list, for an 11.5 percent share of the 253 real HPC machines, and Japan is still ahead of China with 35 real HPC machines, for a 13.8 percent share. That leaves Europe, with a collective 78 machines (including Russia), or a 30.8 percent share, and the rest of the world with 26 systems, or a 10.3 percent share. This, we think, sounds right. China is growing and has some massive machines, to be sure, but the United States and Europe still dominate, and Japan is right in there.
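You can check the regional percentages in the two paragraphs above against the system counts. The small Python sketch below uses only counts quoted in the text. The regional grouping follows Shainer’s breakdown as reported here.

```python
FULL_LIST_TOTAL = 500
HPC_ONLY_TOTAL = 253

# System counts quoted in the text (June 2018 list).
full_list = {"United States": 124, "China": 206}
hpc_only = {
    "United States": 85,
    "Europe (incl. Russia)": 78,
    "Japan": 35,
    "China": 29,
    "Rest of world": 26,
}


def shares(counts: dict[str, int], total: int) -> dict[str, float]:
    """Percentage share of each region, rounded to one decimal place."""
    return {region: round(100 * n / total, 1) for region, n in counts.items()}


# The five HPC-only groups should account for every one of the 253 systems.
assert sum(hpc_only.values()) == HPC_ONLY_TOTAL

print("Full list:", shares(full_list, FULL_LIST_TOTAL))
print("HPC only:", shares(hpc_only, HPC_ONLY_TOTAL))
```

The same 124 US systems that make up 24.8 percent of the full list shrink to 85 in the HPC-only view, but against a denominator of 253 instead of 500, the US share rises to 33.6 percent.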
