As the underdog in cloud computing, Google has to take a slightly different tack from industry pioneer and juggernaut Amazon Web Services, which simply believes that all applications should, in the fullness of time, move to the public cloud. AWS, to be precise.

Google started out way up the stack, selling platform services at a time when companies were only really able to get their brains wrapped around the lower-level infrastructure services of compute, storage, and networking. Large enterprises had to go through the familiar process of plugging together systems from components to get comfortable with the whole idea of public clouds. And, in the fullness of time, perhaps they will do as Google itself does internally, which is simply to present services to programmers who stitch them together at the platform level. Google thought it could jump straight to this with its App Engine service, skipping the whole infrastructure step. But most companies do not want to skip that step, we think because they want to learn and understand at their own pace and in their own way to better mitigate risk. That is the nature of enterprise computing.

The trick for Google to bootstrap its Cloud Platform public cloud, then, is to make it easier for enterprises to consume its infrastructure services. Four years ago, Google open sourced Kubernetes, the container orchestration system inspired by its internal Borg and Omega cluster and application controllers, precisely to create a common substrate that would allow more portability of applications than has been possible with public and private clouds built on a mix of different virtual machine architectures lower in the stack. Kubernetes is in essence a giant onramp to Google Cloud Platform, and it is quickly becoming the de facto orchestration layer for Docker containers – even on rival public clouds. Rewriting Borg and Omega in Go, making the result a more generalized orchestrator, and opening it up so the community could spread it across their platforms and make it ubiquitous was a master stroke. It was also a risky move, but it is paying off for Google.

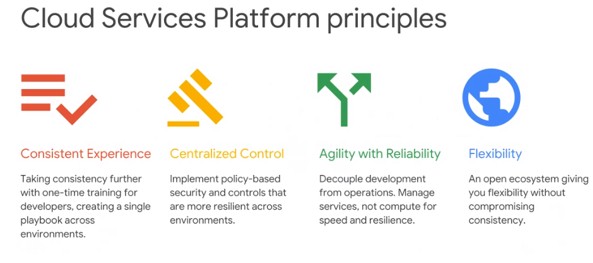

However, that doesn’t mean that it is easy for large enterprises to run Kubernetes on premises and to get the kind of consistency that they require across the myriad Kubernetes services that are available. To that end, at the Next 2018 conference today, Google is unveiling Cloud Services Platform, a managed Kubernetes service that provides the look and feel of the production-grade Google Kubernetes Engine service. Google will be working with partners in the Kubernetes ecosystem to create what is in essence a hybrid Kubernetes cloud that behaves like GKE, even though it really isn’t the same thing as dropping chunks of Cloud Platform into datacenters.

To get some insight into what Cloud Services Platform is and how it works, The Next Platform had a chat with Urs Hölzle, a Google Fellow and senior vice president of technical infrastructure at the search engine giant and rising cloud force. Cloud Services Platform has been deployed by early adopter customers and will be in alpha during the third quarter, with beta testing and general availability following based on feedback and feature polish. It has the potential to help Google bring a lot more customers to its cloud, at least those whose applications are written for Kubernetes.

Timothy Prickett Morgan: I will dive right in with the obvious question. Back in the day, when OpenStack was young and difficult to consume for enterprises, Rackspace Hosting, one of the big cloud providers of the time that was spearheading the project, offered a managed service version of its own cloud that enterprises could rent and install on premises. Microsoft is sort of doing this with Azure Stack, giving a local and scaled down version of its Azure cloud. Why not just go all the way and do that with a fully managed Google Kubernetes platform service on premises?

Urs Hölzle: Kubernetes is really the portability layer, and you need some form of a cluster operating system that gives you virtual machines and a programmable network and stuff like that. That is the job of the on premises provider. Given the success of Kubernetes, that is actually in pretty good shape across the industry. You have many suppliers, and the Kubernetes certification process will help, too. For the platform itself, we are going to do certification testing of the parameters of the on premises environment – what kinds of instances, and so on – to make sure the experience is a good one.

TPM: It seems to me that the real challenge is providing a consistent experience between a cloud provider like Google and whatever is being installed on premises. I understand that Kubernetes is the compatibility layer across the private and public clouds, but there is a lot of stuff you need to do down in the substrate. So, for instance, enterprises don’t have the Google File System on premises, and they don’t have virtual private cloud networking like Google has created for its own use and for Cloud Platform.

Urs Hölzle: I see what you are saying, and there are really two answers. The thing to remember is that this is all really working at Layer 7 in the network, basically. Every Kubernetes container has a Layer 7 proxy built in that does all of the standard things – authentication, authorization, logging, encryption, and so on. So the underlying physical network can be a flat network. You don’t have to program the firewall because at Layer 7 every pod is encapsulated.

This is similar to the BeyondCorp strategy that we have for on premises security, where every single call needs to be authenticated at Layer 7. This means you have to worry less about network access, and that is important because in today’s world, network access is not a great proxy for figuring out whether I should trust you. For one, you want to give your employees access from the road, and for two, at any large company you really do not want to rely on the fact that plugging into a particular port in the wall should give you extra privileges.

So the Kubernetes service uses the same zero trust principle, and the fact that you can reach my port doesn’t mean anything. All of the authentication between two services involves a certificate that proves I am the caller, and there is a system that I have to call out to that confirms whether I am allowed to call that service and for which operations. Because all of that happens at the higher level, unless you have a high performance need, the underlying network can simply be a flat one.

That is where partnerships come in. We have been working with Cisco Systems for over a year, for example, and with some of its networking hardware and proprietary software it can provide a higher performance experience of this on premises Kubernetes. Customers won’t know Cisco is behind the scenes, but everything will work better if you buy UCS servers and networking and so on.

So there is lots of room for differentiation to make these on premises services fast through partners. And this is similar to what we do with Kubernetes itself. Kubernetes has software load balancing built in, but F5 Networks, Cisco, and others have written plug-ins for their load balancers to allow it to be done with their hardware.

TPM: Did Google contemplate dropping in a literal piece of Google Cloud Platform into on premises datacenters? I realize that companies have their own preferences for servers and switching, and that is tough to compete against, but a literal piece of Google might have been attractive as an on premises appliance.

Urs Hölzle: Yes, but it is very hard to fit Google Cloud Platform into a box until that box becomes fairly large. There are literally thousands of binaries and microservices behind the scenes, and your minimum deployment size becomes fairly large. And that is the problem with Microsoft’s Azure Stack, which is not really Azure because it is not really possible to pull a full cloud platform into a rack. It just doesn’t work.

TPM: By definition, the storage and the data resiliency methods and the networking and a whole bunch of things have to be different and start at a much lower scale, maybe a few server nodes. I get that.

Urs Hölzle: But really, what makes it difficult is the multitude of services. I don’t know how many thousands of services – both things you can see and things behind the scenes – are in Google Cloud Platform, but this is very hard to package into anything that looks smaller than a region. We actually do private regions, but those are for very large customers, and that offering misses a lot of the market, which can’t afford a private cluster on Cloud Platform.

So the Cloud Services Platform approach is a much better fit for all of the other enterprise use cases. It has a smaller API surface – you don’t get Spanner, you don’t get BigQuery, and so on – but you do get all of the basics in a way that is highly comparable to the Kubernetes service on Cloud Platform.

TPM: Is there live migration between the public Google Cloud Platform and the on premises Cloud Services Platform? Is this something that is being baked into Kubernetes itself?

Urs Hölzle: Kubernetes basically says, “That’s not my job.” That is the job of the underlying platform that manages the virtual machines and other stuff where Kubernetes runs, and if that platform supports it, customers can implement it. Kubernetes does not try to be OpenStack or OpenShift, which start at the hardware, provision it, and deal with broken stuff. This layering is what has made Kubernetes successful, because it is not opinionated at that low level. You can put it on VMware or OpenShift or IBM mainframes or whatever.

TPM: Do you have any recommendation for the on premises setup for Cloud Services Platform? Customers could, I presume, do bare metal, and I know that with Cloud Platform itself, Google lays down a container, then a KVM hypervisor and a virtual machine, then a container inside of those virtual machines to create its instances. Do you advise enterprises to follow what you do?

Urs Hölzle: One of the strengths of Kubernetes is that it is cross platform, so we don’t want to be subsetting it too strongly. There are many ways to successfully run Kubernetes. What we care about is that when you deploy and run Kubernetes applications, you have a comparable experience across different platforms. So there are some constraints – if it is too slow, it won’t work – but other than that, we want to allow innovation to happen in the underlying infrastructure in the way that other people think fits.

Also, we don’t want to force companies to buy new hardware just to get this experience. On most reasonably modern servers and networks, you will have good enough performance to be viable. Remember, this is not targeted at HPC, but at the typical enterprise application, where management is the majority of the cost of that application, security is not very good, and you don’t have good deployment tools. We are addressing all of that, and most of the early adopter customers did not have big issues with the lower level hardware.

TPM: Is Cloud Services Platform really an onboarding mechanism, or do you expect for companies to really run in a hybrid mode?

Urs Hölzle: First of all, transitions take a while to happen, for one thing for economic reasons if customers have just invested in servers and storage. And there are places where companies have controllers in their factories and so forth where they need to be within a millisecond or less of the gear they are controlling. So hybrid is unavoidable in that scenario.

But Cloud Services Platform is really not just about that. The problem that we are solving – and that we hear about very strongly from customers – is that as they follow a multi-year path from on premises to cloud, or maybe decide to stay hybrid longer term, they do not want to have N different environments where all of the basic things are different. Just trivial things like what is a service, how do I define authentication, how do I find the service, whatever. Today, every cloud and every on premises environment has a different answer. And it is different, but it is not differentiated. Those are not the same thing.

So, take a hypothetical Linux application as an analogy. Suppose you run AWS Linux, and its process API and file system API are different from those of Google Linux. Then if you want to move your Linux VM from AWS to GCP, you have to port your application. That’s crazy, because there is no innovation in the process and file system interface. It should be the same. Yet at the higher level today, above Kubernetes, that is exactly what is happening. There are dozens of ways of doing everything. We have customers who need to stay on premises for a while, or maybe their regulators say they need two public clouds, and where they used to have one security problem, they now have three, because in each of these environments they have to implement their policies with different mechanisms. And they have a fourth problem, because there are gaps between the three environments that they need to cover.

And what happens for security happens for release tools, for load balancing, for everything. So that does not seem like progress to me. Kubernetes covers some of this, but the goal with Cloud Services Platform is to cover a lot more of it. It is standardization through open source software of that whole layer, and there will be one set of security, config, push policy, and other policies. There are differences between BigQuery and AWS Redshift, and obviously we can’t normalize for that, but we can do it for all of the basics and everyone trains and normalizes on that.
