It has been four years since Kirk Bresniker, HPE Fellow, vice president, and chief architect at Hewlett Packard Labs, stood before a crowd of journalists and analysts at the company’s Discover show and announced plans to create a new computing architecture that puts the focus on memory and will eventually use such technologies as silicon photonics and memristors. Bresniker stood with small plastic blocks that made up a 3D mock-up of The Machine, the memory-driven computing architecture that at the time existed mainly as a sketch on a whiteboard in his Palo Alto office.

Since then, as we at The Next Platform have highlighted over the past several years, Hewlett Packard Enterprise engineers have worked to create the hardware and software components that will be key to how this new architecture functions. They have created proofs of concept, worked with tech partners to develop and run test applications, and rolled out prototypes that were important steps in the process. Those prototypes underwhelmed some observers by using traditional technologies like DDR4 memory rather than something more exotic, but wowed others when one hit 160 TB of main memory last year. The project also has taken advantage of technology inherited through HPE’s $275 million acquisition of SGI in 2016, including the NUMAlink interconnect fabric.

The driver behind The Machine was a changing IT landscape in which increasingly large amounts of data were being generated – particularly at the edge – alongside such emerging compute-intensive tools as artificial intelligence (AI) and machine learning, all of which promised to overwhelm traditional datacenter architectures that wouldn’t be able to handle the demand.

Most of the work up to this point had been done within the confines of HPE. The company in 2016 launched an open source community page that was designed to give developers access to tools necessary to contribute to the code development for The Machine, but the development work, testing, and proofs of concept were happening inside HPE. At the vendor’s Discover show this week in Las Vegas, Sharad Singhal, director of software and applications for The Machine at Hewlett Packard Labs, said that dozens of applications were created, but in many ways they were “research toys.” Nothing had been done with real-life business applications that are critical to enterprise operations.

That is about to change. HPE is now reaching out to outside developers to help them create memory-driven computing applications and proofs of concept that gauge gains in software deployment and that can run in a sandbox environment housed in a high-end Superdome Flex, which includes the company’s Software-Defined Scalable Memory (SDSM) software and firmware and the NUMAlink fabric technology. Developers from customers will be able to work with experts inside HPE’s Pointnext services and consultancy arm and Hewlett Packard Labs to build and test applications for the memory-driven architecture. The sandbox will also act as a platform on which HPE can introduce other technologies developed as part of the initiative and test how they work.

At the same time, HPE is working with online travel site Travelport, the vendor’s first commercial customer for its memory-driven computing technologies, on installing a Superdome Flex in-memory system and rearchitecting algorithms using HPE’s memory-driven computing programming techniques.

Bresniker said at the show this week that The Machine project had reached the stage where it needed commercial applications from customers in the next step of its evolution.

“How do we take today’s software and run it more efficiently?” he said. “How do we then understand what new software principles will want to come out? We did this work in our labs. We did it with partnerships. But for those one-to-one relationships, I think the other key thing is, how are we going to scale that and get more people into this conversation?”

HPE is adopting a strategy of commercializing technologies developed through The Machine project to get them into the hands of customers while continuing toward the goal of a single architecture for a company’s entire enterprise environment, from the core datacenter through the edge and out to the cloud. Matt Minetola, executive vice president of technology and global CIO at Travelport, said the need to accelerate the speed at which the company can address a travel request, and to quickly analyze the huge amounts of data inundating the company, helped drive it to embrace HPE’s memory-driven vision.

“The ability to take that data and mine it, there’s no way we or you are ever going to be able to move that around,” Minetola said during a panel discussion. “The limiting factor when we looked at our ability to go to sub-second with all this becomes the inability to go to multiple places for multiple things, so that’s what got us passionate about the memory-driven compute concept.”

He also noted that one-tenth to two-tenths of a second can make the difference in whether the company’s offering pops up on the first page of deals pulled together through a request from a company like Kayak. Being at the top of the first page can mean a significant bump in revenue for the company; land on the second page, and no one will see it.

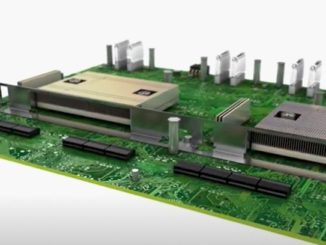

The Superdome Flex sandbox is powered by Intel’s future “Cascade Lake” Xeon processors, due later this year, and 3D XPoint DIMMs, which enable 96 TB per rack (12 TB of SDSM per chassis) that can be dynamically assigned to compute partitions. Bresniker said HPE engineers had been able to optimize the Superdome interconnect fabric to fit with the memory-driven computing vision when the company bought SGI and its NUMAlink technology. It was a significant step in how the project connects compute and memory devices.

“Rather than trying to block them up as a memory I/O read or write, I’ll say, ‘No, I want to have a load or store to my microprocessor register and I want it to go over the fabric so that every byte that’s in the system I can do an individual load of the register or a write from the register out to that memory,’” Bresniker explained. “What was different in the NUMAlink fabric that we did not have in the Superdome fabrics was that, in Superdome, we could take that big system and you could run that whole thing as one system. Every CPU could access every byte, but if you partitioned the system up into a couple of four sockets, some sixteen sockets and some eight sockets, each with its own copy of the operating system, the only way you could talk between those systems was to do it over Ethernet or over InfiniBand. That’s fine, but it’s microseconds, not nanoseconds. It’s also block-oriented. You have to move 4,096 bytes as a minimum, you can’t just move 32 bytes. Also, when you’re operating that, you’re doing reads and writes, you’re making file system calls, or you’re making open and close calls to the network stack. It takes you 24,000 kernel instructions to move one of those 4K blocks, as opposed to saying one instruction: ‘Load this register from this address.’”
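The contrast Bresniker draws between block-oriented transfers and byte-granular loads can be sketched roughly in Python. This is only an analogy on an ordinary local file, not HPE’s fabric or any HPE API: `block_read` stands in for block I/O that must fetch whole 4,096-byte blocks, while `mapped_read` uses the standard `mmap` module to stand in for load/store-style access. The helper names, the demo file, and the offsets are all hypothetical.

```python
import mmap
import os
import tempfile

BLOCK_SIZE = 4096  # minimum transfer unit cited in the quote


def make_demo_file():
    """Create a two-block (8,192-byte) scratch file; purely illustrative data."""
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.write(bytes(range(256)) * 32)
        return f.name


def block_read(path, offset, length):
    """Block-style access: fetch every whole block covering the range, then slice.

    Returns (data, bytes_requested_from_storage).
    """
    first = (offset // BLOCK_SIZE) * BLOCK_SIZE
    last = -(-(offset + length) // BLOCK_SIZE) * BLOCK_SIZE  # round up to a block
    with open(path, "rb") as f:
        f.seek(first)
        buf = f.read(last - first)
    start = offset - first
    return buf[start:start + length], len(buf)


def mapped_read(path, offset, length):
    """Load-style access: address only the bytes the program wants.

    Returns (data, bytes_requested_by_program). The OS still pages the file
    in behind the scenes, so this models the programming interface only,
    not the real cost on a memory fabric.
    """
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as m:
            return bytes(m[offset:offset + length]), length


if __name__ == "__main__":
    path = make_demo_file()
    try:
        _, moved_block = block_read(path, 5000, 32)
        _, moved_mapped = mapped_read(path, 5000, 32)
        print("block-oriented request:", moved_block, "bytes")
        print("byte-addressed request:", moved_mapped, "bytes")
    finally:
        os.remove(path)
```

Both functions return the same 32 bytes. The difference is how much the program has to ask for to get them, which is the gap the quote describes between moving a 4K block and issuing a single load.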

The NUMAlink fabric was different. “It brings the ability to partition that system up and still have that high-performance memory fabric communicate between the partitions,” Bresniker continues. “What’s important about that is now I can have the memory that’s on the fabric, so this operating system can address – because it’s an Intel CPU – the most it can address of physical memory is 64 TB. You try to add that next terabyte and there’s no address pins left. We can now assemble systems that are much larger than any one operating system can address, and this allows us to take all that memory and put it on the fabric and say, ‘You’re going to address this memory,’ and then you might slide over and say, ‘No, I want you to address this memory,’ and the memory is now independent. It’s memory-driven computing because the data in the memory has to be at the center of our thinking and we want to bring computation to the memory rather than sending data out to the computation. Once data’s in this memory, I don’t want to see it move again. I want to bring compute, address the memory, maybe change the state a little bit and then go away. Bring on this compute, address the memory and go away.”
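The arithmetic behind that 64 TB ceiling can be worked through in a few lines of Python. This is a back-of-the-envelope sketch that assumes “TB” here means binary terabytes (2^40 bytes); the function names are hypothetical and nothing in it reflects how SDSM actually maps memory.

```python
TIB = 2 ** 40  # assumption: the article's "TB" is treated as a binary terabyte


def address_bits(capacity_bytes):
    """Smallest number of address bits that can name every byte of the capacity."""
    return (capacity_bytes - 1).bit_length()


def windows_needed(pool_bytes, window_bytes):
    """How many windows of window_bytes it takes to cover the pool (ceiling division)."""
    return -(-pool_bytes // window_bytes)


os_limit = 64 * TIB      # most one operating system instance can address, per the quote
rack_pool = 96 * TIB     # fabric-attached memory per rack, per the article
petabyte = 1024 * TIB    # the "what's it like at a petabyte" case

print("bits to address 64 TB:", address_bits(os_limit))
print("bits to address 96 TB:", address_bits(rack_pool))
print("64 TB windows to cover 96 TB:", windows_needed(rack_pool, os_limit))
print("64 TB windows to cover 1 PB:", windows_needed(petabyte, os_limit))
```

Under that assumption, 64 TB fills a 46-bit physical address space exactly, so a 96 TB pool needs a 47th bit that a single operating system image does not have. That is why a partition has to address one window of fabric memory and then “slide over” to another.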

The technology and SDSM represent “the change in the operation, so this is a stock system with a different flavor of firmware in the routing of Flex fabric chips,” Bresniker said. “It’s slightly customized, but that really allows us to then transcend the limits of this particular generation of CPU and to have larger and larger memory, and then get some more interesting memories, persistent memories, and we can just drop them into the system. It’s at 96 TB. What’s it like when it’s at 100 TB, what’s it like when it’s at a petabyte of memory? These guys might still be addressing only 96 TB, but I want to have all the memory on the fabric.”

This is not, of course, the only way that The Machine has been prototyped and this is not the only route to market for the technologies – particularly the photonics-based memory fabric – that were created under this project. We have long suspected that HPE wants to get into the exascale HPC game with The Machine, which now has glommed onto machine learning. That 40-node prototype of The Machine unveiled a year ago was based on ThunderX2 processors from Cavium, specifically using HPE’s “Comanche” server cards for what was to become the Apollo 70 line. (We did a deep dive on prior iterations of The Machine here.)

Mike Vildibill, vice president of the Advanced Technology Group at HPE, says that his group, together with those working on the PathForward exascale projects funded by the US Department of Energy, has over a hundred engineers working on exascale projects, and this includes optical interconnects such as those used in The Machine. Vildibill cannot say anything about how pieces of the architecture of The Machine might comprise a third option for an exascale system, with future IBM Power-Nvidia Tesla hybrid follow-ons to the “Summit” and “Sierra” systems installed at Oak Ridge National Laboratory and Lawrence Livermore National Laboratory, respectively, and whatever Intel is working on for the “Aurora A21” system at Argonne National Laboratory being the other two likely choices. All that Vildibill can confirm is that HPE is actively developing exascale systems. “We’re in the game. There is no doubt,” he says.

We never doubted it for a minute. HPE wants an architecture that it can own. That’s where the revenues and the profits are, after all.
