When Hewlett Packard Enterprise bought supercomputer maker SGI back in August 2016 for $275 million, it had already invested years in creating its own “DragonHawk” chipset to build big memory Superdome X systems that were to be the follow-ons to its PA-RISC and Itanium Superdome systems. The Superdome X machines did not support HPE’s own VMS or HP-UX operating systems, but venerable Tandem NonStop fault tolerant distributed database platform was put on the road to Intel’s Xeon processors four years ago.

Now, HPE is making another leap, as we suspected it would, and anointing the SGI UV-300 platform as its scale up Xeon platform with the launch of the Superdome Flex. This new system, which includes a new NUMAlink 8 interconnect – although HPE is not calling it that – mashed up with some of the firmware, memory sparing, and error correction technologies in the Superdome X systems. These memory technologies could not come at a better time, with Intel charging a big premium on fat memory configurations in the “Skylake” Xeon SP product line and memory prices up by a factor of 2X to 3X compared to this time last year. (More on this in a moment.)

The DragonHawk systems took the basic design of the Superdome Itanium blade servers, which supported up to 16 sockets and up to 48 TB of main memory in a single system image, and replaced the Itaniums with Intel’s Xeon E7 processors. Specifically, the machines supported the “Haswell” Xeon E7 v3 and “Broadwell” Xeon E7 v4 processors, which had the extra QuickPath Interconnect (QPI) scalability ports that allowed an interface to an external chipset node controller like the DragonHawk or even SGI’s NUMAlink series. Under normal circumstances, if HPE had not bought SGI, it might have invested in expanding the DragonHawk chipsets to 32 sockets and the maximum of 64 TB of main memory that the 48-bit physical addressing that the Xeon processors support; this cap is in effect even on the Skylake Xeon SP chips, which is why you don’t see memory footprints expanding. But Intel is expected to extend the physical addressing, perhaps to 52 bits out of a maximum of 64 bits on the Intel architecture, with the follow-on “Cascade Lake” chips in 2018 and the “Ice Lake” chips in 2019.

Rather than invest in that chipset development, HPE decided, we think wisely, to make use of the “Ultra Violet” NUMAlink chipset family and adopt what used to be called the UV 300 platform as its main scale-up NUMA system. HPE was already reselling the UV 300, filling in a gap between the four-socket Xeon E5 ProLiant DL580 systems and the low end of the Superdome X by rebadging and eight-socket, two-node UV 300 as the MC990 X. The issue with the SGI UV line that held it back a little is that the systems were not certified to run Microsoft’s Windows Server 2016, which both the four-socket ProLiant DL580 and the Superdome X could support. All three machines can, of course, support Linux, which is perfectly understandable given the HPC heritage that SGI has. SGI has its own HPC-tuned variant of SUSE Linux Enterprise Server, but the MC990 X was certified by HPE to also run Red Hat Enterprise Linux 6 and 7 and Oracle Linux 7.

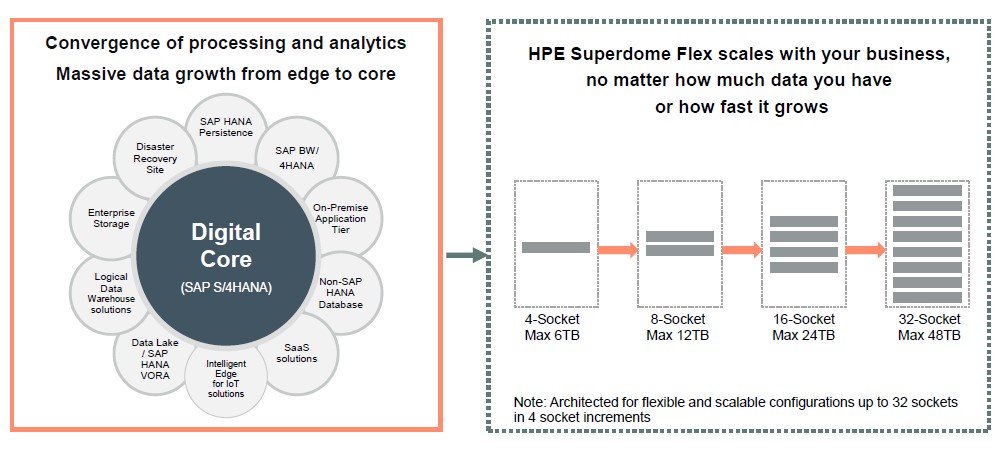

At the time when HPE decided to resell a UV 300 with a quarter of its full complement of 32 sockets and 64 TB of memory as the MC990 X, the Superdome X machines had been shipping for almost two years and about half of the revenues for the product were driven by SAP HANA in-memory applications, and another large portion were for customers that were switching from parallel Oracle RAC clustered databases running on Exadata machinery from Oracle to more traditional single-instance databases running on NUMA iron, which are easier to manage – even Oracle says so.

The Superdome Flex systems based on the Skylake Xeon SP processors and the NUMAlink 8 interconnect are not using ProLiant DL580s as their processing nodes – the motherboard and system has to be rejiggered to allow for the NUMAlink interconnect, and Jeff Kyle, director of product management for enterprise servers at HPE, hints to The Next Platform that it makes sense that, in the long run, that the machine should be based on a ProLiant node that is more of a commodity. But this time around, it is based on an SGI node that was already under design for both Skylake and NUMAlink 8. As with the UV 300 machines that SGI shipped, the Superdome Flex machines are configured in an all-to-all topology, so the distance between each node, in terms of hops across the interconnect, are the same and therefore the latencies for the NUMA are equal across the nodes. This yields a more consistent performance, which is necessary for database workloads. We don’t know what the latencies are for NUMAlink 8, but talking across NUMAlink 7 took about 500 nanoseconds, as we have previously reported, and a jump across the QPI interconnect on a single system board takes about 200 nanoseconds. Kyle didn’t give details, but said the new interconnect used in the Superdome Flex was even lower.

We suspect that the bandwidth is higher, too, with NUMAlink 8. The NUMAlink 6 interconnect, used in the SGI UV 2000 and UV 3000 systems that scale out to 256 sockets in a single image (but with an unequal number of hops between nodes) was able to hit that 64 TB peak across those sockets using an interconnect that delivered 6.7 GB/sec of bi-directional peak bandwidth; the core-to-memory ratio on these UV 2000 and UV 3000 machines was much higher, something some HPC applications need. With NUMAlink 7, which was used in the UV 3000 series, the latency was driven down and made consistent across a small number of sockets, topping out at 32 in a single image, and the interconnect had a bi-directional bandwidth of 14.9 GB/sec. It would be interesting to know how far SGI and HPE has pushed it with NUMAlink 8, but Kyle is not saying. NUMAlink 5, at 7.5 GB/sec in each direction, was actually more capacious than the NUMAlink 6 that replaced it. So you can’t assume it went up.

The Superdome Flex machine and its NUMAlink 8 interconnect are designed to scale to 32 sockets and up to 96 TB of addressable main memory in a single image, according to Kyle, but that is not going to be immediately available. The 5U Superdome Flex node has four Xeon SP processors and uses the extra UltraPath Interconnect (UPI) ports on the Platinum and Gold flavors of the Skylake chips to link to the NUMAlink 8 node controller. At the moment, HPE is selling machines with one or two nodes, with systems with four or eight nodes coming at the beginning of December, 16 nodes early in the new year, and 32 nodes in February. Support for Windows Server 2016 and the VMware server virtualization stack are also coming early next year – something that SGI was not able to get done itself.

The Superdome Flex system will top out at 48 TB of memory for now, with each chassis having 48 DDR4 memory slots and using 32 GB sticks providing 1.5 TB per enclosure. In theory, HPE could roll out support for 128 GB DDR4 memory sticks and populate the nodes, but Kyle says that 64 GB sticks cost more than twice as much as 32 GB sticks, and 128 GB sticks cost four times that of the 64 GB sticks. With memory prices already more than double, in-memory has suddenly got very expensive.

But the Superdome Flex, as it turns out, is a blessing in that HPE can pitch a box using the Xeon SP Gold chips in an eight-socket and larger system, which you cannot do with an eight-socket using Intel’s Platinum Xeon SPs. And if you want to be able to do 1.5 TB per socket to beef up the memory, you have to pay the Xeon SP M memory tax, which is pricey indeed. HPE has another plan of attack for customers that need a large memory footprint: Add more nodes, stick to the Gold Xeon SP variants, and stick with 64 GB memory.

Another big difference with the Superdome Flex is that it now has the memory protection that the Superdome X offered, which is important for big database and in-memory systems where memory cannot go down. The firmware that HPE has moved from Superdome X to Superdome Flex is “double data correction plus one” allows for the same level of memory availability as if the memory was physically mirrored without the mirroring. You don’t have to spend twice as much.

“We are at systems with 384 DIMMs, and memory makers will tell you that if you lose one DIMM per month that would be within spec,” says Kyle with a laugh. “But this is unacceptable, and our customers are not going to take downtime every month or year to replace memory.”

The Superdome Flex system is capable of supporting the following Skylake processors:

- Platinum 8180: 28 cores at 2.5 GHz with 38.5 MB L3 cache

- Platinum 8176: 28 cores at 2.1 GHz with 38.5 MB L3 cache

- Platinum 8156: 4 cores at 3.6 GHz with 16.5 MB L3 cache

- Platinum 8158: 12 cores at 3.0 GHz with 16.5 MB L3 cache

- Gold 6154: 18 cores at 3.0GHz with 24.75 MB L3 cache

- Gold 6132: 14 cores at 2.6 GHz with 19.25 MB L3 cache

Each Superdome Flex chassis has four Xeon SP sockets and 48 memory slots, and memory has to be added in groups of 12 DIMMs. At the moment 32 GB and 64 GB sticks are sold. In addition to the NUMAlink 8 ports, the chassis has two 10 Gb/sec Ethernet ports for talking to the outside world and four 2.5-inch drive bays for local storage. There are three variants of I/O on the nodes: one with no PCI-Express 3.0 slots and aimed solely at compute expansion; one with five x16 slots and seven x8 slots; and another with seven x16 slots and nine x8 slots. SLES 12, RHEL 7, and Oracle Linux 7 are currently supported.

Here is the front view of the Superdome Flex node:

And here is the rear view of the node with a 16-slot expansion chassis on top:

HPE did not provide pricing on the Superdome Flex, but Kyle gave some hints. Depending on the configuration, on a like-for-like setup, a four-socket Superdome Flex will cost somewhere between 10 percent and 20 percent more than a four-socket ProLiant DL580 with the same memory and compute. And on a socket-for-socket and memory-to-memory comparison, the Superdome Flex is somewhere between 40 percent and 50 percent cheaper than the Superdome X that HPE designed itself based on the Integrity line of blade servers. This is just a more efficient and less costly machine to build, and that is why it is essentially replacing the Superdome X. That said, Kyle doesn’t want enterprise customers thinking HPE won’t be selling Superdome X, MC990 X, or even UV 300 systems for many years to come. UV 300 will be replaced faster, but the MC990 X machine, based on cheaper “Broadwell” processors and having a larger 1.5 TB memory footprint without the Skylake memory tax will be useful for some customers. The Superdome X will be around for a few more years, too, as customers need it. But HPE is not going to certify newer chips on Superdome X, MC990 X, or UV 300 systems.

HPE will, however, be adding support for Nvidia “Volta” GPU accelerators to the Superdome Flex – just one more reason for HPC, machine learning, and database customers to move to the new iron.

I often wonder why people think adding more CPUs is a good way to counter the “Xeon SP M memory tax”?

That tax, at least according to Intel’s Recommended prices on ARK, is almost exactly $3000 per CPU.

That’s GOT to be cheaper than most of the Xeon SP chips out there, especially the higher tier Gold and Platinum chips.

To me, the problem is not the Xeon Tax itself, but the fact that, as you mentioned, the 128GB DIMMs you need to actually GET to 1.5T per Socket are so crazy expensive.

Thats where adding sockets vs M CPUs can help.