In a broad sense, the history of computing is a constant search for the ideal system architecture. Over the last few decades, system architects have shifted back and forth between centralized configurations, where computational resources are located far from the user, and distributed architectures, where processing resources are located closer to the individual user.

Early systems used a highly centralized model to deliver increased computational power and storage capabilities to users spread across the enterprise. During the 1980s and 1990s, those centralized architectures gave way to the rise of low cost PCs and the emergence of LANs and then the Internet for connectivity. In this new model, computational tasks were increasingly delegated to the individual PC.

That highly distributed architecture eventually evolved into mobility with the rise of laptop computers, tablets, and smartphones. But as computational demands increased, system architects began moving tasks to the cloud where they could take advantage of its virtually unlimited computational and storage resources, high reliability and low cost. So, in recent years, organizations have begun the move back toward a more centralized approach built around the cloud. Smartphones, for example, send everything back to the cloud to be processed and stored. When data is needed, it is then returned to the device.

As a result, the cloud is where businesses today perform high level computation and analysis. Companies use the cloud to run enterprise-wide applications like Oracle and then use PCs to interpret and analyze results. As companies adopt machine learning techniques and employ higher levels of artificial intelligence, it seems likely that computational resources on the cloud will play an increasingly pivotal role in every organization. Today, cloud computing offers formidable advantages. It allows organizations to streamline capital expenses and manage the operational and maintenance costs associated with an IT infrastructure. Every smart factory will need those resources to manage multiple machine vision systems and every smart city will need the cloud to coordinate traffic lights that manage traffic patterns and optimize the power efficiency of thousands of streetlights.

AI On The Edge

Not all applications will be run from the cloud, however. In fact, as designers add increasingly higher levels of intelligence to applications running at the edge, they will need to respond more quickly to changing environmental conditions. When an autonomous car enters a smart city, for example, it can’t wait to communicate with the cloud to ascertain whether to stop if the traffic light is red. It must take immediate action. Similarly, when a security system in a smart home detects movement within the house, it must rely on its on-device resources to detect whether that movement is a burglar breaking into the house or just the family dog.
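To put the latency argument in concrete terms, the short sketch below estimates how far a vehicle travels while it waits for a decision. The speed and both latency figures are hypothetical values chosen only for illustration; they are not measurements from any real system.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Return the metres travelled at constant speed while waiting latency_ms."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * (latency_ms / 1000.0)


# Hypothetical latencies, assumed purely for comparison.
scenarios = [("on-device decision", 10.0), ("cloud round trip", 200.0)]

for label, latency_ms in scenarios:
    metres = distance_during_latency(50.0, latency_ms)
    print(f"{label}: {metres:.2f} m travelled before the car can react")
```

Under these assumed numbers, a car moving at 50 km/h covers nearly 3 m during the cloud round trip, which is why the decision to stop has to be made on the vehicle itself.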

This need to make decisions independently and quickly will define a new class of edge-intelligent devices. Using technologies like voice or facial recognition, these new devices will be able to customize their function as the environment changes. And, by applying machine learning and AI technologies, these devices will be able to learn and alter their operation based on the data continually collected.

But how they work with the cloud will differ dramatically from intelligent systems that use the cloud exclusively for processing and storage. Machine learning typically involves two types of computing workloads. In training, the system learns a new capability from existing data. For example, a system learns facial recognition capabilities by collecting and analyzing images of thousands of faces. Accordingly, training is compute-intensive and requires hardware that can process large amounts of data. The second type of workload, inferencing, applies the system’s capabilities to new data by identifying patterns and performing tasks. This allows systems to learn over time and increase their intelligence the longer they operate. Systems operating on the edge can’t afford to perform inferencing on the cloud. Instead, they need to utilize on-board computational resources to continually expand their intelligence.
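The split between the two workloads can be illustrated with a deliberately tiny classifier. This is a minimal sketch, not how a production facial recognition system works: it uses a nearest-centroid model, and the feature values and labels are made up for the example. The `train` step is the part that would normally run on data center hardware, while `infer` is the lightweight step an edge device would run on each new observation.

```python
from collections import defaultdict


def train(samples):
    """Training: learn one centroid per label from labelled feature vectors."""
    sums = {}
    counts = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def infer(model, features):
    """Inference: assign a new, unlabelled vector to the closest centroid."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(model, key=lambda label: squared_distance(model[label]))


# Made-up two-feature observations, e.g. from a home security sensor.
training_data = [
    ([0.9, 0.8], "person"), ([0.8, 0.9], "person"),
    ([0.3, 0.2], "dog"), ([0.2, 0.3], "dog"),
]

model = train(training_data)          # done once, offline
print(infer(model, [0.85, 0.75]))     # done repeatedly, on the device
```

Only the small `model` dictionary has to be shipped to the device; the training data itself can stay in the data center.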

The scope of this market opportunity is difficult to overstate. Potential applications range from consumer applications like intelligent TVs that can sense when a user leaves the room and automatically turn off, to the next generation of machine vision solutions targeted at the smart factories of the future. Clearly one of the hottest markets for edge computing solutions lies in the automotive industry. The rapid evolution of the auto from a primarily mechanical device to an increasingly electronic platform has driven that transformation. That process began with the rapid evolution of entertainment systems from radios and tape decks to highly sophisticated infotainment systems. It has continued with the emergence of Advanced Driver Assistance Systems (ADAS) designed to increase safety. Analysts at Research and Markets.com, for example, now forecast the ADAS market will grow at a CAGR of 10.44 percent between 2016 and 2021.

Recent advances in the mobile market have helped accelerate this change. Smartphones brought new capabilities and applications into the car, and the development of mobile processors and standardized MIPI interfaces has helped drive down the cost of integrating these capabilities into the automobile. Today, sophisticated auto entertainment systems offer both information and entertainment, while ADAS solutions bring an extensive array of safety functions including automatic braking, lane detection, blind spot detection and automatic parallel parking.

To make this possible, today’s autos integrate a growing array of vision systems to monitor both the driver inside the car and the road conditions outside it. Embedded vision systems in the cabin track the driver’s head and body movement for indications of sleepiness or distraction, while an expanding number of external cameras support automatic park and reverse assist, blind spot detection, traffic sign monitoring and collision avoidance. New applications like lane departure warning systems combine video input with lane detection algorithms to establish the position of the car on the road. As a result, auto manufacturers are now integrating many cameras in each vehicle. To build out these new ADAS capabilities, designers anticipate they will require forward-facing cameras for emergency braking, lane detection, pedestrian detection and traffic sign recognition, and side- and rear-facing cameras to support blind spot detection, parking assist and cross-traffic alert functions.

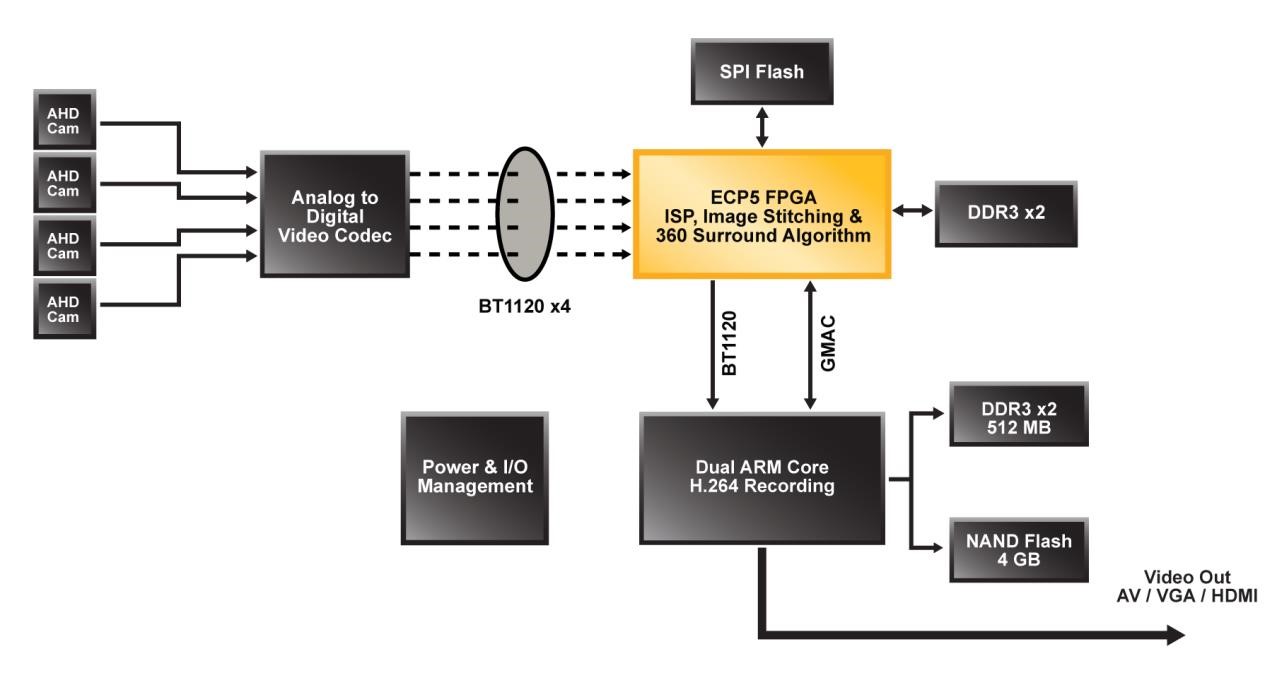

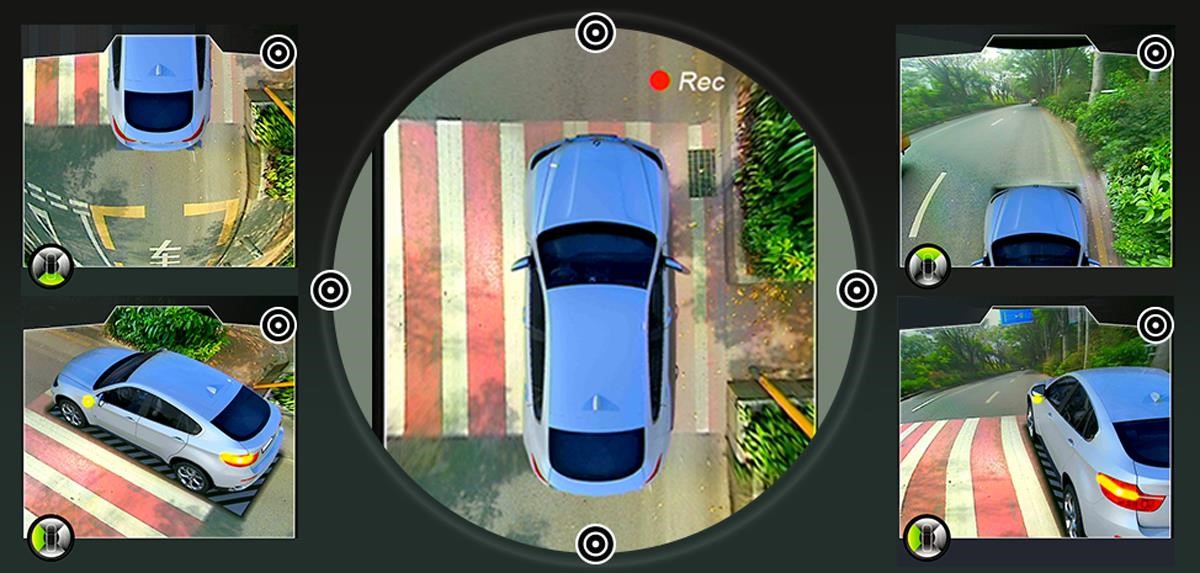

One limitation auto manufacturers face as they build these systems is limited I/O. Typically, processors today feature two camera interfaces. Yet many ADAS systems require as many as eight cameras to meet image quality requirements. Ideally designers could use a solution that gives them the co-processing resources to stitch together multiple video streams from multiple cameras or perform various image processing functions on the camera inputs before passing the data to the application processor in a single stream. In fact, one common feature in most ADAS solutions is a bird’s-eye-view function that collects data from multiple cameras and gives the driver a live video view from 20 feet above the car.
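The aggregation idea described above, combining several camera inputs into one stream before it reaches the application processor, can be sketched in software. This is only an illustration under simplifying assumptions: it tiles equally sized frames into a grid, whereas a real bird’s-eye view also requires lens correction, geometric warping and blending, and in practice this logic would be implemented in hardware rather than in Python. The function name `tile_frames` and the toy frame data are hypothetical.

```python
def tile_frames(frames, columns):
    """Combine equally sized frames into one composite frame (row-major grid)."""
    if not frames or len(frames) % columns != 0:
        raise ValueError("frame count must be a non-zero multiple of columns")
    height = len(frames[0])
    width = len(frames[0][0])
    for frame in frames:
        if len(frame) != height or any(len(row) != width for row in frame):
            raise ValueError("all frames must share the same dimensions")

    composite = []
    for start in range(0, len(frames), columns):
        group = frames[start:start + columns]
        for y in range(height):
            row = []
            for frame in group:
                row.extend(frame[y])   # place this camera's scanline side by side
            composite.append(row)
    return composite


# Eight toy 2x3 "frames"; every pixel stores its camera index for easy inspection.
cameras = [[[cam] * 3 for _ in range(2)] for cam in range(8)]

single_stream_frame = tile_frames(cameras, columns=4)
print(len(single_stream_frame), "rows x", len(single_stream_frame[0]), "pixels")
```

The downstream processor then sees one larger frame on a single interface instead of eight separate inputs.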

Historically, designers have used a single processor to drive each display. Now designers can use a single FPGA to replace multiple processors: it can aggregate the incoming data from each camera, stitch the images together, perform pre- and post-processing, and send the resulting image to the system processor.

Together, these new vision and sensor capabilities are laying the foundation for the introduction of autonomous cars. Cadillac, for example, is introducing Super Cruise this year, one of the industry’s first hands-free driving applications. This new technology promises to improve driver safety by continuously analyzing both the driver and the road, while a precision Lidar database provides details of the road and advanced cameras, sensors and GPS react in real time to dynamic road conditions.

Smart Factories

In the industrial arena, AI and edge applications promise to play an increasingly pivotal role in the development of the smart factory. Driven by adoption of the Industry 4.0 model first proposed in 2011, the coming generation of smart factories will integrate advanced robotics, machine learning, software-as-a-service and the Industrial Internet of Things (IIoT) to improve organization and maximize productivity.

While Industry 1.0 marked the introduction of water- and steam-powered machinery to manufacturing, Industry 2.0 reflected the movement to electrically powered mass production techniques, and Industry 3.0 was defined by manufacturers’ integration of computers and automation. The coming Industry 4.0 model will introduce cyber-physical systems to manufacturing that will monitor the physical processes in a smart factory and, using AI resources, make decentralized decisions. This evolution will drive the digital transformation of the industry by introducing components such as big data and analytics, the convergence of IT and IoT, the latest advances in robotics and the evolution of the digital supply chain. Moreover, by continually communicating with each other and with human operators, these physical systems will become part of the IIoT.

How will a smart Industry 4.0 plant differ from current factories? First, it will offer near-universal interoperability and a higher level of communication between machines, devices, sensors and people. Second, it will place a high priority on informational transparency, in which systems create a virtual copy of the physical world by using sensor data to contextualize information. Third, decision making in the smart factory will be highly decentralized, allowing cyber-physical systems to operate as autonomously as possible. Finally, this new evolution of the factory will feature a high level of technical assistance, in which systems will be able to help each other solve problems, make decisions and help humans with tasks that may be highly difficult or dangerous.

Distinct Needs

What do designers need to bring these new AI capabilities to the edge? Traditionally, designers employing deep learning techniques in the data center rely heavily on high performance GPUs to meet demanding computational requirements. Designers bringing AI to the edge don’t have that luxury. They need computationally efficient systems that can meet accuracy targets while complying with the strict power and footprint limitations typically found in the consumer market.

Whether developers are building security systems for the smart home, automated lighting systems for smart cities, autonomous driving solutions for the next generation of automobiles or intelligent vision systems for smart factories, they need cost-effective compute engines capable of processing HD digital video streams in real-time. They also need high capacity solid state storage, smart cameras or sensors and advanced analytic algorithms.

Typically, processors in these systems must perform a wide range of tasks, from image acquisition and lens correction to image processing and segmentation. To meet these requirements, designers can choose from a wide range of processor types, including microcontrollers, Graphics Processing Units (GPUs), Digital Signal Processors (DSPs), Field Programmable Gate Arrays (FPGAs) and Application Specific Standard Products (ASSPs). Each processor architecture offers its own distinct advantages and disadvantages. In some cases, designers combine multiple processor types into a heterogeneous computing environment. In other situations, they may integrate multiple processor types into a single device.

Demand is growing for solutions that leverage the application support and manufacturing scale associated with mobile processors and MIPI-compliant sensors and displays, and that employ AI, machine learning and neural networks to enable intelligence at the edge. Training a neural network for applications such as image recognition can require terabytes of data and exaflops of computational power to develop a data structure and assign weights. So it isn’t surprising that machine learning for those applications typically occurs in the data center.

Once the model is trained and ported to an embedded system, the device must make decisions more quickly and efficiently. In most cases designers require a solution that combines computational efficiency with low power and a small footprint.

There is widespread recognition within the industry that machine learning requires highly specialized hardware acceleration. But requirements vary widely depending upon the task. For instance, designers of hardware for training applications focus on high levels of computation with high levels of accuracy using 32-bit floating point operations. At the network edge, designers performing inferencing prefer the flexibility to sacrifice precision for greater processing speed or lower power consumption. In some cases, applications using fixed-point computations can deliver nearly identical inference accuracy to floating-point applications while consuming less power.
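The trade-off between precision and efficiency can be seen in a single multiply-accumulate, the core operation of a neural network layer. The sketch below is a minimal illustration, assuming simple symmetric 8-bit quantization with one scale factor per vector; the weights and inputs are made-up values, and real inference toolchains use more elaborate schemes.

```python
def quantize(values, scale):
    """Map floats to signed 8-bit integers using a shared scale factor."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def float_dot(weights, inputs):
    """Reference result using floating-point multiply-accumulate."""
    return sum(w * x for w, x in zip(weights, inputs))


def fixed_dot(q_weights, q_inputs, w_scale, x_scale):
    """Integer multiply-accumulate, rescaled to a real value once at the end."""
    accumulator = sum(qw * qx for qw, qx in zip(q_weights, q_inputs))
    return accumulator * w_scale * x_scale


# Made-up weights and inputs for a single neuron.
weights = [0.42, -0.17, 0.88, -0.63]
inputs = [0.50, 0.25, -0.75, 1.00]

# One scale per vector so the largest magnitude maps to 127.
w_scale = max(abs(w) for w in weights) / 127
x_scale = max(abs(x) for x in inputs) / 127

exact = float_dot(weights, inputs)
approx = fixed_dot(
    quantize(weights, w_scale), quantize(inputs, x_scale), w_scale, x_scale
)
print(f"float: {exact:.4f}  fixed: {approx:.4f}  error: {abs(exact - approx):.4f}")
```

All of the arithmetic inside `fixed_dot` except the final rescale is done on small integers, which is the kind of work that maps well onto compact, low-power hardware multipliers.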

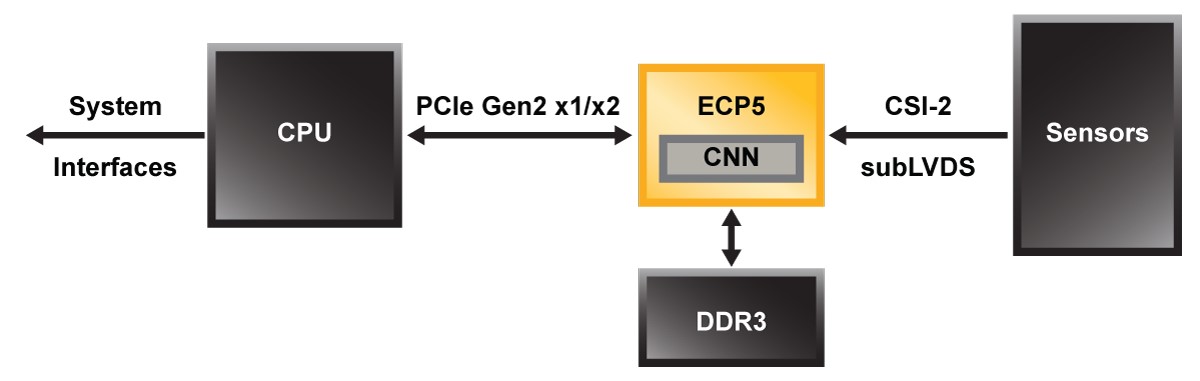

In some cases, processors that can support that type of design flexibility offer distinct advantages in these types of applications. FPGAs, for example, combine extensive embedded DSP resources and a highly parallel architecture with a competitive advantage in terms of power, footprint and cost. The DSP blocks in Lattice Semiconductor’s ECP5 FPGAs can compute fixed-point math at less power/MHz than GPUs using floating-point math.

In the meantime, industry research on neural networks continues to advance. Recently, Lattice Semiconductor collaborated with a developer of high performance soft-core processors for embedded applications to develop a neural network-based inference solution for a facial detection application. The solution was implemented in fewer than 5,000 LUTs of an iCE40 5K FPGA. Using an open source RISC-V processor with custom accelerators, this setup significantly reduced power consumption while shortening response time.

Conclusion

Edge computing poses the next big challenge for developers of AI-based systems. As designers add higher levels of intelligence, demand will grow for solutions that can respond more quickly and accurately to constantly changing environmental conditions. Look for developers to employ a wide range of technologies to meet this emerging requirement.

Deepak Boppana is senior director of product and segment marketing at Lattice Semiconductor.
