Changing HPC workloads call for changes up and down the stack, and storage is no exception. Traditionally these workloads were dominated by large-file handling needs, but newer applications (OpenFOAM is a good example) bring small-file and mixed-workload requirements to the HPC environment, so storage approaches need to shift to meet them.

With these changing workload demands in mind, recall that the first part of our series on future directions in storage for enterprise HPC shops focused on the ways open source parallel file systems like Lustre fall short for users with business-critical needs. That was followed by another piece that looked at some of the drivers for commercial HPC and at why support matters more than ever in a time of increasing workload complexity.

To conclude the series, we look at how a company like Panasas, with a tuned, proprietary parallel file system, is translating both of those trends into hardware and software that help enterprise HPC move ahead in 2018 and beyond, even as HPC workloads continue to evolve and put pressure on I/O systems in unexpected ways.

Curtis Anderson, Senior Software Architect at Panasas, walked us through another evolution, one that started in 1999 with a parallel approach to standard NFS and leads to the present, when HPC demands, especially for commercial users, are more complex than ever. The company’s core differentiating technologies are pictured below, meshing together as one proprietary, fully supported stack that aims to make storage and I/O for HPC as plug-and-play as possible without sacrificing performance.

In addition to the PanFS parallel file system, a number of hardware and software elements define Panasas. Panasas has two hardware platforms: directors and hybrid storage. The directors sit in the control plane and manage metadata without user data passing through them. File system activity is orchestrated here, while reads and writes can occur in parallel between compute nodes and Panasas storage blades. We will go into more detail about these and the hybrid storage solution in a moment.
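To make the control-plane/data-plane split concrete, here is a minimal Python sketch, not Panasas code. A hypothetical `Director` only creates, looks up, and updates file layouts, while the client functions `write_file` and `read_file` stripe data round-robin across hypothetical `StorageBlade` objects and send the stripes concurrently. The class and function names, the 64 KiB default stripe unit, and round-robin placement are all illustrative assumptions, not details of PanFS or DirectFlow.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Layout:
    blades: list      # names of the blades holding this file's stripes
    stripe_unit: int  # bytes per stripe (hypothetical value)
    size: int = 0     # file size in bytes, recorded after a write


class Director:
    """Hypothetical metadata service: hands out layouts, never sees file data."""

    def __init__(self, blade_names, stripe_unit=64 * 1024):
        self._blade_names = list(blade_names)
        self._stripe_unit = stripe_unit
        self._layouts = {}

    def create(self, path):
        self._layouts[path] = Layout(self._blade_names, self._stripe_unit)
        return self._layouts[path]

    def lookup(self, path):
        return self._layouts[path]

    def set_size(self, path, size):
        self._layouts[path].size = size


class StorageBlade:
    """Hypothetical data-plane node storing stripes keyed by (path, index)."""

    def __init__(self):
        self.stripes = {}

    def put(self, key, data):
        self.stripes[key] = data

    def get(self, key):
        return self.stripes[key]


def write_file(director, blades, path, data):
    layout = director.create(path)                      # metadata step only
    unit = layout.stripe_unit
    chunks = [data[i:i + unit] for i in range(0, len(data), unit)]

    def send(item):
        idx, chunk = item
        name = layout.blades[idx % len(layout.blades)]  # round-robin striping
        blades[name].put((path, idx), chunk)            # client writes to the blade directly

    with ThreadPoolExecutor() as pool:
        list(pool.map(send, enumerate(chunks)))         # stripes sent concurrently
    director.set_size(path, len(data))                  # metadata update, no file data


def read_file(director, blades, path):
    layout = director.lookup(path)                      # metadata step only
    n = -(-layout.size // layout.stripe_unit)           # ceiling division

    def fetch(idx):
        name = layout.blades[idx % len(layout.blades)]
        return blades[name].get((path, idx))            # client reads from the blade directly

    with ThreadPoolExecutor() as pool:
        return b"".join(pool.map(fetch, range(n)))      # map preserves stripe order


if __name__ == "__main__":
    blades = {f"blade{i}": StorageBlade() for i in range(4)}
    director = Director(blades.keys(), stripe_unit=4)   # tiny stripes for the demo
    write_file(director, blades, "/scratch/run1.dat", b"hello parallel world")
    print(read_file(director, blades, "/scratch/run1.dat"))
```

In this sketch the director is consulted once per operation for metadata, and every byte of file data moves between the client and the blades, so adding blades adds data paths without routing more traffic through the director.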

Another key differentiator for Panasas is the DirectFlow protocol, which provides fast I/O with full POSIX compliance. This proprietary protocol runs inside each compute client and establishes the I/O relationship between the client and the PanFS storage operating environment for file reads and writes. Anderson says this yields higher performance than industry-standard protocols like NFS or SMB, since it sidesteps the load-balancing and bottlenecks those protocols run into when interfacing with other clustered file systems.

Most recently, Panasas has responded to the shifting demands of enterprise HPC environments with a new generation of its ActiveStor scale-out NAS offerings, which the company says can scale to 57 petabytes with 360 GB/s of bandwidth. The performance boost in this generation comes from a new approach to processing metadata, built on the ActiveStor Director 100 (ASD-100) control plane engine and the recently announced ActiveStor Hybrid 100 (ASH-100) storage appliance.

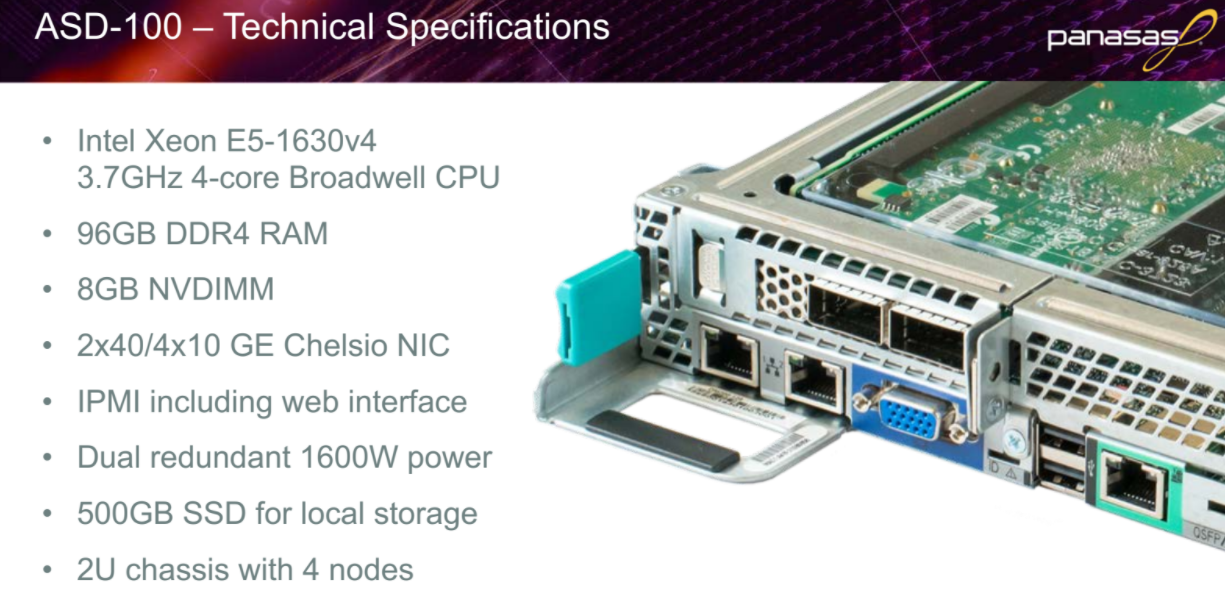

For the first time, Panasas is offering the Director, the brain of the Panasas storage system, as a disaggregated node (the ASD-100) for greater flexibility. Customers can now add as many ASD-100 nodes as they need to reach exactly the level of metadata performance they require. With double the raw CPU power and RAM capacity of previous Director Blades, Panasas says the ASD-100 delivers double the metadata performance on metadata-intensive workloads.

Based on industry-standard hardware, the ASD-100 manages metadata and the global namespace; it also acts as a gateway for standard data-access protocols such as NFS and SMB. The ASD-100 uses non-volatile dual in-line memory modules (NVDIMMs) to store metadata transaction logs, and Panasas is contributing its NVDIMM driver to the FreeBSD community.

Alongside the ASD-100, and also aimed at commercial HPC customers in particular, the ASH-100 is the first hardware platform to offer the highest-capacity HDDs (12 TB) and SSDs (1.9 TB) in a parallel hybrid storage system.

A broad range of HDD and SSD capacities can be paired as needed to meet specific workflow requirements. The ASH-100 can be configured with ASD-100s or can be delivered with integrated traditional ActiveStor Director Blades (DBs), depending on customer requirements.

Anderson says the ASH-100 is where this workload adaptability is most apparent. Customers can choose a pair of 4, 6, or up to 12 TB hard drives, then independently choose a single SSD ranging from 480 GB up to 1.9 TB. “If they have large file streaming workloads they will probably want 12 TB hard drives and maybe 480 or 960 GB SSDs because hard drives are actually quite good at bandwidth for large streaming files. It’s the small seeking operations that cut performance down. The ASH-100 and its flexibility with bigger flash means more choice—a choice between 2% and 24% of the total capacity being flash.”
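The 2% to 24% range can be checked with a little arithmetic. This Python sketch assumes each ASH-100 blade holds one pair of identical HDDs plus one SSD, using the drive sizes quoted above; the helper names are hypothetical. On that assumption, the quoted range matches SSD capacity measured against HDD capacity (480 GB against 2 × 12 TB is 2%, and 1.9 TB against 2 × 4 TB is about 24%), while measured against total raw capacity the same configurations span roughly 2% to 19%.

```python
from itertools import product

HDD_TB = (4, 6, 12)          # HDD sizes quoted in the article, in TB
SSD_TB = (0.48, 0.96, 1.9)   # SSD sizes: 480 GB, 960 GB, 1.9 TB
HDDS_PER_BLADE = 2           # assumption: one pair of HDDs per blade


def flash_vs_hdd(hdd_tb, ssd_tb, hdds=HDDS_PER_BLADE):
    """SSD capacity as a fraction of the blade's HDD capacity."""
    return ssd_tb / (hdds * hdd_tb)


def flash_vs_total(hdd_tb, ssd_tb, hdds=HDDS_PER_BLADE):
    """SSD capacity as a fraction of the blade's total raw capacity (HDD + SSD)."""
    return ssd_tb / (hdds * hdd_tb + ssd_tb)


if __name__ == "__main__":
    # Print the smallest and largest flash fraction over every HDD/SSD pairing.
    for name, fn in (("flash/HDD", flash_vs_hdd), ("flash/total", flash_vs_total)):
        values = [fn(h, s) for h, s in product(HDD_TB, SSD_TB)]
        print(f"{name}: {min(values):.1%} to {max(values):.1%}")
```

The extremes come from pairing the smallest SSD with the largest HDDs and vice versa, which is why intermediate drive sizes do not change the range.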

“The need to process, store, and analyze ever-larger amounts of information has intensified the pressure on IT departments to build compute and storage environments that meet these demands. NAS is the ideal choice for unstructured data—ranging in size from small text files to multiterabyte image and video files—because it is designed for application-based data sharing, ease of management, and support for multiple file access protocols.”

Anderson thinks the ASD-100 Director will be the foundation for changes the company makes to suit future workloads in HPC and demanding enterprise environments in the years ahead. “We will continue to be backwards and forwards compatible; we have headroom in the director to keep doing more.” He expects that in the near term Panasas will follow industry trends toward flash products, implement an object API in its storage, and keep adapting to mixed workloads from HPC users. For now, though, Panasas continues to carve a swift, consistent path from just below the uppermost tier of HPC through to commercial HPC shops of all sizes.
