While it is possible to reap at least some benefits from persistent memory without changing applications, the work to establish a real performance edge is getting underway now, with many of the OS and larger ecosystem players working together on new standards for existing codes.

Before we talk about some of the efforts to bring easier programming for persistent memory closer, it is useful to level-set about what it is, what it isn’t, how it works, and who will benefit in the near term. The most common point of confusion is that persistent memory is not necessarily about hardware, a fact that makes it harder to talk about than other emerging technologies. These devices already exist; what is different is how users interact with them and how that changes the role of traditional storage.

The technology used to make persistent memory can also be used for disks and DIMMs, and most products today are made out of DRAM; even the NVDIMMs available today are just DRAM with a NAND chip to hoard data. So the question isn’t about the device, it’s about how users program to it.

With that in mind, at its simplest, persistent memory speaks bytes while storage speaks blocks.

If the goal is to access a data structure out on a disk or an SSD, there is no choice, even if all that is needed is a single byte, but to page a whole block into DRAM, which on busy systems means booting out another DRAM resident. Persistent memory is not block-based and works in bytes, so to grab that single byte, a load instruction is issued and, without paging in from storage devices, the data is accessed directly where it lives for low-latency access that doesn’t kick items out of DRAM.
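The difference between the two access styles can be sketched in a few lines of Python. This is only an illustration under stated assumptions: an ordinary temporary file stands in for the device, the 4,096-byte `BLOCK_SIZE` is an assumed value, and the helper names are hypothetical. On a regular file the mapping is still served through the page cache, so the sketch shows the shape of the programming model, not the latency of real persistent memory.

```python
import mmap
import tempfile

BLOCK_SIZE = 4096  # assumed block size, for illustration only


def read_byte_via_block(f, offset: int) -> int:
    """Storage-style access: fetch the whole enclosing block, then pick one byte."""
    block_start = (offset // BLOCK_SIZE) * BLOCK_SIZE
    f.seek(block_start)
    block = f.read(BLOCK_SIZE)  # an entire block is copied into DRAM
    return block[offset - block_start]


def read_byte_via_mapping(mapping: mmap.mmap, offset: int) -> int:
    """Memory-style access: address the single byte directly."""
    return mapping[offset]


def demo():
    with tempfile.TemporaryFile() as f:
        # Fill three blocks with a repeating 0..255 pattern.
        f.write(bytes(i % 256 for i in range(3 * BLOCK_SIZE)))
        f.flush()
        offset = BLOCK_SIZE + 7
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapping:
            return (read_byte_via_block(f, offset),
                    read_byte_via_mapping(mapping, offset))
```

Both helpers return the same byte; the point is that the first has to move a full block to get it, while the second names the byte by its address.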

Intel’s head of persistent memory programming and member of the SNIA standards organization, Andy Rudoff, tells The Next Platform that it was harder than imagined to come up with a working definition of persistent memory. He defines it as being “so fast that you would reasonably just stall the CPU when trying to load a value from it,” something that would not happen with storage.

Rudoff says that the story around persistent memory may not resonate as well as other technology trends because it is not as easy to define as a new hardware device with defined performance, even if it marks a major shift for many organizations. He says that following the recent PM Summit, the conversations reminded him of the pre- and early multiprocessor days, when some application areas were way ahead of the curve in exploiting the new capabilities, but many at the beginning were happy to get the native pre-optimization speedups without modifying code. “Every application would run fine as single-threaded applications running on a machine with multiple CPUs. But to actually get use out of it, it meant working to modify codes to stay competitive.” This is just what is happening today, and it is starting in the database-driven enterprise datacenters first.

Those first in line to start prepping for persistent memory are the database folks. The speed over traditional storage for accessing data structures is a major improvement, even without modifying codes, but with optimizations for persistent memory, there are some very impressive recorded speedups. More than simply having faster access to larger data structures, the “warm cache effect” for massive terabyte-or-more in-memory databases is a vast improvement over rebooting and reading the whole database into memory, as with SAP HANA. With persistent memory, the whole database is at the ready, something SAP has talked about in the past using 3D XPoint.

With the database users in mind, there are others that can clearly make full use of persistent memory: think anyone with big data structures that are too large for memory but need to be accessed very quickly. This can include storage appliance builders who want to find a faster way to access massive dedupe tables for storage, which are far too large for DRAM, meaning they have to be paged in over time. Naturally, with persistent memory these can be made available without paging, marking a significant time advantage as well.

Direct memory access capabilities, especially remote DMA (RDMA), are a big deal for this database crew as well because it is now possible to pull a packet directly off the network into persistence. This means that rather than receiving into DRAM and then copying from DRAM out to the persistent memory, the persistent memory sits right on the memory bus and takes the data straight into the memory of the machine.

We already know that RDMA is a big deal in the big cluster world of HPC, but for the database folks in particular, this is also a more important advancement than it may seem. Since it is possible to access the memory on a remote system, it is like reaching across the entire system to talk to a remote disk without any code running, given the direct NIC-to-persistent-memory leap. A demo at Oracle OpenWorld showed this in action on the InfiniBand-based Exadata appliance. Exadata clients accessed the storage on an Exadata server without running any of the code; the CPU utilization stayed at zero because the clients knew right where to go for the data, something that has a performance ripple across these and, of course, HPC systems.

HPC is where the early adopters of almost everything are found, and it is an area where there is not much concern about modifying applications or building kernels to get maximum performance. Aside from the well-known set of RDMA to persistent memory use cases in HPC, there are also big data structures that run across massive clusters, all the while snapshotting for backup. The ability to write quickly to something that is persistent can have big value here, something we’ve already described in some of our work on the future of burst buffers.

So, with all of this in mind, what are some of the preparations that are being made by the larger standards body?

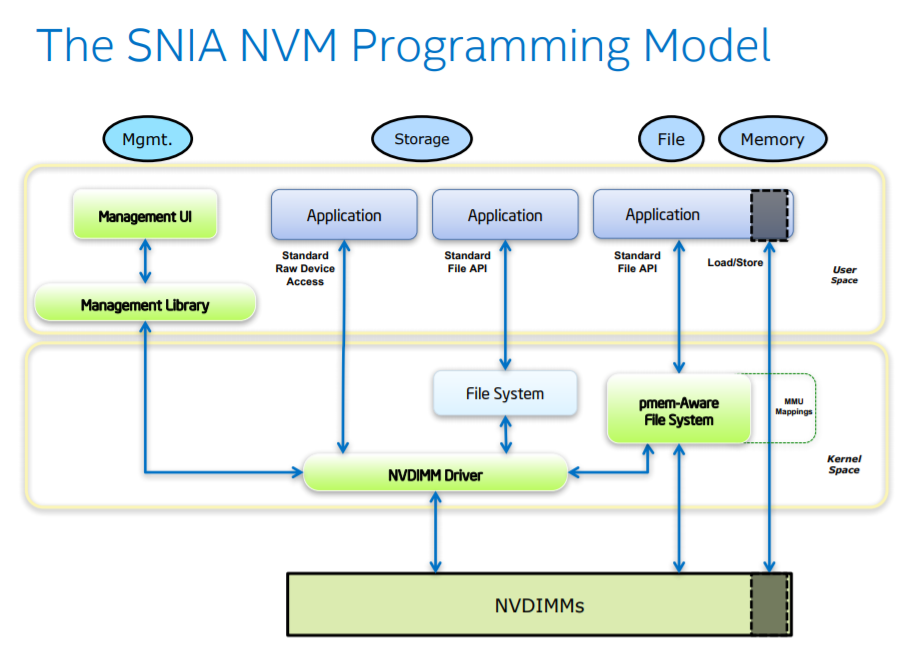

As Rudoff says, the group, which is co-led by Intel and HP but is vendor-neutral, has put all the plumbing in place to allow for direct access from user space via the programming model highlighted below.

(The NVDIMM is representative of any kind of persistent memory from any vendor.) When a system boots, it will notice the persistent memory and load the new driver or driver stack for it to provide pathways to use it. On the left side are elements that only matter for install (config, health, etc.), but in the middle is where the driver provides the common block interfaces. Unmodified applications and file systems will automagically run faster thanks to this. This “legacy mode,” where the storage APIs are converted into loads/stores, happens under the radar.
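To make the “legacy mode” idea concrete, here is a toy model of a block interface layered over byte-addressable memory. It is a sketch, not how any real driver is written: the class name, the 512-byte `BLOCK_SIZE`, and the use of a `bytearray` as a stand-in for the persistent region are all assumptions made for illustration. What it shows is that a caller still asks for whole blocks, while underneath each request is just a memory copy.

```python
BLOCK_SIZE = 512  # hypothetical block size for this toy model


class ByteAddressableBlockDevice:
    """Toy block device whose reads and writes are plain memory copies."""

    def __init__(self, num_blocks: int) -> None:
        # A bytearray stands in for the byte-addressable persistent region.
        self._mem = memoryview(bytearray(num_blocks * BLOCK_SIZE))
        self.num_blocks = num_blocks

    def _span(self, block_no: int) -> slice:
        # Translate a block number into a byte range within the region.
        if not 0 <= block_no < self.num_blocks:
            raise IndexError(f"block {block_no} out of range")
        start = block_no * BLOCK_SIZE
        return slice(start, start + BLOCK_SIZE)

    def write_block(self, block_no: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("block writes must be exactly BLOCK_SIZE bytes")
        self._mem[self._span(block_no)] = data  # stores, not an I/O request

    def read_block(self, block_no: int) -> bytes:
        return bytes(self._mem[self._span(block_no)])  # loads, not an I/O request
```

An unmodified caller would use `write_block` and `read_block` exactly as it would against a disk, which is why existing applications and file systems need no changes to benefit.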

For the real persistent memory preppers, however, look to the right. The persistent memory-aware file system makes the PM available through the standard file memory mapping interfaces. The OS vendors involved have named this—the applications can use this to do loads and stores directly to persistence without any involvement once the kernel has set it up.
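The memory-mapped path can be sketched with Python’s standard `mmap` module. This is a minimal illustration under stated assumptions: a temporary file on an ordinary file system stands in for a file on a persistent memory-aware file system, and the function names and the single-counter layout are hypothetical. On ordinary storage the flush goes through the page cache; on a real persistent memory-aware file system the same pattern would operate on the persistent media directly.

```python
import mmap
import os
import struct
import tempfile

REGION_SIZE = mmap.PAGESIZE  # one page is enough for this sketch


def bump_counter(path: str) -> int:
    """Map the file, increment a 64-bit counter in place, and flush it."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE) as region:
            (value,) = struct.unpack_from("<Q", region, 0)  # load from the mapping
            struct.pack_into("<Q", region, 0, value + 1)    # store into the mapping
            region.flush()  # ask the OS to make the store durable
            return value + 1


def demo() -> int:
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, bytes(REGION_SIZE))  # zero-filled backing file
        os.close(fd)
        bump_counter(path)         # first "run" of the application
        return bump_counter(path)  # second "run" sees the earlier store
    finally:
        os.remove(path)
```

The second call picks up where the first left off without any explicit read or write calls, which is a small-scale version of the warm-restart behavior the database vendors are after.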

Finally, there is a set of libraries to aid with persistent memory programming that Intel is developing in a standards-based, vendor-neutral way, and these have already been ported to ARM and other architectures. “We get better traction within the ecosystem if we do this without adding vendor lock-in or tying developments to a specific product.”

More details about current work to bring persistent memory closer to reach are available here.
