Sometimes it takes a bit of tweaking to make a technology that was created for supercomputing centers more suitable for high-end enterprise customers, even sophisticated ones that are not afraid to take risks and try something different. The level of scale that enterprises need for their analytical and transaction processing systems is not necessarily on the same order of magnitude as that required to run enormous simulations at HPC centers. But there is enough overlap that technologies created for HPC can be adapted for use in the enterprise.

This is precisely what SGI has done with its “UltraViolet” family of shared memory systems. From the looks of things, a product line that was revamped last fall with the addition of a new class of UV 300 series machines will go a long way toward helping the company extend into new commercial markets, as has been its goal for the past several years. Interestingly enough, to better address the needs of these commercial customers, SGI had to back off on the scalability of the top-end UV 2000 systems, which implement what is called non-uniform memory access, or NUMA, and create something that looks a bit more like a classic symmetric multiprocessing (SMP) system of days gone by.

If money had been no object, and if queuing theory and the speed of light did not impose physical barriers to scalability and latency, then large enterprises would still be running their applications on a monstrous, shared system that looked and behaved, as far as software is concerned, like a big, beefy single server. The reason this would be the case is simple to explain: The programming model is much simpler. Applications would run on this single machine and the operating system would take care of assigning compute, memory, and I/O resources and moving data between memory and persistent storage. SMP is a clustering approach that gangs up multiple processors on a single memory bus and gives them access to a shared block of main memory in which their applications run. SMP is difficult to implement because memory accesses have to be the same speed for all processors, a restriction that limits the number of processors that can be linked together in this fashion because of latency issues.

NUMA, on the other hand, assumes that processors have their own main memory assigned to them and linked directly to their sockets and that a high-speed interconnect of some kind glues multiple processor/memory complexes together into a single system with variable latencies between local and remote memory. This is true of all NUMA systems, from an entry two-socket server all the way up to a machine like the UV 2000, which has as many as 256 sockets and which is by far the most scalable shared memory machine on the market today. The UV 2000 system tops out at 64 TB of main memory, and that is a hard limit imposed by the last three generations of Intel Xeon processor cores, which have only 46 bits of physical memory addressing in their memory controllers. (Back when the UV 1000 machines debuted in 2010, the limit was 44 bits of physical addressing, which meant the machine topped out at 16 TB of main memory across its 256 processor sockets.)
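The relationship between physical address bits and the memory ceiling is simple powers-of-two arithmetic. The short sketch below (the function name is ours, purely for illustration) shows how 44 bits works out to 16 TB and 46 bits to 64 TB, and what a move to 48 bits would allow. It assumes a terabyte means 2^40 bytes.

```python
# Illustrative only: convert a count of physical address bits into the
# largest byte-addressable memory size, expressed in binary terabytes.
BYTES_PER_TB = 2 ** 40  # assumption: 1 TB = 2**40 bytes


def max_addressable_tb(address_bits):
    """Return the memory ceiling in TB for the given physical address width."""
    return (2 ** address_bits) // BYTES_PER_TB


if __name__ == "__main__":
    for bits in (44, 46, 48):
        print(f"{bits} address bits -> {max_addressable_tb(bits)} TB")
```

Every two extra address bits quadruple the ceiling, which is why the jump from 44 to 46 bits took the top-end machine from 16 TB to 64 TB.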

In the case of a very extendable system like the UV 2000, the NUMA memory latencies fall into low, medium, and high bands, Eng Lim Goh, chief technology officer at SGI, explains to The Next Platform, and that means customers have to be very aware of data placement in the distributed memory of the system if they hope to get good performance.

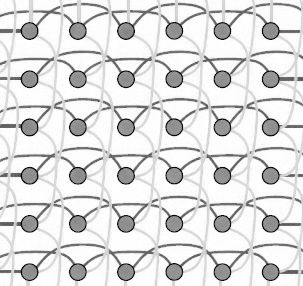

“With the UV 300, we changed to an all-to-all topology,” explains Goh. “This was based on usability feedback from commercial customers because unlike HPC customers, they do not want to spend too much time worrying about where data is coming from. With the UV 300, all groups of processors of four talking to any other groups of processors of four in the system will have the same latency because all of the groups are fully connected.”

SGI does not publicly divulge what the memory latencies are in the systems, but what Goh can say is that memory access between the nodes in the UV 300 is now uniform, unlike that in the UV 2000, and the latencies are a bit lower than those of the lowest, most local memory in the UV 2000 machine. The company was predicting something under 500 nanoseconds when it talked about the NUMAlink 7 interconnect last year, and to give a sense of just how impressive that is, SGI reminded everyone as the UV 300 was being developed that to get data out of a Xeon processor consumes around 100 nanoseconds and to use the QuickPath Interconnect (QPI) to access memory on an adjacent socket in a multi-socket Xeon system using Intel’s NUMA glue chips takes on the order of 200 nanoseconds.

Pushing that NUMAlink latency number down and getting it uniform is precisely what you would expect SGI’s engineers to do as they tailor a system that will initially be focused on running very large instances of SAP’s HANA in-memory database and eventually other kinds of commercial analytics jobs that are easier to program and run when the memory accesses are more uniform. And SGI is hoping to do even better in the future, by the way, with what Goh called “drastically lower” latencies on follow-ons to the UV 300s launched last fall.

“All of this is driven with the goal of increased usability because the customers want to feel that this big machine with 8, 16, or 32 sockets has consistent and low latency as if they were working on a two-socket or a four-socket system,” says Goh. “Although we cannot really get there, we are trying to get there as closely as possible.”

Adapting NUMAlink To Changing Workloads

SGI started out using NUMA clustering for machines running its own MIPS RISC processors and its companion Irix Unix operating system variant, and then with the Altix line it paired Intel’s Itanium processors with a version of SUSE Linux Enterprise Server that was modified to run on its hardware to speak NUMAlink and use the Message Passing Interface (MPI) parallel processing protocol for coordinating work on clusters, much as commodity machines do. With the UV 100 and UV 1000 machines that debuted in 2010, SGI moved from Itanium to Xeon processors from Intel and also added, among other things, an MPI Offload Engine to the NUMAlink ASIC. This offload engine, much like TCP/IP offload engines in Ethernet cards, takes some of the network overhead off of the CPU (in this case, overhead related to the MPI protocol) and puts it on the NUMAlink chip. This accelerates the MPI by running it in the network, closer to the data as it is flowing around the cluster, and also frees up CPU cycles so they can do more number crunching for simulations.

Each generation of UV machines using Xeon processors from Intel has been paired with a particular NUMAlink interconnect designed by SGI, which is one of the key technologies that differentiates it from other makers of high-end systems. The initial UltraViolet machines from five years ago used the NUMAlink 5 interconnect and they came in a UV 100 and a UV 1000 flavor, a bifurcation that SGI tried back then but has revamped a bit. Both machines lashed together Intel’s eight-core “Nehalem-EX” Xeon 7500 processors using two-socket blade servers. The UV 100 was a 3U blade enclosure that could deliver a maximum of 960 cores, 1,920 threads, and 12 TB of memory in 48 blades that ate up two racks of space. The NUMAlink 5 interconnect linked the two-socket nodes in the UV 100 machines in a 2D torus. For the UV 1000 machine, which was aimed at compute intensive workloads that also wanted a larger memory space than was available in conventional X86 servers, the 256 sockets in this larger system were connected in a fat tree topology, delivering a total of 2,560 cores and 16 TB in a single shared memory space. (Threads topped out at 4,096 because of the limits on the Linux kernel at the time.) SGI had a virtual memory addressing scheme built into the NUMAlink 5 ASIC that let it hook 128 of these fat tree configurations together to make a monster system with 32,768 sockets. (As far as we know, no one ever bought such a machine, but it is in the NUMAlink architecture.) The ten-core “Westmere-EX” Xeon E7 v1 processors were eventually added to the UV 100s and UV 1000s.

With the UV 2000 systems that came out in the summer of 2012, the interconnect topology changed with the NUMAlink 6 interconnect across the 256 sockets in the 1000-class machine, and SGI did a bunch of things to make the system more scalable and more affordable. This included adopting Intel’s “Sandy Bridge” Xeon E5-4600 processors, which were less expensive than the Xeon E7 chips. Incidentally, SGI did not have much of a choice because Intel canceled the Sandy Bridge-EX processor, opting instead to wait for the “Ivy Bridge-EX” Xeon E7 v2 that came out last year. The Xeon E5-4600 had enough cores and memory slots per socket that SGI could push its UV architecture up to the 64 TB limit of the physical addressing of those Sandy Bridge chips, so it didn’t really make that much of a difference in that regard. The UV 2000 delivered 2,048 cores and 4,096 threads for applications to run on, a decrease in core count, but memory capacity on the 1000-class system increased by a factor of four. The blades in the UV 2000 system are connected using a 3D enhanced hypercube topology inside of a rack, and a crossbar interconnect is added to this 3D enhanced hypercube to push it up to 256 sockets. The NUMAlink 6 hub controller is able to do the virtual memory trick up to 32,768 sockets, as was possible with the UV 1000 and its NUMAlink 5 interconnect. Perhaps more important than scalability, the NUMAlink 6 interconnect, at 6.7 GB/sec, delivered roughly 2.5 times the bi-section bandwidth of the NUMAlink 5 interconnect, keeping all of those cores and threads well fed.
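For readers who want to check the core and thread counts quoted for the different UV generations, here is a rough sketch of the arithmetic. The helper name is ours. The two-threads-per-core figure is inferred from the 960-core, 1,920-thread UV 100 numbers above, and the eight cores per socket for the UV 2000 is inferred from 2,048 cores across 256 sockets.

```python
# Illustrative arithmetic only; the per-socket figures are taken or inferred
# from the numbers quoted in the article, not from SGI specification sheets.
THREADS_PER_CORE = 2          # inferred: 960 cores -> 1,920 threads
KERNEL_THREAD_LIMIT = 4096    # Linux kernel limit cited for the UV 1000


def system_totals(sockets, cores_per_socket, thread_limit=None):
    """Return (cores, usable_threads) for a shared memory system."""
    cores = sockets * cores_per_socket
    threads = cores * THREADS_PER_CORE
    if thread_limit is not None:
        threads = min(threads, thread_limit)
    return cores, threads


if __name__ == "__main__":
    # UV 100: 48 two-socket blades with ten-core Westmere-EX chips
    print("UV 100 :", system_totals(48 * 2, 10))
    # UV 1000: 256 sockets, ten-core chips, threads capped by the kernel
    print("UV 1000:", system_totals(256, 10, KERNEL_THREAD_LIMIT))
    # UV 2000: 256 sockets, eight cores each (inferred)
    print("UV 2000:", system_totals(256, 8))
    # Virtual addressing trick: 128 fat trees of 256 sockets each
    print("Max sockets:", 128 * 256)
```

The UV 1000 line is the interesting one: 2,560 cores would expose 5,120 threads, but the kernel limit holds the usable count at 4,096.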

For the NUMAlink 7 interconnect used in the UV 300 memory-intensive machines, the bi-section bandwidth is 7.47 GB/sec, and the interconnect is configured to have the lowest latency that SGI offers in the UV line and to do all-to-all, SMP-style links between processors. The bandwidth needs to be higher because memory speeds are increasing as the server nodes are moving to faster DDR4 memory. So even if memory capacity on the Xeon chips cannot increase until Intel raises the physical memory addressing to 48 bits, a bandwidth boost might have been etched into NUMAlink 7. And by the way, there is a way, according to Goh, to change it to the hypercube topology used by the UV 2000 machine. “This is not something that we qualify, but it is technically possible,” says Goh.

The UV 300 is architected to support the current Xeon E7 v2 – specifically, the high-end E7-8800 series because they have a high number of QPI ports – but will also support the future “Haswell-EX” and “Broadwell-EX” Xeon E7 processors when they come out. As far as we know from looking at Broadwell desktop chips, Intel has not raised the physical addressing on the Xeon memory controllers to 48 bits (which would give 256 TB of physical addressing), and it is possible that this could happen with the “Skylake” tock coming after the Broadwell tick, to use Intel’s parlance.

So if you are looking for a strategic statement, the UV 1000 line – and by line, SGI means the past UV 1000, current UV 2000, and future UV 3000 as a family – will focus on processor and memory scalability in a coherent memory system, while the UV 100 line – meaning the current UV 300 and its follow-ons – will be focused on the commercial market and on the scientific market that would like more consistent latency and not have to worry about data placement as their applications run.

“It is not that the UV 300 is better than the UV 2000,” says Goh. “It is just that we are trading off scalability in the UV 2000 for the usability in the UV 300. When you do all-to-all in a UV 300, you give up something. That is why the UV 300 will max out at 32 sockets – no more. If the UV 300 has to go beyond 32 sockets – for instance, if SAP HANA makes us go there – we will have to end up with NUMA again because we don’t have enough ports on the processors to talk to all the sockets at the same time.”
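To see why an all-to-all design runs out of ports, it helps to count links. The sketch below is a generic illustration of a fully connected topology and is not a description of SGI's actual NUMAlink 7 wiring, whose port counts are not given here. It assumes each four-socket group is treated as one node, so that 32 sockets make up eight groups, and the function name is ours.

```python
# Generic full-mesh arithmetic, not SGI's actual NUMAlink 7 wiring.
SOCKETS_PER_GROUP = 4  # assumption: one node per four-socket group


def all_to_all_links(groups):
    """Return (links_per_group, total_links) for a fully connected mesh."""
    per_group = groups - 1              # one direct link to every other group
    total = groups * per_group // 2     # each link is shared by two groups
    return per_group, total


if __name__ == "__main__":
    for sockets in (8, 16, 32, 64):
        groups = sockets // SOCKETS_PER_GROUP
        per_group, total = all_to_all_links(groups)
        print(f"{sockets:>2} sockets: {groups:>2} groups, "
              f"{per_group:>2} links per group, {total:>3} links total")
```

Links per group grow linearly and total links grow quadratically, so doubling from 32 to 64 sockets would more than double the ports each group needs. That is the trade-off Goh describes.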

Goh says that for a lot of enterprise customers, 32 sockets is going to be more than enough if they have 24 TB or 48 TB of memory to play with. The 24 TB limit today with the UV 300 is for a system using 32 GB memory sticks, and if customers want to pay a premium for 64 GB memory sticks, they can double up the capacity to 48 TB.

The UV 300 does not just go after a new customer set that wants a higher memory-to-CPU ratio for in-memory processing like SAP HANA. It comes with different packaging to make it easier for customers to start small and grow their systems. With the UV 2000, a rack with 16 sockets was the smallest system that SGI customers could consume, but with the UV 300, the building block is a four-socket machine with 3 TB of memory that fits in a 5U enclosure. This is not much different from other four-socket Xeon E7 v2 machines. But SGI has the NUMAlink 7 interconnect on its blades, and you can add from one to seven more enclosures until you have built up a machine that has 32 sockets and 24 TB of addressable memory. You have to have 1, 2, 4, or 8 enclosures in the system, and to extend a machine you just cable it up and reboot it and it is larger. (The version of the UV 300 that is certified to run SAP HANA is called the UV 300H, and at the moment it is certified to support HANA on four-socket and eight-socket variants, with certifications coming for the larger configurations.)
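The building-block scaling can be summed up in a few lines. This sketch uses only the figures quoted above: four sockets and 3 TB per enclosure with 32 GB memory sticks, double that with 64 GB sticks, and 1, 2, 4, or 8 enclosures per system. The function and constant names are ours, for illustration.

```python
# Illustrative sizing helper based on the figures quoted in the article.
SOCKETS_PER_ENCLOSURE = 4
TB_PER_ENCLOSURE_AT_32GB = 3        # 3 TB per enclosure with 32 GB sticks
ALLOWED_ENCLOSURES = (1, 2, 4, 8)
ALLOWED_DIMM_GB = (32, 64)


def uv300_config(enclosures, dimm_gb=32):
    """Return (sockets, memory_tb) for a UV 300 built from N enclosures."""
    if enclosures not in ALLOWED_ENCLOSURES:
        raise ValueError("enclosure count must be 1, 2, 4, or 8")
    if dimm_gb not in ALLOWED_DIMM_GB:
        raise ValueError("memory stick size must be 32 or 64 GB")
    sockets = enclosures * SOCKETS_PER_ENCLOSURE
    memory_tb = enclosures * TB_PER_ENCLOSURE_AT_32GB * dimm_gb // 32
    return sockets, memory_tb


if __name__ == "__main__":
    for n in ALLOWED_ENCLOSURES:
        for dimm in ALLOWED_DIMM_GB:
            sockets, tb = uv300_config(n, dimm)
            print(f"{n} enclosure(s), {dimm} GB sticks: "
                  f"{sockets} sockets, {tb} TB")
```

The largest configuration comes out at 32 sockets with 24 TB or 48 TB, matching the ceilings Goh cites.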

The other big change with the UV 300, says Goh, is that the system has a lot more PCI-Express slots than does the UV 2000, and this is allowing SGI to flesh out the memory-intensive box with flash memory cards, like Intel’s P3700, as well as accelerators, such as Nvidia’s Tesla GPUs and Intel’s Xeon Phi coprocessors. The current design allows for a one-to-one pairing between sockets and accelerators, and in a flash-enabled machine, customers could push up to 100 TB of flash into the system and deliver a staggering 30 million I/O operations per second serving up data. (The SAP HANA setup is backed by NetApp E2700 disk arrays, not flash.)

At the moment, given the large installed base of SAP customers using its transactional and data warehousing software, SGI is focused on the transition in the SAP base to HANA in-memory processing. While many companies don’t mind the lag between transactional systems and analytical systems in their operations today, Goh says that about 20 percent of them do.

As SGI has said in the past, it reckons that somewhere between 5 percent and 10 percent of the HANA installed base is going to need a larger configuration than a standard Xeon E7 machine with four sockets. So this is the initial target for the UV 300 for now. But make no mistake: SGI is going to leverage NUMAlink 7 in a future UV 3000 system aimed at more traditional HPC applications and commercial applications where programmers can cope with the NUMA data placement issues.
