The US Department of Energy fiscal year 2018 budget request is in. While it reflects much of what we might expect in pre-approval format in terms of forthcoming supercomputers in particular, there are some elements that strike us as noteworthy.

In the just-released 2018 FY budget request from Advanced Scientific Computing Research (ASCR), page eight of the document states that “The Argonne Leadership Computing Facility will operate Mira (at 10 petaflops) and Theta (at 8.5 petaflops) for existing users, while turning focus to site preparations for deployment of an exascale system of novel architecture.”

Notice anything missing in this description?

The Aurora supercomputer, for which Intel is serving as prime contractor to showcase its future “Knights Hill” Xeon Phi processor, perhaps?

We have been hearing rumors as recently as today that the Aurora supercomputer is possibly not going to be delivered as expected, and when it is delivered, it might not be in the form planned. The omission of its name and delivery year from the budget request is not reassuring. Further, the emphasis on preparing for a novel architecture, rather than for Intel’s Knights Hill processor (which has hitherto not been considered novel), is causing us and others to wonder what is afoot.

To be fair, this could be a matter of wording: accidental omissions and such. We have our feelers out for more on this, but these rumors appear to be backed by the budget document.

Another section (page 17) states that “The ALCF upgrade project will shift toward an advanced architecture, particularly well-suited for machine learning applications capable of more than an exaflop performance when delivered. This will impact site preparations and requires significant new non-recurring engineering efforts with the vendor to develop features that meet ECI requirements and that are architecturally diverse from the OLCF system.”

The document also says, “Increased facilities funding initiates activities with the intention to deploy an exascale system at the ALCF in 2021 and to begin preparations at the OLCF for an exascale system that is architecturally distinct to follow in 2022.” In short, even if the plan for Aurora has changed, they will still be getting a supercomputer to meet exascale-class demands—just perhaps not the one we were all expecting.

The first thing we notice as it relates to the Aurora machine, other than the fact it isn’t mentioned, is the emphasis on a novel architecture as the focus for upcoming preparations, something that several other DoE and national lab documents are now suddenly calling an “advanced architecture” (for example, see slide 16 here, in this presentation from Argonne’s Paul Messina, who also talked to us about novel architectures when the exascale timeline was pushed to 2021 and “novel architectures” were set to be the basis for at least one of the exascale machines). This shift from “novel” to “advanced” could possibly be because there are no real options for truly novel architectures (quantum, neuromorphic, and so forth) that can be deployed reliably and at scale by 2021.

Other supercomputer initiatives appear to be on track, including Summit and the follow-on NERSC machine, NERSC-9. “The FY 2018 request supports the upgrade of the Oak Ridge Leadership Computing Facilities to 200 petaflops and early science operations of this powerful new capability,” the document states. This, of course, refers to the Summit supercomputer, which will be manufactured at the end of this year and installed next year. The funding plan for the NERSC-9 supercomputer, the architecture of which is not yet confirmed, also appears to be on track.

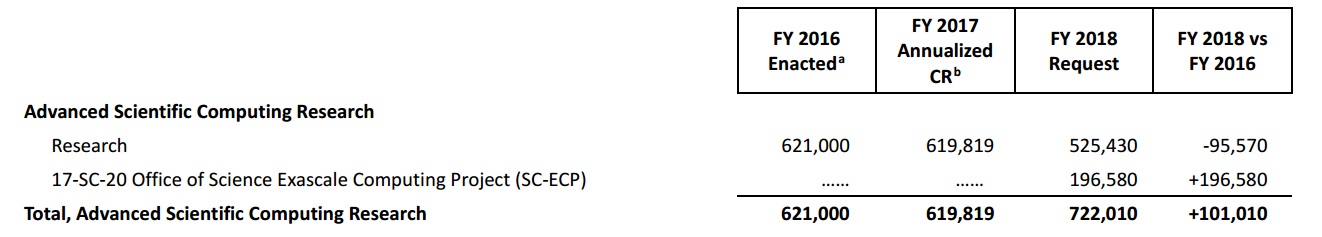

“The ASCR budget request of $722 million, is an increase of $101 million, or 16.3 percent, relative to the FY 2016 enacted level. The increase supports activities to accelerate the DoE’s Exascale Computing Initiative and intends to accelerate delivery of at least one exascale-capable system in 2021—reasserting U.S. leadership in this critical area.”
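The percentage in that passage can be checked from the two dollar figures it gives. The short sketch below assumes only those quoted numbers (a $722 million request and a $101 million increase); the function names are ours, chosen for illustration, and the FY 2016 base is derived here rather than taken from the budget document.

```python
def implied_base(request_musd: float, increase_musd: float) -> float:
    """Prior funding level implied by a request and its stated increase (in $ millions)."""
    return request_musd - increase_musd


def percent_increase(request_musd: float, increase_musd: float) -> float:
    """Increase expressed as a percentage of the implied prior level."""
    return 100.0 * increase_musd / implied_base(request_musd, increase_musd)


# Figures quoted from the ASCR budget request (in $ millions).
fy2018_request = 722.0
increase = 101.0

base = implied_base(fy2018_request, increase)
pct = percent_increase(fy2018_request, increase)

print(f"Implied FY 2016 enacted level: ${base:.0f} million")
print(f"Increase over that level: {pct:.1f} percent")
```

The implied FY 2016 level works out to $621 million, and the increase rounds to the 16.3 percent stated in the request.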

What is clear is that as we look at the future direction of supercomputing, machine learning is factoring in as an important driver for future architectural decisions. Architectures for these systems are decided on well in advance, and few could have predicted that HPC as we know it could be in for some major disruption from deep learning as part of the future simulation workflow. Much has already been made of the Summit supercomputer’s alignment with the goal of tying machine learning and HPC together in new ways, and its architecture, with Volta GPUs and Power9 processors, makes it a good fit for this future of workloads at large-scale HPC sites.

A few things are possible here with Aurora, and as you can imagine, we are following all the various threads through some conversations today. First, and perhaps most disruptive (not in the positive sense of the word, either) is that Intel might have hit more serious snags with the 10 nanometer Knights Hill processor. We talked about the various opportunities and challenges with this device with Intel fellow Alan Gara here, and it is worth revisiting with Intel on how this has shaken out in the last year in terms of workload match, manufacturability, and site interest. If there is any kind of delay or problem, this would be a big issue for other supercomputing sites that are building their own large-scale and pre-exascale plans around this architecture.

Of course, the other possibility, given the increased focus on integrating machine learning deeper into HPC, is that the truly novel architecture from Intel, an integrated part (Knights Crest) with the Nervana Systems deep learning IP baked in, is more attractive. However, there have been no firm dates attached to that product to tie in with supercomputer production cycles (this machine needs to be in production by 2021), and if we know anything, it’s that these architectures and frameworks are changing so fast that planning a leadership-class machine around such a device might produce a big boondoggle built on something that arrives too late to market to be relevant any longer.

It could also be that the novel architectures push has changed shape somehow. In other words, there may really be something viable on the “novel” front that Argonne wants to pursue. And perhaps the seemingly global find/replace of “novel” with “advanced” is just to make such a shift sound less dangerous from a budget perspective; after all, Rick Perry is visiting supercomputing sites this week and it wouldn’t do to make him jumpy by spending so many millions on something that sounds outlandish or untested (even if it would be, given the low maturity level of current “novel” architectures, including neuromorphic and quantum).

It could also be that Intel remains the prime contractor, things go according to plan at the high level (they are still delivering a near-exascale system in 2021) but with a revised architecture more suited to changing workloads. Or that Argonne, which has been a stable IBM national lab since the beginning, goes another route entirely. We will find out. But what we probably should stop doing just now is speculating.

The fact is, Aurora is not mentioned; the system that should be Aurora has moved to 2021; and the emphasis on “advanced architectures” and machine learning is strong, while the wording for the other labs is still focused on traditional scientific simulation. There is a powerful new Volta GPU on the market, one that is primed for both HPC and deep learning, and its follow-ons shouldn’t be discounted as labs assess their research and production priorities.

We’re on it. Expect more to be added to this story regularly.

Update, 5:25 Eastern: This from Rick Borchelt, Director of Communications and Public Affairs for the DoE, Office of Science: “On the record, Aurora contract is not cancelled. That’s all we are prepared to say at this time until we work further into budgetary details. We’re not in a position without further analysis to provide more concrete budgetary details.”

Also no sign of the Nvidia-based system, called Sierra, in the budget?

That’s because it’s not an Office of Science project, but I see your line of thinking.

Sierra is the NNSA-twin of Summit. The NNSA labs saw budget growth unlike the Office of Science labs which saw decreases in actual funding levels (not just decreases in future growth). Sierra is not in jeopardy at all.

There is a decrease in energy science research in the 2018 budget, but not in scientific computing research. The funding for the Office of Science Advanced Scientific Computing Research was not cut in the 2018 proposal; it was increased.

Perhaps it looks like funding levels were decreased because the ASCR is now divided into two segments: “Research” and “Office of Science Exascale Computing Project (SC-ECP)”. The sum of those two segments results in a 16% increase for proposed 2018 ASCR spending over 2016 ASCR spending. For instance, if one looks at the “Applied Mathematics” and “Computer Science” subsections of ASCR, which both show a decrease in 2018 funding from 2016 levels, there is a chart that explains: “Decrease reflects transfer of exascale related funding to the SC-ECP and does not constitute a change in scope for these activities.” Research and Evaluation Prototypes funding also looks like it was decreased sharply, but again there is an explanation in the chart: “Decrease reflects transfer of exascale related funding to the SC-ECP and does not constitute a change in scope for these activities while there is an increase to support additional quantum computing testbed activities.”

Looking directly at the Leadership Computing Facility charts comparing 2016 and 2018 budgets, one sees that ANL’s LCF budget is increased by 30% and ORNL’s LCF budget is increased by 42%. Aurora should fall under ANL’s LCF budget.

If there is a change in the plans for Aurora it does not seem to be the result of budget considerations, but rather the result of strategic or technical considerations.

We did not mean to imply by this article that the budget was the reason. The budget was just the first confirmation of changes to the timeline of Aurora, as we had been hearing from sources. As pointed out in the first paragraphs, the budget is not cut or set back and we do not suspect this is the reason for the Aurora changes, whatever those may be. What we can still say for certain, given the nebulous responses from ON-RECORD sources, is that the official line is “no comment” or “we will provide more information soon”.

10nm yields have been low, so it probably is just a matter of being patient for the next shrink. It could end up as some combination of Knights Hill, Lake Crest (deep learning), Arria FPGAs, or Knights Mill (low precision).

Isn’t this the old adage: Intel promises, NVidia delivers? I see a deep, murky future for Intel in the acceleration arena. As Itanium finally sinks and the ghost of Larrabee spooks the Knights of Lore, what else is left in the field of innovation? Alas, it resembles a graveyard.