In the prior two articles in this series (What Is SMP Without Shared Memory? and The Essentials Of Multiprocessor Programming), we have gone through the theory behind programming multi-threaded applications, with the management of shared memory being accessed by multiple threads, and of even creating those threads in the first place. Now, we need to put one such multi-threaded application together and see how it works. You will find that the pieces fall together remarkably easily.

If we wanted to build a parallel application using multiple threads, we would likely first think of one where we split up a loop amongst the threads. We will be looking at such later in a discussion on OpenMP, but another that we like to help explain things is an application which accepts work requests from some outside source such as the Internet. No matter the incoming rate, we would want that application to process all those requests with no noticeable delays; you really don’t want to get in a situation where some late coming request finds itself queued up behind a whole slew of long running preceding requests. This is a natural for multi-threading as needed.

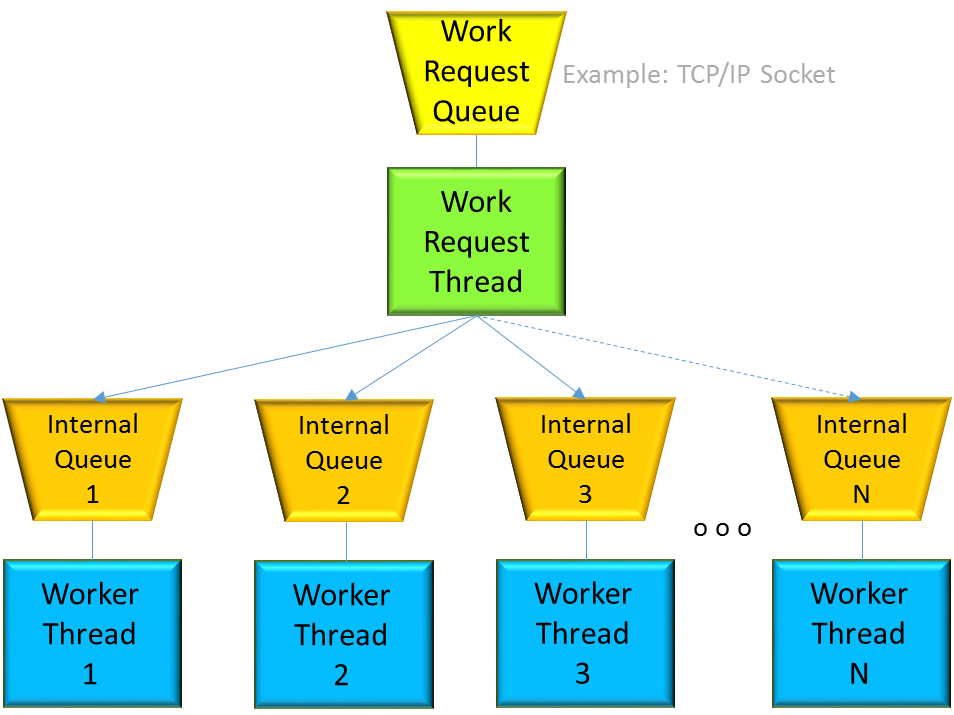

What we are going to build will be something relatively straightforward. A master Thread M accepts the incoming requests, does some minimal processing on it and quickly passes each request to one of a set of internal queues for processing, each one processed by a Worker Thread WX. The number of threads and their queues is arbitrary, potentially growing as needed. Looks pretty complex, right? No, not really. In fact, using only what you’ll be seeing here, you could build up an entire string of such queues and threads, each one doing part of the work, passing results onto the next thread(s) for subsequent processing. Such power.

We arrange for the Worker Threads to be completely independent, not sharing any data between them. Clearly, though, the starting Work Request Thread shares an Internal Queue – as well as the requests placed there – with the associated Worker Thread. We will be using some lock protection over the sharing of each such queue.

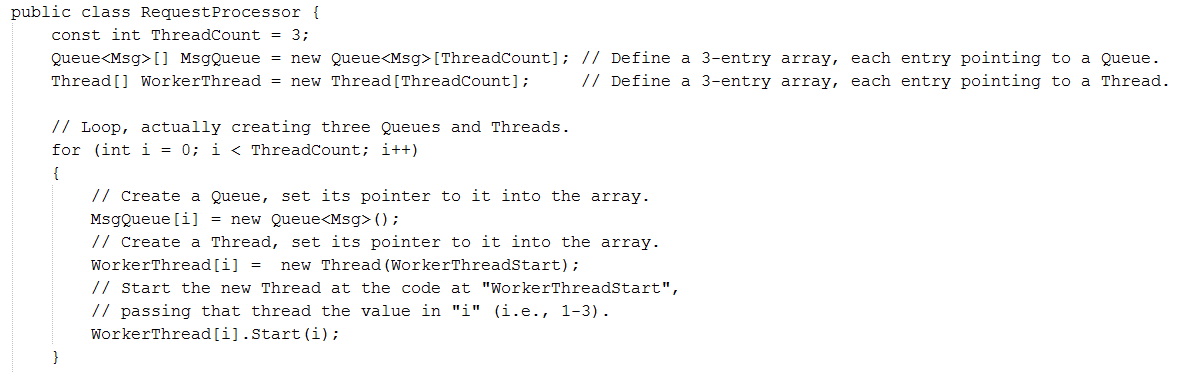

Let’s get things started. The Work Request Thread – let’s call it Master for short – needs to start by creating the Internal Queues – Queues for short – and the Worker Threads – Workers for short. (We will keep things simple in this demo and say we have just three of each.)

In storage known to the Master, using C#, we create objects representing these threads and queues.

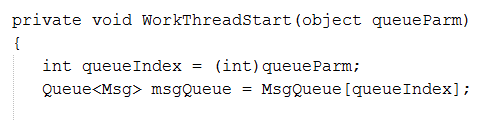

After constructing this RequestProcessor object (and executing the loop code snippet), we have three threads, all starting execution at a location in this program called “WorkerThreadStart”, a method as in the following:’

WorkerThreadStart is just a method within the RequestProcessor class, so when execution reaches here it knows all about the related RequestProcessor object which holds the MsgQueue array. Since the Start() method invocation identified which array entry to use, WorkThreadStart also knows which of the message queues is to be used by this thread. More precisely, look again at the WorkerThreadStart method. It is passing in “i” (a value from 1-3) to the new Worker thread. That is queueParm at the method “WorkerThreadStart”; this is really telling the Worker thread starting here which of the three message queues it will be using; we want a one-to-one relationship between each Worker thread and its own queue. Each Worker will be reading work request messages from only its own queue.

The purpose of each Worker thread is to process work requests provided by the Master Thread via the Worker thread’s queue. These work requests are the message “Msg” objects placed onto each of the queues. When a Worker thread finds a message waiting on its own queue, that Worker thread then knows what to do. The message “Msg” object can contain any information that you want the Worker thread to know, so I don’t define it here.

Now here it gets interesting. We want each of the Worker threads to Wait on its own queue, waiting there until it is told that there is a message (or more) waiting there for it to process. But recall this queue is in shared memory, common to both the Master thread and the corresponding Worker Thread. In our little example, the Master thread is putting messages onto the queue(s) and the Worker Thread takes them off; both are modifying the queue to accomplish this. For all we know, at the very moment that the Master is adding a new message, the Worker might be trying to remove another message that is already there. As a result, we need to Lock the queue at these times; only one thread at a time is allowed to access and modify the queue.

So, here we introduce the notion of a C# Monitor on an object. As with all locks, it synchronizes access to objects, but the notion of a Monitor provides a bit more. To explain, we continue.

Before the Worker Thread does anything to the message queue, it monitors that queue, acquiring – if it can – a lock on that object (i.e., msgQueue) as in the following:

Monitor.Enter(msgQueue);

if ( msgQueue.Count == 0 ) { … }

If our Master thread already has a lock temporarily on msgQueue, this Worker Thread waits to enter this code until later when the Master Thread frees the lock.

To explain, consider the second line where we ask the question whether there any messages on this queue. We really don’t want to being doing this if the Master thread happens to be in the process of adding a message to the queue. But once the Worker acquires the lock, we can test for messages and be sure that the answer won’t change while we are working with the msgQueue.

But, for what we are attempting to produce, if there is a message, we would go process it. If, though, there is no message on the queue, we’d want to have this thread continue to wait on the queue. So, sans message, right after this Monitored region is entered (with lock held), we execute the following:

Monitor.Wait(msgQueue);

and the Worker thread waits here for a new message.

There is a bit of a trick here. The Worker thread is waiting, but while waiting Worker thread is not accessing msgQueue; Worker thread is simply not executing. The trick is that since Worker thread is waiting, and it does not need the lock. No access of msgQueue means that the Wait can implicitly free the lock that it had previously acquired, allowing our Master to later enqueuer a new message. Upon later continuing – say because a new message did show up – the lock on msgQueue is implicitly reacquired, allowing the subsequent processing to again access msgQueue while holding its lock. Nice design. It is all hidden in these few lines of code.

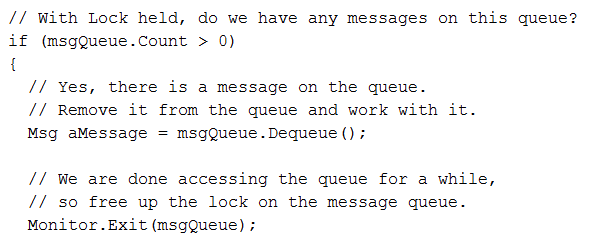

We will be looking shortly at what it is that allowed the Worker thread to leave this Wait, but for now let’s just say that it did. In the following, our Worker thread has just now exited the Wait, with the lock held.

All right, with the lock held, we proceed and access the msgQueue. As an example, we start in the code below by asking the question whether there are any messages on the queue. (This test is not strictly needed in this example, but we can only do such as this with the lock held.) Since there is at least one message, still with the lock held, we read and remove a message “aMessage” from the queue; we dequeue it. (By the way, the Dequeue() method is just part of the C++ class Queue, and has nothing to do with multi-threading. It just removes a message from a Queue.) This changes the msgQueue object, potentially leaving it empty.

With the new message dequeued, still holding the lock, we don’t intend to touch the msgQueue again for a while; we free up the lock, allowing the Master thread to enqueue a new message to the queue. Rather straightforward, and quick. I’ve said a lot of words here, but upon review you’ll see that what you needed to do to manage the multi-threading part of this was nearly trivial.

OK, changing subjects, we are shortly going to take a look at what really woke the Worker thread from its Wait. Picture the Worker thread waiting on the queue as shown above. How does the Worker thread know when not to wait, when to process another message? That’s what we are going to look at in a moment.

Recall first, though, that in our figure above we have at least three queues. A new request just came from the outside world and it is picked up by the Master thread. To which of the queues should the Master pass the request? That logic is completely up to your application. Ideally, there should be no case where a new request is waiting to be processed by a busy thread, especially if there are threads still waiting for work. Partly to keep the processor cache’s hot, we might want to keep sending work to the same thread(s) as long as they remain generally available (and expect that OS’ Task Dispatcher to assign these threads to the processor last used). Still, though, a decision is made, perhaps one based on some randomizing hash, to send the new request to a queue, ideally one with a waiting thread for immediate processing.

So, once done, how does the waiting task know of the new message? For some types of queues in some OSes, enqueuing a message on a waitor’s queue also automatically forces that the thread to become dispatchable and once executing finds and dequeues the message. That is, the act of enqueuing of the message implicitly wakes a waiting thread. Here, though, we are instead going to have, as two separate explicit operations, the enqueuing of the message and THEN the waking the thread.

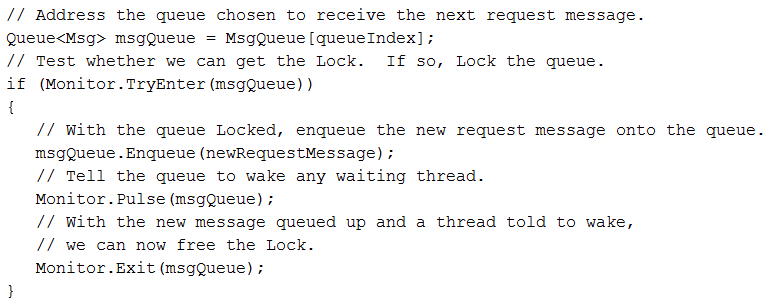

To explain, consider this next code snippet executed by the Master thread. (The integer variable queueIndex resulted from the Master choosing which queue.)

You have hopefully noticed something new here, a TryEnter (a.k.a., Try Lock). This allows you to test whether the lock can be immediately acquired. If so, the lock is acquired and processing continues within the brackets. If not, you might do some other processing like having this Master thread go back and check its own incoming message queue, or maybe even attempting to put this message on another queue; it’s up to you.

With the lock acquired, the Master thread is free to access and modify the msgQueue. In this case, we are going to Enqueue a new message onto this queue. At this point, the Master thread is just enqueueing the message; a waiting Worker thread knows nothing about it, yet.

Telling a waiting Thread of the new message is the function of the following Pulse operation. It is like an interrupt, it wakes the waiting thread, makes it dispatchable, allowing it execute and then find the newly enqueued message.

With this Pulse operation, both this Master thread – executing this code – and the Worker thread – shortly dequeueing this method as shown earlier – are potentially executing. Well, that’s not completely true; the Master thread is still holding the lock on the msgQueue at this moment; the Worker thread, coming out of the Wait, is implicitly attempting to reacquire the lock as well. So, as in the above, the first thing the Master thread does is to free the lock it still holds, allowing the Worker thread the opportunity to read from this msgQueue while holding the lock. Got that? The Worker thread was first waiting on the queue, but it can’t really proceed until it can next re-acquire the lock, a lock still held by the Master thread. Once the waiting Worker acquires the lock, dequeues the message, and then frees the lock, off it goes to do whatever is implied by the message.

So, stepping back for a moment, recall that we have some number of asynchronously executing Threads. One is a Master, delivering work request messages arriving from the outside world. (In our little model here, the Master does not really care about the work’s completion, but it could; we’ll look at that in the next article on OpenMP.) The other threads execute on their own, sharing data only with the Master thread. The only new news we’ve seen here is that we need to control the access to shared memory – that via the Monitor’s lock and we need to give each Worker thread a “kick” when new work arrives for it – that via the Monitor’s Pulse.

Interestingly, even a “kick” is not strictly required if the Worker threads instead periodically polled the contents of the queues. Instead of simply waiting, the Worker threads could wake as in the following:

Monitor.Wait(msgQueue, 100);

The extra parameter – the “100” – is just saying “Either wait until someone else interrupts us or wait for up to 100 milliseconds.” Here, again, when this thread wakes up – either way – it must first successfully acquire the lock. I should at that, if the Worker thread completed its processing of one message and then comes back and finds another, it would/should not bother waiting, just again get the lock and dequeue the next message.

We have used a Monitor on a shared queue object here largely because we were using messages to pass parameters to the waiting threads via a shared-memory queue. But if you don’t need these protections, the same basic notion of a Worker thread waiting indefinitely for work to arrive, and then being “kicked” when it does, can be supported via a simple Thread.Sleep operation, later arranging for our Master thread-generated interrupt to reach that Sleeping thread. For more, see Pausing and Resuming Threads. More broadly, you might find a starting point here for Thread Synchronization and a much more broad overview here of other Threading Objects and Features.

In the next and final article, we extend to another example, but this time with the intent of describing how to use OpenMP and comparing its features with the more primitive operations that we have been playing with so far.

Related Stories

The Essentials Of Multiprocessor Programming

What Is SMP Without Shared Memory?

This was thge right was to do threading in .NET. But no longer.

All of what is in this article in now built in via the Task Parallel Library (including optimisations like per thread work queues and work stealing should a worker empty its queue).

This entire primitive (timewaste) queue approach is a legacy of the outdated VonNeumann architecture.. soon to disappear.

One can only feel for the great tangle mans rush into computing has become.. My work ahead requires a change of methods.

whilst using a similar theoretical paradigm .. u have been trapped in compiler fiction .. changing in front of yor eyes

“.. the scope is limited to using a shared-memory, cache-coherent, symmetric multiprocessor (SMP) for the hardware and a single multi-threaded process representing a single address space for the storage model.”