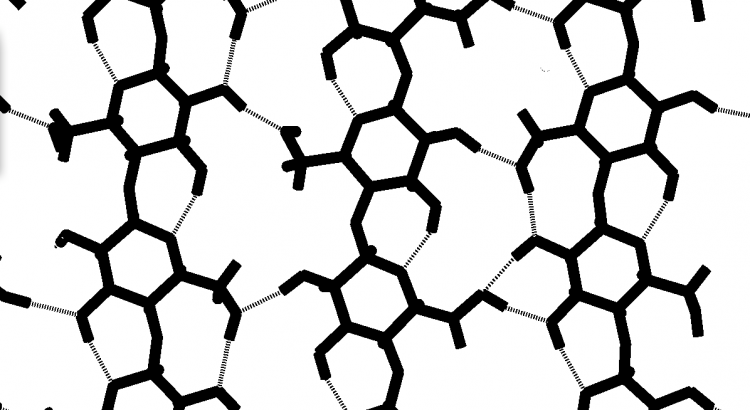

Molecular dynamics codes have a wide range of uses across scientific research and represent a target base for a variety of accelerators and approaches, from GPUs to custom ASICs. Because these codes are iterative, running them on a CPU alone can require massive amounts of compute time for relatively simple simulations, so there is a strong push to find ways to bolster performance.

It is not practical for all users to make use of a custom ASIC (as exists on domain specific machines like those from D.E. Shaw, for instance). Accordingly, this community has looked to a mid-way step between general purpose processors and accelerators on one side and custom hardware on the other: the FPGA. Researchers have been targeting FPGAs to cut the run time and raise the achievable complexity of molecular dynamics codes for several years, but more mainstream success has been limited by the programmatic complexity. Higher level tools, including OpenCL, have added a new base of users for FPGAs on the molecular dynamics front, and while the performance might be less than with more low-level tooling, the approach is catching on.

As it stands now, all of the major molecular dynamics software packages have been ported to CUDA for GPU acceleration, an approach that, judging by the body of research since 2010 (as an ideal starting point), has taken off. In that time, the performance and programming capabilities of FPGAs have also been bolstered, and with more approachable interfaces for design and implementation via OpenCL, reconfigurable devices could see far more adoption than before, although likely not on the scale of the GPU.

FPGAs, like molecular dynamics codes, have been around for decades and have changed relatively little in terms of their core architectures. However, as the hardware has improved in performance and usability to the point where it matches standard MD algorithms, there are new possibilities ahead. This area might not be a new frontier, since research has been ongoing, but it certainly represents a refreshed opportunity.

“ASICs are out of reach for most researchers, although their performance is quite excellent,” notes a team of researchers at Tohoku University in Japan. The group recently developed an FPGA accelerator approach to molecular dynamics simulations using OpenCL. “Although the cost is extremely small compared to ASICs, the design time is still very large.” They point to challenges using HDL and issues around algorithm changes and updates, which often require a redesign of the FPGA architecture each time. To get around these barriers, the team focused on using OpenCL, the open standard that has all the elements needed to manage the FPGA. Although OpenCL does not provide the same close-to-hardware control as HDL, leaving performance on the table, it is a promising toolset for molecular dynamics simulations because changes and updates can be made more quickly than by retooling via raw HDL, a time consuming task that requires deep expertise.

Even with the performance hit of using a higher level interface like OpenCL for FPGA acceleration, the Japanese research team says that with the Altera offline compiler (working with a Stratix V) they were able to get a 4.6X boost compared to a CPU-only implementation by using only 36% of the FPGA’s resources. Working ahead, they found a theoretical max of 18.4X speedup if 80% of the Stratix V resources could be used, which they say is equivalent to what would be gained with an HDL designed custom accelerator.

“Since the architecture is completely designed by software, the same program code can be reused by recompiling it for any OpenCL capable FPGA board,” the team notes, adding that they can also implement changes to the algorithm by updating and recompiling within a few hours—a far cry from what they would experience with HDL. The problem, however, is one that goes beyond software. “The data transfers between CPU and FPGA are still an issue. This can be solved by future SoC based FPGA boards that contain a multicore CPU and FPGA on the same chip.” Of course, this is something that Intel has been clear about delivering in the next few years in the wake of their Altera acquisition. With this, “the PCIe based data transfers can be replaced by much faster on-board data transfers and the use of shared memory can completely eliminate data transfers.” For now, the team is focused on their system that can be connected into multiple nodes for a cluster to cut down processing time.

With added capabilities coming in new Xilinx hardware and of course, as mentioned previously, the coming integration of CPU and FPGA on the same device, we can expect to see growing interest in non-GPU or ASIC acceleration of molecular dynamics simulations. This is enabled by higher level interfaces, including OpenCL, and growing interest in general in what FPGAs have to offer—something that is happening in enterprise analytics, machine learning, and other areas after recent news around successes at Microsoft (with its Catapult boards used to power Bing search) and other use cases in bioinformatics and other areas.

Weeks ago I posted a comment pointing out that you made a rather basic mistake of not assessing the _absolute_ performance achieved in the research highlighted and with that failing to avoid the pitfall of praising many X acceleration of some really slow code. It’s a shame that you make criticism go away by seemingly blocking some comments.

Just searched history of blocked/spam comments and didn’t find you by email or name. We always take feedback, thanks for the pointer. Generally, when we point to a research paper (since we don’t have the full data) we are merely pointing to it because it is interesting. It is not on us to contact the researchers and rerun their experiments in full fidelity.

Nicole, thanks for getting back. I agree that it is not on you to verify research results. However, when it comes to an established field like molecular dynamics with well-known highly optimized codes (like AMBER-GPU, Desmond, GROMACS, NAMD) it is reasonable to take acceleration claims that do not compare against the state of the art with a grain of salt!

Comparing is not trivial, but let me help out with some _rough_ comparison. While this work is very sparse on the details and lacks the information on what simulation system and settings they use (reproducibility FTW), what is clear is that they simulate a 22795 atom system and most likely common model physics (even that’s probably not very relevant unless their model physics is orders of magnitude more computationally intensive, which is unlikely).

I ran some tests with GROMACS (which I happen to know rather well) using a similar-sized input (24000 atoms) on a Xeon E5-2690, SB-E just like the i7-4690X in the paper (clocked only at 2.9 GHz instead of 3.6 GHz). Their CPU code does the non-bonded force computation in 0.86 s/iteration; that’s their baseline. They don’t seem to mention whether it’s the full 6-core CPU or just a single core, but as a comparison, GROMACS v2016 on a single E5-2690 core runs a similar computation in ~17 ms/iteration; on 6 cores with 3.2 ms/iteration (2x slower than the single-core performance of GROMACS!). Take a high-end GPU like an NVIDIA TITAN X Pascal and the same force computation runs with 0.2 ms/iteration! Even a low-end 55W GTX 750 can do the same computation in ~1 ms/step with GROMACS!
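To put the figures quoted so far side by side, here is a rough Python sketch of the arithmetic. It assumes that the 4.6X and 18.4X speedups reported in the article apply to the 0.86 s/iteration CPU baseline, which the paper summary suggests but which is not confirmed here; the function and variable names are made up for illustration, and the two systems differ slightly in size (22795 vs 24000 atoms), so this is an order-of-magnitude comparison only.

```python
# Rough comparison of per-iteration times, using only figures quoted in the
# article and in this comment thread. Names are hypothetical.

BASELINE_S = 0.86  # paper's CPU baseline for the non-bonded force step, s/iteration


def implied_time_ms(baseline_s, speedup):
    """Per-iteration time in ms implied by a speedup over the baseline.

    Assumption: the reported speedup is measured against this baseline.
    """
    return baseline_s * 1e3 / speedup


fpga_measured_ms = implied_time_ms(BASELINE_S, 4.6)    # reported 4.6X speedup
fpga_projected_ms = implied_time_ms(BASELINE_S, 18.4)  # projected 18.4X speedup

# GROMACS timings quoted in the comment, ms/iteration
gromacs_ms = {
    "E5-2690, 1 core": 17.0,
    "E5-2690, 6 cores": 3.2,
    "TITAN X Pascal": 0.2,
    "GTX 750": 1.0,
}

print(f"FPGA (4.6X):  {fpga_measured_ms:.1f} ms/iteration")
print(f"FPGA (18.4X): {fpga_projected_ms:.1f} ms/iteration")
for name, t_ms in gromacs_ms.items():
    ratio = fpga_measured_ms / t_ms
    print(f"GROMACS on {name}: {t_ms} ms, FPGA (4.6X) is ~{ratio:.0f}x slower")
```

Under that assumption the measured FPGA result works out to roughly 187 ms/iteration and the projected one to roughly 47 ms/iteration.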

Let’s validate my (potentially biased 🙂) measurements with publicly available data of other codes. Recent AMBER-GPU [2] and Desmond-GPU [3] benchmark numbers are available (plot 3 of the former and Table 2 of the latter) for the same input size as above.

To convert application performance in ns/day (nanoseconds of simulation time per day) to wall-time per iteration in ms, we can use the formula 172.8 / [perf in ns/day]; the constant corresponds to a 2 fs timestep (86,400 s per day divided by 500,000 steps per simulated ns). Taking a TITAN X Pascal GPU, that gives us 338.3 ns/day = 0.51 ms/iteration for AMBER-GPU and 383.3 ns/day = 0.45 ms/iteration for Desmond/GPU. That’s the total iteration time, not only the non-bonded compute accelerated in the paper.

These comparisons should highlight why it is important to have a reasonable baseline when talking about acceleration.

[1] http://arxiv.org/abs/1506.00716

[2] http://ambermd.org/gpus/benchmarks.htm#Benchmarks

[3] http://www.deshawresearch.com/publications/Desmond-GPU%20Performance%20as%20of%20November%202016.pdf