It has taken a little longer than expected, but then again, creating a datacenter operating system that mimics the sophisticated bare metal and virtualized systems inside of search engine giant Google is no simple task. And therefore, customers who have been anxiously awaiting the commercialized version of the Apache Mesos management tool – which does just about everything but mop the datacenter floors – will no doubt forgive Mesosphere for putting in the extra time.

Now, the race is on to determine what the future of computing in the datacenter will look like, pitting Mesosphere and the class of hyperscale upstarts that have helped build it with the gentle nudgings of Google against the generation of server virtualizers such as VMware, Red Hat, Citrix Systems, and maybe the OpenStack collective, who all have a certain share of the enterprise computing base and who all want to deliver what VMware has been calling the software-defined datacenter.

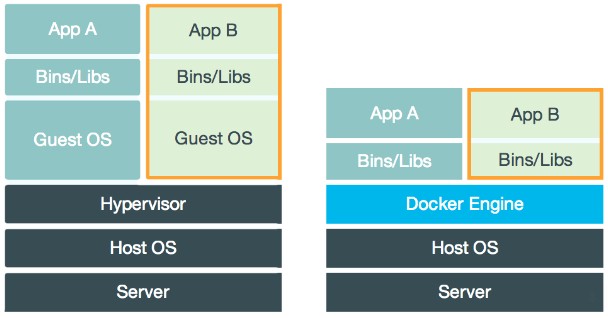

Ultimately, what all of these companies are trying to do is create a software mainframe with sophisticated partitioning capabilities that range from bare metal through containers all the way up to heavy virtualization. The difference between now, as Mesosphere is delivering its Data Center Operating System, or DCOS, and back then maybe 35 years ago, when the IBM mainframe was the central engine of compute and had logical partitions (the analogy to virtual machines) and subsystems (akin to software containers like Linux containers, cgroups, or Docker containers), is that Big Blue was creating the mixture of microcode and systems software on a single machine with some intelligent controllers for storage and peripherals. These days, the hardware of choice for enterprise applications is a scale-out cluster, almost always on an X86 processor and usually on a Xeon processor from Intel. But Mesos is being created so it can absorb new computing architectures as they come and go, riding up the ARM server wave, should it come, or perhaps a resurgence in Power systems, should that happen.

More importantly, the Mesos architecture is designed to be inclusive as well as open source, allowing for various frameworks to be created so other computing environments can snap into Mesos. This is in homage to Google’s Borg cluster management tool, no doubt, which is also similarly extensible and, in a sense, eternal. Like Ethernet will be, too, we suppose.

An Autopilot For The Datacenter

Mesosphere, the company that is commercializing the Apache Mesos cluster controller and application framework, dropped out of stealth mode a year ago, and in that short time it has put together an impressive team of techies and raised the funds that will help it bring the Google Way to the Global 2000, if all goes according to plan. While Mesosphere may be aiming at the Global 2000, as it has been saying for the past year, with over 3,800 companies having downloaded the beta of its DCOS stack, it is clearly going to hit a broader target in the enterprise, particularly among the 1 million users who are running applications on the Amazon Web Services public cloud. This is particularly true given that the Community Edition of DCOS, which is being launched today, will be a fully featured version of the Mesosphere stack that is available on AWS. Mesosphere is also launching its DCOS Enterprise Edition, which follows an “open core” strategy of adding extra goodies for large enterprises that are not open source and charging for them as part of a service contract for the whole stack.

The fact is, if a Data Center Operating System is good for a large financial institution or retail giant, it will probably be useful for any company with a few dozen servers and a tight budget. The idea of driving up utilization on clusters by sharing resources better is not one that is just important for large enterprises, although efficiencies matter more at megascale for companies where computing is a big component of what they offer as products and services. It is this fact, not the scale in and of itself, that has compelled hyperscale companies like Google, Facebook, Yahoo, and Amazon to create their own cluster schedulers and application frameworks. We would call Mesos a platform, of course, because that is what it really is.

If you haven’t heard about Mesos, it is probably time you did some investigating. The Mesos effort was started by Ben Hindman, who is now listed as a company co-founder and is chief architect after joining Mesosphere last September. At the time, Hindman was working at the AMPLab at the University of California at Berkeley, which is heavily sponsored by Google, and was specifically trying to make software run better on multicore, multithreaded processors. Six years ago, Hindman came to the realization that the techniques for dispatching code on multicore processors could be used for assigning work to nodes in a cluster, and with some inspiration from Google’s Borg cluster management tools, the AMPLab began to create an analog called Mesos. Since then, Mesos has become an Apache project, and Tobi Knaup of Airbnb and Florian Leibert of Twitter and then Airbnb did a lot of work making Mesos a product and using it in production at their respective companies. Knaup created the search engine and fraud detection software at Airbnb and also the Marathon application framework that is a key part of Mesos. Leibert, who is the CEO at Mesosphere, created the search engine and analytics systems at Twitter and also coded the Chronos fault-tolerant job scheduler for Mesos. Hindman also spent some time at Twitter as Mesos was evolving, and, significantly, Christos Kozyrakis, a researcher from Stanford University who is an expert on cloud and job scheduling software, joined the company last fall, too.

As is usually the case with any hot new technology, the largest hyperscalers cannot wait around for a commercial release of the software to put it into production, and a slew of early adopters, including Apple, Best Buy, Conviva, eBay, PayPal, Groupon, HubSpot, Netflix, OpenTable, Salesforce.com, Vimeo, and Yelp, have all put Mesos into production to control the clusters underneath key applications. Apple doesn’t say much about its IT operations, but it said back in April that Mesos is underpinning the third generation of the clusters that support its Siri personal assistant service. Yelp is using Mesos to power a cluster for testing applications that has over 1 million Docker containers, using an application framework add-on called PaaSTA that rides on top of the Marathon layer in Mesos, akin to the Kubernetes container management overlay that Google created and open sourced. Leibert tells The Next Platform that one unnamed customer is getting ready to roll DCOS Enterprise Edition out across 12,000 nodes in its datacenter.

Someday, after so many companies have worked together to create Mesos, someone might get the bright idea of using Mesos to underpin a new kind of search engine, and Google will find itself under competitive pressure from the very software it helped spawn. Google no doubt wants others to contribute to the font of knowledge about cluster management, job scheduling, and application frameworks, because it will benefit from a pool of techies who understand this as well as perhaps an idea or two its own internal techies have not thought of. But Google runs the risk of creating its own competitors, too. The fact that Google is being more helpful – including the contribution of the code for the Kubernetes container management system for Docker – shows just how much of a lead Google thinks it has over the industry.


People will argue about whether or not DCOS is truly an operating system, but at some level of abstraction, it really is. The Mesos kernel abstracts compute, memory, storage, networking, and other resources on the server nodes in a cluster and presents them as raw resources to application frameworks. Perhaps most importantly, the Mesos kernel knows how to automatically scale applications as they require more resources and free those resources up when they don’t, which allows companies to cram as many workloads as possible concurrently onto their clusters. Like a real operating system, DCOS has a user interface – both a command line and a graphical one – plus a software development kit for creating applications.
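To make the idea of handing “raw resources to application frameworks” concrete, here is a toy Python model of the two-level scheme Mesos is known for: the kernel offers each node’s free CPU and memory, and each framework alone decides which of its tasks to launch on what it is offered. Everything here (`Offer`, `ToyFramework`, `run_offer_cycle`) is hypothetical and heavily simplified. It is not the Mesos scheduler API, and real Mesos uses an allocation policy to decide which framework sees which offers, rather than passing leftovers down a fixed list as this sketch does.

```python
from dataclasses import dataclass, field


@dataclass
class Offer:
    """Free resources on one agent node, as offered to a framework."""
    agent: str
    cpus: float
    mem_mb: int


@dataclass
class Task:
    name: str
    cpus: float
    mem_mb: int


@dataclass
class ToyFramework:
    """Hypothetical framework: it alone decides how to use what it is offered."""
    name: str
    pending: list[Task]
    launched: list[tuple[str, str]] = field(default_factory=list)  # (task, agent)

    def resource_offers(self, offers: list[Offer]) -> list[Offer]:
        """Launch pending tasks that fit; hand back what is left of each offer."""
        leftovers = []
        for offer in offers:
            cpus, mem = offer.cpus, offer.mem_mb
            still_pending = []
            for task in self.pending:
                if task.cpus <= cpus and task.mem_mb <= mem:
                    cpus -= task.cpus
                    mem -= task.mem_mb
                    self.launched.append((task.name, offer.agent))
                else:
                    still_pending.append(task)
            self.pending = still_pending
            leftovers.append(Offer(offer.agent, cpus, mem))
        return leftovers


def run_offer_cycle(agents: dict[str, tuple[float, int]],
                    frameworks: list[ToyFramework]) -> dict[str, tuple[float, int]]:
    """Offer every agent's free resources to each framework in turn.

    Returns the (cpus, mem_mb) still free on each agent after one cycle.
    """
    offers = [Offer(name, cpus, mem) for name, (cpus, mem) in agents.items()]
    for fw in frameworks:
        offers = fw.resource_offers(offers)
    return {o.agent: (o.cpus, o.mem_mb) for o in offers}


if __name__ == "__main__":
    agents = {"node-1": (4.0, 8192), "node-2": (4.0, 8192)}
    web = ToyFramework("web", [Task("web-1", 1.0, 1024), Task("web-2", 1.0, 1024)])
    batch = ToyFramework("batch", [Task("etl", 4.0, 4096)])
    print(run_offer_cycle(agents, [web, batch]))
```

In this run the web framework packs both of its small tasks onto node-1, and the batch framework, finding node-1 too depleted for its 4-CPU job, places it on node-2. The point of the design is that the kernel never needs to understand either workload; it only tracks and offers capacity.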

DCOS runs on just about anything, too, a bit like Linux does. Bare metal servers with Red Hat Enterprise Linux or its CentOS clone, the CoreOS containerized Linux, Canonical’s Ubuntu Server, and the raw Debian Linux can run DCOS. The software can run atop VMware’s ESXi hypervisor, which is by far the most popular choice among the Global 2000 and which is running at 500,000 companies worldwide, as well as the vCloud Air public cloud that VMware has created based on its virtualization and cloud tools. Ditto for public and private clouds based on the OpenStack cloud controller and the KVM hypervisor, which are often paired up. Google Compute Engine, Microsoft Azure, Rackspace Cloud, and DigitalOcean public clouds can be wrangled by DCOS, and of course so can virtual infrastructure on AWS. Mesos is not yet supported on bare metal X86 machines running Windows, but there is no technical reason why this could not happen. And as we have said, there is nothing in the Mesos kernel that can’t be ported to ARM or Power server architectures should the need arise.

Leibert says that the Community Edition is only going to be available to manage infrastructure and applications on the public cloud, and that, unlike many free editions of management tools, it has no scale or capacity limits placed on it. It is only available running CoreOS Linux, however. Right now, DCOS Community Edition is supported on AWS, with early access customers being able to deploy it on Microsoft Azure and Google Compute Engine. Community Edition is not intended for use on private clouds, and it has no official support from Mesosphere beyond online web support through the community. If you wanted to, you could cobble together all of the components of the Community Edition from various open source projects, but this rollup saves you the trouble.

If you want to run Mesos on internal clusters or in hybrid mode across public clouds and private clouds, then you have to move to DCOS Enterprise Edition. The Enterprise Edition has features to support OpenStack and VMware clouds as well as support for the other Linuxes, plus additional security such as LDAP and Kerberos authentication. It is not free. While Leibert is not divulging precise pricing, he tells The Next Platform that the Enterprise Edition has an annual license fee on the order of “thousands of dollars per node” and adds that thus far customers who have put DCOS into production have been able to offset the subscription costs with increased utilization and lower management costs “within months” of deploying the tool.

The big savings, explains Leibert, come from running jobs that would traditionally have been on separate clusters in a shared mode across those clusters – precisely what Google has done for more than a decade using its own software containers and Borg management tool. In effect, you “bin pack” the cluster, much as retailers long ago learned to do to speed up moving new items onto their shelves, or as shipping containers allow on a far larger scale for transoceanic cargo.
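Bin packing is the heart of that sharing argument, and a small example shows why pooling beats silos. The sketch below uses first-fit decreasing, a classic bin-packing heuristic, purely as a stand-in (the article does not say which placement algorithm DCOS or its frameworks use), and compares three hypothetical teams packing their core demands onto separate clusters of 16-core nodes against packing the same jobs onto one shared pool. The team names and core counts are made up for illustration.

```python
def first_fit_decreasing(demands: list[int], capacity: int) -> int:
    """Return how many nodes first-fit decreasing needs for these jobs.

    Each demand is one job's core count; every node has `capacity` cores.
    """
    free = []  # cores still unused on each node opened so far
    for d in sorted(demands, reverse=True):
        if d > capacity:
            raise ValueError(f"a {d}-core job cannot fit on a {capacity}-core node")
        for i, cores_left in enumerate(free):
            if d <= cores_left:
                free[i] = cores_left - d
                break
        else:  # no existing node had room, so open a new one
            free.append(capacity - d)
    return len(free)


# Hypothetical core demands for three teams that used to run separate clusters.
silos = {"web": [6, 6, 6], "analytics": [5, 5, 5, 5], "cache": [3, 3]}
NODE_CORES = 16

siloed_nodes = sum(first_fit_decreasing(jobs, NODE_CORES) for jobs in silos.values())
shared_nodes = first_fit_decreasing([j for jobs in silos.values() for j in jobs], NODE_CORES)
total_cores = sum(sum(jobs) for jobs in silos.values())

print(f"separate clusters: {siloed_nodes} nodes, "
      f"{total_cores / (siloed_nodes * NODE_CORES):.0%} busy")
print(f"shared cluster:    {shared_nodes} nodes, "
      f"{total_cores / (shared_nodes * NODE_CORES):.0%} busy")
```

With these numbers the separate clusters need five nodes running 55 percent busy, while the shared pool needs three running about 92 percent busy. The leftover slivers of capacity that each silo strands on its own nodes become usable once every job can land anywhere.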

“DCOS increases your resource utilization easily by a factor of 2X to 3X,” explains Leibert. “So at the very general baseline, you get about a 50 percent cost savings. But we have seen companies that have increased their utilization on their clusters by a factor of 5X or more, and they have even more cost savings. For companies that are running tens or hundreds of thousands of servers, that is really a game changer.”
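The arithmetic behind that baseline is simple if you assume, as a simplification, that the workload stays fixed and server cost scales with node count: raising utilization by a factor k lets the same work run on 1/k as many nodes, so the fraction of servers saved is 1 - 1/k. The helper name below is ours, not Mesosphere’s.

```python
def server_cost_savings(utilization_gain: float) -> float:
    """Fraction of servers saved for a fixed workload when utilization rises
    by `utilization_gain`, i.e. 1 - 1/gain. Assumes cost tracks node count."""
    if utilization_gain < 1:
        raise ValueError("utilization_gain must be at least 1")
    return 1 - 1 / utilization_gain


for gain in (2, 3, 5):
    print(f"{gain}X utilization -> {server_cost_savings(gain):.0%} fewer servers")
```

A 2X gain yields the 50 percent baseline, 3X yields about 67 percent, and 5X yields 80 percent, which is why the higher multiples translate into “even more cost savings.”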

One early adopter of DCOS, says Leibert, is actually running clusters across multiple datacenters at 95 percent CPU utilization – a mainframe-class utilization rate that stands in stark contrast to the 8 percent to 15 percent average utilization for a server in the datacenter. As Matt Trifiro, senior vice president in charge of marketing at Mesosphere, quipped: “IT is high-fiving it if they get CPU utilization up to 22 percent.”

Looking ahead, Mesosphere is going to try to drive utilization up even further on DCOS clusters, and to that end Kozyrakis is working with Stanford researcher Christina Delimitrou on a project called Quasar that will add oversubscription capabilities to Mesos using a mix of machine learning and collaborative filtering techniques. Elements of the oversubscription system being created by Kozyrakis will be pushed into the Apache Mesos open source project and some of the goodies will be retained for DCOS Enterprise Edition, says Trifiro.

“The efficiency of the cluster is already 2X to 3X just from bin packing, but what oversubscription will mean is that without a lot of operational headache, you can drive up utilization to 75 percent to 80 percent easily.”

The idea with Quasar is to balance jobs that have a service-level agreement against those that are best effort. Because DCOS is aware of what is running in the cluster as well as what jobs are guaranteed to run over time, it can essentially bin pack beyond the cluster’s nominal capacity. (Supercomputer schedulers have done this for decades.) The twist with Quasar is that it will work with the Mesos kernel to pause jobs that don’t have SLAs so those that do can complete in a timely fashion. (Spot instances on the AWS cloud work in a similar fashion relative to reserved and on-demand instances.)
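The pause-the-best-effort-work policy can be modeled in a few lines. This is a hypothetical single-node sketch in Python, not Quasar itself (Quasar also predicts each job’s resource needs, which is omitted here), and the class and method names are invented for illustration. SLA jobs get room whenever pausing best-effort jobs can free enough CPUs, and paused jobs resume once capacity comes back. A real system would also have to actually suspend and resume the underlying processes, which this model only records.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    cpus: int
    has_sla: bool


@dataclass
class OversubscribedNode:
    """Hypothetical node: best-effort jobs soak up idle CPUs and are paused,
    not killed, when a job with an SLA needs the room."""
    capacity: int
    running: list[Job] = field(default_factory=list)
    waiting: list[Job] = field(default_factory=list)  # paused or not yet started

    def free(self) -> int:
        return self.capacity - sum(j.cpus for j in self.running)

    def submit(self, job: Job) -> bool:
        """Try to run `job` now; return True if it is running."""
        if job.cpus <= self.free():
            self.running.append(job)
            return True
        if not job.has_sla:
            self.waiting.append(job)  # best-effort work simply waits its turn
            return False
        best_effort = [j for j in self.running if not j.has_sla]
        if job.cpus > self.free() + sum(j.cpus for j in best_effort):
            return False  # rejected: even pausing all best-effort work is not enough
        for victim in reversed(best_effort):  # pause the newest best-effort jobs first
            if job.cpus <= self.free():
                break
            self.running.remove(victim)
            self.waiting.append(victim)
        self.running.append(job)
        return True

    def finish(self, name: str) -> None:
        """Drop a completed job, then resume waiting jobs that now fit."""
        self.running = [j for j in self.running if j.name != name]
        still_waiting = []
        for job in self.waiting:  # in the order they started waiting
            if job.cpus <= self.free():
                self.running.append(job)
            else:
                still_waiting.append(job)
        self.waiting = still_waiting


if __name__ == "__main__":
    node = OversubscribedNode(capacity=16)
    node.submit(Job("batch-a", 6, has_sla=False))
    node.submit(Job("batch-b", 6, has_sla=False))
    node.submit(Job("web", 8, has_sla=True))  # pauses batch-b to make room
    print([j.name for j in node.running], [j.name for j in node.waiting])
    node.finish("web")  # batch-b resumes
    print([j.name for j in node.running], [j.name for j in node.waiting])
```

Pausing rather than killing is the design choice that matters: the best-effort job loses time, not progress, which is what lets a scheduler fill idle capacity aggressively without putting SLA work at risk.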

The adoption of DCOS on public clouds like AWS has another effect on utilization, explains Leibert. First, DCOS has a per-host charge, and Mesosphere doesn’t care if that host is a physical server in a datacenter or a virtual one on a public or private cloud. Given that the whole point of Mesos is that it can run jobs side-by-side on a single machine and drive up efficiency, there is a tendency to buy the largest possible instances on a public cloud, generally the one where the customer is actually getting all of the machine plus a hypervisor. This means that customers are eliminating the “noisy neighbor” problem of hypervisors while at the same time getting higher compute capacity per node, which, when shared by many jobs, lowers overall cost. The other thing that the use of Mesos is stopping is the over-provisioning of server infrastructure by application developers and system administrators.
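Why per-host pricing nudges customers toward big instances comes down to division. The sketch below assumes a flat, hypothetical fee of $2,000 per host per year (Mesosphere has only said “thousands of dollars per node”) and a fixed need for 144 cores; both numbers, and the function name, are illustrative assumptions.

```python
import math

HYPOTHETICAL_FEE_PER_HOST = 2_000  # USD per host per year; illustrative, not a real price


def annual_license_bill(total_cores: int, cores_per_host: int,
                        fee_per_host: float = HYPOTHETICAL_FEE_PER_HOST) -> float:
    """Per-host licensing: the bill tracks the number of hosts, not their size."""
    hosts = math.ceil(total_cores / cores_per_host)
    return hosts * fee_per_host


for cores_per_host in (4, 16, 36):
    bill = annual_license_bill(144, cores_per_host)
    print(f"{cores_per_host:>2}-core hosts: ${bill:,.0f} per year")
```

Under these assumptions the same 144 cores cost nine times as much to license on 4-core hosts as on 36-core hosts, so consolidating onto the largest instances pays twice: once in license fees and again in the packing headroom each big node offers.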

Leibert says that, for instance, on a typical Java stack, the system might only need 1 GB or 2 GB of memory, but an instance is allocated with 16 GB or 32 GB “just to be safe,” which is a massive overallocation of memory. He adds that CPU overallocation is worse, and storage overallocation is even worse than that. “This overallocation is one of the problems we are really addressing,” says Leibert.
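Leibert’s Java example is easy to quantify. Taking his figures of 1 GB to 2 GB actually needed versus 16 GB or 32 GB allocated, the sketch below computes the share of each allocation that sits idle and, for a hypothetical 256 GB host, how many instances fit when sized “just to be safe” versus sized to actual need. It looks at memory only; by his account, CPU and storage overallocation are worse still.

```python
def idle_share(needed_gb: float, allocated_gb: float) -> float:
    """Fraction of a memory allocation that the workload never touches."""
    return 1 - needed_gb / allocated_gb


def instances_per_host(host_gb: int, per_instance_gb: int) -> int:
    """How many instances of a given memory size fit on one host."""
    return host_gb // per_instance_gb


HOST_GB = 256  # hypothetical host size

print(f"{idle_share(2, 16):.1%} idle when 2 GB of 16 GB is used")
print(f"{idle_share(1, 32):.1%} idle when 1 GB of 32 GB is used")
print(instances_per_host(HOST_GB, 16), "instances per host sized 'just to be safe'")
print(instances_per_host(HOST_GB, 2), "instances per host sized to actual need")
```

Even at the mild end of his range, seven-eighths of the memory goes unused, and right-sizing lets eight times as many instances share the same host.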

DCOS comes with the Hadoop Distributed File System as a default, but this is not the only file system supported by Mesos. In general, the Marathon framework is used for long-running jobs, while the Chronos framework is used to run short-lived jobs. There are a bunch of other frameworks that Mesos supports:

In general, Leibert says that Tomcat Java application servers, Hadoop and HDFS, message queuing software, and NoSQL data stores like Cassandra are the popular programs that are being managed by DCOS. Docker is also driving Mesos adoption, particularly with the combination of Mesos and Kubernetes. At least one enterprise customer is monkeying around with the MPI stack used to distribute applications on supercomputer clusters, and you could load up a Lustre cluster if you wanted to. (This would run in a static mode, unlike the dynamic mode for HDFS, which has automatic scaling and failover.) You will also note in the table above that Cray’s Chapel parallel programming environment is also supported. There is a good chance that Mesos will take off in HPC as it is doing in hyperscale and enterprise.
