Clustering commodity servers created the economies of scale that enable large-scale cloud computing, but as we look to the future of big infrastructure beyond Moore’s Law, how might bleeding-edge technologies reach similar market share and mass production?

To say that quantum computing is a success simply because a few machines manufactured by quantum device maker D-Wave have been sold would not necessarily be accurate. However, what the few purchases of such machines by Los Alamos National Lab, Google, and Lockheed Martin do show is that there is enough interest and potential to get the technology off the ground and into more datacenters. And as we know, governments in the U.S., Europe, and elsewhere are making early investments in a few key options to move forward in the post-Moore’s Law era.

This morning, D-Wave announced a new subsidiary focused on reaching government customers in the U.S. at a time when agencies are just formulating plans and funded research programs for the post-exascale era. For now, it appears the real alternatives with significant traction are quantum and neuromorphic computing, although in the latter several companies, both established vendors and startups, are vying for a slice of those early-stage applications. While neuromorphic computing devices come with their own production and programming challenges, the road ahead for scaling the manufacturing and user successes of quantum computing is a more nebulous story.

As D-Wave’s Bo Ewald tells The Next Platform, “there will be many more applications for quantum computers coming over the next decade, and remember there are really only three of these machines now.” Ewald likens this phase to IBM in 1955, when there was an entirely new architecture with no real applications that grew into an ecosystem in a few short years. He says that although these are early days, it is not hard to imagine a future, as soon as a few years from now, when quantum can be offered as a service from large cloud providers, which would allow users to share the cost of a $15 million (or more) quantum system.

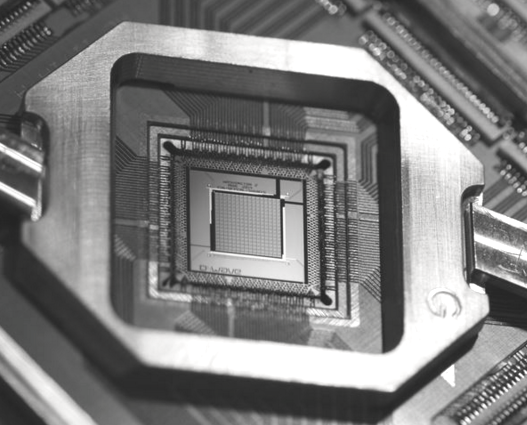

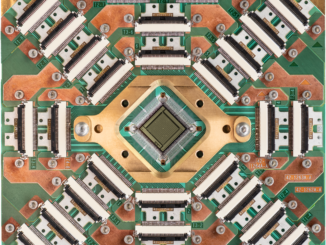

Unlike with traditional computing, there is no simple formula for how the price of quantum systems can come down over time. Shrinking parts of the chip or housing will not bring many benefits; the only thing that will make quantum computers more affordable is volume. These are, after all, chips produced in a standard fab facility. However, keeping the machines at near absolute zero requires engineering work that, for now, is also hard to scale with volume.

“It is early days for our machines, mostly experimentation, but if you look at what Los Alamos is doing in terms of developing new applications, that gives us insight into the future. From NASA to the Department of Energy, to the Department of Defense, there are people who are seeing value in solving problems that aren’t much different than the kind of big logistics we see in airlines or load balancing or getting the best coverage on the ground with a minimum number of moves from a constellation of satellites. These are problems that it might seem are solved already, but we have only just scratched the surface.”

At this juncture, there is a chicken-and-egg problem for quantum computing: the machines are so expensive, and the applications so few, that only a handful of centers can afford them and keep developing toward new applications. And the cost can’t come down until there is sufficient volume (which means new users and applications). Again, Ewald says this paradox is an echo of IBM in its early days, with its machines and lack of an application base.

As we pointed out in a piece about D-Wave training classes and how the company plans to train up a new generation of quantum programmers, it will be an uphill battle to map new applications to these systems. For now, quantum computers are being applied to a number of complex optimization problems, some of which have great value for the government customers that D-Wave hopes to reach with today’s announcement.

“For certain applications, we are going to win in performance, price performance, and certainly performance per watt. But the applications have to be there. Comparing us to performance and the ecosystem of something that has developed over seventy years isn’t a fair metric. This is brand new, there are few applications, and like IBM, over the decades, there have been huge developments that have enabled the machines we know today. The same will happen with quantum computing.”

The future of supercomputing, which we can expect will not be harnessing the CMOS scaling we know today (at least in the post-exascale era), will be limited by cost, but not due to the devices. While quantum computers are indeed expensive, more so than traditional supercomputers (although figuring out the return on investment per unit of compute is itself a challenge), the real cost will be power consumption. Quantum computers can now run at 25 kW since they are superconducting and require little energy, no matter how big the overall cabinet they are in (the computer is really just the chip). Keeping these at near absolute zero has a power cost as well, but Ewald says that in the future, D-Wave will target the cooling process as it scales the business.

For D-Wave, growing quantum computing as a business and as an alternative architecture means first scaling its initial application successes. The new government subsidiary to target intelligence, defense, and other workloads is promising, especially because it means there will be internal resources committed to mapping optimization problems onto the devices and seeing how far those might stretch into broader applications.

For now, we are watching how governments choose their investments in future technologies. With the U.S. and Europe adding quantum computing to their post-Moore’s Law plans alongside neuromorphic computing, which might scale better in terms of manufacturability and programmability, there will be a war over architectures. D-Wave currently holds a singular position in the quantum arena, while neuromorphic vendors are cropping up here and there (with many research efforts within universities and national labs). The war will therefore also play out over which model works best: many distributed research efforts contributing to an eventual unified approach, or a monolithic company owning the foreseeable commercial future of a technology.
