Where The FPGA Hits The Server Road For Inference Acceleration

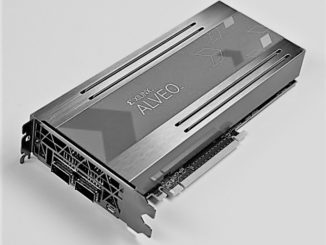

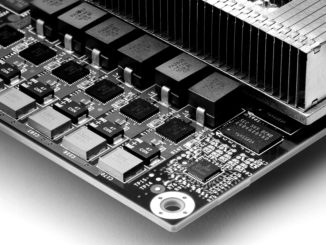

There are a growing number of ways to run machine learning inference in the datacenter, and one increasingly popular approach pairs traditional CPUs, acting as hosts, with FPGAs that do the bulk of the inference work. …