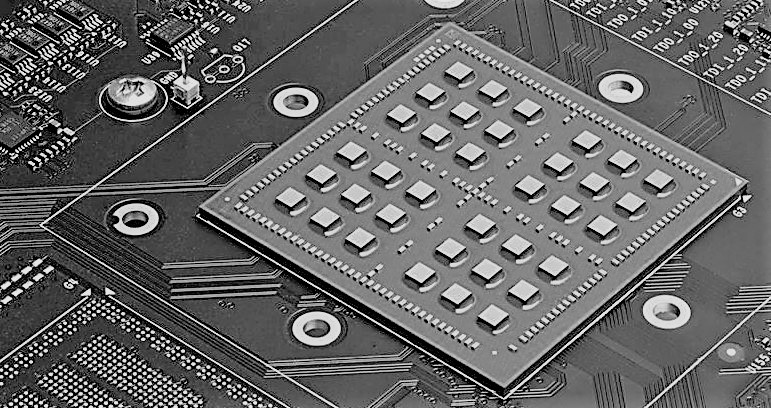

Nvidia Shows Off Tech Chops With RC18 Inference Chip

It would be convenient for everyone – chip makers and those who are running machine learning workloads – if training and inference could be done on the same device. …

Processor hardware for machine learning is in its early stages, but it is already taking different paths. …

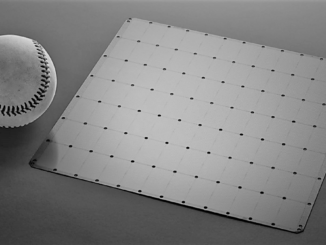

Startup Cerebras Systems has unveiled the world’s largest microprocessor, a waferscale chip custom-built for machine learning. …

Carey Kloss has been intimately involved with the rise of AI hardware over the last several years, most notably through his work building the first Nervana compute engine, which Intel acquired and is rolling into two separate products: one chip for training, another for inference. …

As artificial neural networks for natural language processing (NLP) continue to improve, it is becoming easier and easier to chat with our computers. …

Enterprises are putting a lot of time, money, and resources behind their nascent artificial intelligence efforts, banking on the fact that they can automate the way applications leverage the massive amounts of customer and operational data they are keeping. …

Microsoft is investing $1 billion in AI research company OpenAI to build a set of technologies that can deliver artificial general intelligence (AGI). …

Training deep neural networks is one of the more computationally intensive applications running in datacenters today. …

For The McLaren Group, it’s all about speed.

Born in 1963 as a Formula 1 race car company, it initially was about speed on the track. …

The alliance between high performance computing and artificial intelligence is proving to be a fruitful one for researchers looking to accelerate scientific discovery in everything from climate prediction and genomics to particle physics and drug discovery. …

All Content Copyright The Next Platform