The steady rise of AI over the past several years – and the accelerated growth that came with the introduction of generative AI since OpenAI’s launch of ChatGPT in November 2022 – has shifted Intel’s status to that of a challenger in a chip market that it long had dominated.

For sure, Intel still commands a good percentage of the consumer and enterprise CPU space, but the focus now – and into the future – is AI workloads. AI isn’t the only reason Intel doesn’t own the industry like it once did – internal design and manufacturing challenges that led to costly and embarrassing product delays didn’t help – and trends like cloud computing and the edge opened up avenues for traditional rivals and startups alike. But Nvidia more than a decade ago put all of its money on AI, using it as the North Star for its innovations going forward.

And it’s paid off and – as we noted in February – paid off handsomely, with the GPU maker poised on the verge of becoming a $100 billion company and posting Q4 2024 revenue of $22.1 billion, a year-over-year increase of 265.3 percent fueled by its combination of hardware and software. Meanwhile, Intel earlier this month saw its important foundry business get hit with a $7 billion loss, illustrating the hurdles facing CEO Pat Gelsinger as he stares at a years-long effort to get the company going in the right direction.

Far from chipping away at his trademark enthusiasm, Gelsinger during his keynote at the company’s Intel Vision 2024 event in Arizona seemed to relish the role of the underdog as he put forth a roadmap for fueling Intel’s vision of driving AI everywhere that includes embracing an open-source approach, promising silicon that will outperform what Nvidia has on the market and offering a portfolio that will address AI workloads in the datacenter and cloud, on the PC, and at the edge.

Open Architectures and Intel

The CEO also said the AI market will evolve in a way that bends it toward Intel, noting that demand will turn to open platforms and the strong support Intel is getting from major AI and IT companies and arguing that enterprises will want to stick with an architecture they’ve been familiar with for decades if it can offer AI performance and efficiency equal to or better than Nvidia.

Whether it plays out like that is unclear, but Gelsinger, as he introduced the upcoming latest generation of Xeon server chips – the Xeon 6 family – and Gaudi 3 AI accelerators and spoke about an open systems strategy, was taking his swings.

“One of the key questions that our AI customers are asking us is, ‘How do I deploy?’” he said. “And nearly all of the GenAI deployed developments today are moving to higher level environments, PyTorch frameworks and other community models from Hugging Face. Industry is quickly moving away from proprietary [Nvidia] CUDA models. Literally, a few lines of code and you’re able to be up and running with industry-standard frameworks on power-performant, efficient cloud infrastructure.”

Intel’s got a mountain to climb. Gaudi 3 is coming in the wake of Nvidia’s introduction last month of its Blackwell datacenter GPUs, including its GB200 Grace Blackwell Superchip, which combines two B200 GPUs with a single Grace CPU linked by its next-generation NVLink connectivity. To prove the advancements in Blackwell, Nvidia founder and CEO Jensen Huang said during the company’s GTC 2024 show that it takes 8,000 previous-generation Hopper GPUs and 15 megawatts of power to train a 1.8 trillion-parameter AI model; with Blackwell, only 2,000 accelerators are needed and they will consume 4 megawatts.
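To put Huang's comparison in proportion, the quoted figures can be reduced to a few ratios. The sketch below is only arithmetic on the four numbers cited above (8,000 GPUs and 15 megawatts for Hopper, 2,000 accelerators and 4 megawatts for Blackwell); the function names are illustrative, and the per-accelerator figure is a simple average of the quoted total power, not a chip specification.

```python
# Figures as quoted from the GTC 2024 keynote; everything else is derived.
hopper = {"gpus": 8000, "megawatts": 15.0}
blackwell = {"gpus": 2000, "megawatts": 4.0}


def ratios(old, new):
    """Return (accelerator-count ratio, power ratio) of old versus new."""
    return old["gpus"] / new["gpus"], old["megawatts"] / new["megawatts"]


def kw_per_accelerator(system):
    """Average quoted power per accelerator, in kilowatts."""
    return system["megawatts"] * 1000 / system["gpus"]


count_ratio, power_ratio = ratios(hopper, blackwell)
print(f"{count_ratio:.2f}x fewer accelerators, {power_ratio:.2f}x less power")
print(f"Hopper:    {kw_per_accelerator(hopper):.3f} kW per accelerator")
print(f"Blackwell: {kw_per_accelerator(blackwell):.3f} kW per accelerator")
```

On these numbers the accelerator count falls by a factor of 4 while total power falls by a factor of 3.75, so the average power attributed to each accelerator rises slightly; the claimed saving comes from needing far fewer of them.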

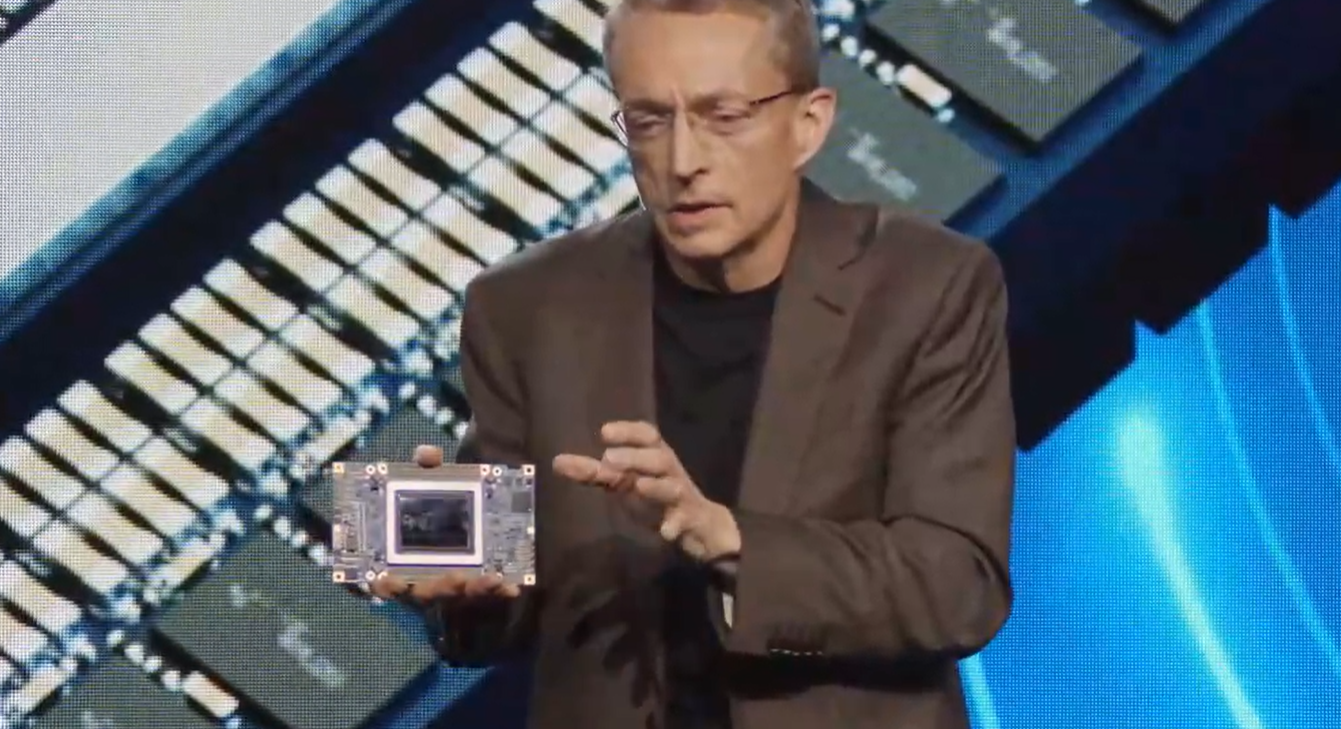

Gaudi 3: Good Performance At A Lower Cost

Gelsinger pushed back, boasting of Gaudi 3’s performance and TCO. The accelerator, he said, is 50 percent better at inference and 40 percent more power efficient than Nvidia’s current H100 – which is at times hard to find, given the skyrocketing demand for compute power for AI workloads – and will come in at a “fraction” of the cost.

In speaking with journalists and analysts after the keynote, Gelsinger said he wasn’t ready to discuss pricing for Gaudi 3, but added that “we are very confident it will be well below the number for the H100 or Blackwell. Not a little below, but a lot below, and that can be a factor in TCO.”

Xeon 6 is the new branding for the latest CPUs for datacenters, the cloud, and the edge. “Sierra Forest,” with E-cores, will launch later this quarter, while “Granite Rapids” and its performance cores will come soon after. With Sierra Forest, Intel is boasting of a 2.4X jump in performance per watt and 2.7X better rack density over second-gen Xeons.

Again, Gelsinger positioned the CPUs as equally performant and more open alternatives to Nvidia’s high-powered GPUs and CUDA framework. The new Xeons support the MXFP4 data format, which he described as a narrower format that allows for memory compression, efficient AI training, and AI inferencing “with minimal accuracy loss.” With MXFP4, Granite Rapids will be able to reduce next-token latency by up to 6.5 times compared with 4th Gen Xeons that use FP16, allowing it to run a 70-billion parameter Llama-2 model.
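The memory compression side of that claim can be illustrated with back-of-the-envelope arithmetic. The sketch below estimates weight storage only for a 70-billion parameter model at FP16 and at MXFP4. It assumes the layout in the OCP Microscaling (MX) specification, where each block of 32 four-bit elements shares one 8-bit scale; that block layout is not stated in the keynote. Activations, KV cache, and runtime overhead are ignored, and the function name is hypothetical.

```python
PARAMS = 70e9  # 70-billion parameter model, as cited above


def weight_gigabytes(params, bits_per_element, block_size=None, scale_bits=0):
    """Approximate weight storage in GB (10^9 bytes).

    If block_size is given, every block of that many elements carries one
    shared scale of scale_bits, which adds a small per-element overhead.
    """
    bits = bits_per_element
    if block_size:
        bits += scale_bits / block_size
    return params * bits / 8 / 1e9


fp16_gb = weight_gigabytes(PARAMS, 16)
# Assumption: MXFP4 = 4-bit elements, 32-element blocks, 8-bit shared scale.
mxfp4_gb = weight_gigabytes(PARAMS, 4, block_size=32, scale_bits=8)

print(f"FP16 weights:  {fp16_gb:.1f} GB")
print(f"MXFP4 weights: {mxfp4_gb:.1f} GB")
print(f"Reduction:     {fp16_gb / mxfp4_gb:.2f}x")
```

Under those assumptions the weights shrink from roughly 140 GB to about 37 GB. This says nothing directly about the 6.5X latency figure, which is Intel's own number, but it shows why a narrower format makes a model of this size more practical to hold in a CPU server's memory.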

Love The Architecture You’re With

This will be attractive for enterprises that have long used Xeons in their datacenters, are comfortable with the Intel Architecture, and tend to gravitate to open environments.

“Your data, your data stack, and almost every one of your data stacks runs on Xeon,” he said. “It’s where the databases run on and, amazingly, here we are in year 22 or so of the cloud journey and the majority – over 60 percent – of the computing is done in the cloud or some cloud embodiment, but the vast majority of data is still on-prem. Sixty-six percent of the data is unused and 90 percent of unstructured data unused.”

Enterprises can put this unused and on-prem data to work for AI by adopting the Retrieval-Augmented Generation (RAG) technique, which includes corporate information in the data that large language models draw on.

“Xeon is obviously a tremendous machine to run these RAG environments and making LLMs more effective and efficient on your data,” Gelsinger said. “Xeon is not only able to be your database frontend, but increasingly it’s able to run the LLMs as well. No new management, no new networking, no new security models, no new IT things to learn, no proprietary networking. It just works on the industry-standard Xeons you know and love.”
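For readers unfamiliar with the mechanics, the retrieval half of RAG can be sketched in a few lines. This is a toy illustration, not Intel's stack: the documents are invented, the similarity measure is plain word-count cosine similarity instead of the embedding model and vector database a real deployment would use, and no LLM is actually called. The final prompt is simply what would be handed to one.

```python
import math
import re
from collections import Counter

# Hypothetical on-prem documents standing in for corporate data.
DOCUMENTS = [
    "Q3 warranty claims rose for the industrial pump product line.",
    "The travel policy requires manager approval for international trips.",
    "Database backups run nightly and are retained for ninety days.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Prepend the retrieved context to the question for the LLM."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


prompt = build_prompt("How long are database backups retained?", DOCUMENTS)
print(prompt)
```

The point of the pattern is that the corporate data stays where it is and is looked up at query time, which is why the location of the database and the inference engine matters to the argument Gelsinger is making.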

Open architectures and hardware and software systems will be the key, according to the CEO.

“We’ve clearly seen there’s interest in how we can move these standards forward – standard management, no networking lock-in, no security issues, working with LLMs that run natively on Xeon but also with Gaudi 2 and 3 and Nvidia,” he said. “Now’s the time to build open platforms for enterprise AI. We’re working to address key requirements that include how you efficiently deploy using existing infrastructure, seamlessly integrated with hardened enterprise software stacks that you have today, a high degree of reliability, availability, security, support issues.”

According to your article Pat said “Industry is quickly moving away from proprietary [Nvidia] CUDA models.”

From what I can tell, CUDA 12.1 is the default backend for PyTorch, with ROCm 5.7, CUDA 11.8 and CPU as other options. Since PyTorch is commonly used with CUDA, is the main point that it’s nonproprietary?

Gelsinger’s narrative focus on “Open Ecosystem” is quite interesting IMHO, and if Altera had been kept in-house maybe they could have developed an open-bitstream format (say OBF, or UBF) with which to upload gateware into FPGAs from arbitrary vendors that use it as a standard (something to possibly suggest to Sandra Rivera now) … and I can’t wait to see how the Xeon 6 Granite Rapids performs in the ring (preliminary benchmarks on “suplex” would be great!)!

One thing that may have been missing from the Intel Vision Arizona event though, and that may be something for Gelsinger to clarify in the May 14-15 EMEA event in London, as hinted to by Tim on Tuesday (“provided that customers trust there will be enough architectural similarities between Gaudi 3 and […] Falcon Shores”), is how easy (or hard) one may expect the transitions from Gaudi 3 (AI/ML) to Falcon Shores, and from Ponte Vecchio (HPC) to Falcon Shores, to be. Binary compatibility would be great, but probably too much to hope for. I guess the focus on higher-level frameworks (PyTorch, Hugging Face) is currently the proposed approach for dealing with this in the AI/ML space(?).

Also, it’s amazing that training AI models (eg. 1.8 trillion-parameter, cited in the article) requires as much power as running Frontier, or Sierra (#1 and #10 on Top500)! Gotta hope that the results are worth the expense …

Gaudi 3 price?

Xeon Phi 7120 P/X = $4125

Xeon Phi 5110 D/P = $2725

Xeon Phi 3120 A/P = $1625

Stampede TACC card sample = $400 what a deal

Shanghai Jiao Tong University sample = $400 (now export restricted?)

Gaudi System on substrate if $16,147 approximately Nvidia x00 and AMD x00 gross per unit the key component cost is $3608, and if $11,516 on Nvidia accelerator ‘net’ take component cost drops to $2573. So Intel will sell them for x4 that AMD and Nvidia won’t be able to reach IF Intel does its own manufacturing.

Mike Bruzzone, Camp Marketing

Average Weighted Price of the three on 2,203,062 units of production = $2779
