Here is a simple algebraic equation that describes the relative computing oomph of two different CPU architectures over the past two decades: If an Intel X86 core is X, then an IBM Power core equals 2X.

IBM’s Power family of processors and their resulting hardware systems have never been particularly focused on price/performance or performance per watt, which is something that has been driving the X86 architecture since the “Merom” laptop cores were first pulled into the Xeon line to help Intel better compete against AMD’s “SledgeHammer” Opteron processors. Those Opterons were the first 64-bit and multicore X86 chips on the market at a time when the revamped Power4 and Power4+ processors made Big Blue a contender against Hewlett Packard and Sun Microsystems in high-end workstations and servers.

What IBM has been focused on, relentlessly, is driving its roadmap to double performance every two years, and then every three years as its base of Power customers contracted. Its opportunities to compete against the X86 architecture became more limited as the millions of companies adopting the software-defined datacenter attitude in the 2000s and 2010s opted for a common X86 substrate on which to write their code.

To be fair, the Power architecture had plenty of adherents and admirers for certain mission critical workloads in the enterprise, including the databases underpinning back office systems of record such as ERP, supply chain management, and customer relationship management software. And they were willing to pay the premium in system size, heat, electricity, and hardware cost – and all of these add up to some sort of cost – to get that extra compute performance per core. And even today, there are somewhere approaching 200,000 customers who absolutely depend on Power machinery for these workloads, and in many cases for a lot of the application servers and other systems that wrap around these systems of record.

For several decades, IBM also sold a lot of Power-based iron into the HPC simulation and modeling market, and with its Power9 systems launched in 2018 it had high hopes to push more strongly into AI training. But aside from the success of the “Summit” supercomputer at Oak Ridge National Laboratory, the “Sierra” supercomputer at Lawrence Livermore National Laboratory, and a few much smaller but similar hybrid clusters using IBM Power9 chips paired with Nvidia “Volta” V100 GPU accelerators, IBM’s HPC aspirations went out the door with the sale of its System x X86 server business to Lenovo in 2014.

So here we are in 2022, and a more insular IBM has a very different attitude as the Power10 processor and its entry and midrange systems – those more suited for distributed computing than its big iron “Denali” NUMA machine launched a year ago with Power10 – are ramping. We have talked about some of the benefits of the Power10 architecture which make it well suited for HPC and AI workloads, how data analytics at scale could be a driver of growth for Power Systems, and even how AI inference seems to be the most important kind of HPC driving the Power10 business – if you can consider AI inference a kind of HPC at all. And some have commented that we at The Next Platform want IBM to revive its HPC and AI training business more than Big Blue seems to want. It is a fair observation, but it is one grounded in a desire to see a healthy and diverse ecosystem of architectures competing in the market. We have felt the same way about Sun Sparc, HP 9000, AMD Opteron, and countless dead Arm server chips, too. And we still think more GPU and DPU and FPGA and custom ASIC accelerator vendors is better than fewer. More is always better. It provides choice, new ideas, and a hedge against the real risk that a compute engine vendor screws up.

The Algebra Of Price/Performance

The algebraic expression at the top of this story applies to how the Intel Xeon core stacks up to the IBM Power core for certain workloads, but there is another interesting rule of thumb we have observed over the decades. It wiggles around some, depending on where different system makers are in their product cycles, but it goes like this: If it costs X to buy an X86 platform of a given capacity, then it costs 2X to buy a similarly powered RISC/Unix, Itanium, or proprietary system, and it costs 4X to buy an IBM System z mainframe. This equation is one where the system is fully burdened with a reasonable configuration to do actual work, and has the correct complement of operating system, systems software, and database software as well as technical support for the system. And this rule of thumb, to be clear, is for online transaction processing systems using Oracle, IBM DB2, or Microsoft SQL Server databases with the full enterprise licenses, which have been in the market a lot longer than these new-fangled systems of engagement with their shiny AI algorithms or open source NoSQL or relational databases.

We have gotten our hands on IBM’s own competitive analysis for its entry and midrange machines using the “Cirrus” Power10 processor, and it is interesting to see how these rules of thumb have stood the test of time. We have performance metrics for the four-socket Power E1050 compared to “Cooper Lake” and “Cascade Lake” Xeon SP servers of the same performance band, and price and performance metrics for two-socket Power S1022 servers against their “Ice Lake” Xeon SP equivalents.

What this data clearly shows is that Power10 has some competitive advantages, even if for the most part it will help IBM i, AIX, and Linux customers running on Power Systems iron to justify continuing investments in those platforms. IBM will, of course, make some competitive wins, mostly in emerging markets (and in some cases as Inspur sells iron in China), and it will also win some deals for new kinds of workloads like MongoDB, EnterpriseDB, or Redis. But there will also be takeouts for Power iron as some customers move to different databases and to the X86 architecture as a result of mergers or acquisitions, which will very likely wash out these gains. The Power Systems base has been relatively steady in the past decade because of these opposing forces of gains and losses.

Let’s start with the Power10 midrange machine, since we have two different SPEC benchmarks for these machines and we do not have SPEC ratings for the Power S1022.

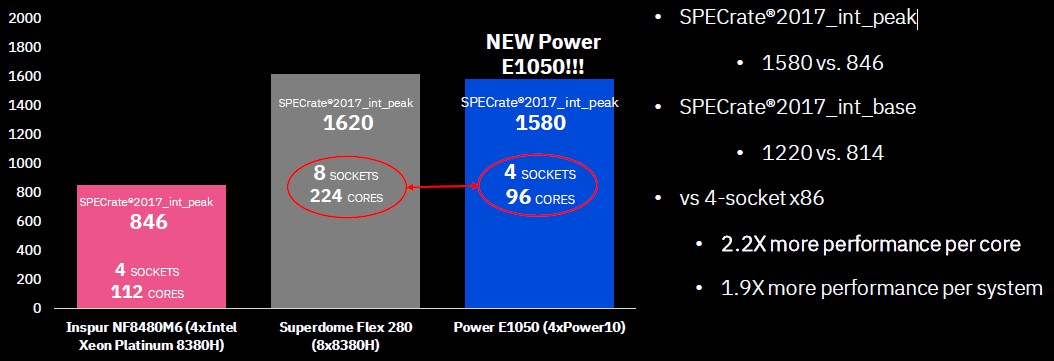

Here is how the four-socket Power E1050 stacks up against Cooper Lake Xeon SP iron from Inspur and Hewlett Packard Enterprise, according to IBM:

To be fair, Power10 was about a year later than expected, and Intel was very late – like around three years late – with Ice Lake Xeon SPs and probably planned for the impending “Sapphire Rapids” Xeon SPs. At the high end, “Cooper Lake” is a rev-rev of the “Skylake” design for machines with four or eight sockets; there is no Ice Lake four or eight socket box, although we expect a unified line again with “Sapphire Rapids” whenever that happens.

The point is, both IBM Power and Intel Xeon SP had roadmap delays caused by foundry issues – IBM with the 10 nanometer and then 7 nanometer processes from GlobalFoundries, which never made it to market with either, and Intel with its own 10 nanometer issues. So things are a bit out of whack compared to historical trends. But, in terms of raw performance, the 2X rule for Power cores is holding, as is attested by the performance ratings for the Power E1050 shown above.

The Power E1050, with its Power10 cores running at 3.15 GHz, has a quad of Power10 dual chip modules (DCMs) for a total of 96 cores, and has a SPECint_rate_2017 integer throughput rating of 1,580. The four-socket Inspur machine equipped with four Xeon SP-8380H Platinum processors running at 2.9 GHz has a total of 112 cores, and is rated at only 846 on the SPEC integer throughput test. If you do the math, the Power E1050 offers 1.9X the performance at the system level (which is due entirely to shifting to DCMs) and each core has 2.2X the performance if you divide the integer throughput by the number of cores.

Interestingly, an eight-socket HPE Superdome Flex 280 server using the same Cooper Lake processors is rated at 1,620 on the SPECint_rate_2017 test (that’s a peak rating with tuning). The eight-socket Xeon SP server has 2.5 percent more performance, but it takes 2.3X as many cores to get there. If enterprises are using software that is priced by the core – as database and datastore software often is – then the Xeon SP server is no doubt cheaper, but the software is going to be more expensive unless vendors give the X86 architecture a 50 percent price break.
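The system-level and per-core ratios quoted above can be reproduced with a few lines of arithmetic. What follows is a minimal sketch using only the ratings and core counts cited in the text; the `Result` class and `compare` helper are illustrative names, and the 224-core count for the eight-socket machine is an assumption (eight 28-core sockets), since the text only gives the 2.3X core ratio.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    name: str
    score: float  # SPECint_rate_2017 throughput rating cited in the text
    cores: int    # total cores in the system

    @property
    def per_core(self) -> float:
        """Throughput rating divided by the number of cores."""
        return self.score / self.cores


def compare(a: Result, b: Result) -> tuple[float, float]:
    """Return (system-level ratio, per-core ratio) of a relative to b."""
    return a.score / b.score, a.per_core / b.per_core


power_e1050 = Result("IBM Power E1050", 1580, 96)
inspur_4s = Result("Inspur four-socket Xeon SP-8380H", 846, 112)
# Assumption: 8 sockets x 28 cores = 224 cores (not stated directly in the text).
hpe_8s = Result("HPE Superdome Flex 280", 1620, 224)

system_ratio, core_ratio = compare(power_e1050, inspur_4s)
print(f"Power E1050 vs four-socket Xeon SP: "
      f"{system_ratio:.1f}X system, {core_ratio:.1f}X per core")

hpe_gain_pct = (hpe_8s.score / power_e1050.score - 1) * 100
hpe_core_ratio = hpe_8s.cores / power_e1050.cores
print(f"Eight-socket Xeon SP vs Power E1050: "
      f"{hpe_gain_pct:.1f} percent more throughput with "
      f"{hpe_core_ratio:.1f}X the cores")
```

The same helper can be pointed at any of the other benchmark pairs in this story, provided both the rating and the core count are known.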

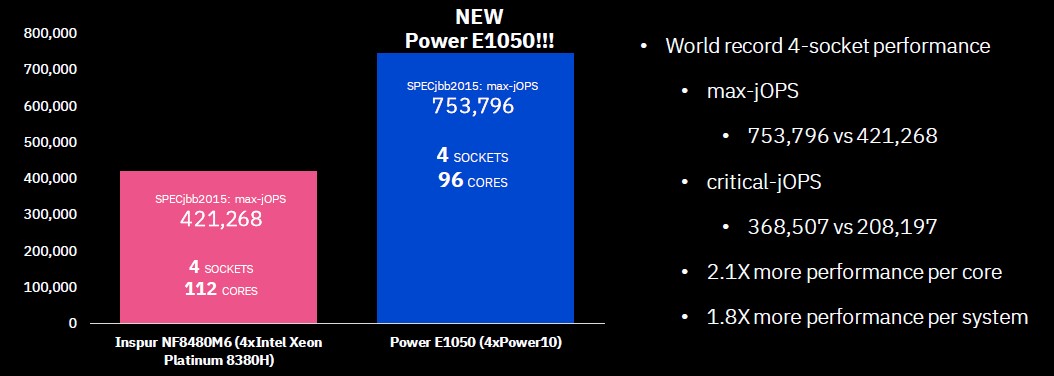

The Power E1050 stacks up similarly on the SPECjbb stock trading benchmark that is based roughly on the TPC-E benchmark. At the maximum throughput, the IBM Cirrus system offers 2.1X the performance per core and 1.8X more performance per system than a four-socket Intel Cooper Lake machine.

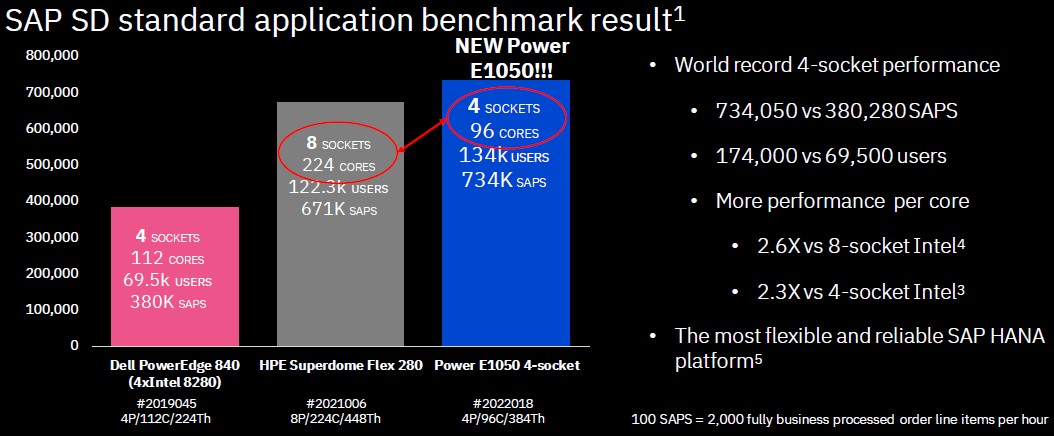

On the SAP Sales and Distribution (SD) benchmark test, which has been used for two decades to gauge relative transaction processing performance of enterprise systems, the performance gaps are a little bit bigger. Take a look:

This time, IBM pulled out benchmark tests from Dell on its PowerEdge R840, which was equipped with four “Cascade Lake” Xeon SP-8280 processors running at 2.7 GHz, and from HPE on the Superdome Flex 280 using eight “Cooper Lake” Xeon SP-8380H processors running at 2.9 GHz. The Dell machine was running Linux and the SAP ASE database and the HPE machine was running Windows Server 2016 and SQL Server 2012. These software releases are a little old, but those tests were done by the vendors, not by IBM.

In any event, on the SAP SD test, the Power E1050 had 1.9X more system throughput and 2.3X more per core performance than the Xeon SP machine. (Obviously, an Ice Lake system would have closed some of that gap, and so will a Sapphire Rapids NUMA machine). But on a big eight-way machine, even with core improvements and clock speed boosts, the IBM machine is delivering 2.6X the performance per core, and 1.9X more system throughput on the SAP test.

We cannot yet do price/performance analysis on the Power E1050 versus these other machines because pricing information on these systems is a bit scarce.

But there is pricing information available on two-socket servers, and so IBM did the performance and price/performance analysis on a number of different workloads. (We do not have SPEC ratings for these machines, however.)

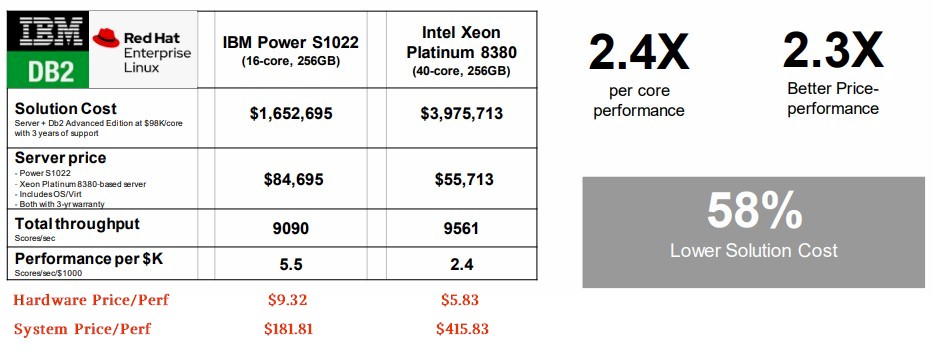

This first test is not for strict OLTP running on a relational database, but rather is measuring the inference performance of a Python application that interfaces with a DB2 database running transactions. Have a gander:

IBM did not calculate the price/performance for the hardware and the complete system, and so we have done this in red at the bottom of the rest of the charts in this story. We do not think in terms of work per $1,000, but dollars per unit of work, and IBM knows this and flipped it around.

IBM did not calculate the price/performance for the hardware alone, and for obvious reasons: IBM Power machines are more expensive than analogous X86 machines. In this case, the Ice Lake Xeon SP hardware shown is 34 percent cheaper for roughly similar performance. That sounds bad until you add the cost of DB2 Advanced Edition, which costs a whopping $96,000 per core with three years of support. The Xeon SP machine has 40 cores compared to 16 cores for the IBM Power10 machine, so the software license bill skyrockets and when it is all done, the Xeon SP machine costs 2.3X more to do 5.2 percent more work.
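To see why the license bill dominates, here is a rough sketch of the software side of that comparison alone. It uses only the per-core price and core counts quoted above; the `license_bill` function name is illustrative, and hardware prices are deliberately left out because the text does not state them.

```python
# Per-core DB2 Advanced Edition price quoted in the text,
# including three years of support.
DB2_PRICE_PER_CORE = 96_000


def license_bill(cores: int, price_per_core: int = DB2_PRICE_PER_CORE) -> int:
    """Total license cost when software is priced strictly per core."""
    return cores * price_per_core


xeon_bill = license_bill(40)   # two-socket Ice Lake Xeon SP machine
power_bill = license_bill(16)  # Power10 machine with 16 cores

software_ratio = xeon_bill / power_bill

print(f"Xeon SP license bill:  ${xeon_bill:,}")
print(f"Power10 license bill:  ${power_bill:,}")
print(f"Software-only ratio:   {software_ratio:.1f}X")
```

On software alone the gap is 2.5X; folding in the cheaper Xeon SP hardware is what brings the fully burdened figure down toward the 2.3X cited above.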

That’s new.

Because software makers want to be consistent between on premises and cloud infrastructure, they have settled on per-core pricing for a lot of their systems software, and the odds are that they will not change from this any time soon. Oracle offers per-core software license scaling factors by CPU architecture, and in fact X86 processors have a license cost scaling factor of 0.5, which would wipe out IBM’s per core performance advantage, and that is no accident on the part of IBM. Or Oracle, which chopped license costs in half on X86 iron and its own Sparc iron and left full list price in effect on Itanium and Power iron. So that is why you don’t see IBM talking about Power10 machines running the Oracle database.
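The effect of a scaling factor is easy to illustrate. The sketch below assumes the 2X per-core rule of thumb from the top of this story, a normalized list price of 1.0 per core, and a 16-core Power machine; all three are hypothetical placeholders rather than actual vendor pricing, and `license_cost` is an illustrative helper, not any vendor's formula.

```python
def license_cost(cores: int, list_price_per_core: float,
                 core_factor: float = 1.0) -> float:
    """License cost when the core count is multiplied by an architecture factor."""
    return cores * core_factor * list_price_per_core


LIST_PRICE = 1.0          # hypothetical normalized list price per core
POWER_CORES = 16          # hypothetical Power configuration
PER_CORE_ADVANTAGE = 2.0  # the 2X rule of thumb for Power versus X86 cores

# Cores an X86 machine would need to match the Power machine's throughput.
x86_cores = int(POWER_CORES * PER_CORE_ADVANTAGE)

power_cost = license_cost(POWER_CORES, LIST_PRICE, core_factor=1.0)
x86_full_price = license_cost(x86_cores, LIST_PRICE, core_factor=1.0)
x86_half_factor = license_cost(x86_cores, LIST_PRICE, core_factor=0.5)

print(f"Power, factor 1.0: {power_cost}")
print(f"X86,   factor 1.0: {x86_full_price}")
print(f"X86,   factor 0.5: {x86_half_factor}")
```

With a factor of 0.5, the X86 machine needs twice the cores but pays the same license bill, which is the sense in which the scaling factor cancels a 2X per-core advantage.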

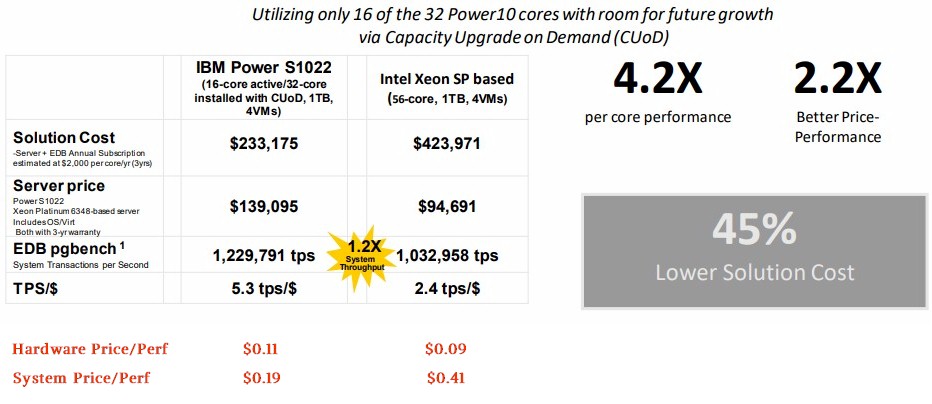

Here is how the Power S1022 stacks up against X86 iron running the pgbench test on the open source EnterpriseDB Advanced Server Postgres relational database:

On this test, IBM is pitting a 32 core Power S1022 machine with only 16 cores activated against an X86 system with a pair of Ice Lake Xeon SP-6348 Gold processors. (The chart says Platinum, but it is Gold.) Both machines have 1 TB of memory, are running Red Hat Enterprise Linux, and have four virtual machines set up on the systems. The IBM machine does 19 percent more work, but the Intel machine costs 32 percent less and when the math is done, the Intel hardware is 19 percent cheaper per unit of work than the IBM hardware. But fully burdened with software and support, the Intel system costs 2.16X as much as the IBM system because of software licensing by core.
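The hardware-only figure follows from the two percentages given above. Here is a small sketch of the math, assuming costs and throughput are normalized so that the IBM hardware cost and the Intel throughput are both 1.0; the variable names are illustrative.

```python
# Normalized inputs taken from the percentages in the text.
ibm_cost = 1.00
intel_cost = 0.68   # Intel hardware costs 32 percent less
intel_work = 1.00
ibm_work = 1.19     # IBM machine does 19 percent more work

# Dollars per unit of work: lower is better.
ibm_cost_per_work = ibm_cost / ibm_work
intel_cost_per_work = intel_cost / intel_work

relative = intel_cost_per_work / ibm_cost_per_work
intel_savings_pct = (1 - relative) * 100

print(f"IBM cost per unit of work:   {ibm_cost_per_work:.3f}")
print(f"Intel cost per unit of work: {intel_cost_per_work:.3f}")
print(f"Intel hardware is {intel_savings_pct:.0f} percent cheaper per unit of work")
```

The 32 percent price gap shrinks to about 19 percent once the extra work done by the IBM machine is taken into account, and that is before any per-core software is added.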

In case you haven’t figured it out, IBM is betting customers will spend a little bit more on hardware to save a lot on software. And if this starts working, the software makers will be under tremendous pressure to pull an Oracle and offer scaling factors to cut the cost of software licenses on X86 iron. Or to move to per-socket based pricing to level the playing field.

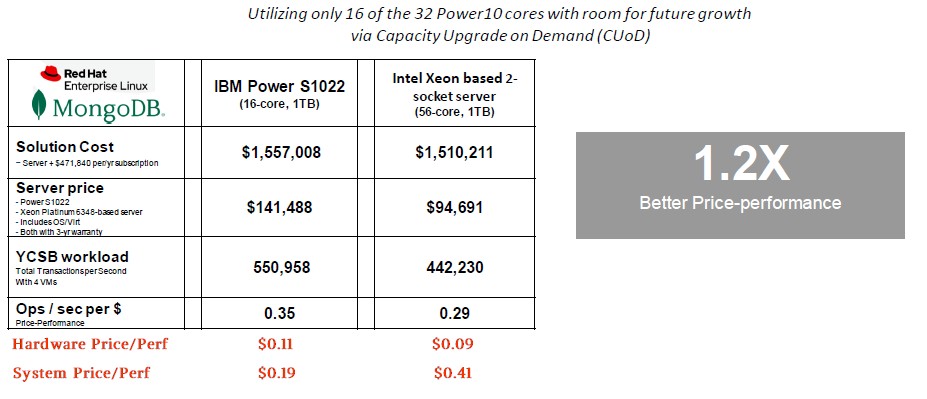

The story is similar for the Yahoo Cloud Serving Benchmark (YCSB) running on Red Hat Enterprise Linux against the MongoDB NoSQL document data store.

In this case, thanks to the eight threads per core on the Power10 chip, the 2.95 GHz Power10 core is delivering 4.36X more performance than the 2.6 GHz Ice Lake Xeon SP core, but overall throughput for the respective two-socket systems narrows to only a 20 percent advantage for IBM because the Intel setup has 56 cores compared to IBM’s 16 cores.

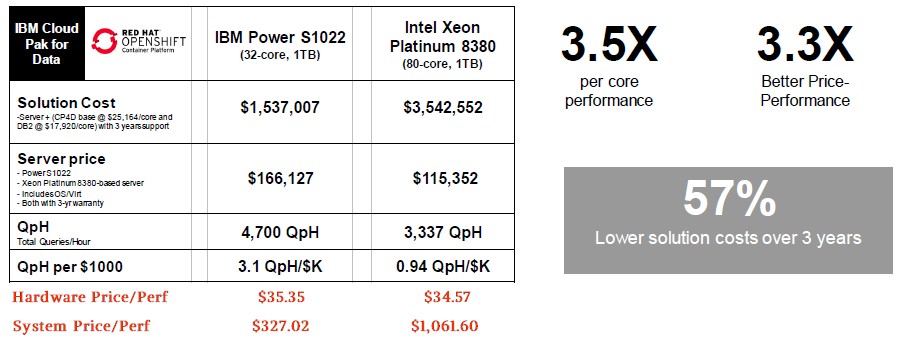

We do not think that comparisons are odious here at The Next Platform, so we are going to keep rolling with two more comparisons. This one shows some Watson AI software running against a DB2 data warehouse:

The performance and price/performance gaps are at 3.5X and 3.3X, respectively, higher than the historical trends for OLTP workloads.

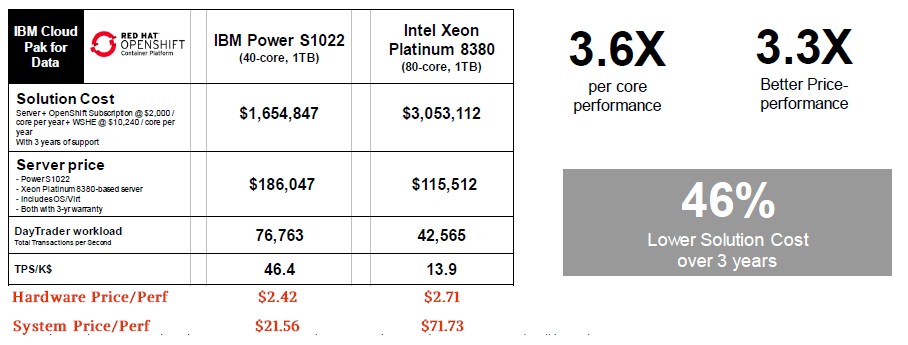

And finally, here is a benchmark that shows the DayTrader Java benchmark (presumably also a variant of the TPC-E test) running in containers atop Red Hat Open Shift and Enterprise Linux and IBM’s own WebSphere Liberty application server.

The performance and price/performance gaps are also well above 3X here.

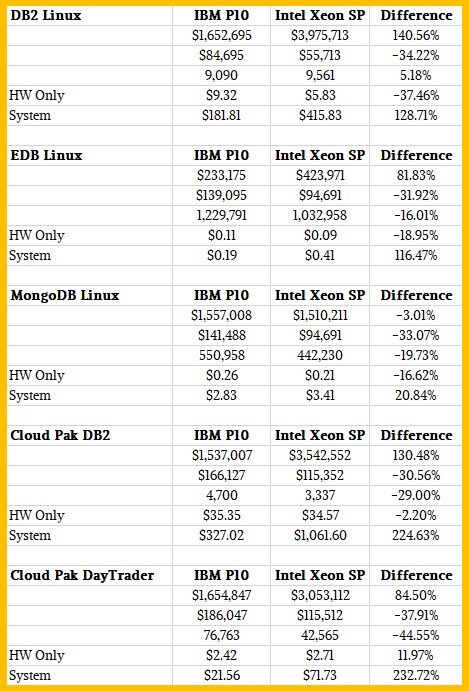

Just for a summary, here is a table of all of these results on the Power S1022 machines:

Why IBM is not banging the drum about this is beyond us. . . . Probably because someone would bring up the performance of AMD Epyc processors. But the per-core pricing is even worse for AMD for systems software.

Fun fact: 8380H isn’t ICL-SP. It’s not even closely related to ICL-SP. It’s Cooper Lake – the strange final rev of Skylake-SP with a small clock increase and the new bfloat16 ops. ICL is only capable of 2s scalability, so Cooper is what’s left until SPR-SP ships.

You may want to update the many places where you call 8380H Ice Lake…

Yup. I knew that. I just forgot how screwed up it all got for a moment. Thanks for the catch on the four-way and eight-way comparisons.

> In case you haven’t figured it out, IBM is betting customers will spend a little bit more on hardware to save a lot on hardware.

I think you mean to say that IBM is betting customers will spend more on hardware to save on software…

Poor Intel. They really do have it coming from all sides – from AMD in their own x86 world, from Apple’s ARM, particularly on low power, and from IBM Power, as seen here. Competition is good, though – maybe a desperate Intel with an overmilked cash cow will surprise us.

Yes. Indeed. Freaking dyslexia….

Depends how you look at it. If you spend a little more on hardware today it can save you long term on purchasing hardware in the future given you can run it at high utilisation and it scales so well. On top of that it will also help in terms of sustainability costs (power and cooling).

“The more you spend, the more you save” – rando semi CEO

AMD had “F” series of frequency optimised processors that will likely compete at the lower end. Please evaluate those.

Indeed, I’d like to see that also. Especially once Genoa is out.

With the phrase hybrid cloud all over IBM’s Power10 release, I’d like to know where can a person rent Power10 virtual machines that range in size from one core with a gigabyte of RAM to hundreds of cores with terabytes of memory inception.

Who performed these tests?

The SPEC tests and the SAP SD tests are done by each server maker and audited by SPEC and SAP, respectively.

IBM did the other tests on its own iron and it looks like it ran the MongoDB, EDB, and Cloud Pak tests itself on the X86 iron shown as well.

It’s called Power Virtual Server, and eventually Power10 will get there. I don’t think memory inception is running yet or Power10 iron installed, but I can ask.

I note this also doesn’t include any software costs for an x86 software hypervisor (as most shops virtualise these days) vs the mostly in hardware PowerVM hypervisor on the IBM gear (usually included free for the first 3 years with a hardware purchase). How much further would that skew the software difference with the extra x86 cores? I’ve also read figures in the past like a moderately loaded VMware host expends around 30% of its resources running the hypervisor vs about 2% with PowerVM (equating to more workload capability per physical Power host). Have you seen any update on those hypervisor resource figures recently?

Actually, some of the comparisons do.