It is beginning to look like Dell Technologies and Hewlett Packard Enterprise, the world’s two biggest original equipment manufacturers, are finally going to start benefitting from the generative AI wave, mainly because they are finally getting enough allocations of GPUs from Nvidia and AMD that they can start addressing the needs of customers who don’t happen to be among the hyperscalers and largest cloud builders.

These hyperscalers and cloud builders generally do not buy systems from Dell and HPE, but a number of them are clearly buying systems from Supermicro, which has a market capitalization of more than $63 billion as we go to press, which is going to be added to the S&P 500 index in two weeks, and which has seen its revenues skyrocket as its hyperscaler and cloud customers – who importantly get their own GPU allocations directly from Nvidia and AMD – seek low-cost, indigenous manufacturing for their AI systems.

Dell and HPE, on the other hand, have been suffering through a recession in spending on general purpose servers for the past year and have not gotten nearly enough GPU allocations to fill in the revenue holes. But both companies, in their own way, have made the best of it and are ready for a rebound in server spending this year as well as getting more of a fair share of AI server money. Both companies are positioned well to peddle both CPU and GPU systems to normal customers – no, hyperscalers and cloud builders and the national HPC centers are definitely not normal – as GenAI goes mainstream and service providers, enterprises, governments, and academic institutions turn to their regular OEM suppliers for machines to support these new workloads and also start upgrading their general purpose server fleets.

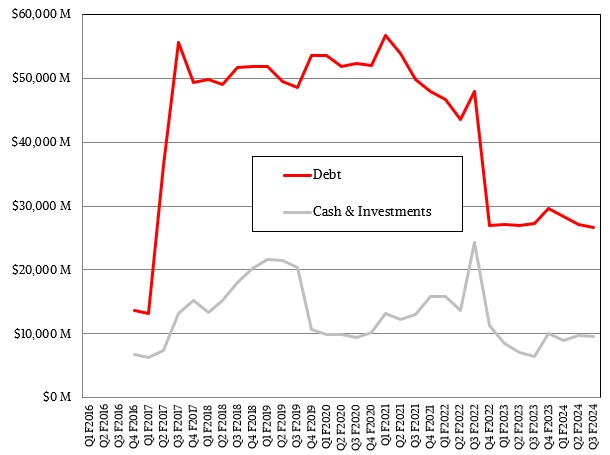

Let’s start by analyzing the financials of Dell, which just put out its numbers and which is the larger of the two OEMs – a goal that Dell has had for decades. And if Dell and HPE are not careful, Supermicro will be consistently larger, albeit against a much smaller customer base that makes the latter more of an original design manufacturer, or ODM, than an OEM.

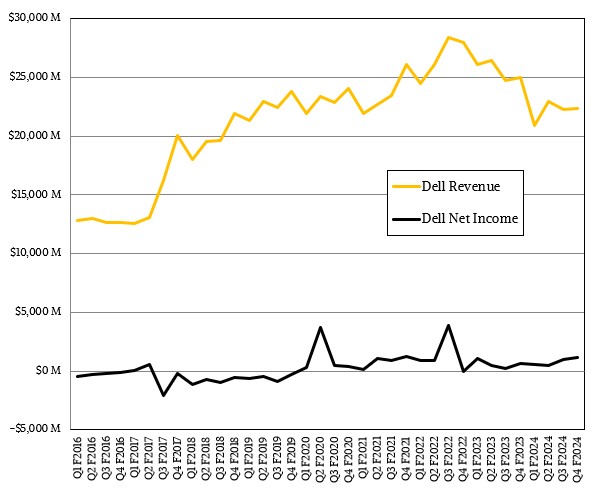

In its fourth quarter of fiscal 2024 ended on February 2, Dell posted sales of $22.32 billion, down 10.9 percent year on year and essentially flat sequentially. Net income, however, was up by 1.9X to $1.16 billion, which is not just an indication of the fatter margins it can get from AI servers but also that it has maniacal control of its costs. It hasn’t hurt that the PC business, which Dell still participates in (but HPE does not after its spinoff of HP Inc quite a while ago), is slightly more profitable even though revenues were off 12.3 percent to $11.72 billion in the period.

Dell’s product revenues in Q4 F2024 were off 15.2 percent to $16.15 billion, but its services revenues rose by 2.8 percent to $6.17 billion.

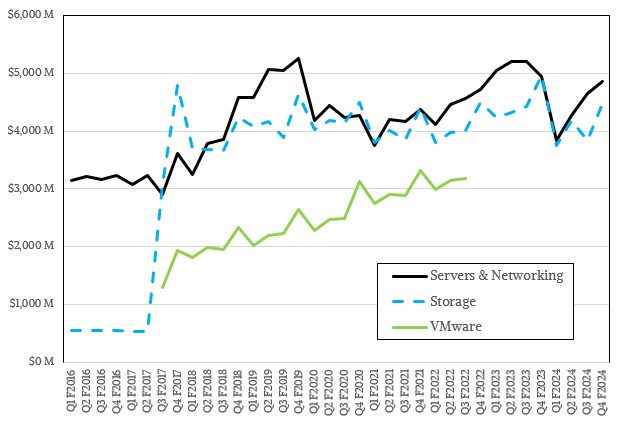

Dell’s Infrastructure Solutions Group, which includes the EMC storage business but which has not included the VMware server, storage, and networking virtualization software business for more than a year, had a 5.8 percent decline to $9.33 billion, but was actually up sequentially by 9.8 percent, which is good news. Operating income was down 7.5 percent year on year to $1.43 billion, but was up 33.6 percent sequentially from Q3 F2024 ended in November. The Servers & Networking division saw a 1.7 percent decline to $4.86 billion, but a 4.3 percent improvement sequentially, while the Storage division had a 9.9 percent decline to $4.48 billion, which was up 16.4 percent sequentially.

That sequential growth in these areas is important. And having operating income grow at more than 3X the rate of revenue is always a good thing – unless you are cutting into the bone of your employee pool, which is a short term gain when companies do it but often hurts in the long run.
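For readers who want to check that "more than 3X" claim, here is a minimal sketch using only the ISG figures quoted above. The helper name `prior_value` is ours, and because the reported percentages are rounded, the implied Q3 F2024 values are approximations rather than Dell's reported numbers.

```python
def prior_value(current, growth_pct):
    """Back out the prior-period value implied by a current value and a growth rate."""
    return current / (1.0 + growth_pct / 100.0)

# ISG figures for Q4 F2024 as quoted in the article (billions of dollars, percent).
isg_revenue_q4 = 9.33
isg_revenue_seq_growth = 9.8
isg_op_income_q4 = 1.43
isg_op_income_seq_growth = 33.6

# How many times faster operating income grew than revenue, quarter on quarter.
growth_ratio = isg_op_income_seq_growth / isg_revenue_seq_growth

# Implied Q3 F2024 values (approximate, since the percentages are rounded).
implied_q3_revenue = prior_value(isg_revenue_q4, isg_revenue_seq_growth)
implied_q3_op_income = prior_value(isg_op_income_q4, isg_op_income_seq_growth)

# Operating margin in each quarter, as a percent of revenue.
margin_q4 = isg_op_income_q4 / isg_revenue_q4 * 100.0
margin_q3 = implied_q3_op_income / implied_q3_revenue * 100.0

print(f"Operating income grew {growth_ratio:.2f}X as fast as revenue")
print(f"Implied operating margin: Q3 {margin_q3:.1f}%, Q4 {margin_q4:.1f}%")
```

The margin comparison is the more useful number here: it shows that the sequential gain is not just more revenue but a larger share of each revenue dollar reaching the operating line.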

Jeff Clarke, vice chairman and chief operating officer at Dell, said on a call with Wall Street analysts that Dell “saw positive signs in the business” as the company exited its fiscal 2024 year, but added that “enterprise and large customers remained cautious with their spend.”

On the AI front, Clarke gave Wall Street some tidbits – the kind we can use to start building a model. He said that orders for AI servers were up 40 percent sequentially from Q3 F2024 and that the AI server backlog nearly doubled to $2.9 billion. With the allocations that Dell could get for GPU and other kinds of accelerators, it was able to ship $800 million in AI servers in fiscal Q4. Dell shipped around $525 million in AI servers in Q3, so that is 52.4 percent growth sequentially, and in our rudimentary model, we think that Dell’s AI server sales were up around 10X year on year and its backlog has grown by 22X since this time last year.
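The arithmetic behind those AI server figures can be sketched as follows. This is a rough check and not our full model: the function names are ours, and the year-ago backlog is only what the 22X multiple implies, not a number Dell disclosed.

```python
def sequential_growth_pct(current, prior):
    """Percent change from the prior period to the current period."""
    return (current / prior - 1.0) * 100.0

def implied_base(current, multiple):
    """Starting value implied by a current value and a growth multiple."""
    return current / multiple

# AI server shipments quoted in the article (millions of dollars).
ai_shipments_q4 = 800.0
ai_shipments_q3 = 525.0

# AI server backlog exiting Q4 F2024 (millions of dollars) and our estimated
# 22X growth in that backlog over the past year.
ai_backlog_q4 = 2900.0
backlog_multiple_yoy = 22.0

shipment_growth = sequential_growth_pct(ai_shipments_q4, ai_shipments_q3)
implied_year_ago_backlog = implied_base(ai_backlog_q4, backlog_multiple_yoy)

print(f"AI server shipments grew {shipment_growth:.1f} percent sequentially")
print(f"Implied year-ago backlog: ${implied_year_ago_backlog:.0f} million")
```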

It wasn’t the lack of interest that held Dell back, but the lack of GPUs. Other OEMs were not getting much in the way of allocations, either, so it was like Nvidia was driving a pace car, keeping the roaring beasts behind it all in line as it went for the easy money with the hyperscalers and cloud builders. And, we have to add, giving them what might be an insurmountable advantage when it comes to GenAI.

All that Dell can do, given all of this, is wait for supplies to improve and do the business it can with the allocations it gets, or its customers get.

“Demand continues to outpace GPU supply, though we are seeing H100 lead times improving,” Clarke explained on the call. “We are also seeing strong interest in orders for AI-optimized servers equipped with the next generation of AI GPUs, including the H200 and the MI300X. Most customers are still in the early stages of their AI journey, and they are very interested in what we are doing at Dell. We are helping them get started and work through their use cases, data preparation, training, and infrastructure requirements. They appreciate our perspective, our collaborative approach, and the capabilities we can provide to help them create holistic AI solutions, including our end-to-end portfolio, engagement with our engineering teams, consulting services, and financing options. Progress in this space won’t always be linear, but we are excited about the opportunity ahead.”

Looking ahead, Dell’s new chief financial officer, Yvonne McGill, said that Dell expects Q1 F2025 revenue to be between $21 billion and $22 billion, with the midpoint of $21.5 billion representing 3 percent growth compared to Q1 F2024, adding that the ISG datacenter group would see growth “in the mid-to-high teens.” For the full F2025 year, Dell anticipates revenues between $91 billion and $95 billion, with the midpoint of $93 billion representing 5 percent growth. So it gets better as the year goes on – but not hugely so. McGill said Dell expects ISG to grow “in the mid teens,” driven by AI and “a return to growth in traditional servers and storage.”
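The guidance math is simple enough to sketch. The snippet below takes the ranges and growth rates quoted above and backs out the year-ago revenue they imply. The function names are ours, and because the growth rates are rounded to whole percentages, the implied bases are approximations, not Dell's reported figures.

```python
def midpoint(low, high):
    """Midpoint of a guidance range."""
    return (low + high) / 2.0

def implied_base(current, growth_pct):
    """Prior-period value implied by a current value and a percent growth rate."""
    return current / (1.0 + growth_pct / 100.0)

# Guidance ranges quoted in the article (billions of dollars).
q1_f2025_mid = midpoint(21.0, 22.0)
f2025_mid = midpoint(91.0, 95.0)

# Year-ago revenue implied by the stated growth at each midpoint.
implied_q1_f2024 = implied_base(q1_f2025_mid, 3.0)
implied_f2024 = implied_base(f2025_mid, 5.0)

print(f"Q1 F2025 midpoint ${q1_f2025_mid:.1f}B implies about ${implied_q1_f2024:.2f}B in Q1 F2024")
print(f"F2025 midpoint ${f2025_mid:.1f}B implies about ${implied_f2024:.2f}B in F2024")
```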

Clarke added that Dell expected to sell more AI servers in Q1 F2025 than it did in Q4 F2024, and added that the backlog portfolio included machines with Nvidia H100, H800, and H200 GPUs as well as AMD MI300X – and that current sales do, as well.

And demand for traditional servers grew year over year in fiscal Q4, too, representing the third consecutive quarter of sequential growth for these non-AI servers overall.

“We look into the pipeline of the coming year and it continues to improve,” said Clarke. “This is the longest digestion period that I can recollect in this industry. Everything is setting up for an investment in traditional servers to run traditional server workloads, which are very different than these accelerated AI workloads. So our line of sight into what our customers need gives us confidence that we believe that traditional servers are recovering. The question will be what rate will they recover, but we’re optimistic. A long digestion period, those workloads still very important running mission-critical workloads in many of our largest customers all the way down to small and medium businesses. The performance that we exit or the momentum that we exit in the year with I think gives us the ability to look going forward. Our own internal modeling says that our traditional server market is a modest growth on a year-over-year basis.”

Clarke also dropped this line: “We are excited about what’s happening with the H200 and its performance improvement. We’re excited about what happens at the B100 and the B200, and we think that’s where there’s actually another opportunity to distinguish engineering confidence. Our characterization in the thermal side, you really don’t need direct liquid cooling to get to the energy density of 1,000 watts per GPU. That happens next year with the B200.”

FYI: We had heard the B100 would be 1,200 watts, and strongly suspect that the B200 will be whatever the B100 is.

There was some back and forth with the Wall Street analysts that suggested AI servers ain’t all that profitable, too. Which is something that we have seen with other kinds of high performance computing historically, especially at IBM, SGI, Cray, and HPE as well as Dell in the traditional HPC simulation and modeling market. It is also true of Supermicro right now, as we have discussed. These are hard markets for anyone except Nvidia to make money in – just as was the case with Intel during the general purpose computing boom a decade ago.

Which brings us to HPE.

It sure looks to us like HPE is tired of people like us pointing out that its HPC & AI division can bring in the revenues but, as its predecessors in the HPC arena have so aptly demonstrated in their decades of history, can’t bring much in the way of profits. And so it has done away with the HPC & AI division that largely represents the former Cray business and has rejiggered itself so that its Storage division is now part of something called the Hybrid Cloud division – even if that storage is not sold under GreenLake utility-style pricing – and the systems, including HPC and AI machinery, are now in something called the Server division if they are sold outright. It also looks like the cloudy parts of the Intelligent Edge division, which is largely the Aruba wireless campus network stuff, are moved into Hybrid Cloud, too.

Yup, this obfuscates HPE’s financials rather than illuminating them, and it is absolutely intentional as such. There is no reason why HPE could not have created a Hybrid Cloud breakout to show how GreenLake and other subscription-priced things were doing and left the divisions alone.

So, let me remind you one more time just to make a point. In the prior fiscal year ending in October 2023, the HPC & AI division had sales of $3.91 billion, up 22.6 percent and largely due to the acceptance of the “Frontier” supercomputer at Oak Ridge National Laboratory, which cost $500 million, in December 2022. Most of that money appears to have gone to AMD for CPUs and GPUs, but some went to HPE for memory, storage, Slingshot interconnect, and enclosures. (Call it $100 million or so.) Pay all the bills for the HPC & AI division, and you get an operating income of $47 million, or 1.2 percent of revenues. In the fiscal year before that, HPC & AI had $3.19 billion in sales, but only brought $11 million, or three-tenths of a point, to the operating line.
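To make the point concrete, here is a small sketch of the margin arithmetic using only the HPC & AI figures cited above. The function and variable names are ours, and the inputs are the rounded numbers quoted in the text.

```python
def operating_margin_pct(operating_income, revenue):
    """Operating income as a percent of revenue."""
    return operating_income / revenue * 100.0

def growth_pct(current, prior):
    """Percent change from the prior period to the current period."""
    return (current / prior - 1.0) * 100.0

# HPC & AI division figures quoted in the article (millions of dollars).
revenue_latest, op_income_latest = 3910.0, 47.0   # fiscal year ended October 2023
revenue_earlier, op_income_earlier = 3190.0, 11.0  # the fiscal year before that

margin_latest = operating_margin_pct(op_income_latest, revenue_latest)
margin_earlier = operating_margin_pct(op_income_earlier, revenue_earlier)
revenue_growth = growth_pct(revenue_latest, revenue_earlier)

print(f"Revenue growth: {revenue_growth:.1f} percent")
print(f"Operating margin: {margin_earlier:.1f} percent, then {margin_latest:.1f} percent")
```

Even with revenue up by more than a fifth, the division kept only a little over a penny of operating income per dollar of sales.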

We have railed for years that HPE, Dell, and others who have left the HPC field – do you really think SGI and Cray wanted to be eaten by HPE? Do you really think IBM wanted to leave the field entirely? – need to get a fair price for the work they do for the national labs. This is not bad management, this is greedy silicon suppliers and tough customers who act like they are research and development rather than extremely demanding users who are used to an unnatural and unhealthy economic relationship.

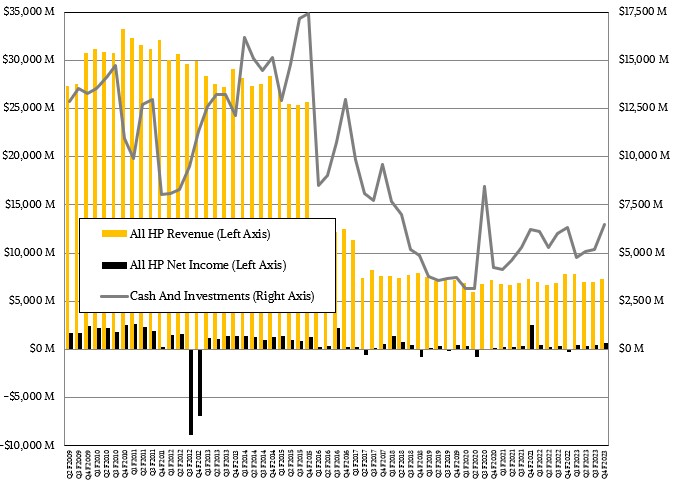

In HPE’s first quarter ending in January, which is part of its fiscal 2024 year that will end this October, HPE did not do as well as Dell did. Revenues were off 13.5 percent to $6.76 billion, and that was down 8.1 percent sequentially, and net income fell by 22.8 percent to $387 million, which was down 39.7 percent sequentially. The company exited the quarter with just a tad bit over $6 billion in cash, which is good.

The new Server division, which includes machinery sold normally and not rented out under a GreenLake utility pricing scheme, had sales of $3.35 billion, which was off 22.6 percent year on year and down 6.2 percent sequentially. Earnings before taxes for the Server division were $383 million, down 43.5 percent year on year but up 6.4 percent sequentially.

The Hybrid Cloud division, which includes all storage sales plus any HPE hardware or software sold as a subscription (including some Aruba stuff formerly reported in the Intelligent Edge division), fell by 9.8 percent to $1.25 billion and was off 6.9 percent sequentially. Earnings for the Hybrid Cloud division fell by 41.3 percent to $47 million and were down 7.8 percent sequentially. We begin to wonder why HPE rejiggered its financials, but it is clearly looking ahead to when these businesses will be doing better and it wants to be able to show the cloud aspects of its business.

In any event, HPE’s annualized run rate for cloudy stuff was $1.4 billion, and HPE expects to see it grow 35 percent to 45 percent in the coming quarters.

In a sense, all of HPE’s business is either in the datacenter or at the edge or in the campus, which is just a fuzzy bit of distributed datacenter after all, so nearly all of HPE can be considered “datacenter” as has been the case for years since the spinout of the PC, printer, and services businesses. But what we like to track is the core systems business.

Based on our estimates, this is what HPE’s core systems business looks like in terms of revenues and earnings before taxes:

We reckon that this core systems business at HPE generated $5.54 billion in revenues in fiscal Q1, down 15.3 percent, and that earnings before taxes was $492 million, down 46.5 percent. But revenues and profits were up a smidgen sequentially, so that is a good thing – provided our model is accurate, of course.

As you can see, this business has let off a little steam in fiscal 2018, but has been more or less stable, averaged over the four quarters of a fiscal year, since then. The profitability is a little harder to come by, too, in terms of percentage of shrink.

The question is will sales of AI servers – and HPC servers, as if there was an HPC machine not doing AI these days – help the profitability even if they eventually help the revenues?

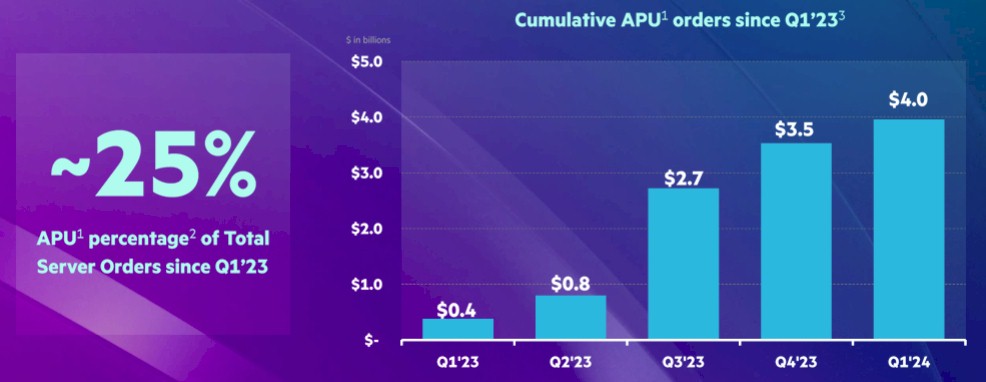

According to HPE, machines with “accelerated processing units,” or APUs, in them have comprised about a quarter of server orders since Q1 F2023, inclusive. And this chart shows the cumulative orders since that time:

APU system orders made a pretty big jump in Q3 F2023 – the quarter that ended in July – with $1.9 billion in orders for that quarter alone. In Q4 F2023, HPE did $800 million in orders, and in Q1 F2024 it did $500 million, using its own numbers. On the call with Wall Street analysts, Marie Myers, HPE’s new chief financial officer, said that APU system orders increased sequentially and were well over $400 million and that it had an APU system backlog of over $3 billion, with the pipeline of opportunity being “well above that.” Apparently one of the hyperscalers – who was not named – did a big GreenLake deal on an AI cluster. The AI server backlog was under $1 billion a year ago, by the way.

Myers said that revenue from the traditional server business was up sequentially but faced a tough compare to the January quarter a year ago. That was also when the Frontier supercomputer was accepted, as we said. Good times. Myers added that HPE expected sales to be up sequentially and nominally to somewhere between $6.6 billion and $7 billion in Q2 F2024, but cautioned that the lumpiness in Cray supercomputer deals and in big GreenLake deals could make things move around a little. Antonio Neri, HPE’s chief executive officer, said on the call that a couple of big deals slopped into future quarters as customers prepare their datacenters to take on AI servers.

And if you ain’t ready to deploy, then Nvidia doesn’t allocate.

Neri also said that HPE’s lead times on GPUs were in the range of 20 weeks or more. Which we say is a whole lot better than the 52 weeks or more that prevailed for the second half of calendar 2022 and most of calendar 2023.
