It was only six months ago that we were talking about how system maker Supermicro was breaking through a $10 billion annual revenue run rate and setting its sights on a $20 billion target. Well, maybe $25 billion or even $30 billion would be better numbers given the unprecedented demand for AI servers and the difficulties of managing the supply chain and manufacturing process for these complex machines.

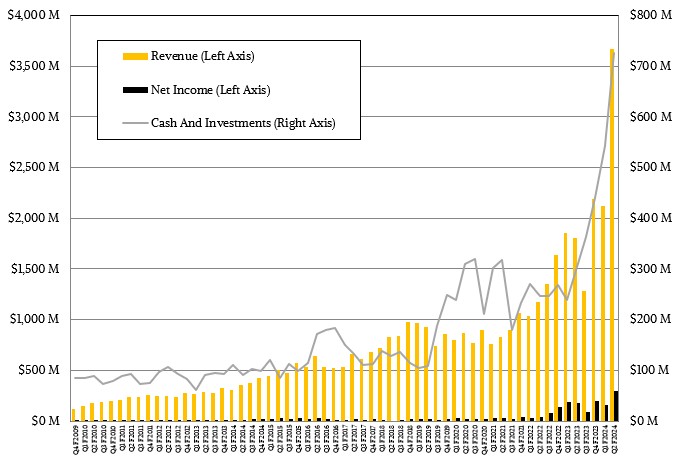

In the second quarter of fiscal 2024 ended in December, Supermicro’s revenues more than doubled to $3.67 billion, and rose sequentially by 72.9 percent from a pretty impressive $2.12 billion mark. Operating income rose by 72.8 percent to $371 million in the period, and net income rose by 68 percent to $296 million. These rates of change are all large, but the fact remains that net income as a percent of revenue was only about 8.1 percent ($296 million on $3.67 billion), compared to an average of 9.1 percent in the prior seven quarters when the generative AI boom was really gathering some steam.

The problem that Supermicro faces as it grows faster than anything else in the IT sector other than Nvidia’s datacenter business – a tsunami wave curling in the sky 50 stories above the ocean surface which Supermicro itself is riding with grace – is that it has to build out manufacturing capacity at a very quick pace to keep up. So much so that Supermicro recently floated $600 million in stock to get some money in the bank, giving it $726 million in the coffers as December came to a close and a buffer to help buy the inventory it needs to make the next batch of server racks.

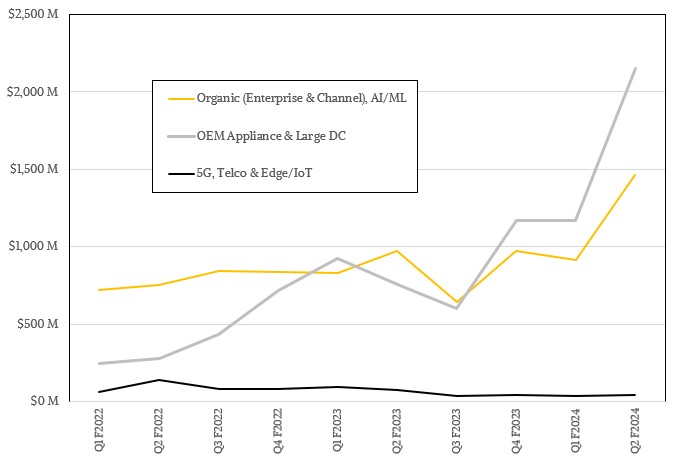

And now that Nvidia H100 GPU supplies are improving, AMD has entered the fold with very credible MI300X alternatives, and even Intel is getting into the act with its Gaudi2 devices, supply constraints are not tempering demand as they did a year ago. And thus if Supermicro wants to gain new customers and become the go-to maker of AI server iron for those operating at scale, it has to make deals with cut-throat prices, ramp up facilities as fast as it can, and treat customers well so they will come back and buy even more gear that will have higher margins because the ramp work will be done.

In theory. There is always a chance that demand will dry up, or just keep accelerating for an even longer period.

“Overall, I feel very confident that this AI boom will continue for another many quarters, if not many years,” Charles Liang, co-founder and chief executive officer of Supermicro, explained to Wall Street analysts on a call going over the numbers. “And together with the related inferencing and other computing ecosystem requirements, demand can last for even many decades to come. We may call this an AI revolution.”

Many certainly are.

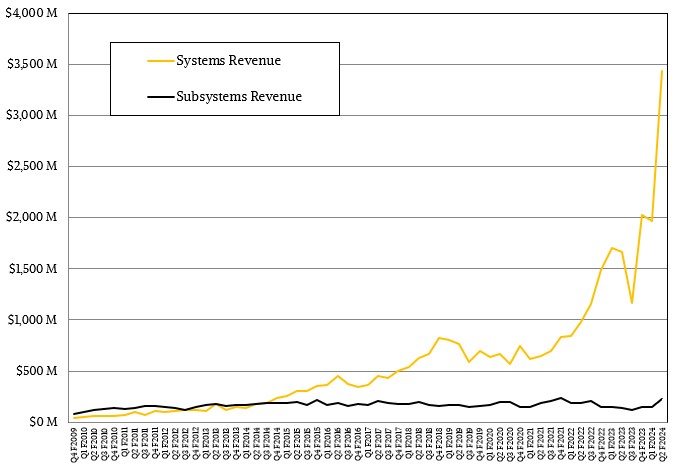

Long gone are the days when Supermicro’s business was mostly motherboards and adapter cards for servers, but the experience of building components has taught the company to be a flexible yet purposeful builder of systems. And now, with customers needing speed as much as they need raw compute, storage, and networking capacity, the rackscale systems approach that Supermicro is now peddling to the hyperscalers, cloud builders, and other service providers as well as large enterprises who want to emulate them is becoming a key differentiator. And Supermicro’s ability to scale up that rackscale manufacturing capability is therefore a gating factor in its ability to grow, which is why you see Supermicro sacrificing some short-term profits for long-term relationship building.

We don’t have a lot of data on the rackscale part of Supermicro’s business, but we do know that back at this time in 2022, it had the capacity to build around 2,000 racks per month. We also know that with the expansion it has done in its factories in San Jose and Taipei and the addition of its factory in Malaysia, the company currently has the ability to build 4,000 racks per month. By the June quarter, which is the end of the company’s fiscal year, Supermicro will be up to a run rate of 5,000 racks per month, and of that 1,500 racks will be for direct liquid cooled systems – something that is increasingly necessary as the GPU does a lot of the calculating on AI and HPC workloads.

Over the past four quarters, Supermicro has hinted about how its AI and rackscale system sales have been doing. It is hard to believe that all AI servers are not being bought at rackscale, all preconfigured and ready to crunch data, but Supermicro talks about these as if they are different and then combines them together. What we can tell you is that this part of the business had $372 million in sales in Q3 F2023, representing 29 percent of the business and it has been averaging above 50 percent for the past three quarters after that. In the trailing twelve months, this AI/Rackscale category has accounted for $4.5 billion in sales, 48.6 percent of the company’s total sales of $9.25 billion.

This ratio will only get larger, we think, and that is because Supermicro does a whole deeper level of configuration, including software installation, in its rackscale offerings, which the original design manufacturers (ODMs) that have been popularly used by hyperscalers and cloud builders do not. That extra hand holding creates a virtuous cycle, and as long as Supermicro’s own GPU allocations as well as those of its hyperscaler and cloud builder customers hold, Supermicro will keep winning massive infrastructure deals.

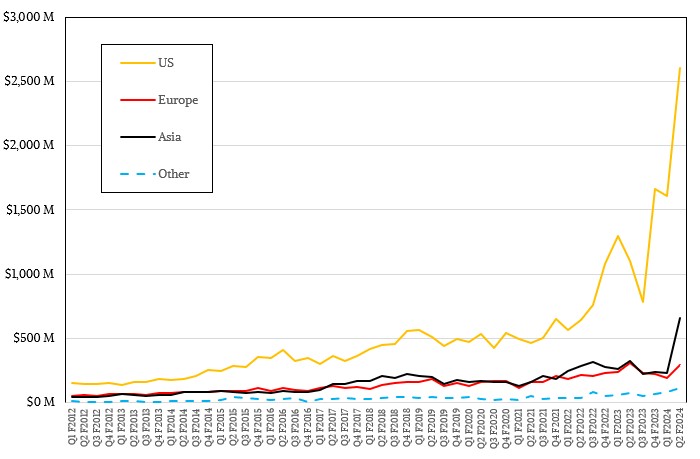

This will create a virtuous cycle between Supermicro and Nvidia, AMD, and Intel that is driven as much by the customers as it is driven by the manufacturers bestowing that benefit upon a server maker. It doesn’t hurt that Supermicro builds machinery for Intel’s own internal use, mostly for its massive EDA simulators, and if the rumors are true, is also building the DGX machinery for Nvidia’s own use and for its direct sales to customers. Supermicro has been masterful at keeping good relations with the big three compute engine makers as well as with networking providers, so there is no reason to believe that Supermicro can’t take away ODM business from Quanta, Inventec, Wiwynn, and others as well as take a bite out of the ODM-like businesses from Lenovo and Inspur. In fact, that’s precisely what we think is happening, and a big part of this is that Supermicro is an American company building gear for American customers.

In the fiscal second quarter, Supermicro’s business in the United States was up by a factor of 2.4X to $2.6 billion, and its European business actually shrank by 4.4 percent to $293 million. (There really are not hyperscalers in Europe, not like there are in China and the United States.) Supermicro’s business in Asia more than doubled to $660 million, an indication, we think, that Supermicro is getting traction in Japan and China, the two biggest markets for systems. That uptick in revenues from Asia in the chart above didn’t come out of nowhere. It came out of someone’s hide.

What has Wall Street more excited than anything is the guidance that Liang & Co offered during the call. Supermicro expects sales of between $3.7 billion and $4.1 billion in the fiscal third quarter, and revenues of between $14.3 billion and $14.7 billion for the full fiscal year ending in June. At the midpoints of those ranges, that implies revenue of around $4.82 billion in Q4 F2024.
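
For anyone who wants to check that implied fourth quarter figure, here is a minimal sketch of the arithmetic in Python. It uses only the rounded numbers quoted in this story (first quarter revenue of $2.12 billion, second quarter revenue of $3.67 billion, and the two guidance ranges); the variable and function names are ours and purely illustrative, and the assumption that the midpoints are the right basis for the estimate is also ours.

```python
# Revenue figures in billions of US dollars, as quoted in the article.
Q1_REVENUE = 2.12            # Q1 F2024, the prior-quarter comparison
Q2_REVENUE = 3.67            # Q2 F2024, the quarter just reported
Q3_GUIDANCE = (3.7, 4.1)     # guided range for Q3 F2024
FY_GUIDANCE = (14.3, 14.7)   # guided range for full fiscal 2024


def midpoint(bounds):
    """Return the middle of a (low, high) guidance range."""
    low, high = bounds
    return (low + high) / 2


def implied_q4(full_year, q3):
    """Full-year revenue minus the three earlier quarters."""
    return full_year - (Q1_REVENUE + Q2_REVENUE + q3)


# Midpoint case: both guidance ranges land in the middle.
q4_mid = implied_q4(midpoint(FY_GUIDANCE), midpoint(Q3_GUIDANCE))

# Extremes: a weak year after a strong Q3, and a strong year after a weak Q3.
q4_low = implied_q4(FY_GUIDANCE[0], Q3_GUIDANCE[1])
q4_high = implied_q4(FY_GUIDANCE[1], Q3_GUIDANCE[0])

print(f"Implied Q4 F2024 revenue at midpoints: ${q4_mid:.2f} billion")
print(f"Implied Q4 F2024 range: ${q4_low:.2f} billion to ${q4_high:.2f} billion")
```

With these rounded inputs the midpoint case works out to about $4.81 billion, which matches the figure above to within rounding of the quarterly revenues. The spread between the extremes is wide because it stacks the uncertainty in both guidance ranges on top of each other.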

We don’t have any question that Supermicro will be able to grow its business to $25 billion and beyond. The rack is the new server and the datacenter is the new rack. As infrastructure gets more complex and as companies like Supermicro – it will have emulators – do more of the configuration and integration heavy lifting, more and more companies will buy rackscale rather than buy a bunch of servers and a bunch of switches and put them together themselves. The question we do have is whether Supermicro will be able to extract higher margins out of that extra work it does, or if that will just be table stakes.

Great article, thanks. So if I understand correctly, the typical top OEM server vendors like HP, Dell, etc. are not playing in the servers, racks, and cooling for NVDA and AMD GPUs? I’ve been out of tech for 5 years and what a change! Are APC and Schneider Electric or Emerson/Eaton playing at all? Thanks

Great question! With the advent of the rack platform and the server explosion, Supermicro is beyond a doubt ready and waiting at the station for the AI Train (which includes the companies you mentioned on board!) to arrive. We have engineered and innovated a formidable moat of leading technology configured exactly to fill the AI shaped slot these companies need to fill. The results garnered by use of our systems along with our support will engender ever more demand going forward in this vast new and expanding paradigm created and led by NVDA!

Net income for Dec 2023 as % should be 7.4 not 3.4. Must be a typo.