Containers are still the hot new technology in the datacenter to some, but many of the pieces and parts that eventually found their way into today’s container platforms have long been used by enterprises and developers, thanks to Docker.

The company, which was founded a decade ago, pulled those technologies together and created software containers, changing the way applications are programmed and managed.

“Cgroups, namespaces, copy-on-write file systems,” Docker chief executive officer Scott Johnston said during a video conference this week with journalists to recognize Docker’s launch in 2013, its growth, and its continuing innovation. “One needed a Ph.D. in Linux kernel engineering in order to benefit from these very powerful technologies. But Docker founder Solomon Hykes and his team, they had a vision to simplify the complexity, and Docker’s powerful abstraction ignited the imaginations of millions as a result. Historically, our industry has had to trade one off for the other – productivity off for safety or safety off for productivity. We at Docker set out to solve that so devs don’t need to give up one in exchange for the other.”

That’s Hykes speaking in the feature image at the top of this story, by the way.

Enterprise adoption of containers, and later microservices, was swift, and the technology is now common in developer offices everywhere. Johnston cited the more than 18 million developers using Docker Desktop and the 450-plus partners in Docker Hub, as well as the benefits of containers: 13 times more frequent application releases with the Docker tools, a 65 percent reduction in developer onboarding time, and a 62 percent drop in security vulnerabilities.

It’s also been a boon for Docker itself, with more than $130 million in annual recurring revenue and more than 70,000 commercial customers (including 79 of the Fortune 100). More than 7 million developers use Docker every day, Johnston said.

Ten years after mainstreaming containers, Docker finds itself squeezed in the increasingly crowded market it helped create. Red Hat OpenShift is now a key competitor, with others like SUSE with Rancher and Apache Mesos having some footing. Major cloud service providers like Amazon Web Services, Microsoft Azure, Google Cloud, and Oracle Cloud have their own container services, and they loom even larger. And in the middle of all this is Kubernetes, born at Google nine years ago and now the de facto container orchestration platform, which has pushed aside others like Docker Swarm and Mesosphere and become the foundation for offerings like VMware’s Tanzu.

In 2019, Docker took another tack, moving out of the production side of things and instead putting its focus on developer tools. Mirantis, an open-source cloud software company, bought Docker’s enterprise container platform business that year.

Docker has worked to adapt to the times, with Johnston noting that the company pivoted in 2019 to focus on two areas: rising demand for new app development, which is driving a need for more developers (IDC is predicting a need for 750 million new apps over the next few years, the CEO said), and the growing use of open-source components, which is raising supply chain security issues such as the ongoing Log4j vulnerability threat and the high-profile hack of SolarWinds in 2020.

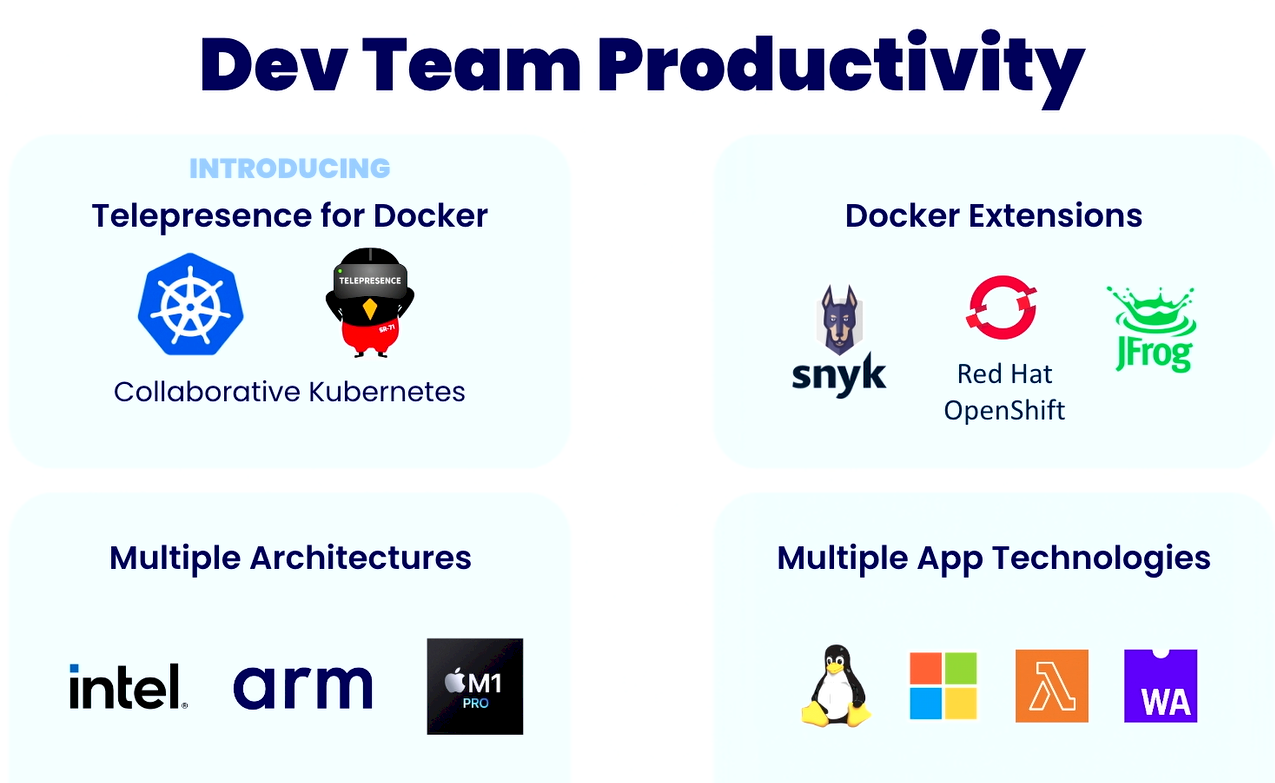

Docker started with Linux containers but has since evolved to include Windows (beginning in 2016), serverless environments via AWS Lambda, and, as of October, WebAssembly (Wasm).

The latest tools coming into the Docker portfolio include Telepresence for Docker, created through a partnership with developer infrastructure vendor Ambassador Labs and designed to make app development and testing on Kubernetes easier, accelerate delivery, and drive collaboration. It also aims to reduce maintenance and cloud costs.

“This provides a shared cluster development environment for developers to work together as a team and collaborate on building applications for deployment to Kubernetes applications, to Kubernetes stacks,” Johnston said. “Docker extensions enable developers to use their favorite tools in an integrated, secure manner inside their Docker Desktop environment.”

Looking forward, the company is going to go deeper into AI and machine learning, building on features available in Docker Desktop, and embrace the growing hybrid collaborative development environment, which essentially means using laptops – with their low local latency – more tightly with the cloud and its on-demand and collaborative properties.

That was an issue raised during a discussion led by Johnston that included Hykes and other developers and focused on developer needs in the coming years. Ilan Rabinovitch, senior vice president of product and community at monitoring service provider Datadog, said such hybrid environments are promising but complex. What’s needed is the “next step of tooling.”

“Developers don’t want to think about it, but how do we make this not be a thing that they care about?” Rabinovitch said. “There’s been a lot of promising technologies over the years talking about how they’re going to solve it. For a while it was service meshes and all these other tools which I haven’t seen take off just yet. But there are other places we’ve talked about, remote development environments, shared development environments, so those might be a way as well. I don’t know that we found the silver bullet or if there will be one silver bullet, but we have the next level of complexity that we have to try to solve for.”

Security and automation also will be key. Hykes, who in 2018 founded Dagger, a programmable CI/CD engine for containers, said the adoption of containers in the application and infrastructure stacks happened quickly in parallel. However, there’s a middle section of scripts and pipelines that is the most difficult to change and where automation is crucial.

“It’s just a mess still, and it’s not because of containers that it’s a mess, but now the bar is so much higher,” he said. “The developer expectations are so much higher and with the infrastructure, the operators’ expectations are so much higher. So in the middle, here are four shell scripts and a Makefile and a YAML, and it hasn’t really kept up. Now it’s the bottleneck, so actually there’s a huge opportunity that Dagger is pursuing but others should pursue also. I don’t think it’s a single-company problem. It’s an industry-wide problem to solve, to actually set a higher bar in the delivery of automation in the supply chain and just how it’s automated using containers, using all the tech that is now available.”

Addressing AI and machine learning will be key for Docker and other companies as the technologies become more mainstream through such vendors as Microsoft, Google, and Amazon. Craig McLuckie, co-founder of Kubernetes and now an entrepreneur-in-residence at Accel, said enterprises will quickly learn that, because of how AI tools ingest corporate data, those tools are not meant for multi-tenant environments, and that they will be forced to build their own single-tenant environments for AI, which will be expensive and difficult. Tools like Docker’s should lower some of the barriers to entry “to democratize access to AI both from an operational expertise perspective, but also just from a resource consumption perspective.”

Rabinovitch likened it to the evolution of infrastructure, which has become more powerful, less expensive, and easier to access since the days of big iron, when scaling meant organizations paying millions of dollars to IBM, Sun, HPE, or Dell.

“Each step of the way, we’re making it more approachable for folks, and then some new level becomes harder,” he said. “In this AI case, it’s going to be, ‘Do you have the dataset for you to train that? Do you have the right data for it? Do you have the compute power to make it happen?’ How can we make that more accessible? I don’t know what the next AI thing is going to be. I don’t know if it’s we’ve automated away software development or social media marketing or anything in between. I don’t know what it is, but it’s going to enable something cool.”

Containers, known at first as jails, came out on FreeBSD in 1999, I believe, long before Docker. Docker helped to popularize them.

Your title is rather misleading. Docker came out 13 years after FreeBSD jails were already performing containerization and five full years after Linux containers, which it was originally based on. Docker certainly helped popularize containers, but giving it credit for helping to invent them is a stretch.