In just a few short years, Kubernetes has become the de facto orchestration platform for managing software containers, besting a lineup that included such contenders as Docker Swarm and Mesosphere. Since spinning out of Google eight years ago, Kubernetes has developed at a rapid pace, with new releases coming out as often as four times a year.

Kubernetes also has spawned a range of platforms from the likes of Red Hat (with OpenShift, a key driver of IBM’s $34 billion acquisition of Red Hat in 2019), VMware (Tanzu), and SUSE (Rancher), and it’s now being offered as a service via top public cloud companies Amazon Web Services, Microsoft Azure, and Google Cloud, as well as others.

That said, there are continuing signs of maturation and stabilization in Kubernetes, which is under the auspices of the Cloud Native Computing Foundation (CNCF). For one, the torrid pace of the release cycle is beginning to ease, from four releases a year to about three. And now, there is talk among those within the Kubernetes project about possibly rolling out longer-term support releases, according to Brian Gracely, senior director of product strategy for OpenShift at Red Hat.

“There’s a certain amount of stability that’s there in the core Kubernetes project,” Gracely tells The Next Platform. “From a purely Kubernetes perspective, if you look at the community, if you look at where the community is – the velocity of the community – there’d be a lot of people who would probably tell you for the most part, the thing that is really Kubernetes – the API, the scheduling system, its framework for being able to plug in storages and networks and so forth – is fairly complete. I don’t ever want to say it’s 100 percent complete.”

However, there is furious work going on by vendors themselves in their Kubernetes platforms to meet the growing demands of enterprises that are increasingly putting their Kubernetes efforts into production. As we wrote about last year, Pure Storage is seeing Kubernetes becoming important in managing data and infrastructure. Another key demand from enterprises is to bring Kubernetes to the rapidly expanding edge environment and to create smaller versions of those platforms to give organizations a consistent IT experience whether in the datacenter, in branch offices and other remote places, or at the edge.

There is K3s, a lightweight Kubernetes distribution that is designed to be easier to install, aimed at the edge and optimized for the low-power Arm chip architecture. Red Hat also has introduced MicroShift, a version of OpenShift that is designed to work with edge-optimized versions of Linux, including Red Hat Enterprise Linux (RHEL) for Edge and Fedora IoT.

“We’ve seen people take Kubernetes, which was fundamentally designed for datacenters and clouds, and we’ve seen a miniaturization trend,” Gracely says. “It’s the same technology, but what can we miniaturize? What can we reduce, whether that’s the control plane or whatever? We’ve seen people realizing that, in Kubernetes, you have this very structured, somewhat large control plane. … Then we see things like Cluster API [a project around declarative APIs and tooling for managing multiple Kubernetes clusters] and we’ve seen various ways of saying, ‘I want to have a lighter weight control plane’ and those end up becoming implementation details.”

He notes that it’s “a variation on the way that you use Kubernetes. Companies don’t necessarily want a big cluster anymore that’s multi-tenant. Maybe they want lighter weight things that might be one application or one group or whatever. We’re still seeing these kind of variants on Kubernetes to fit the place and the workload.”

The trend of adapting a fairly mature Kubernetes to more specific workloads and needs is partly the result of the way Kubernetes’ developers moved ahead with the project. Rather than pulling in myriad elements into the project – such as a service mesh or the operating system – the Kubernetes developers allowed those to become ancillary efforts outside core Kubernetes development work. They are tied to Kubernetes but are not part of Kubernetes.

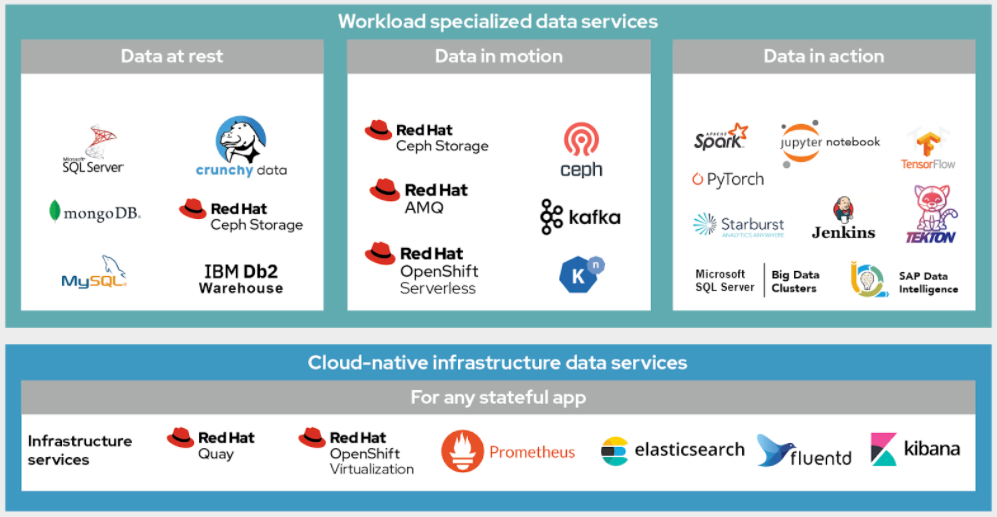

“Those have exploded,” Gracely says. “We’ve seen Service Mesh, Istio and those things really mature. We’ve seen projects like KubeVirt bring virtualization to it. We’re now seeing probably a dozen vendors support that. We’re seeing Knative [for serverless, cloud-native applications] mature. We’ve seen a whole ecosystem of security stuff like OPA and other stuff come around. Kubernetes is … sort of the new kernel of what you do in the cloud and then these other things will become the stuff that lives on top of the kernel.”

Kubernetes has become pretty stable, with everyone consuming the same APIs on premises or in the cloud, and what’s coming out of the adjacent work becomes user space and applications sitting on top. There is some comfort in that, assuring users that the work on Kubernetes is not going to spin off on an unpredictable tangent. It’s going to continue to adapt in a steady way.

He uses the OpenStack project as a comparison. The open and free cloud computing platform that can run in public and private clouds launched in 2010 as a joint project between Rackspace and Anso Labs, a NASA contractor and the creator of the Python-based Nova cloud computing fabric controller. It started off as an infrastructure-as-a-service (IaaS) platform, but eventually started adding other components, such as platform-as-a-service (PaaS) and artificial intelligence (AI) and machine learning capabilities, all of which were going to be part of OpenStack.

“What that ended up doing – and probably caused them to have some challenges – was, people didn’t want all of those things, every use case,” Gracely says. “They didn’t require all those things. But yet, the project sort of said, ‘We’ve got to have all these things.’ One of the things that Kubernetes did – I think they probably learned some foresight from Linux and OpenStack – was to build a really good foundation, build a sort of kernel for that project and then recognize that you’re not going to know back in 2015 or 2014 what all the projects are going to look like in 2022 and be OK with that. That’s a hard thing sometimes. Some may argue for adding more to the project.”

It was that thinking that led Red Hat around 2017 to adopt a modular approach as it continued to develop OpenShift. That was when the company saw the adjacent work being done around Kubernetes – from service mesh to serverless – and engineers realized that offering those capabilities on OpenShift would mean moving to a modular architecture. The approach also gives Red Hat greater flexibility to add components to OpenShift or remove them, depending on enterprise needs.

The company this week added OpenShift Data Foundation – essentially software-defined storage for containers – to its OpenShift Platform Plus offering, a comprehensive multicloud Kubernetes stack that includes not only the container platform but also cluster security and cluster management for Kubernetes and Red Hat Quay, a scalable container registry. Including the storage technology makes OpenShift Platform Plus a more complete offering.

“But at the same time, that modularity also allows us to now say we can break that down when we want to do single-node OpenShift on the edge,” Gracely says. “We can take away a lot of things that you don’t need at the edge. We think we have an architecture that’s flexible enough that if we want to add some new component, there is just going to be a good, known way to plug it in and it’s not going to blow up the system. It’s not monolithic, but also it’s going to give our customers the flexibility to choose what they need.”
