GPU computing platform maker Nvidia announced its financial results for its fiscal fourth quarter ended in January, which showed the same digestion of already acquired capacity by the hyperscalers and cloud builders and the same hesitation to spend by enterprises that other compute engine makers for datacenter computing are also seeing.

That’s not news, and it is not really a big surprise. And don’t get us wrong: The Q4 and fiscal 2023 financial results are interesting. But what is really interesting – and what Nvidia said very little about on the call – was something called Nvidia DGX Cloud, which the company thinks will position it to help democratize generative AI capabilities for all organizations around the world, not just the hyperscale and cloud elite.

Nvidia is not big enough – or stupid enough – to try to build its own cloud infrastructure with its own money, and co-founder and chief executive officer Jensen Huang no doubt saw the OpenStack collective, VMware, Dell, and Hewlett Packard Enterprise all try to go up against Amazon Web Services and try to elbow their way in beside Microsoft Azure and Google Cloud in the early part of the last decade.

VMware, Dell, HPE, and Rackspace/OpenStack all failed, and for the most part the latter two were trying to catch up to AWS. (Azure has carved out its place, thanks to its Windows Server platform, and Google Cloud is very much a work in progress but only a fool counts Google out.)

At a certain point, no company in tech can actually build its own cloud except those with absolutely huge advertising businesses (like Google and Facebook), huge software businesses (like Microsoft), or huge retail operations with first mover advantage (like Amazon and Apple). Facebook and Apple build big infrastructure but choose not to rent out capacity on it. The others see the wisdom in having millions of other organizations give them money to rent capacity on infrastructure that is – what? – 100X to 10,000X larger than they would ever need to run their own businesses. And those customers pay a premium for the pleasure of doing so.

But like we said, Nvidia, even with over $13 billion in cash in the bank, and even with enough market capitalization that it could entertain paying more than $40 billion to acquire Arm Holdings a few years back, is not rich enough to play the big cloud builder game. And at the same time, if it wants to capitalize on the enormous hype cycle that ChatGPT has created in the past three months – the Bing search engine is the new Pets.com sock puppet – then it had better find some sort of middle ground.

And so, it seems, Nvidia is going to do the next best thing to building its own cloud. And that is to literally put its own DGX systems inside of the big clouds so customers can use the same exact iron on a cloud that they could install in their own datacenters. The same CPUs and GPUs, the same NVSwitch interconnect in the chassis, the same NVSwitch fabric connecting up to 256 GPUs into a single memory space, the same 400 Gb/sec Quantum-2 InfiniBand switches and ConnectX-7 network interfaces, the same BlueField-2 DPUs for isolation and virtualization, and the same Linux and AI Enterprise software stacks, all for rent just like the other cloudy infrastructure.
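To put the “up to 256 GPUs” figure in perspective, here is a back-of-the-envelope sizing in Python. It assumes, beyond anything Nvidia has said about DGX Cloud, that each DGX chassis holds 8 GPUs (as the DGX H100 does) and that each GPU is an H100 with 80 GB of HBM. The `fabric_size` helper is our own and purely illustrative.

```python
# Rough sizing of an NVSwitch fabric spanning up to 256 GPUs.
# Assumptions (not from Nvidia's DGX Cloud announcement): 8 GPUs per
# DGX chassis, as in the DGX H100, and 80 GB of HBM per H100 GPU.
GPUS_IN_FABRIC = 256
GPUS_PER_DGX = 8
HBM_GB_PER_GPU = 80


def fabric_size(gpus: int, per_node: int, hbm_gb: int) -> tuple[int, int]:
    """Return (DGX nodes needed, aggregate GPU memory in GB)."""
    nodes, leftover = divmod(gpus, per_node)
    if leftover:
        nodes += 1  # a partially filled chassis still counts as a whole node
    return nodes, gpus * hbm_gb


nodes, total_hbm_gb = fabric_size(GPUS_IN_FABRIC, GPUS_PER_DGX, HBM_GB_PER_GPU)
print(f"{nodes} DGX nodes, {total_hbm_gb:,} GB of GPU memory in one NVSwitch domain")
```

Under those assumptions, a full fabric works out to 32 DGX nodes and about 20 TB of GPU memory addressable as one space, which is the kind of machine most enterprises would never build for themselves.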

This is similar to VMware throwing in the towel on building a cloud back in the fall of 2016 and hooking up with Amazon to build the VMware Cloud on AWS, which is AWS iron supporting the VMware SDDC stack. (Dell was too busy paying off the $60 billion it borrowed to acquire EMC and VMware to be a tier one cloud builder.) Or like Cray putting its “Cascade” XC40 supercomputers in Microsoft Azure datacenters back in the fall of 2017.

The details on the DGX Cloud are a bit thin – Huang will provide more insight at the spring GPU Technology Conference 2023 next month – but we did get a bit of a teaser.

“To help put AI within reach of every enterprise customer, we are partnering with major cloud service providers to offer Nvidia AI cloud services offered directly by Nvidia and through our network of go-to-market partners and hosted within the world’s largest clouds,” Huang explained on the call with Wall Street analysts going over the numbers. “Nvidia AI as a service offers enterprises easy access to the world’s most advanced AI platform while remaining close to the storage, networking, security, and cloud services offered by the world’s most advanced clouds. Customers can engage Nvidia AI cloud services at the AI supercomputer, acceleration library software, or pre-trained AI model layers. Nvidia DGX is an AI supercomputer and a blueprint of AI factories being built around the world. AI supercomputers are hard and time-consuming to build, and today we’re announcing the Nvidia DGX Cloud, the fastest and easiest way to have your own DGX AI supercomputer. Just open your browser. Nvidia DGX Cloud is already available through Oracle Cloud Infrastructure, and Microsoft Azure, Google GCP, and others on the way.”

Huang added that customers using the DGX Cloud can access Nvidia AI Enterprise for training and deploying large language models or other AI workloads, or they can use Nvidia’s own NeMo Megatron and BioNeMo pre-trained generative AI models and customize them “to build proprietary generative AI models and services for their businesses.”

It will be interesting to see how this is all packaged and priced.

Now, back to Q4.

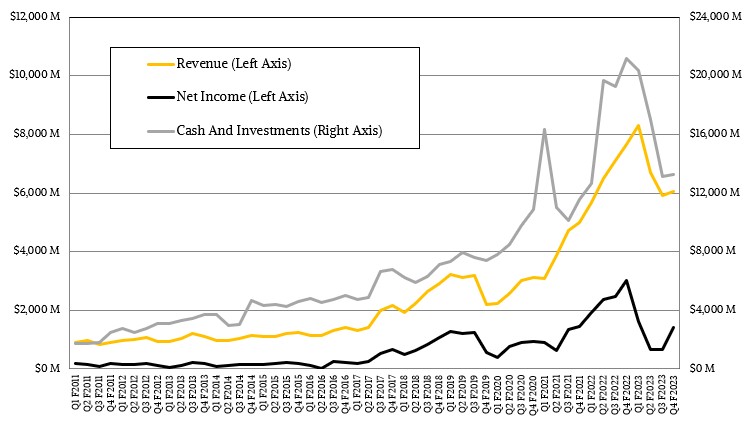

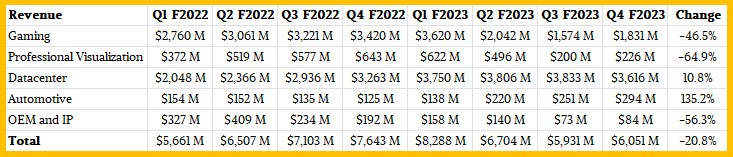

In the January quarter, Nvidia’s overall revenues were $6.05 billion, down 20.8 percent year on year, and net income fell by 52.9 percent to $1.41 billion. Nvidia’s cash hoard shrank by 37.3 percent year on year to $13.3 billion, but was actually up a smidgen sequentially. Revenues were up a tiny bit sequentially from the third quarter of fiscal 2023, and net income more than doubled, so this was a better quarter in many ways than the prior one. But it was clearly not as impressive as the year-ago quarter.
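Because the numbers above mix year-on-year and sequential comparisons, it helps to back out the year-ago figures that the percentages imply. This minimal Python sketch inverts `current = prior × (1 + change)` for revenue and cash, using only the figures quoted in the text; the `year_ago` helper is our own, and because the reported percentages are rounded, the results are approximate.

```python
# Back out year-ago values implied by a current value and its
# year-on-year percentage change. All dollar figures in billions.
def year_ago(current: float, pct_change: float) -> float:
    """Invert current = prior * (1 + pct_change / 100)."""
    return current / (1 + pct_change / 100)


q4_fy2023 = {
    "revenue": (6.05, -20.8),
    "cash": (13.3, -37.3),
}

for name, (now, pct) in q4_fy2023.items():
    print(f"{name:>8}: ${now:.2f}B now, ${year_ago(now, pct):.2f}B a year earlier")
```

That puts year-ago revenue at roughly $7.64 billion and the year-ago cash pile at roughly $21.2 billion, which shows how much of a step down this quarter was.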

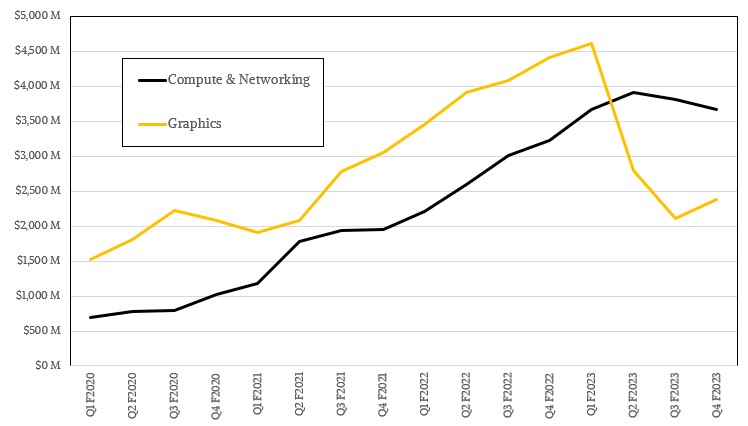

The graphics business, which is linked pretty tightly to the PC business, had a terrible quarter – again – with revenues falling 46.2 percent year on year to $2.38 billion. But the Graphics group improved a bit sequentially as GPU card inventories burn down and get mostly back in balance and as the “Ada” GeForce 40 series cards start to ramp.

The Compute and Networking group posted sales of $3.67 billion, up 13.9 percent year on year, and delivered a tiny bit over $15 billion in sales for the full fiscal year, up 36.4 percent compared to fiscal 2022.
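The two groups can be cross-checked against the total. This Python sketch uses only figures from the text. It confirms that Graphics plus Compute and Networking sum to the $6.05 billion reported, and it tests the assumption that the 46.2 percent and 13.9 percent changes are both year-on-year comparisons: if they are, the implied year-ago segments should add up to roughly the year-ago total implied by the 20.8 percent overall decline. The `year_ago` helper is illustrative.

```python
# Reconcile the two reporting segments with the company total.
# Dollar figures in billions; percentage changes are treated as
# year-on-year (this is the assumption being checked).
def year_ago(current: float, pct_change: float) -> float:
    """Invert current = prior * (1 + pct_change / 100)."""
    return current / (1 + pct_change / 100)


graphics, graphics_pct = 2.38, -46.2
compute_net, compute_net_pct = 3.67, 13.9
total, total_pct = 6.05, -20.8

segment_sum = graphics + compute_net
segment_year_ago = year_ago(graphics, graphics_pct) + year_ago(compute_net, compute_net_pct)
total_year_ago = year_ago(total, total_pct)

# Full-year Compute and Networking: just over $15B, up 36.4 percent.
fy2022_compute_net = year_ago(15.0, 36.4)

print(f"segments sum to ${segment_sum:.2f}B vs ${total:.2f}B reported")
print(f"implied year-ago: segments ${segment_year_ago:.2f}B vs total ${total_year_ago:.2f}B")
print(f"implied fiscal 2022 Compute and Networking: ${fy2022_compute_net:.2f}B")
```

Run as written, the implied year-ago segments come to about $7.65 billion against about $7.64 billion for the total, about as close as rounded percentages allow, so the year-on-year reading holds together. The full-year line implies fiscal 2022 Compute and Networking sales of roughly $11 billion.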

Nvidia provided operating profits for these two groups for a while but no longer does.

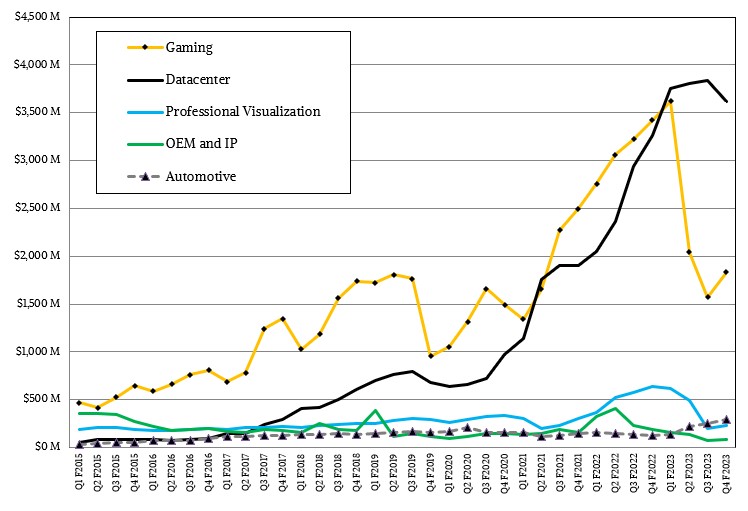

The Datacenter division has been Nvidia’s biggest money maker for the past four quarters, and was only able to grow revenues by 10.8 percent in fiscal Q4 as the hyperscalers and cloud builders cut back heavily on overall GPU and system board spending even as they spent a lot of dough on H100 GPU accelerators. Colette Kress, Nvidia’s chief financial officer, said on the call that revenues for the H100 GPUs were higher than for the A100 GPUs in the quarter, which is a pretty fast ramp for the H100. And while Nvidia is adamant that it will sell the A100s for the foreseeable future, it sure looks like no one – and particularly not the hyperscalers and cloud builders – wants them if sales have tapered off that fast. If the H100s are in tight supply, then the rest of the world will have to make do with the A100s, we presume, as we have seen in a number of recent supercomputer buildouts, such as those at IBM Research and Meta Platforms.

Here is a summary table with the actual numbers for the Nvidia divisions for the past two years:

Kress said that of the $15 billion that the Datacenter division brought in during fiscal 2023, around 40 percent of the revenues – around $6 billion – came from hyperscalers and cloud builders. For the numbers to work out, our best guess is that these “cloud titans,” as some call them, accounted for close to half of revenues early in fiscal 2023 but only about a quarter of revenues in Q4. That means spending by the hyperscalers and cloud builders fell by around 50 percent year on year in Q4. That is a big drop, and it is one of the reasons why the Datacenter division did not turn in the quarter that Nvidia was obviously expecting.
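Here is that estimate worked through as a rough Python check. The inputs are the ones given in the text: about $15 billion of fiscal 2023 Datacenter revenue, a roughly 40 percent cloud titan share for the year, 10.8 percent year-on-year Datacenter growth in Q4, and our guessed shares of about half versus about a quarter. One extra assumption, flagged here and in the code, is that the “close to half” share also held in the year-ago Q4, which is what a year-on-year comparison needs.

```python
# Rough check of the cloud titan spending estimate (billions / fractions).
dc_fy2023 = 15.0          # Datacenter revenue, fiscal 2023
cloud_share_fy2023 = 0.40  # hyperscaler + cloud builder share for the year
print(f"cloud titan Datacenter revenue, FY2023: ~${dc_fy2023 * cloud_share_fy2023:.1f}B")

dc_growth_q4 = 0.108      # Datacenter revenue growth, Q4 year on year
# Assumption: the ~50% share applied to the year-ago Q4 as well.
share_year_ago, share_q4 = 0.50, 0.25

# Normalize year-ago Q4 Datacenter revenue to 1.0 so only ratios matter.
cloud_year_ago = share_year_ago * 1.0
cloud_q4 = share_q4 * (1 + dc_growth_q4)
change = cloud_q4 / cloud_year_ago - 1
print(f"implied change in cloud titan spending, Q4 year on year: {change:.1%}")
```

With those rounded shares the drop comes out at about 45 percent, which squares with the “around 50 percent” figure given how rough the share guesses are.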

Looking ahead to Q1 of fiscal 2024, Kress said that Nvidia was expecting sales of $6.5 billion, plus or minus 2 percent. So Q1 looks like a near carbon copy of Q4 fiscal 2023, with a little sequential growth on top. There are worse fates.
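Turning “$6.5 billion, plus or minus 2 percent” into a range makes it easier to compare with the quarter just reported. This small Python sketch uses only the guidance and the $6.05 billion Q4 figure from the text.

```python
# Convert the Q1 fiscal 2024 guidance band into a dollar range (billions)
# and compare the midpoint with the Q4 fiscal 2023 result.
guide, band = 6.5, 0.02
low, high = guide * (1 - band), guide * (1 + band)
q4 = 6.05

print(f"Q1 FY2024 guidance: ${low:.2f}B to ${high:.2f}B")
print(f"guidance midpoint vs Q4: {guide / q4 - 1:+.1%}")
```

The guidance works out to $6.37 billion to $6.63 billion, with the midpoint about 7 percent above Q4, so even the low end of the band is a modest step up sequentially.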

DGX Cloud. AIaaS. CSPs have obviously evaluated the Grace+Hopper solution and the proposed business model. The idea that every one of the CSPs has bought in is a big deal. There is obviously a lot more to be disclosed about the solution and partnerships in March. Would these CSPs just wheel in prepackaged Nvidia servers and plug them into their highly curated data centers if they were wanting in some way? I think not, so it’s pretty safe to assume partners are enthusiastic. Either that, or the DGX form factor is the only way they could acquire next-level Grace+Hopper performance. And a fully supported and portable software stack just creates migration opportunity, or so believes Jensen “On-Prem” Huang.

I think that “fully supported and portable software stack[s]” are key to long-term success of this (and other new) architecture[s]. Especially for ARM CPUs, that have been the “future” knees-bees for (at least) a decade (and now challenged by RISC-V/VI). For these, as in prior gravy periods, it should be strategically most beneficial to seed university computer labs with workstations (and, possibly, small supers) of the target arch., so as to develop a generation of young minds trained in the programming, and use (and enthusiasm for), such highly-performant (yet slightly non-mainstream) computational systems. It worked nicely for SGI and SUN back in the day (dot-com boom) … but x86 fought back with outstanding affordability. If “emergent” system archs can remain relatively “affordable” (yet performant and energy efficient), they should get themselves a rather sweet ride, for a couple of decades, provided that the enthusiasm of young minds can be switched on for them.

And hence, the IBM mainframe and the AS/400 (Power Systems running IBM i) are still around. Fully supported stacks with complex and hard to move applications make even proprietary hardware palatable. Ya know, like a TPU. Or an Nvidia GPU. HA!

[in-reply to TPM]: Quite so (esp. w/r to CUDA)! On the CPU front, in relation to enthusiasm for an arch., I’ll quote Robert Smith who develops Quantum Computing simulation software in Common Lisp (SBCL) at Rigetti, eventually targeting Summit: “I also like ppc64el because I have two POWER8 servers racked up in my spare bedroom”. Many young minds with Neoverse laptops and workstations (affordable yet performant ones) would likely do wonders for that arch’s software stack, in short and long terms (I think). There’s a bit of a gaping hole between smartphones/tablets/hobby-boards on the low end, and HPC/AI-ML/Enterprise/Cloud (A64FX, Grace, Altra-Max, Graviton3, …) on the high end, except for Apple’s very nice kit (which doesn’t quite run Linux or Windows natively, I think).