The “Cirrus” Power10 processor from IBM, which we codenamed for Big Blue because it refused to do it publicly and because we understand the value of a synonym here at The Next Platform, shipped last September in the “Denali” Power E1080 big iron NUMA machine. And today, the rest of the Power10-based Power Systems product line is being fleshed out with the launch of entry and midrange machines – many of which are suitable for supporting HPC and AI workloads as well as in-memory databases and other workloads in large enterprises.

The question is, will IBM care about traditional HPC simulation and modeling ever again with the same vigor that it has in past decades? And can Power10 help reinvigorate the HPC and AI business at IBM? We are not sure about the answer to the first question, and when we spoke to Ken King, the general manager of the Power Systems business, back in February, we got the distinct impression that HPC proper was not a high priority. But we continue to believe that the Power10 platform has some attributes that make it appealing for data analytics and other workloads that need to be either scaled out across small machines or scaled up across big ones.

Today, we are just going to talk about the six entry Power10 machines, which have one or two processor sockets in a standard 2U or 4U form factor, and then we will follow up with an analysis of the Power E1050, which is a four socket machine that fits into a 4U form factor. And the question we wanted to answer was simple: Can a Power10 processor hold its own against X86 server chips from Intel and AMD when it comes to basic CPU-only floating point computing?

This is an important question because there are plenty of workloads that have not been accelerated by GPUs in the HPC arena, and for these workloads, the Power10 architecture could prove to be very interesting if IBM thought outside of the box a little. This is particularly true when considering the feature called memory inception, which is in effect the ability to build a memory area network across clusters of machines and which we have discussed a little in the past.

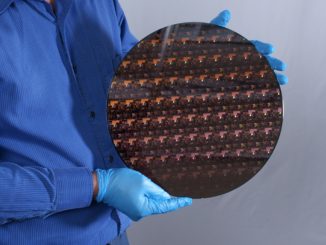

We went deep into the architecture of the Power10 chip two years ago when it was presented at the Hot Chips conference, and we are not going to go over that ground again here. Suffice it to say that this chip can hold its own against Intel’s current “Ice Lake” Xeon SPs, launched in April 2021, and AMD’s current “Milan” Epyc 7003s, launched in March 2021. And this makes sense because the original plan was to have a Power10 chip in the field sometime in 2021, three years after the Power9 chip launched in 2018, with 24 fat cores and 48 skinny ones, packaged in dual-chip modules and etched using 10 nanometer processes from IBM’s former foundry partner, Globalfoundries. Globalfoundries did not get the 10 nanometer processes working, and it botched a jump to 7 nanometers and spiked it, and that left IBM jumping to Samsung, where it became the first server chip partner for the foundry’s 7 nanometer processes. IBM took the opportunity of the Power10 delay to reimplement the Power ISA in a new Power10 core and then added some matrix math overlays to its vector units to make it a good AI inference engine.

IBM also created a beefier core and dropped the core count back to 16 on a die in SMT8 mode, which is an implementation of simultaneous multithreading that has up to eight processing threads per core. It was also thinking about an SMT4 design, which would double the core count to 32 per chip. But we have not seen that to date, and with IBM not chasing Google and other hyperscalers with Power10, we may never see it. But it was in the roadmaps way back when.

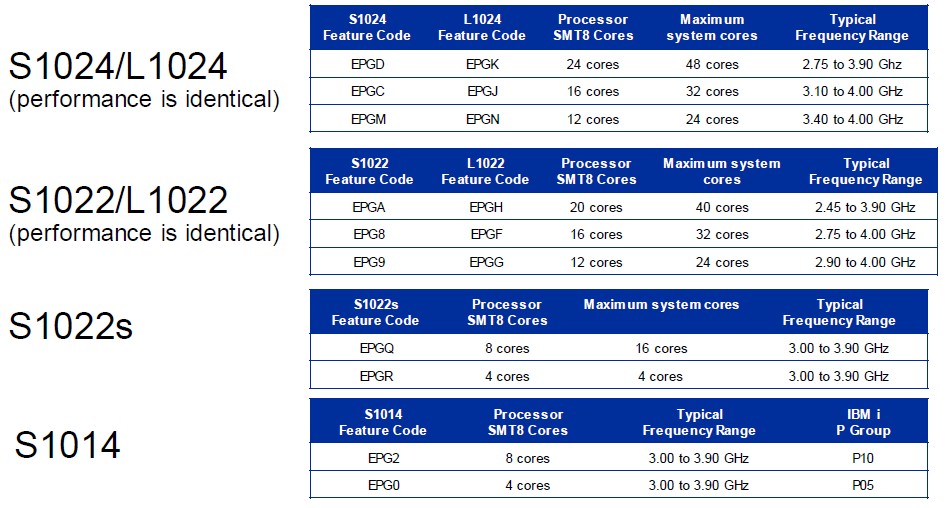

What IBM has done in the entry machines is put two Power10 chips inside of a single socket to increase the core count, but it is looking like the yields on the chips are not as high as IBM might have wanted. When IBM first started talking about the Power10 chip, it said it would have 15 or 30 cores, which was a strange number, and that is because it kept one SMT8 core or two SMT4 cores in reserve as a hedge against bad yields. In the products that IBM is rolling out today, mostly for its existing AIX Unix and IBM i (formerly OS/400) enterprise accounts, the core counts on the dies are much lower, with 4, 8, 10, or 12 of the 16 cores active. The Power10 cores have roughly 70 percent more performance than the Power9 cores in these entry machines, and that is a lot of performance for many enterprise customers – enough to get through a few years of growth on their workloads. IBM is charging a bit more for the Power10 machines compared to the Power9 machines, according to Steve Sibley, vice president of Power product management at IBM, but the bang for the buck is definitely improving across the generations. At the very low end with the Power S1014 machine that is aimed at small and midrange businesses running ERP workloads on the IBM i software stack, that improvement is in the range of 40 percent, give or take, and the price increase is somewhere between 20 percent and 25 percent depending on the configuration.
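The price/performance arithmetic above can be checked with a quick sketch. It assumes the roughly 70 percent performance gain applies to the whole machine and that the 20 percent to 25 percent price increase applies to the same configuration; the function name is ours and purely illustrative.

```python
def price_performance_gain(perf_gain: float, price_increase: float) -> float:
    """Return the fractional change in performance per dollar.

    perf_gain and price_increase are fractions, so 0.70 means 70 percent.
    """
    return (1.0 + perf_gain) / (1.0 + price_increase) - 1.0


# Figures quoted in the text: about 70 percent more performance,
# for a price that is 20 percent to 25 percent higher.
for price_increase in (0.20, 0.25):
    gain = price_performance_gain(0.70, price_increase)
    print(f"price +{price_increase:.0%}: bang for the buck +{gain:.1%}")
```

Under those assumptions the improvement works out to between 36 percent and about 42 percent, which squares with the "40 percent, give or take" figure.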

Pricing is not yet available on any of these entry Power10 machines, which ship on July 22. When we find out more, we will do more analysis of the price/performance.

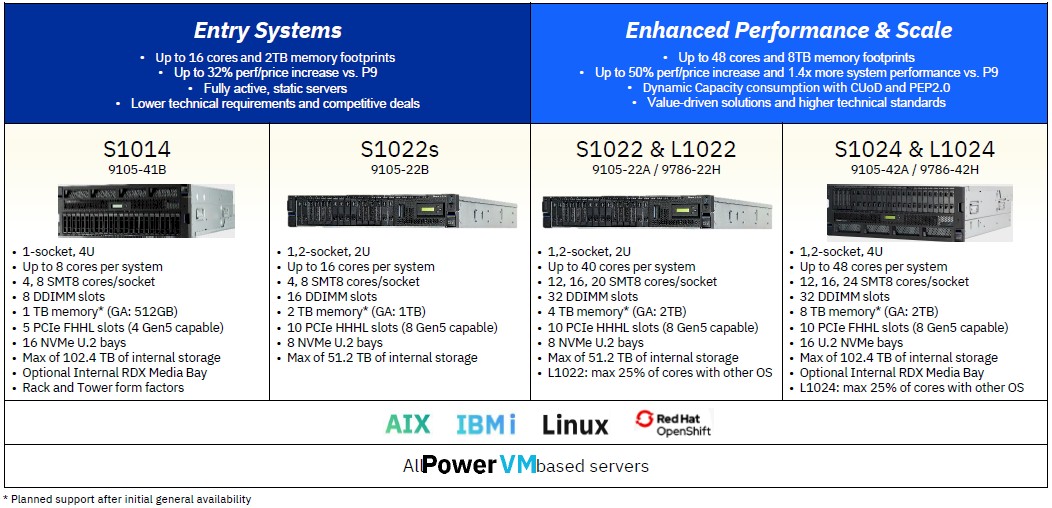

There are six new entry Power10 machines, the feeds and speeds of which are shown below:

For the HPC crowd, the Power L1022 and the Power L1024 are probably the most interesting ones because they are designed to only run Linux and, if they are like prior L classified machines in the Power8 and Power9 families, will have lower pricing for CPU, memory, and storage, allowing them to better compete against X86 systems running Linux in cluster environments. This will be particularly important as IBM pushes Red Hat OpenShift as a container platform not only for enterprise workloads but also for HPC and data analytics workloads that are being containerized these days.

One thing to note about these machines: IBM is using its OpenCAPI Memory Interface, which, as we have explained in the past, uses the “Bluelink” I/O interconnect (the same one used for NUMA links and accelerator attachment) as a memory controller. IBM is now calling this the Open Memory Interface, and these systems have twice as many memory channels as a typical X86 server chip and therefore have a lot more aggregate bandwidth coming off the sockets. The OMI memory makes use of a Differential DIMM form factor that employs DDR4 memory running at 3.2 GHz, and it will be no big deal for IBM to swap DDR5 memory chips into its DDIMMs when they are out and the price is not crazy. IBM is offering memory features with 32 GB, 64 GB, and 128 GB capacities today in these machines and will offer 256 GB DDIMMs on November 14, which is how you get the maximum capacities shown in the table above. The important thing for HPC customers is that IBM is delivering 409 GB/sec of memory bandwidth per socket and 2 TB of memory per socket.
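For those who want to see where a figure like 409 GB/sec comes from, here is a rough sketch of the peak bandwidth arithmetic. The channel counts are our assumption (eight for a typical X86 socket, and therefore sixteen for a Power10 socket, per the "twice as many" statement), as is the standard 8-byte DDR4 data path per channel. The function name is illustrative.

```python
def peak_memory_bandwidth_gbs(channels: int,
                              mega_transfers_per_sec: float,
                              bytes_per_transfer: int = 8) -> float:
    """Peak theoretical memory bandwidth in GB/sec (decimal gigabytes)."""
    return channels * mega_transfers_per_sec * bytes_per_transfer / 1000.0


# Assumed: 16 channels per Power10 socket, 8 per typical X86 socket,
# all running DDR4 at 3,200 mega-transfers per second.
power10_socket = peak_memory_bandwidth_gbs(channels=16, mega_transfers_per_sec=3200)
x86_socket = peak_memory_bandwidth_gbs(channels=8, mega_transfers_per_sec=3200)

print(f"Power10 socket (assumed 16 channels): {power10_socket:.1f} GB/sec")
print(f"X86 socket (assumed 8 channels):      {x86_socket:.1f} GB/sec")
```

With those inputs the Power10 socket comes out at 409.6 GB/sec, in line with the number quoted above, and the eight-channel X86 socket at half that. These are peak theoretical rates, not measured bandwidth.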

By the way, the only storage in these machines is NVM-Express flash drives. No disk, no plain vanilla flash SSDs. The machines also support a mix of PCI-Express 4.0 and PCI-Express 5.0 slots, and do not yet support the CXL protocol created by Intel and backed by IBM even though it loves its own Bluelink OpenCAPI interconnect for linking memory and accelerators to the Power compute engines.

Here are the different processor SKUs offered in the Power10 entry machines:

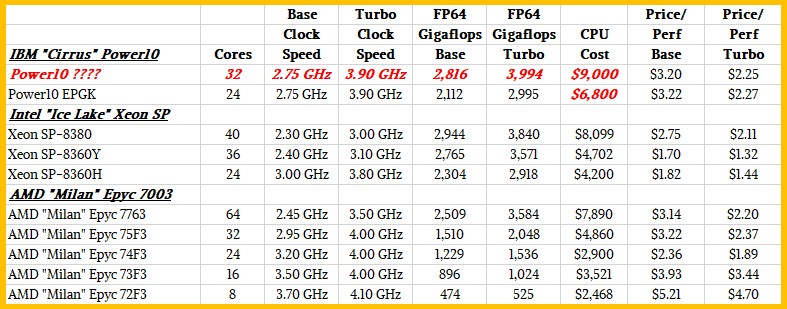

As far as we are concerned, the 24-core Power10 DCM feature EPGK processor in the Power L1024 is the only interesting one for HPC work, aside from what a theoretical 32-core Power10 DCM might be able to do. And just for fun, we sat down and figured out the peak theoretical 64-bit floating point performance, at all-core base and all-core turbo clock speeds, for these two Power10 chips and their rivals in the Intel and AMD CPU lineups. Take a gander at this:

We have no idea what the pricing will be for a processor module in these entry Power10 machines, so we took a stab at what the 24-core variant might cost to be competitive with the X86 alternatives based solely on FP64 throughput and then reckoned the performance of what a full-on 32-core Power10 DCM might be.
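The method behind this kind of comparison is simple enough to sketch: peak FP64 throughput is cores times clock speed times FP64 operations per clock per core, and a parity price scales a rival's price by the ratio of the two peaks. Every number below (core counts, clocks, flops per cycle, and price) is a made-up placeholder rather than a figure from IBM, Intel, or AMD, and the class and function names are ours.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Chip:
    name: str
    cores: int
    clock_ghz: float
    fp64_flops_per_cycle: int          # per core, counting FMA as two operations
    price_usd: Optional[float] = None  # None when pricing is unknown

    def peak_gflops(self) -> float:
        """Peak theoretical FP64 throughput in gigaflops."""
        return self.cores * self.clock_ghz * self.fp64_flops_per_cycle


def parity_price(candidate: Chip, rival: Chip) -> float:
    """Price at which the candidate matches the rival's dollars per gigaflops."""
    if rival.price_usd is None:
        raise ValueError("rival needs a known price")
    return rival.price_usd * candidate.peak_gflops() / rival.peak_gflops()


# Hypothetical placeholder chips, NOT real SKUs or real prices.
hypothetical_x86 = Chip("hypothetical X86", cores=32, clock_ghz=2.5,
                        fp64_flops_per_cycle=32, price_usd=5000.0)
hypothetical_power = Chip("hypothetical Power10 DCM", cores=24, clock_ghz=3.0,
                          fp64_flops_per_cycle=32)

print(f"{hypothetical_x86.name}: {hypothetical_x86.peak_gflops():.0f} gigaflops")
print(f"{hypothetical_power.name}: {hypothetical_power.peak_gflops():.0f} gigaflops")
print(f"parity price: ${parity_price(hypothetical_power, hypothetical_x86):,.0f}")
```

Swapping in real core counts, all-core base or turbo clocks, and list prices gives the comparison described above. Peak flops ignores memory bandwidth and sustained clocks under vector load, so treat it as a ceiling.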

The answer is that IBM can absolutely compete, flops to flops, with the best Intel and AMD have right now. And Power10 has a very good matrix math engine as well, which the X86 chips do not.

The problem is, Intel has “Sapphire Rapids” Xeon SPs in the works, which we think will have four 18-core chiplets for a total of 72 cores, but only 56 of them will be exposed because of yield issues that Intel has with its SuperFIN 10 nanometer (Intel 7) process. And AMD has 96-core “Genoa” Epyc 7004s in the works, too. Power11 is several years away, so if IBM wants to play in HPC, Samsung has to get the yields up on the Power10 chips so IBM can sell more cores in a box. Big Blue already has the memory capacity and memory bandwidth advantage. We will see if its L-class Power10 systems can compete on price and performance once we find out more. And we will also explore how memory clustering might make for a very interesting compute platform based on a mix of fat NUMA and memory-less skinny nodes. We have some ideas about how this might play out.

It’s interesting that yields appear only to support 12 cores per chip right now. Even so, a system with 48 SMT8 cores still includes 384 threads.

Even without memory inception, Power10 provides important diversity to the ecosystem of Linux-capable architectures. I wonder how widely IBM’s Red Hat will get deployed and how well it runs compared to AIX.

On one hand, AIX doesn’t have to support all the weird combinations of efficiency and performance cores offered by other manufacturers for mobile and the desktop. On the other hand, the engineering needed to make Linux so flexible could also have led to greater algorithmic efficiency.

I am glad that you are bringing IBM back to this discussion! As you reported at length (at least since 2019) they were leading the way to SerDes-oriented composability (especially with POWER10) and the HPC trend seems to be towards building upgradeable systems that would likely benefit most from the resulting ability to disaggregate heterogeneous subcomponents (OpenCAPI/CXL being to DDR-DIMMs as USB has been to printer parallel ports, potentially).

Does IBM want to be in the HPC business? They clearly decided to stop investing in HPC-specific hardware when they gave up on Blue Gene and the Torrent interconnect. Is there money to be made selling the Power10 systems they already make into HPC if they don’t have to do any custom development? Maybe, but I bet there is market-specific investment (software, sales infrastructure) unique to the HPC market. It may not be worth it for IBM to capture a small piece of the HPC business, given the small margins. Also, I’m not sure how interested many customers are in porting codes to Power/AIX or Power/Linux to get something that runs about as fast as x86, for (at best) the same price.

No matter whether it’s AIX or Linux running on a Power machine, our software can make it more secure by providing a true zero trust computing environment.