It is hard to say how many Xeon and Xeon SP CPU sales have been obliterated by Nvidia GPUs, but the number is a big one. And that is why Intel finally came around to the idea that GPU compute was not just going to be a niche thing, but normal. And thus, it is scrambling to get a full GPU product line out the door.

Intel is also trying to get some good news out there about its compute engines to try to counter-balance the cacophony of roadmap issues it is facing in its CPUs and GPUs.

For years, Intel loudly and proudly pushed the message that datacenter and HPC computing ran best on general-purpose X86 processors and for a long time it was difficult to argue with the company. Intel chips were dominant in those spaces – though IBM certainly held its own with HPC and supercomputers – and Nvidia was only beginning to push the idea of GPUs in the datacenter.

Much has changed over the past decade and a half, from the rise of Nvidia and later AMD GPUs and their parallel processing prowess in HPC and enterprise computing to the emergence of AI and machine learning and the ensuing demand for more specialized and workload-specific silicon.

As this was happening, Intel made stabs and jabs in the accelerator space, from its wayward “Larrabee” GPU program to the ill-fated “Knights Landing” Xeon Phi many-core processors, both based on X86 cores. With the latter, Intel made a brief push onto the supercomputer shores before its tide went out.

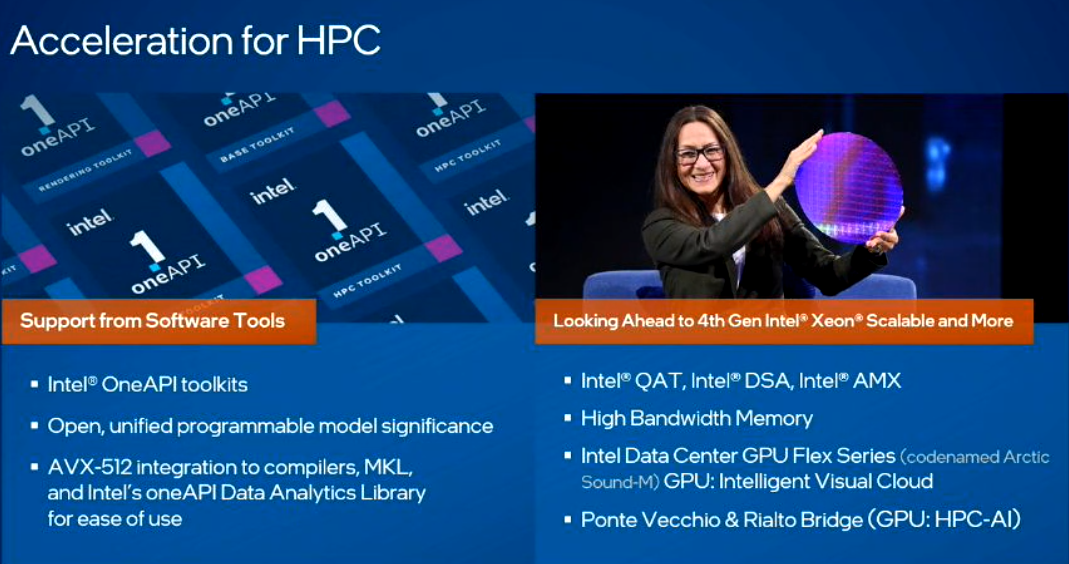

But as we recently noted, Intel is listening to what its customers are asking for and is on the cusp of delivering. The vendor, after several delays, is building up to the eventual release early next year of “Sapphire Rapids” Xeon SPs, which will include a range of accelerator technologies to address the compute and performance demands of the ever-expanding number of AI-based workloads.

At the same time, the company is prepping its first discrete GPU, dubbed “Ponte Vecchio,” while talking about “Rialto Bridge,” the Ponte Vecchio follow-on that is expected to come later in 2023, and “Falcon Shores,” the combo CPU-accelerator XPU. All this comes as others push their accelerator and special-purpose chip plans. Nvidia – now entrenched in the enterprise datacenter, HPC facilities, cloud builders, and hyperscalers and the biggest player in the AI space – continues to expand its portfolio and grow into a full-stack platform provider, despite the fiscal stumble in the last quarter.

AMD is bolstering its GPU story through its planned $49 billion acquisition of FPGA maker Xilinx (echoing Intel’s own $16.7 billion purchase of FPGA vendor Altera in 2015) and $1.9 billion bid for Pensando, which builds popular data processing unit (DPU) accelerators. There also seemingly is no end to companies and startups building processors specifically for AI workloads.

That said, Intel hasn’t been sitting idle, having bought neural network chip makers Nervana Systems and then Habana Labs. And GPUs of all kinds are going to be important in Intel’s future.

This week – outside of the silicon-fest that is the Hot Chips 34 show – Intel punched the accelerator, announcing its datacenter Flex Series “Arctic Sound-M” GPU family (shown in the feature image at the top of this story) that delivers high transcode throughput performance for such jobs as AI inferencing, desktop virtualization, cloud gaming and media processing. At the same time, company executives during a virtual “chalk talk” spoke about the virtues of Sapphire Rapids, Ponte Vecchio, and on-CPU acceleration technology for HPC, enterprise computing, AI workloads and 5G networking.

And Intel will be able to compete as the roadmap rolls out, according to Jeff McVeigh, vice president and general manager of the chip maker’s Super Compute Group.

“Ponte Vecchio has really been designed for this convergence between HPC and AI,” McVeigh said during the chalk talk with analysts and journalists. “It’s not only an HPC benchmark GPU, which I characterize some of our competitors are. It’s not only heavily focused on the AI space. It’s really how you bring this together, supporting FP64 down to various data types with very high throughput performance. We feel that it will compete very well from a standalone performance standpoint, if you’re looking at codes across that spectrum, which many of our customers are psyched to have. … We’re new in this high-end GPU space in the HPC world, so we’ll also make sure that it’s delivering great TCO to our customers.”

Sapphire Rapids will bring with it up to 60 cores and a range of features designed to accelerate workloads, including Advanced Matrix Extensions (AMX) for AI and machine learning and the Data Streaming Accelerator (DSA) to more quickly move data to and from memory. QuickAssist Technology (QAT) speeds up data encryption and compression, and other features include High Bandwidth Memory (HBM2E), the CXL 1.1 protocol, and DDR5 memory.

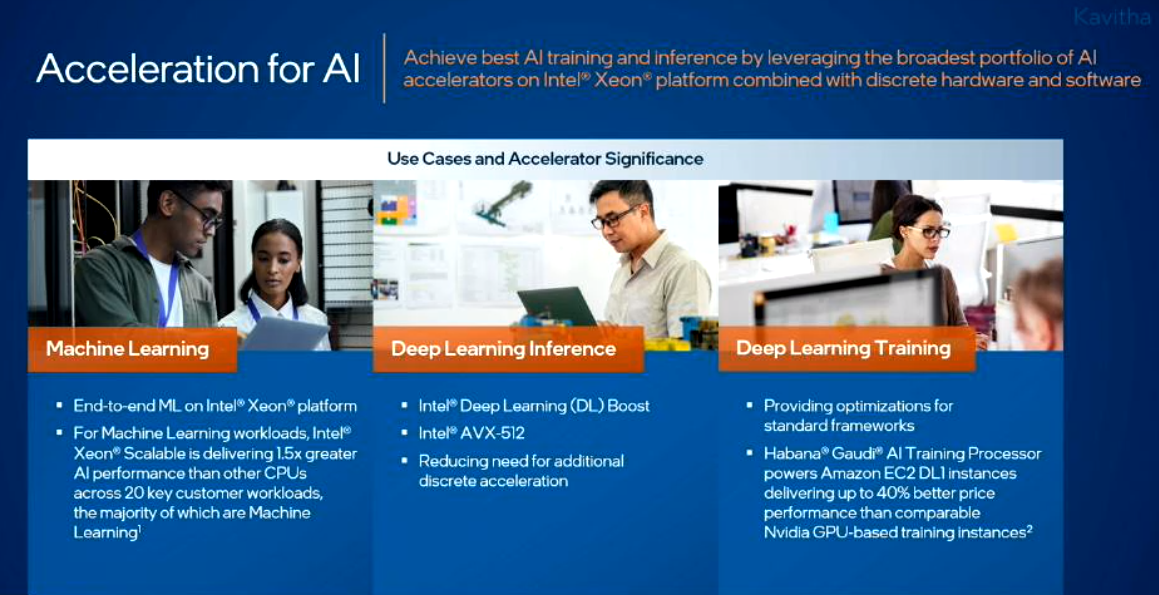

For AI workloads, there also are such accelerators as Intel’s Advanced Vector Extensions 512 (AVX-512) X86 instruction set, aimed at improving performance and power efficiency. Both AVX-512 and Deep Learning Boost (DL Boost) are aimed at AI inferencing jobs; the Habana Gaudi chip is for AI training workloads in AWS EC2 cloud instances.
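To make the inference angle concrete: DL Boost is Intel’s brand for vector neural network instructions that fuse an 8-bit multiply with a 32-bit accumulate. What follows is a minimal Python sketch of that arithmetic, not Intel’s implementation. The function names `vnni_lane` and `dot_int8` are hypothetical, and the sketch assumes the non-saturating form of the operation, which real hardware applies across many 32-bit lanes of a vector register at once rather than one lane at a time.

```python
def vnni_lane(acc, a_bytes, b_bytes):
    """Emulate one 32-bit lane: acc += sum of four (unsigned 8-bit * signed 8-bit) products.

    Hypothetical helper for illustration only.
    """
    assert len(a_bytes) == len(b_bytes) == 4
    for a, b in zip(a_bytes, b_bytes):
        assert 0 <= a <= 255       # activations treated as unsigned 8-bit
        assert -128 <= b <= 127    # weights treated as signed 8-bit
        acc += a * b
    # Wrap to a signed 32-bit value (assumed non-saturating behavior).
    return (acc + 2**31) % 2**32 - 2**31


def dot_int8(activations, weights):
    """Dot product of two quantized vectors, consuming four elements per step."""
    assert len(activations) == len(weights)
    assert len(activations) % 4 == 0
    acc = 0
    for i in range(0, len(activations), 4):
        acc = vnni_lane(acc, activations[i:i + 4], weights[i:i + 4])
    return acc


if __name__ == "__main__":
    print(dot_int8([1, 2, 3, 4, 5, 6, 7, 8], [1, -1, 1, -1, 2, 2, 2, 2]))
```

The point of the design is that inference on quantized models spends most of its time in exactly this multiply-and-accumulate pattern, so collapsing it into one instruction per group of four elements raises throughput without needing a separate accelerator.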

“A major focus in Sapphire Rapids was to make sure that we look at some of these growing and emerging usages and identify the predominant functions that are at the center of all of those and build architecture capabilities that actually provide improvements in some of those emerging usages,” said Sailesh Kottapalli, chief datacenter CPU architect at Intel, noting that AI was a key focus area. “We did that using both instruction set architecture acceleration capabilities as well as dedicated engines.”

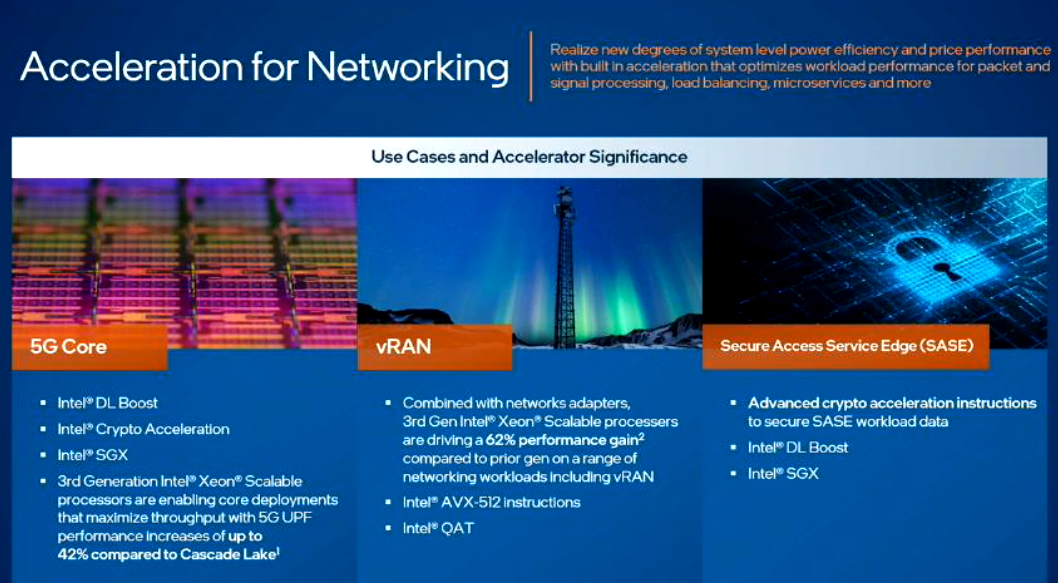

The Intel execs also talked about the role of the on-CPU accelerators in Sapphire Rapids for 5G networking and at the edge, including for vRAN and Secure Access Service Edge (SASE) operations.

McVeigh noted that Intel’s integrated accelerators will be complemented by the upcoming discrete GPUs. He called the Flex Series GPUs “HPC on the edge,” with their low power envelopes, and pointed to Ponte Vecchio – complete with 100 billion transistors in 47 chiplets that leverage Intel 7 manufacturing processes as well as 5 nanometer and 7 nanometer processes from Taiwan Semiconductor Manufacturing Co – and then Rialto Bridge. Both Ponte Vecchio and Sapphire Rapids will be key components in Argonne National Laboratory’s Aurora exascale supercomputer, which is due to power on later this year and will deliver more than 2 exaflops of peak performance.

Each Aurora node will include two Sapphire Rapids CPUs with HBM and six Ponte Vecchio GPUs, all tied together with Intel’s Xe Link coherent interconnect and its oneAPI collection of tools and libraries for developing high-performance and data-centric applications across multiple architectures.

“Another part of the value of the brand here is around the software unification across Xeon, where we leverage the massive amount of capabilities that are already established through decades throughout that ecosystem and bring that forward onto our GPU rapidly with oneAPI, really allowing for both the sharing of workloads across CPU and GPU effectively and to ramp the codes onto the GPU faster than if we were starting from scratch,” McVeigh said.

McVeigh added that Rialto Bridge will be an evolutionary step up from Ponte Vecchio, the “tick” in Intel’s “tick-tock” development model. Architecturally it will be similar to Ponte Vecchio with some improvements to various features.

“The next generation beyond Rialto Bridge is called Falcon Shores,” McVeigh said. “This is really where we bring together a large architectural change – chiplet-based – that brings together both CPU and GPU x86 and Xe cores together. That would be more of that top model. You can think of Rialto as an evolution from Ponte Vecchio to build out what we’ve already established, both from a software standpoint as well as deployment.”
