Managing an aging nuclear weapons stockpile requires a tremendous – and ever-increasing – amount of supercomputing performance, and the HPC system business the world over is focused on this as much as trying to crack the most difficult scientific, medical, and engineering problems.

Los Alamos National Laboratory has had its share of innovative supercomputers over its long history, and remains one of the stalwart believers in CPU-only massively parallel architectures to run its applications. The lab has taken delivery of some early editions of the hardware that will be going into its “Crossroads” system, slated to be operational this year and built by Hewlett Packard Enterprise using processors from Intel and interconnect from HPE.

Like many pre-exascale and exascale system design wins in the United States, the Crossroads machine is based on a system design from the formerly independent Cray, which HPE acquired for $1.3 billion in May 2019, and specifically on its “Shasta” system design that has the “Slingshot” Ethernet interconnect, with its HPC extensions, at its heart. That Cray – now HPE – got the Crossroads deal is not much of a surprise. Los Alamos and Sandia National Laboratories share capability-class and capacity-class supercomputers, with each lab siting some of the machines. The “Cielo” supercomputer installed at Los Alamos in 2013 was an all-CPU Cray XE6 system based on AMD Opteron 6136 processors and the Cray “Gemini” 3D torus interconnect, and its kicker, the “Trinity” machine installed in 2015 and 2016, was supposed to be built entirely from Intel “Knights Landing” Xeon Phi many-core X86 processors, but ended up being built from over 9,486 two-socket nodes using the 16-core “Haswell” Xeon E5-2698 v3 processors and another 9,934 nodes based on the 68-core Xeon Phi 7250 processors, for a total of 19,240 nodes with 979,072 cores with a peak performance of 41.5 petaflops at double precision.

Because of Intel’s 10 nanometer process delays, the Crossroads system is about two years late getting into the field, which is no doubt a source of frustration to the scientists working at Los Alamos and Sandia, but at the same time, the US Department of Energy has to invest in multiple technologies to hedge its bets and mitigate its risks – just as Intel’s many product roadmap changes and process and product delays show is necessary.

Los Alamos has said very little about the architecture of the Crossroads machine, and we pointed that out when the $105 million award for the system was given to HPE back in October 2020 after a year of going through a competitive procurement process. But that doesn’t stop us from speculating what it might look like – especially with the first four nodes rolling into Los Alamos from HPE’s factory and the lab bragging about being one of the early receivers of Intel’s “Sapphire Rapids” Xeon SP processors, slated for introduction later this year. What we know is that Crossroads will have about 4X the performance of the Trinity system, and knowing what we do about Sapphire Rapids and what Los Alamos is showing in its pictures, we can work backwards to speculate on its configuration.

First, here is a two-socket test and development node based on Sapphire Rapids, with a number of them being added to the “Darwin” testbed cluster that Los Alamos set up in 2018 to try various technologies on a small scale to give them a spin, including various X86, Power, and Arm server processors, many generations of Nvidia GPUs, as well as specialized systems such as the SambaNova Systems reconfigurable dataflow unit (RDU) matrix math engines. It is, as Los Alamos puts it, a “frankencluster.”

Anyway, here is the outside of the Darwin node based on Sapphire Rapids:

The left side of the machine in the picture above appears to be the two-socket compute node, and it is not clear what is taking up the right side of the machine. Perhaps a bank of “Ponte Vecchio” Xe GPU accelerators linked to the two-socket Sapphire rapids node over PCI-Express 5.0 and CXL 1.1 protocols? Just because Crossroads doesn’t use GPUs doesn’t mean that Darwin doesn’t.

Here is the Darwin Sapphire Rapids system board:

Los Alamos configured that the Darwin nodes use 56 core Sapphire Rapids sockets (which have four chiplets, as we have reported a bunch of times) and that have eight DDR5 memory slots per socket, half their full complement (as happens with some server designs). We presume those controllers off to the right are a pair of “Cassini” Slingshot 11 network interface cards, which we discussed recently here.

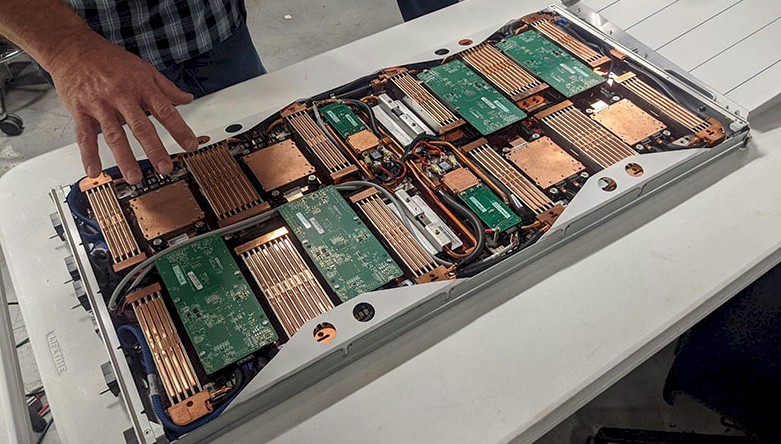

Now let’s have a look at the Crossroads system node – Cray EX Shasta node based only on Sapphire Rapids CPUs – with the cover off:

Cray has been designing system boards and nodes based on eight compute sockets for a very long time, and Shasta is no different. Customers could mix and match CPUs and GPUs, or even other accelerators, within this eight socket footprint, and in past Cray supercomputers the CPU to GPU ratio was often locked down with one CPU paired with one GPU.

With the Shasta nodes in the “Frontier” supercomputer at Oak Ridge National Laboratory and in the “El Capitan” supercomputer at Lawrence Livermore National Laboratory, there is one CPU socket for every four GPU sockets, and two nodes per server sled, but the ratio is actually not any different logically, as we pointed out here. The custom “Trento” Epyc 7003 and stock “Genoa” Epyc 7004 processors used in these two machines have eight CPU chiplets per socket, and the AMD “Aldebaran” Instinct MI250X accelerators have two logical GPUs in a package and four packages for a total of eight logical GPUs. The compute device density is twice as high on the Shasta node as on the prior “Cascades” Cray XC nodes, but the ratio of CPUs to GPUs, at a logical level, is still one to one.

The Crossroads machine will come in two phases, just like Trinity did, and possibly for similar reasons. Trinity ended up being a mix of Xeon CPU and Xeon Phi CPUs because Intel could not get Knights Landing out the door the way Los Alamos wanted it when it wanted it. The initial Crossroads nodes will use DDR5 memory and the follow-on Crossroad nodes will use HBM2 memory – 64 GB per socket, to be precise. We strongly suspect that Los Alamos will only put 256 GB of DDR5 memory on the boards for cost reasons – 32 GB memory sticks will be a lot cheaper per unit of capacity than 64 GB, 128 GB, or 256 GB memory sticks – and because all of the eight slots are full, the nodes will get the full DDR5 memory bandwidth available, and with one memory stick per memory controller there will not be any interleaving, either, reducing memory latency. If the DDR5 memory runs at 4.8 GHz as we suspect, then it looks like Intel will be kissing 260 GB/sec of memory bandwidth per Sapphire Rapids socket, based on where we think “Ice Lake” Xeon SPs, “Cascade Lake” Xeon SPs, and “Skylake” Xeon SPs were.

As you can see, the Crossroads machine has liquid cooling on the CPUs and memory. The Darwin node is air cooled.

There are four Slingshot 11 interface cards atop four of the processors shown in the picture above, and we presume they have a pair of 200 Gb/sec ports, one for a pair of CPUs, such that there are eight ports coming out of each node for eight processors running at the full 200 Gb/sec speed. (On the Frontier and El Capitan machines, each GPU has its own Slingshot 11 port running at 200 Gb/sec, and the CPU is out of the loop as a serial accelerator and fat, slow memory accelerator for the GPUs. Intel has said that the HBM2e variant of the Sapphire Rapids Xeon SPs will have 64 GB of capacity (four stacks of four chips at 4 GB per chip), and it could have gone twice as dense with eight stacks but didn’t. We have seen the bandwidth estimates for this HBM variant of Sapphire rapids all over the map, from 1 TB/sec to 1.8 TB/sec, and it is possible that Intel charges more for variants that run HBM memory at higher speed and therefore provide more bandwidth. Intel always charges a premium for a premium feature, why not now?

The point is this: If supercomputer buyers like Los Alamos have to sacrifice memory capacity by a factor of four (and possibly eight), then they better get a factor of 4X more in memory bandwidth by switching out to HBM memory. At 1 TB/sec, if our numbers are correct, they will get that, and anything above and beyond 1 TB/sec is gravy.

For point of reference, the Trinity Haswell nodes had 64 GB of DDR4 memory and offered about 55 GB/sec of bandwidth per socket, so 128 GB per node and 110 GB/sec, so if the Sapphire Rapids Xeon SP DDR5 nodes in Crossroads are configured as we expect, they will have 2X the main memory (4X seems a stretch for a machine that only costs $105 million) and something more like 5X the bandwidth per socket. The Trinity Knights Landing nodes had what looks like was 16 GB of MCDRAM (a variant of HBM memory cooked up by Intel and Micron Technology that didn’t go anywhere) and what looks like 80 GB of DDR4 memory per socket. That MCDRAM had around 420 GB/sec of bandwidth and that DDR4 memory had around 105 GB/sec of bandwidth. It would be nice for Los Alamos, therefore, if Intel could hit the 1.64 TB/sec of bandwidth with the HBM2e version of the Sapphire Rapids chip, since that would be 4X the bandwidth and 4X the capacity of the Xeon Phi’s MCDRAM memory.

So that leaves performance when it comes to Crossroads and the possible number of nodes this machine might have. And when it comes to HPC, we still think in terms of 64-bit double precision floating point math since everything is less hefty than that.

At 4X the performance of Trinity, Crossroads is at 166 petaflops or so. Assuming that Crossroads is sticking with 56 core Sapphire Rapids and going with top bin parts, then we figured last month in a story called In The Absence Of A Xeon Roadmap, Intel Makes Us Draw One that the top bin 56 core Sapphire Rapids CPU will run at 2.3 GHz and have the same AVX-512/FMA2 vector engines that Skylake, Cascade Lake, and Ice Lake all have, which can 32 double precision flops per cycle per core. Do the math, and each Sapphire Rapids socket can do 4.12 teraflops. With eight CPUs per node, as we see in the picture above, that is still an amazing 5,034 nodes for Crossroads to reach 166 petaflops, but that is not strange considering that Trinity had 19,420 nodes. Packing 4X the compute into just over one-quarter the space is a very good trick – but one that is made possible in part by the Crossroads machine being in the field two years later than Los Alamos had planned. But the other part – and most of the part – is just some good engineering on the part of Intel and HPE/Cray.

In terms of price/performance, Crossroads is a very good deal, and we think this is so at least in part because of Intel’s delays with processors and mothballing the Omni-Path interconnect and getting aligned to Cray Shasta servers and Slingshot interconnect.

The progression in performance and price/performance are impressive. The “Roadrunner” hybrid supercomputer built by IBM for Los Alamos installed in 2008 and decommissioned in 2013, weighed in at 1.7 petaflops peak and cost $121 million, or about $71,200 per teraflops. The Cielo system in 2010, which went back to an all-CPU design (driven by codes used by Sandia and Los Alamos to take care of the nuclear weapons, we think) cost $54 million and delivered a little more than 1 petaflops peak performance for $52,530 per teraflops. Trinity, which started rolling out in 2015 and was finished in 2016, cost $186 million and at an aggregate of 41.5 petaflops peak, comes in at $4,482 per teraflops. And Crossroads, at $105 million and 166 petaflops peak will go down to $632 per teraflops.

That’s a little more than 100X improvement in performance and a little more than 100X improvement in price/performance over those intervening years between Roadrunner and Crossroads.

We can’t wait to see what the real configuration of Crossroads looks like.

“the CPU to GPU ratio was often locked down with one CPU paired with one CPU.” – seems like it should be “one CPU paired with one GPU.”

Yes, thanks.

Interesting that all cpu machines can compete with accelerated designs on cost/performance. Though I suspect storage costs make up a big part of system cost. It’s not alway easy to figure out what the cost of a system really is.