Back in early July, we covered the launch of IBM’s entry and midrange Power10 systems and mused about how Big Blue could use these systems to reinvigorate an HPC business rather than just satisfy the needs of the enterprise customers who run transaction processing systems and are looking to add AI inference to their applications through matrix math units on the Power10 chip.

We are still gathering up information on how the midrange Power E1050 stacks up on SAP HANA and other workloads, but in poking around the architecture of the entry single-socket Power S1014 and the dual-socket S1022 and S1024 machines, we found something interesting that we thought we should share with you. We didn’t see it at first, and you will understand immediately why.

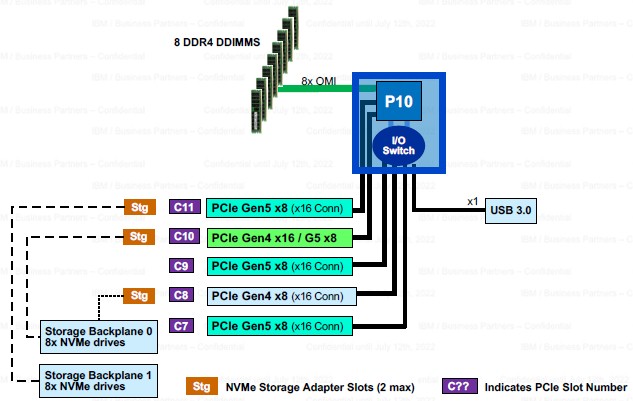

Here is the block diagram we got our hands on from IBM’s presentations to its resellers for the Power S1014 machine:

You can clearly see an I/O chip that adds some extra PCI-Express traffic lanes to the Power10 processor complex, right?

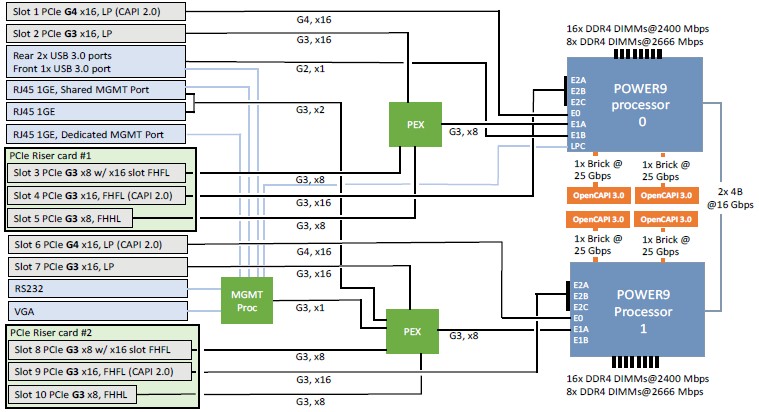

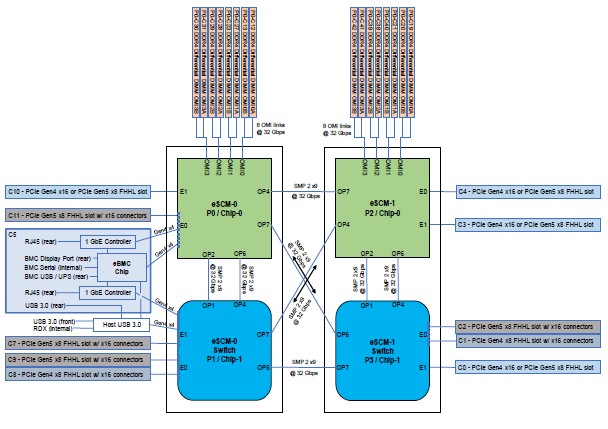

Same here with the block diagram of the Power S1022 (2U chassis) machines, which use the same system boards:

There is a pair of I/O switches in there, as you can see, which is not a big deal. Intel has put PCH chipsets in the same package as the Xeon CPUs in the Xeon D line for years, starting with the “Broadwell-DE” Xeon D processor in May 2015. IBM has used PCI-Express switches in the past to stretch the I/O inside a single machine beyond what comes off natively from the CPUs, such as with the Power IC922 inference engine Big Blue launched in January 2020, which you can see here:

The two PEX blocks in the center are PCI-Express switches, either from Broadcom or Microchip if we had to guess.

But that is not what is happening with the Power10 entry machines. Rather, IBM has created a single dual-chip module (DCM) with two whole Power10 chips inside of it. In the low-end machines, where AIX and IBM i customers don’t need a lot of compute but do need a lot of I/O, the second Power10 chip has all of its cores turned off and acts as an I/O switch for the first Power10 chip, which does have cores turned on.

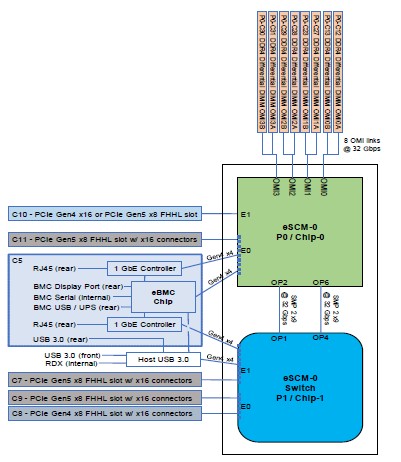

You can see this clearly in this more detailed block diagram of the Power S1014 machine:

And in a more detailed block diagram of the two-socket Power S1022 motherboard:

This is the first time we can recall seeing something like this, but obviously any processor architecture could support the same functions.

In the two-socket Power S1024 and Power L1024 machines

What we find particularly interesting is the idea that those Power10 “switch” chips – the ones with no cores activated – could in theory also have eight OpenCAPI Memory Interface (OMI) ports turned on, doubling the memory capacity of the systems using skinnier and slightly faster 128 GB memory sticks, which run at 3.2 GHz, rather than having to move to denser 256 GB memory sticks that run at a slower 2.67 GHz when they are available next year. And in fact, you could take this all one step further and turn off all of the Power10 cores and turn on all of the 16 OMI memory slots across each DCM and create a fat 8 TB or 16 TB memory server that through the Power10 memory area network – what IBM calls memory inception – could serve as the main memory for a bunch of Power10 nodes with no memory of their own.
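To make the arithmetic concrete, here is a back-of-the-envelope sketch in Python. It assumes one memory stick per active OMI port and counts 1 TB as 1,024 GB; the function name and the two-DCM and four-DCM configurations are our own illustration, not IBM specifications.

```python
GB_PER_TB = 1024  # assumption: binary terabytes


def capacity_tb(dcms: int, omi_ports_per_dcm: int, dimm_gb: int) -> float:
    """Total memory in TB, assuming one memory stick per active OMI port."""
    return dcms * omi_ports_per_dcm * dimm_gb / GB_PER_TB


if __name__ == "__main__":
    # Hypothetical configurations: 2 or 4 DCMs, 8 or 16 active OMI ports
    # per DCM, and the 128 GB and 256 GB stick sizes mentioned in the text.
    for dcms in (2, 4):
        for ports in (8, 16):
            for dimm_gb in (128, 256):
                total = capacity_tb(dcms, ports, dimm_gb)
                print(f"{dcms} DCMs x {ports} ports x {dimm_gb} GB = {total:g} TB")
```

Under those assumptions, going from eight to 16 active ports per DCM with 128 GB sticks matches the capacity of eight ports with 256 GB sticks, and 256 GB sticks on all 16 ports come to 8 TB with two DCMs and 16 TB with four, which is one way to arrive at the figures above.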

We wonder if IBM will do such a thing, and also ponder what such a cluster of memory-less server nodes talking to a centralized memory node might do with SAP HANA, Spark, data analytics, and other memory intensive work like genomics. The Power10 chip has a 2 PB upper memory limit, and that is the only cap on where this might go.

There is another neat thing IBM could do here, too. Imagine if the Power10 compute chip in a DCM had no I/O at all but just lots of memory attached to it and the secondary Power10 chip had only a few cores and all of the I/O of the complex. That would, in effect, make the second Power10 chip a DPU for the first one.

The engineers at IBM are clearly thinking outside of the box; it will be interesting to see if the product managers and marketeers do so.

Always nice to see “out-of-order” ideas, or as you said “thinking outside of the box”, especially after recently having read and watched some articles and videos about the PRISM/Alpha development at DEC, in particular about the spirit at DEC during the Olsen era.

That’s the reason I like the Russian Elbrus CPU, and it would be worth a deeper look from an expert, because they stuck to VLIW, just doing their thing, and are Spectre-proof (afaik the highest performing Spectre-proof CPU in the world). Okay, they are not as fast as other chips (but at least a 16-core version is finished, and a 32-core one is to come), but what good is any fast CPU when it always has to catch up with newly found “Spectr-ations”? A bad feeling always remains.

Sometimes I think even Intel has nightmares because of driving Itanium/Itanic against the iceberg, all just because of performance and the holy money…and having done this with afaik smart Boris Babajan and his team on board…

…well, 1 Timothy 6:10: “For the love of money is a root of all evil…”

…I think security is still totally underrated, and the Elbrus seems to have some special architectural additions (see https://russianscdays.org/files/talks/VVolkonsky-RSCDays-2015.pdf).

I think this was necessary to support some of their business plans to ship pre-populated hardware to customers. To support some of the desired I/O configurations for High Availability, you need more slots populated than a single CPU can practically support (the configuration I am thinking of here is dual redundant VIO servers). To ship common configs so that capacity on demand can be enabled the way customers want it, lots of I/O is desired, and this I/O chip design helps to facilitate these implementations.

Spectre is a side-effect of speculative execution (at least as implemented in current CPUs) — if ELBRUS doesn’t have speculative execution, then it is Spectre-proof, but it is also a low-performance CPU (by today’s standards). Cloud systems, social media and banks should turn speculative execution off for security between virtualized workloads. HPC, on the other hand, should keep it turned on for maximum performance.

You would also have to turn off hyperthreading.

How and where can you completely disable speculative execution, in a Spectre-safe way, in any BIOS or UEFI?

What are high performance CPUs with hyperthreading and speculative execution turned off – what is a Porsche Turbo with the Turbo disabled?

Are all these HPC systems with thousands of cores always used only by one customer at a time, so that there is no chance of stealing info of the other company/university/… currently testing or doing any simulation on e.g. aerodynamics, which the other user is also interested in?