For more than a decade, the pace of the server market was set by the rollout of Intel’s Xeon processors each year. To be sure, Intel did not always roll out new chips like clockwork, on a predictable and more or less annual cadence as the big datacenter operators like. But there was a steady drumbeat of Xeon CPUs that were coming out the fab doors until Intel’s continual pushing out of its 10 nanometer chip manufacturing processes caused all kinds of tears in the Xeon roadmap, finally giving others a chance to get a toehold in datacenter compute on CPUs.

As we look ahead into 2022, the datacenter compute landscape is considerably richer than it was a decade ago. And not just because AMD is back in the game, creating competitive CPUs and GPUs and will by the end of the first quarter acquire FPGA maker Xilinx if all goes well. (That $35 billion deal, announced in October 2020, was expected to close by the end of 2021, but was pushed out as antitrust regulators are still scrutinizing the details.) Now, for those of you who have been around the datacenter for decades, the diversity we are looking at is nothing like what we knew in the distant past when system makers owned their entire hardware and software stacks and developed everything from the CPUs on up to operating systems, databases, and file systems. Back in the day in the late 1980s, there were around two dozen different commercially viable CPUs and probably three dozen operating systems on top of them in the datacenter. (Those were the days. . . . ) It looked for a while like we might end up with an Intel Xeon monoculture in the datacenter, but for myriad reasons – namely that customers like choice and competitors chase profits to get a piece of them – that obviously has not happened. Which sure makes datacenter compute a whole lot more interesting.

So do the increasing heterogeneity of compute inside of a system and the sufficiently wide variety of suppliers and architectures that compete for work in the glass house full of such systems.

This year, albeit a bit later than it had hoped, Intel will be launching the “Ponte Vecchio” Xe HPC GPU, its first datacenter GPU aimed at big compute and the replacement for the many-core “Knights” family of accelerators that debuted in 2015 and were sunsetted three years later. AMD has launched its “Aldebaran” GPU engine in the Instinct MI200 family of accelerators, which absolutely are a credible alternative to Nvidia’s “Ampere” GA100 GPUs and the A100 accelerators that use them and which are getting a little long in the tooth, having been launched in May 2020. (Don’t worry, Nvidia will fix this soon.) And to make things interesting, Nvidia is working on its own “Grace” Arm server CPU, although we won’t see that come into the market until 2023. So that is all we are going to say about Grace as we look ahead into just 2022. The point is, the big three datacenter compute vendors – Intel, AMD, and Nvidia – will all have CPUs and GPUs in the field at the same time in a little more than a year and Intel and AMD will have datacenter CPUs, GPUs, and FPGAs in the field this year.

Nvidia does not believe in FPGAs as compute engines, so don’t knee-jerk react and think Nvidia will go out and buy FPGA maker Achronix after its SPAC initial public offering was canceled last July or buy Lattice Semiconductor, the other FPGA maker that matters. It ain’t gonna happen.

But a lot is going to happen this year in datacenter compute, and below are just the highlights. Let’s start with the CPUs:

Intel “Sapphire Rapids” Xeon SP: The much-anticipated kicker 10 nanometer Xeon server chip, and one that is based on a chiplet architecture. Sapphire Rapids, unlike its “Ice Lake” and “Cooper Lake” predecessors, will comprise a full product line, spanning from one to eight sockets gluelessly. (Ice Lake was limited to one and two sockets and Cooper Lake was limited to four and eight sockets because the crumpled up roadmap made them overlap.) Sapphire Rapids will have up to 56 cores per socket in a maximum of 350 watts if the rumors are right. Sapphire Rapids will sport DDR5 memory and PCI-Express 5.0 peripherals, including support for the CXL interconnect protocol, and is said to support up to 64 GB of HBM2e memory and 1 TB/sec of bandwidth per socket for those HPC and AI workloads that need it. The chip is rumored to support up to 80 lanes of PCI-Express 5.0, so it will not be starved for I/O bandwidth as prior Xeon SPs, such as the “Skylake” and “Cascade Lake” generations, were. The third generation “Crow Pass” Optane 300 series persistent memory will also be supported on the “Eagle Stream” systems that support the Sapphire Rapids processors.
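To put the rumored 80 lanes in perspective, here is a back-of-the-envelope sketch in Python. It assumes the published PCI-Express 5.0 signaling rate of 32 GT/sec per lane with 128b/130b encoding and ignores packet and protocol overhead. The lane count is only a rumor, and the function and constant names are ours, not Intel's.

```python
# Rough PCI-Express bandwidth estimate; ignores packet and protocol overhead.
PCIE5_GT_PER_SEC = 32.0          # raw gigatransfers per second per lane (PCIe 5.0)
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie_bandwidth_gb_per_sec(lanes, gt_per_sec=PCIE5_GT_PER_SEC):
    """Approximate one-direction bandwidth in GB/sec for a given lane count."""
    gigabits_per_sec = lanes * gt_per_sec * ENCODING_EFFICIENCY
    return gigabits_per_sec / 8  # eight bits per byte


if __name__ == "__main__":
    rumored_lanes = 80  # rumored Sapphire Rapids lane count, not confirmed
    bandwidth = pcie_bandwidth_gb_per_sec(rumored_lanes)
    print(f"{rumored_lanes} lanes: about {bandwidth:.0f} GB/sec per direction")
```

Under those assumptions, 80 lanes works out to roughly 315 GB/sec in each direction, which is a theoretical ceiling and not what any real device will sustain.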

AMD “Genoa” and “Bergamo” Epyc 7004: While Intel is moving to second-generation 10 nanometer processes for Sapphire Rapids, AMD will be bounding ahead this year to the 5 nanometer processes from Taiwan Semiconductor Manufacturing Co with its “Genoa” and “Bergamo” Epyc 7004 CPUs based on the respective Zen 4 and Zen 4c cores, which AMD unveiled with very little data back in November while also pushing out the “Milan-X” Epyc 7003 chips with stacked L3 cache memory. The Genoa Epyc 7004 is coming out in 2022, timed, we think, with whenever AMD thinks Intel can get Sapphire Rapids out, sporting 96 cores and support for DDR5 memory and PCI-Express 5.0 peripherals. It looks like AMD wanted to get the 128 core Bergamo variant of the Epyc 7004 out the door in 2022, but is only promising it will be launched in 2023. We think that depending on yield and demand, AMD could try to ship Bergamo before its official launch to a few hyperscalers this year. We shall see.

Ampere Computing “Siryn” and probably not branded Altra: The company has been ramping up sales of its 80 core “Quicksilver” Altra and 128 core “Mystique” Altra Max processors throughout 2021, both based on the Arm Holdings Neoverse N1 cores and both etched using 7 nanometer processes from TSMC. This year sees the rollout of the Siryn CPUs, which are based on a homegrown core, which we have been calling the A1, that has been under development for years by Ampere Computing, as well as a shift to 5 nanometer TSMC manufacturing. It will be interesting to see if the A1 cores go wide, as Amazon Web Services has done with its Graviton3 processors (which are based on the Arm Holdings Neoverse V1 cores), or if Ampere Computing will use a more minimalist design and pump up the core count. As we wrote back in May last year, we think that the Siryn chips will sport 192 of the A1 cores, which will be stripped down to the bare essentials that the hyperscalers and cloud builders need, and we think further that the kicker to Siryn, due in 2023, will have up to 256 cores based on either a tweaked A1 core or a brand new A2 core. The Siryn chips will almost certainly support DDR5 memory and PCI-Express 5.0 peripherals, and very likely will also support the CXL interconnect protocol for accelerators. We do not expect Ampere Computing ever to add simultaneous multithreading (SMT) to its cores, as a few failed Arm server chip suppliers did; AWS does not use SMT in its Graviton line, either.

IBM “Cirrus” Power10: Big Blue claims to not have a codename for its Power10 chip, so last year we christened it “Cirrus” because we have no patience for vendors who don’t give us synonyms. The 16 core Cirrus chip, which we detailed back in August 2020, debuted in September 2021 in the “Denali” 16 socket Power E1080 servers. The Power E1080 has a Power10 chip with eight threads per core using SMT and 15 of the 16 cores are activated in each chip and IBM also has the capability to have two Power10 chips share a single socket. But with the lower-end Power10 chips coming this year, IBM has the capability to cut the cores in half to deliver twice as many cores with half as many threads – a capability that was also available in the low-end “Nimbus” Power9 chips, by the way. Anyway, IBM will be able to have up to 30 active SMT8 cores and up to 60 active SMT4 cores in a single socket using dual chip modules (DCMs), and has native matrix and vector units in each core to accelerate HPC and AI workloads to boot. The Power10 core has eight 256-bit vector math engines that support FP64, FP32, FP16, and Bfloat16 operations and four 512-bit matrix math engines that support INT4, INT8, and INT16 operations; these units can accumulate operations in FP64, FP32, and INT32 modes. IBM has a very tightly coupled four-socket, DCM-based Power E1050 system (we don’t know its code name as yet) which will have very high performance and very large main memory, as well as the “memory inception” memory area networking capability that is built into the Power10 architecture and that allows for machines to share each other’s memory as if it is local using existing NUMA links coming off the servers.
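The core and thread counts above are easier to follow as arithmetic. The sketch below is ours and uses only figures from the text: 15 active SMT8 cores per chip, two chips per dual chip module, and each SMT8 core splitting into two SMT4 cores. It assumes the lower-end DCM parts activate the same 15 cores per chip as the Power E1080 does; the names are hypothetical.

```python
# Core and thread arithmetic for a Power10 DCM socket, using figures from the text.
ACTIVE_SMT8_CORES_PER_CHIP = 15  # 15 of the 16 cores are activated (assumed for DCMs too)
CHIPS_PER_DCM = 2                # dual chip module: two chips share one socket


def socket_config(smt_mode):
    """Return (cores, threads) per DCM socket for SMT8 or SMT4 mode."""
    if smt_mode not in (4, 8):
        raise ValueError("only SMT8 and SMT4 modes are described in the text")
    smt8_cores = ACTIVE_SMT8_CORES_PER_CHIP * CHIPS_PER_DCM
    # Cutting an SMT8 core in half yields two SMT4 cores.
    cores = smt8_cores * (8 // smt_mode)
    return cores, cores * smt_mode


if __name__ == "__main__":
    for mode in (8, 4):
        cores, threads = socket_config(mode)
        print(f"SMT{mode}: {cores} cores, {threads} threads per socket")
```

Either way the socket presents 240 hardware threads; the SMT4 mode simply carves them into 60 smaller cores instead of 30 larger ones, which can matter for software priced or scheduled per core.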

IBM “Telum” z16: The next generation processor for IBM’s System z mainframes, the z16, which we talked about back in August 2021, is architecturally interesting and is certainly big iron, but it is probably not the next platform for anyone but existing IBM mainframe shops. The Telum chip is interesting in that it has only eight cores, but they run at a base 5 GHz clock speed. The z16 core supports only SMT2 and has a very wide and deep pipeline, and it also has its AI acceleration outside of the cores but accessible using native functions that will allow for inference to be relatively easily added to existing mainframe applications without any kind of offload.

It would be great if the rumored Microsoft/Marvell partnership yielded another homegrown Arm server chip, and it would further be great if late in 2022 AWS put out a kicker Graviton4 chip just to keep everyone on their toes. And of course, we would have loved it if Nvidia’s Grace Arm CPU, which will have fast and native NVLink ports to connect coherently to Nvidia GPUs and more than 500 GB/sec of memory bandwidth per socket, had come out in 2022.

Now, let’s talk about the GPU engines coming in 2022.

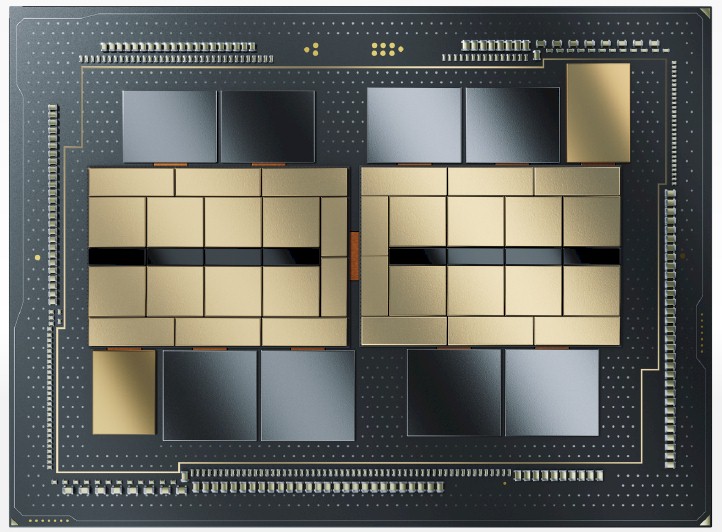

Nvidia “Hopper” or A100 Next: There is plenty of confusion about codenames for Nvidia GPUs, but we think Nvidia’s kicker to the GA100 GPU, called A100 Next on the roadmaps and codenamed “Hopper” and the GH100 in the chatter, will be announced at the GPU Technology Conference in March of this year. Very little is known about the GH100, but we expect it to be etched in a 5 nanometer process from TSMC and we also expect Nvidia to create its first chiplet design and to put two GPU chiplets into a single package, much as AMD has just done with the “Aldebaran” GPUs used in the Instinct MI200 series accelerators. With AMD delivering 47.9 teraflops of double precision FP64 performance in the Aldebaran GPU and Intel expected to offer in excess of 45 teraflops of FP64 performance in the “Ponte Vecchio” GPU coming this year, it will be interesting to see if Nvidia jacks up the FP64 performance on the Hopper GPU complex.

AMD “Aldebaran” Instinct MI200 Cutdown: AMD has doubled up the GPU capacity with two chiplets in a DCM for the Instinct MI200 devices, so why not create a GPU accelerator with a smaller form factor, a lower thermal design point, and a much cheaper price point per unit of performance by only putting one chiplet in the package? No one is talking about this, but it is a possibility. It could take on the existing Nvidia A100 very nicely.

Intel “Ponte Vecchio” Xe HPC: Intel will finally get a datacenter GPU accelerator into the field, but at a rumored 600 watts to 650 watts, the performance that Intel is going to bring to bear is going to come at a relatively high thermal cost if these numbers are correct. We profiled the first generation Xe HPC GPU back in August 2021, which is a beast that has 47 different chiplets interconnected with Intel’s 2D EMIB chiplet interconnect and Foveros 3D chip stacking. With the vector engines clocking at 1.37 GHz, the Ponte Vecchio GPU complex delivers 45 teraflops at FP64 or FP32 precision and its matrix engines deliver 360 teraflops at TF32, 720 teraflops at BF16, and 1,440 teraops at INT8. This may be a hot GPU, but it is a muscle car. This is a lot more matrix performance than AMD is delivering with Aldebaran – 1.9X at BF16 and 3.8X at FP32 and INT8.
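The ratios quoted above can be checked with a few lines of Python. The Ponte Vecchio figures come from the text. The Aldebaran figures are not in this article; we are assuming AMD's published peak matrix rates for the Instinct MI250X (95.7 teraflops at FP32, 383 teraflops at BF16, and 383 teraops at INT8). We are also assuming the FP32 comparison pairs Intel's TF32 rate with AMD's FP32 matrix rate, since those are the closest matching formats.

```python
# Peak matrix throughput in teraflops (teraops for INT8).
PONTE_VECCHIO = {"TF32": 360.0, "BF16": 720.0, "INT8": 1440.0}  # from the text
# Assumption: AMD's published Instinct MI250X peak matrix figures.
ALDEBARAN = {"FP32": 95.7, "BF16": 383.0, "INT8": 383.0}

# Assumption: Intel's TF32 rate is compared against AMD's FP32 matrix rate.
FORMAT_PAIRS = [("TF32", "FP32"), ("BF16", "BF16"), ("INT8", "INT8")]


def speedup(intel_format, amd_format):
    """Ratio of Ponte Vecchio peak throughput to Aldebaran peak throughput."""
    return PONTE_VECCHIO[intel_format] / ALDEBARAN[amd_format]


if __name__ == "__main__":
    for intel_format, amd_format in FORMAT_PAIRS:
        ratio = speedup(intel_format, amd_format)
        print(f"{intel_format} vs {amd_format}: {ratio:.1f}X")
```

These are peak, vendor-stated numbers on both sides, so the ratios say nothing about delivered performance on real workloads or about performance per watt.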

And finally, that brings us to FPGAs. There has not been a lot of activity here, and frankly, we are not sure where Xilinx is on the rollout of its “Everest” Versal FPGA compute complexes, which have a chiplet architecture, and where Intel is at with the rollout of its Agilex FPGA compute complexes, which use a chiplet architecture and EMIB interconnects, and its successor devices (presumably also branded Agilex), which will use a combination of EMIB and Foveros just like the Ponte Vecchio GPU complex does. We need to do more digging here.

As for AI training and inference engines, which are also possibly an important part of the future of datacenter compute, that is a story for another day. There is a lot of noise here, and some traction and action, but these are nowhere near the datacenter mainstream.