Starting way back in the late 1980s, when Sun Microsystems was on the rise in the datacenter and Hewlett Packard was its main rival in Unix-based systems, market forces compelled IBM to finally and forcefully field its own open systems machines to combat Sun, HP, and others behind the Unix movement. At the time IBM was the main supplier of proprietary systems — completely vertically integrated stacks, from down into the CPU all the way to the application development tools and runtimes — and the rise of Unix was having a detrimental effect on sales of these machines.

Here we are, more than three decades later, and IBM represents around half of the revenues outside of the x86 server market. That relatively big piece of the non-x86 server market comes despite the rise of single-socket machines based on Arm server chips at selected hyperscalers and cloud builders, which are eating into x86 server growth but which are also making the non-x86 piece of the pie rise more than it has in about a decade. Sun and HP have long since left the RISC/Unix server battlefield (about a half decade ago if you want to be generous), and AMD has no interest whatsoever in building four-socket, eight-socket, or larger machines based on its Epyc architecture. Quite the opposite. AMD is the poster child for the single-socket server, and has made that a centerpiece of its strategy since the “Naples” Epyc 7001 CPUs, the company’s re-entry into the server market, launched four years ago. Google has caught the religion with its Tau instances on its eponymous public cloud, but that is mainly to combat the single-socket Graviton2 instances at Amazon Web Services.

While machines with two or fewer sockets are expected to dominate shipments in the server racket for the foreseeable future, and will continue to dominate the revenue stream, there is still a healthy appetite for machines with four or more sockets, and these machines drive a very considerable amount of revenue and probably the vast majority of whatever profits there are in the server business.

The reason is simple: If you have a big job that needs big memory and a lot of cores and threads to perform, then a big NUMA server is the easiest way to accomplish this for most enterprise IT organizations. These NUMA machines look and feel like a gargantuan workstation and are relatively easy to program and scale, at least compared to distributed computing clusters that have a much looser linkage between compute and memory.

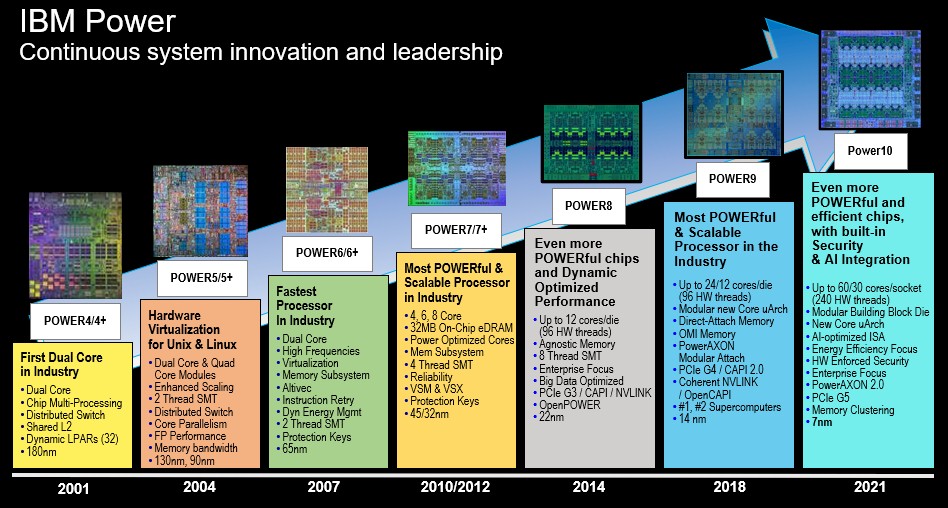

IBM reminded its customers and partners of the revenue stream for big iron as part of its briefings for the “Denali” Power E1080 server launched two weeks ago, the first and biggest of the servers that Big Blue is expected to roll out this year and next based on the Power10 processor that has been in development for more than three years. As has been the case with prior Power chips since the Power4 was launched in 2001, IBM focuses on making a brawny core that can do a lot of work, and then scales the machine out with dozens of sockets.

What this chart does not show is the socket scalability over time. Back in 1997, the RS/6000 Unix line and the AS/400 proprietary line were both based on the “Northstar” 64-bit PowerPC processor and could scale to eight or 16 sockets, and IBM also sold machines with the prior generation of “Apache” PowerPC processors that could scale to eight sockets. In 2000, the single-core “I-Star” PowerPC chips debuted, and IBM increased the top-end scale by 50 percent, from 16 to 24 sockets. In early 2001, IBM put its single-core “S-Star” PowerPC chips into essentially the same machines with a 20 percent clock boost and a bunch of microarchitecture features.

In late 2001, IBM launched the Power4 processor, the first dual-core chip and the first one to break the 1GHz barrier in the server racket, and Big Blue started smacking Sun and HP around, and increasingly so over the next decade and a half. So much so that they abandoned the Unix market, adopted Intel Xeon processors, and embraced Linux. In any event, with Power4 chips, IBM scaled the chassis back to a mere eight sockets using NUMA clustering, but could still drive overall scale up by 33 percent over the S-Star machines because of improvements in the NUMA interconnect and having two cores per socket.

With Power5, IBM rebranded the AS/400 line iSeries and the RS/6000 Unix line pSeries, and the core count remained at two per chip while the socket counts stayed the same. With Power5+, IBM created its first dual-chip module (DCM), which put two whole processors into a single socket and doubled the core count for the systems, and added two threads per core with simultaneous multithreading (SMT). But because clock speeds were lower, this only drove system throughput up by around 60 percent or so. Although this roadmap doesn’t show it, IBM had DCMs with the Power6+ chip, too. But again, because of core microarchitecture and NUMA enhancements, IBM could get more work done with fewer cores with Power5 and then Power6 machines, and then add DCMs at the half-step between generations to make a nice, smooth performance increase curve.

The Power5 high-end machines laid the foundation for the multi-socket node, four-node system designs that are still used to this day for big iron at IBM, including the Power E1080 machine. (IBM did have big, bad 32-socket NUMA machines, using Power6 chips in the Power 595 and Power7 chips in the Power 795, similar to the 24-socket I-Star and S-Star machines and using its backplane and book packaging technologies. But the Power 795 was the last such machine that IBM has done in this fashion. They have been multinode machines since that time, and that is because the NUMA interconnect optical links have improved to the point that you don’t need a backplane, which costs money to build.)

With the enterprise-class Power 770 and Power 780 machines based on the Power7 and then Power7+ processors, IBM moved from two-socket nodes to four-socket nodes, and delivered 16-socket systems that had half the raw scale of the 32-socket Power 795, but cost less to manufacture because they used the four-socket NUMA server as their core component. This approach has not changed and will not change in the future, we think. Queuing theory and communication overhead make it very difficult to interlink more than 16 sockets to each other in an efficient manner. If you move to 32 or 64 sockets, the extra performance is never realized because the cores end up waiting for memory and I/O accesses across the NUMA cluster. Or you end up partitioning the machine, and at that point, you may as well not pay for the NUMA electronics overhead and just buy smaller, cheaper physical machines. There are diminishing marginal returns with NUMA, and they diminish fast. It is better to make the cores inside the socket more and more tightly coupled and then make each socket more and more tightly coupled.
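To get a rough feel for why the returns diminish, here is a toy Python sketch. It is not a model of IBM's actual interconnect, which is hierarchical (tight links inside a node, looser links between nodes). It assumes, purely for illustration, that every socket has a direct link to every other socket and that memory accesses are spread uniformly across all sockets. The function names `direct_links` and `remote_fraction` are made up for this example.

```python
def direct_links(sockets: int) -> int:
    """Point-to-point links needed if every socket connects to every other.

    Assumption: a full all-to-all mesh, which real machines avoid at scale.
    """
    return sockets * (sockets - 1) // 2


def remote_fraction(sockets: int) -> float:
    """Share of memory accesses that leave the local socket.

    Assumption: data is spread uniformly, so only 1/sockets of accesses are local.
    """
    return (sockets - 1) / sockets


if __name__ == "__main__":
    for n in (4, 8, 16, 32, 64):
        print(f"{n:>2} sockets: {direct_links(n):>4} links, "
              f"{remote_fraction(n):.1%} of accesses remote")
```

Under these assumptions, doubling the socket count roughly quadruples the number of links while the share of local memory accesses keeps shrinking, which is the intuition behind keeping the coupling tight inside a socket and a node rather than adding more sockets.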

The upshot, over the past two decades, is that IBM has been able to increase the top-end NUMA system throughput by a factor of 112X between a 16-core iSeries 890 or pSeries 690 (with an rPerf relative performance rating from IBM of 71.44 across the system) and a 240-core Power E1080 (rated at 7,998.6 rPerf by Big Blue).
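The arithmetic behind that factor is easy to check. The short Python sketch below uses only the rPerf ratings and core counts quoted above. The 20-year span is an assumption (taking "two decades" literally), and the helper name `growth_summary` is made up for this example.

```python
# rPerf ratings and core counts as quoted in the article.
OLD_RPERF, OLD_CORES = 71.44, 16      # iSeries 890 / pSeries 690
NEW_RPERF, NEW_CORES = 7998.6, 240    # Power E1080
YEARS = 20  # assumption: "two decades" taken as exactly 20 years


def growth_summary(old_rperf, old_cores, new_rperf, new_cores, years):
    """Split the system-level rPerf gain into core-count and per-core parts."""
    system_factor = new_rperf / old_rperf          # whole-system throughput gain
    core_factor = new_cores / old_cores            # gain from having more cores
    per_core_factor = system_factor / core_factor  # gain from each core doing more
    annual_rate = system_factor ** (1 / years) - 1 # compound annual growth rate
    return system_factor, core_factor, per_core_factor, annual_rate


if __name__ == "__main__":
    system, cores, per_core, annual = growth_summary(
        OLD_RPERF, OLD_CORES, NEW_RPERF, NEW_CORES, YEARS
    )
    print(f"system throughput: {system:.1f}X")
    print(f"core count:        {cores:.1f}X")
    print(f"per-core rPerf:    {per_core:.2f}X")
    print(f"compound growth:   {annual:.1%} per year over {YEARS} years")
```

On these numbers the system-level gain rounds to 112X, of which 15X comes from the core count and roughly 7.5X from each core doing more work. Spread over an assumed 20 years, that is compound growth of somewhere between 26 and 27 percent a year.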

That consistent, predictable, and dependable increase in NUMA scale is why IBM has around 14,000 Power-based machines that have four sockets or more in the field. (To be precise, IBM told business partners that it has over 10,000 customers using its “enterprise, scale-up servers” based on Power7, Power7+, and Power8 processors, and we are estimating there are another 4,000 customers around the world with Power Systems iron with more than four sockets based on Power9 processors.) This may not sound like a lot of customers or machines (a lot of customers have at least two systems with high availability clustering across the boxes), but such machines are expensive, from hundreds of thousands of dollars to tens of millions of dollars depending on the configuration, and the money sure does mount up.

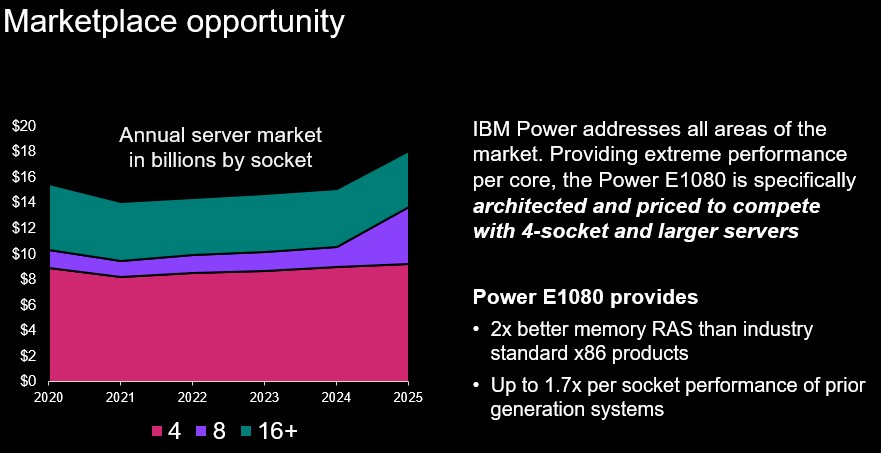

Here is how IBM is forecasting the market, in fact, for machines with more than four-sockets:

Because of the processor transitions in IBM’s own System z and Power Systems lines, it would not be surprising to see a dip in big NUMA server sales from 2020 to 2021, but as you can see in the chart above, a lot of the dip was from diminished sales of four-socket boxes, which is interesting. This could be a stall in demand or a more aggressive pricing environment as Intel peddles “Skylake” and “Cascade Lake” and “Cooper Lake” Xeon SP systems against Power9 iron — especially in China for various workloads and especially for supporting SAP HANA in-memory databases and their application stacks.

What is also interesting is that IBM thinks the market will be remarkably steady and growing in the coming years, and in 2025 sales of eight-socket machines are forecast to balloon. IBM is telling resellers and other business partners that it wants to have a 50 percent share of the high-end market (where machines cost in excess of $250,000) and a 10 percent share of the midrange market (where machines cost between $25,000 and $250,000). The Power E1080 will address the high end, and the future Power E1050, which is due sometime in the second quarter next year, will address the midrange.

What is truly surprising is that IBM does not have a more aggressive stance on the four-socket market, given that it represents half the opportunity and the bulk of server shipments in this upper echelon of the NUMA system space. It may just be a matter of coverage and profitability. HPE is going to protect its turf very aggressively, including its sales of 16-socket Superdome Flex 280 machines running Linux. Dell does not have much of an appetite for eight-socket Xeon SP machines, but it is very aggressive with four-socket boxes, and so are Lenovo, HPE, Inspur, and Cisco Systems. Ultimately, market share begets market share. Sun and HP customers used to RISC/Unix machines moved over to IBM because they could get a familiar big iron experience. Customers used to HPE and Dell four-socket boxes will sometimes consider Lenovo, Inspur, and Cisco gear, but generally they bounce between the two largest OEMs in the world, playing them off each other.

A lot depends on what two-socket infrastructure servers companies are used to buying and, funnily enough, what baseboard management controller (BMC) they have invested their time and money into learning how to use. Remember, enterprise servers are pets, generally running one workload per physical machine, and the BMC is — we kid you not — the single biggest determinant of what servers companies will buy. If there were a standard BMC, as the Open Compute crowd is trying to cobble together, all hell could break loose in the enterprise datacenter.

Which could be fun, indeed.

Timothy, there are two types of Superdome Flex machines: the 8-socket “Cooper Lake”-based Superdome Flex 280 with no node controllers, and the “classic” 32-socket Superdome Flex, based on “Cascade Lake” and the NUMA-Flex node controller inherited from SGI (now called the Superdome Flex ASIC). Thanks to the SGI NUMA-Flex architecture, this machine also scales to 64 sockets (on demand, for some government customers). SDF280 whitepaper: https://www.hpe.com/psnow/doc/a50003250enw, SDF whitepaper: https://www.hpe.com/psnow/doc/a00036491enw