The Edinburgh Parallel Computing Centre (EPCC) is up and running with its Cerebras CS-1 waferscale system. The center is already working with European companies in the biomedical and cybersecurity arenas, in addition to its own research into programming and AI models and its projects in natural language processing and genome-wide association studies.

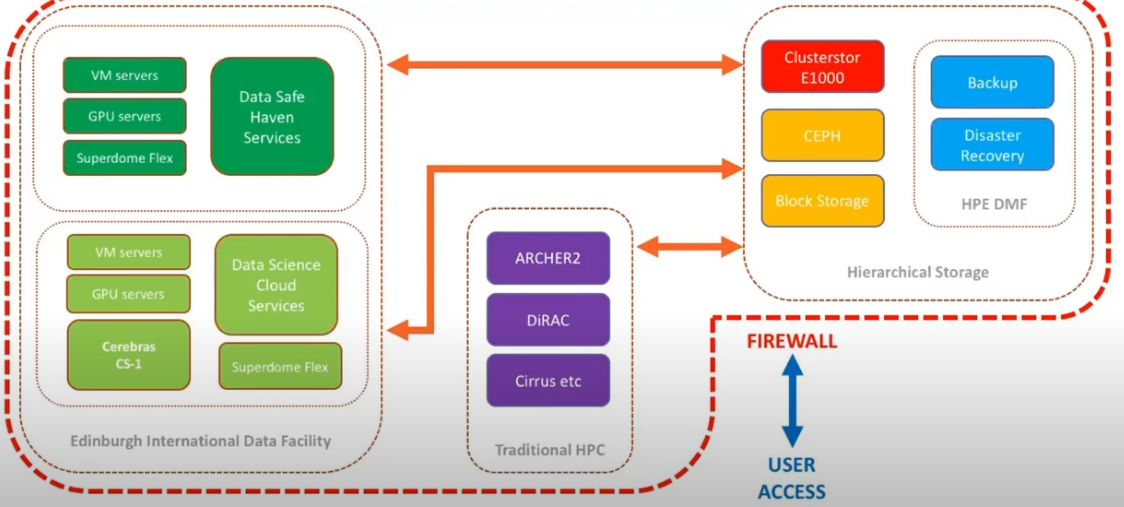

EPCC director Mark Parsons says that while the center has traditionally focused on supercomputing modeling and simulation, the last five years have pushed it into the data science arena. That shift spurred the creation of a new facility, the Edinburgh International Data Facility, where the CS-1 is housed alongside an HPE SuperDome Flex system.

The CS-1 arrived in March and was ready for its first workloads in May. Parsons says EPCC chose the SuperDome Flex because it serves as a “stepping stone between the massively parallel supercomputing world and that of virtualized data science.”

That push and pull between the requirements of traditional HPC and emerging data science and AI/ML is also part of what made the center look to Cerebras. “The problem on the supercomputing side is that the system constrains the software, and on the other end, the data science side runs out of horsepower for demanding users,” Parsons says. “That’s what we were trying to solve with the CS-1.”

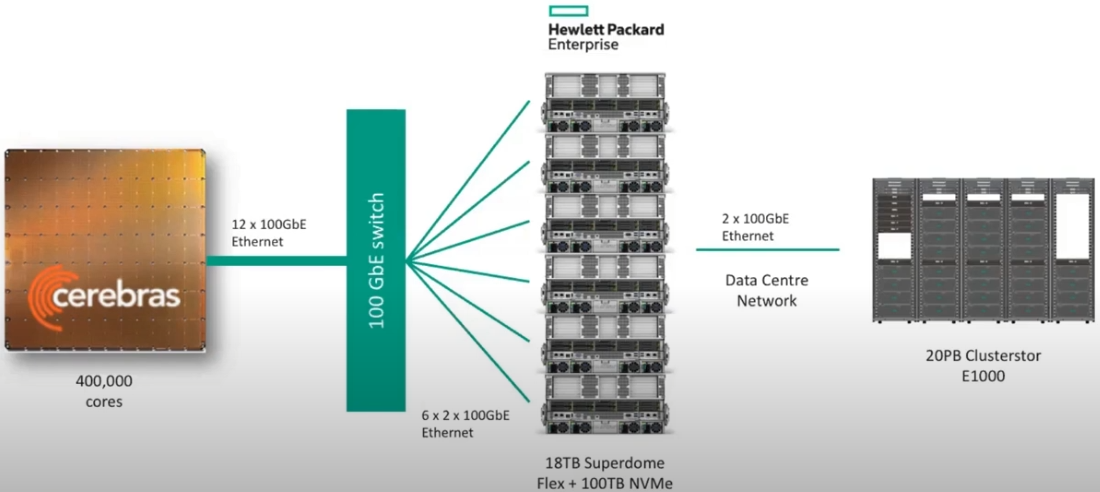

EPCC’s setup is similar to the one the Pittsburgh Supercomputing Center uses with its own CS-1. EPCC has a large ClusterStor system plugged into the network, which talks to the SuperDome Flex system (18TB of main memory and 100TB of NVMe, dedicated to the Cerebras system).

“AI has transformative potential to change how we process data and make decisions, but it’s fundamentally constrained by today’s computing infrastructure. The primary symptom is that models take too long to train. It’s not uncommon in NLP for training on real-world datasets to take days or weeks, even on large clusters of GPUs,” says Andy Hock, Cerebras VP of Product.

“Existing machines were not built for this work,” Hock adds. “CPUs and GPUs are suitable, but they’re not optimal: at scale, they can be under-utilized, inefficient, and difficult to program.”

Recall that Cerebras has announced its CS-2 system, which roughly doubles compute and memory, although it was not released in time for EPCC’s new center. The CS-2 moves from around 400,000 cores to 850,000, raises on-chip memory from 18GB to 40GB, and increases memory bandwidth from 9PB/s to 20PB/s, with more than double the fabric bandwidth. As Cerebras co-founder and CEO Andrew Feldman tells us, all of this translates into a system with a redesigned chassis and power and cooling tweaks that is roughly 20% to 25% more expensive than its predecessor.
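To put those generational figures in perspective, the short sketch below computes the CS-1 to CS-2 ratio for each spec quoted above and divides it by the quoted 20% to 25% price increase. It is a back-of-the-envelope illustration only: the dictionary and function names are hypothetical, the spec values are the approximate figures given in this article, and it assumes (simplistically) that useful capacity scales linearly with each spec and that cost scales only with list price.

```python
# Approximate CS-1 and CS-2 figures as quoted in the article.
# Assumption: these are vendor-reported headline numbers, not measured performance.
CS1 = {"cores": 400_000, "on_chip_memory_gb": 18, "memory_bandwidth_pb_s": 9}
CS2 = {"cores": 850_000, "on_chip_memory_gb": 40, "memory_bandwidth_pb_s": 20}


def generational_ratios(old, new):
    """Return new/old for every spec present in `old` (hypothetical helper)."""
    return {spec: new[spec] / old[spec] for spec in old}


def per_dollar_gain(ratio, price_increase):
    """Spec improvement per unit cost, assuming price rises by (1 + price_increase)
    and capacity scales linearly with the spec (a simplifying assumption)."""
    return ratio / (1 + price_increase)


if __name__ == "__main__":
    for spec, ratio in generational_ratios(CS1, CS2).items():
        # Feldman's quoted range: roughly 20% to 25% more expensive.
        worst = per_dollar_gain(ratio, 0.25)
        best = per_dollar_gain(ratio, 0.20)
        print(f"{spec}: {ratio:.2f}x raw, {worst:.2f}x to {best:.2f}x per dollar")
```

Under these assumptions every spec grows by a little more than 2x while the price grows by about a quarter, so each one still improves by roughly 1.7x to 1.85x per dollar. Real workloads will not scale this cleanly.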

Several companies and institutions have figured out the data pipeline for their CS-1s, and several more are lined up for the CS-2, Feldman says. Cerebras has CS-1s at Argonne, LLNL, and Edinburgh, and a system is now doing drug discovery work at GlaxoSmithKline.
