It is common knowledge in the manufacturing sector of the economy that many of the companies that should have deployed HPC simulation and modeling applications one or two decades ago to help with product design, among other tasks, did not do so. With the lowering of costs of HPC clusters based on X86 processors and the advent of open source software to perform many tasks, this seemed counterintuitive. Perplexing. And very annoying to those who were trying to address “the missing middle,” as it came to be called.

There are a lot of theories about what went wrong here, but at the very least, even with the lowering of the cost of HPC wares, buying a cluster, managing it, and keeping it busy enough to justify the substantial investment was tough to rationalize, no matter the potential returns. Then there was the difficulty of sorting out how to make an HPC workflow, consisting of multiple applications hooked together, work well when existing systems – designing with scientific workstations and easier to use CAD/CAM tools – did the job. And even with the advent of HPC in the public cloud, HPC machines might be easier to consume, but no one would call them inexpensive.

No one wants a missing middle with machine learning in the enterprise, which is a much broader market to be sure, and that is going to mean democratizing AI as vendors like Dell, Hewlett Packard Enterprise, Lenovo, IBM, and others have been trying to do for years in the HPC space. The good news now is that HPC and AI applications can usually run on the same iron, which allows investments to be amortized over more – and more diverse – workloads. The breadth of the market – everyone has exploding datasets as they hoard data in the hopes of transmuting it into valuable nuggets of information – also helps, because at the very least all companies can potentially use AI, whereas not every company can use traditional HPC simulation and modeling.

Dell was founded with the idea of making a decent X86 PC available for a reasonable price, and when Michael Dell formally entered the datacenter in November 1994 with the first PowerEdge machines, the idea was to bring that same commodity X86 philosophy to the glasshouse. And Dell, as much as any other vendor, pushed the X86 agenda hard and benefitted greatly from this, becoming the top server shipper during the height of the dot-com boom in 2001. Suffice it to say, the company knows a thing or two about bringing technologies to the middle and has also pushed up into the high end, particularly with the acquisition of EMC. That acquisition also brought Dell the VMware server virtualization juggernaut, whose software is used by over 600,000 organizations these days and has become over the past dozen years the dominant management substrate in the enterprise. And it is the combination of Dell iron and VMware software that can help shrink that potential missing middle in AI and also speed up the absorption of AI technologies by VMware.

The first step in this “making AI real” effort by Dell was to get the vSphere 7 virtualization stack, which we previewed back in March, out the door, complete with its Tanzu Kubernetes container orchestrator integrated with the virtual server platform that VMware is best known for. We could argue about whether or not running Kubernetes atop the ESXi hypervisor makes sense, but the point is moot. For a lot of enterprises, who are risk averse, this is how they are going to install Kubernetes because the VMware stack is how they manage their virtual infrastructure and the software that is packaged for it. This is very hard to change, and that is why VMware still has a growing and profitable business, with somewhere around 70 million VMs under the management of ESXi, which by our estimates had somewhere around an $11 billion run rate in the final quarter of fiscal 2020, ended in February. That’s about three-quarters of VMware’s business, with another 15 percent being driven by vSAN virtual storage and another 10 percent being driven by NSX network virtualization. Going forward, ESXi will still grow modestly, and by the end of 2022, we expect that it will have an annualized run rate of $11.5 billion in revenues and represent about half of the business, with the remaining half split pretty evenly between vSAN and NSX.
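For those who want to see what those percentages imply, here is a back-of-the-envelope sketch. The inputs are only the estimates quoted above. The totals and the vSAN and NSX figures are derived from them by simple division and are not reported numbers, and the function name is just for illustration.

```python
# Rough check of the revenue split described above.
# Inputs are the article's estimates; everything else is implied arithmetic.

def implied_total(segment_revenue, segment_share):
    """Total run rate implied by one segment's revenue and its share of the whole."""
    return segment_revenue / segment_share

# Final quarter of fiscal 2020: ESXi at about $11B, roughly three-quarters of the business.
total_2020 = implied_total(11.0, 0.75)
vsan_2020 = total_2020 * 0.15   # vSAN at about 15 percent
nsx_2020 = total_2020 * 0.10    # NSX at about 10 percent

# End of 2022 projection: ESXi at $11.5B, about half of the business,
# with the other half split evenly between vSAN and NSX.
total_2022 = implied_total(11.5, 0.50)
vsan_2022 = nsx_2022 = total_2022 * 0.25

print(f"2020 implied total: ${total_2020:.1f}B "
      f"(vSAN ${vsan_2020:.1f}B, NSX ${nsx_2020:.1f}B)")
print(f"2022 implied total: ${total_2022:.1f}B "
      f"(vSAN ${vsan_2022:.2f}B, NSX ${nsx_2022:.2f}B)")
```

In other words, for ESXi to fall from three-quarters to half of the business while still growing, the projection implies that vSAN and NSX would each have to grow several times over.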

If you wonder why – as we often did – VMware didn’t go ahead with “Project Photon” and create a clean-slate Kubernetes platform, that’s your answer. You don’t upset that applecart. But you do try to tell people they can mix oranges and apples.

Ravi Pendekanti, senior vice president of server solutions product management and marketing at Dell, said in an announcement today that Dell and VMware were finally working to preinstall the VMware stack on PowerEdge servers, which will make deployment easier for those enterprises. And that vSphere 7 stack will also include the Bitfusion GPU slicer and aggregator, which VMware acquired last summer, raising a few eyebrows. (But not ours.) The Bitfusion software allows GPUs housed in enclosures that are distinct from the servers to be virtualized so they can be shared dynamically with those servers, and also allows them to be pooled to create larger aggregations of GPU compute for both AI and HPC workloads, as it turns out. This disaggregation and pooling is key because GPUs are expensive and not every workload that can use them can have them installed. That is just way too costly. So having them disaggregated and available in a pool drives up sharing across time and utilization at any specific time, thus yielding better ROI for AI and, for those who need it, GPU-accelerated HPC workloads.

“We are essentially doing for the accelerator space what we did for compute several years ago,” explained Krish Prasad, senior vice president and general manager of VMware’s Cloud Platform business unit, during the announcement today. “But we have taken it one step further and have given customers the ability to pool the accelerators.”

As an aside: It is a pity that VMware can’t do the same thing for CPUs and memory across individual servers, but perhaps it will buy TidalScale and fix that. Anyway, the GPU middleware from Bitfusion, called FlexDirect, doesn’t just do aggregation and remote pooling, but also has partitioning capability – and without resorting to actually carving up the GPU hardware as Nvidia has done to create the “Ampere” GA100 GPU, which has slices that can act as one large GPU or eight tinier ones. With Bitfusion, the slices can be as small as 1/20th of the GPU and its memory. It is not clear how large of a pool Bitfusion can see, but in the past it was limited to eight GPUs.
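The partitioning and pooling ideas can be illustrated with a toy model. To be clear, this is not the FlexDirect API, which the announcement did not describe. The `GpuPool` class, its method names, and the packing policy are all hypothetical. The only figures taken from the text are the 1/20th slice granularity and the eight-GPU pool size.

```python
from fractions import Fraction

SLICE = Fraction(1, 20)  # smallest partition mentioned in the article


class GpuPool:
    """Toy model of a shared GPU pool (hypothetical, not Bitfusion's API)."""

    def __init__(self, num_gpus=8):
        # Free capacity of each GPU, as an exact fraction of one device.
        self.free = [Fraction(1)] * num_gpus

    def allocate(self, amount):
        """Reserve a slice of one GPU or several whole GPUs.

        Returns a list of (gpu_index, fraction) grants.
        """
        amount = Fraction(amount)
        if amount <= 0 or amount % SLICE != 0:
            raise ValueError("request must be a positive multiple of 1/20 GPU")
        if amount < 1:
            # Partitioning: place the slice on the busiest GPU that still
            # has room, which keeps idle GPUs whole for big requests.
            candidates = [i for i, f in enumerate(self.free) if f >= amount]
            if not candidates:
                raise RuntimeError("no single GPU has enough free capacity")
            i = min(candidates, key=lambda idx: self.free[idx])
            self.free[i] -= amount
            return [(i, amount)]
        if amount.denominator != 1:
            raise ValueError("requests above one GPU must be whole GPUs here")
        # Aggregation: claim completely idle GPUs for a larger job.
        idle = [i for i, f in enumerate(self.free) if f == 1]
        if len(idle) < amount:
            raise RuntimeError("not enough idle GPUs to aggregate")
        chosen = idle[:int(amount)]
        for i in chosen:
            self.free[i] = Fraction(0)
        return [(i, Fraction(1)) for i in chosen]

    def release(self, grants):
        """Return previously granted capacity to the pool."""
        for i, frac in grants:
            self.free[i] += frac

    def utilization(self):
        """Fraction of total pool capacity currently handed out."""
        return 1 - sum(self.free) / len(self.free)


pool = GpuPool(num_gpus=8)
small = pool.allocate(Fraction(1, 20))  # a 1/20th slice for a light job
half = pool.allocate(Fraction(1, 2))    # half a GPU, packed onto the same device
big = pool.allocate(4)                  # four whole GPUs aggregated for training
print(float(pool.utilization()))
pool.release(big)                       # capacity returns to the pool for others
```

The sketch uses exact fractions so that twenty 1/20th slices add up to precisely one GPU. Whether the real software packs slices this way, or lets a single request span partial and whole GPUs, is not something the announcement spelled out.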

Prasad added that the Bitfusion support with vSphere 7 comes initially on Dell PowerEdge R740 rack machines and PowerEdge C4140 semi-custom, hyperscale style machines. Presumably it will eventually be available on other Dell iron, and indeed on any server that supports VMware.

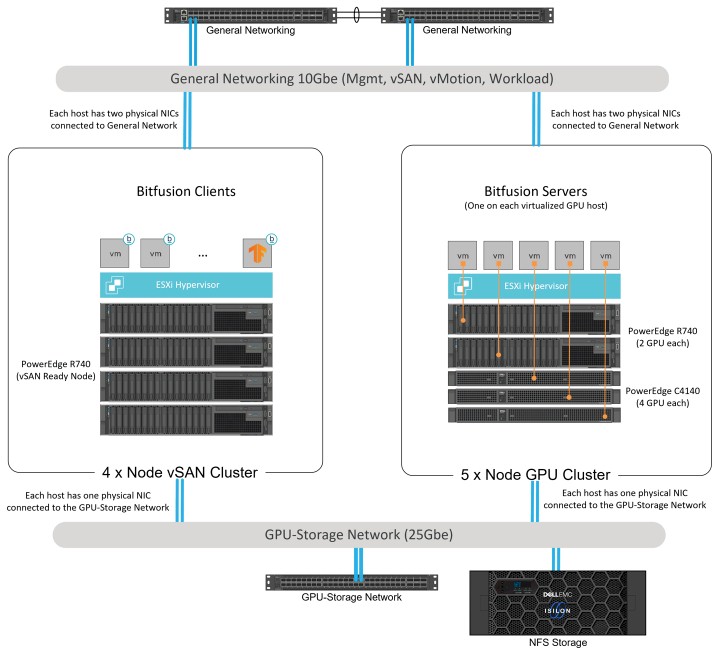

In the long run, these are all good first steps to getting broad adoption of AI among large enterprises, but there will have to be reference systems with software stacks, which Dell is getting ready for market but which the company did not talk about in detail today. It’s the details that matter – and that we can all learn from. Some information about the reference architectures can be found at this link, and the one for Bitfusion is interesting. This is a mix of the PowerEdge servers mentioned above plus physical networking:

This more generic reference architecture for virtual GPUs is also interesting.

We do know that these stacks can include the whole Cloud Foundation enchilada of virtualization and management software from VMware – ESXi, its vSphere and now Bitfusion extensions, vCenter management, vSAN virtual storage, and NSX virtual networking – or for those who want to go barer bones, the vSphere Scale-Out Edition, which has the ESXi hypervisor and vMotion for compute and storage, the vSphere Distributed Switch for virtual networking, and a bunch of management tool add-ons. Ultimately, full AI and HPC application stacks need to have reference architectures, probably in T-shirt sizes so customers can choose quickly, to make this even easier. Prices on these would be nice, too.
