In July, VMware acquired Bitfusion, a company whose technology virtualizes compute accelerators with the goal of enabling modern workloads like artificial intelligence and data analytics to take full advantage of systems with GPUs or with FPGAs. Specifically, Bitfusion’s software allows for virtual machines to offload compute duties to GPUs, FPGAs, or even other kinds of ASICs. The deal didn’t get a ton of attention at the time, but for VMware, it was an important step in realizing its cloud ambitions.

“Hardware acceleration for applications delivers efficiency and flexibility into the AI space, including subsets such as machine learning,” Krish Prasad, senior vice president and general manager of VMware’s Cloud Platform business unit, wrote in a blog post announcing the acquisition. “Unfortunately, hardware accelerators today are deployed with bare-metal practices, which force poor utilization, poor efficiencies, and limit organizations from sharing, abstracting and automating the infrastructure. This provides a perfect opportunity to virtualize them – providing increased sharing of resources and lowering costs.”

Bitfusion’s products – such as its FlexDirect GPU virtualization technology – enable better sharing of GPU resources among isolated workloads and allow sharing across the network. The software decouples particular physical resources from servers and can work across AI frameworks, networking stacks, and public clouds – and, importantly, with either containers or VMs as the abstraction layer. VMware’s plan is to integrate Bitfusion into its vSphere platform.

“The acquisition of Bitfusion will bolster VMware’s strategy of supporting AI- and ML-based workloads by virtualizing hardware accelerators,” Prasad wrote. “Multi-vendor hardware accelerators and the ecosystem around them are key components for delivering modern applications. These accelerators can be used regardless of location in the environment – on-premises and/or in the cloud.”

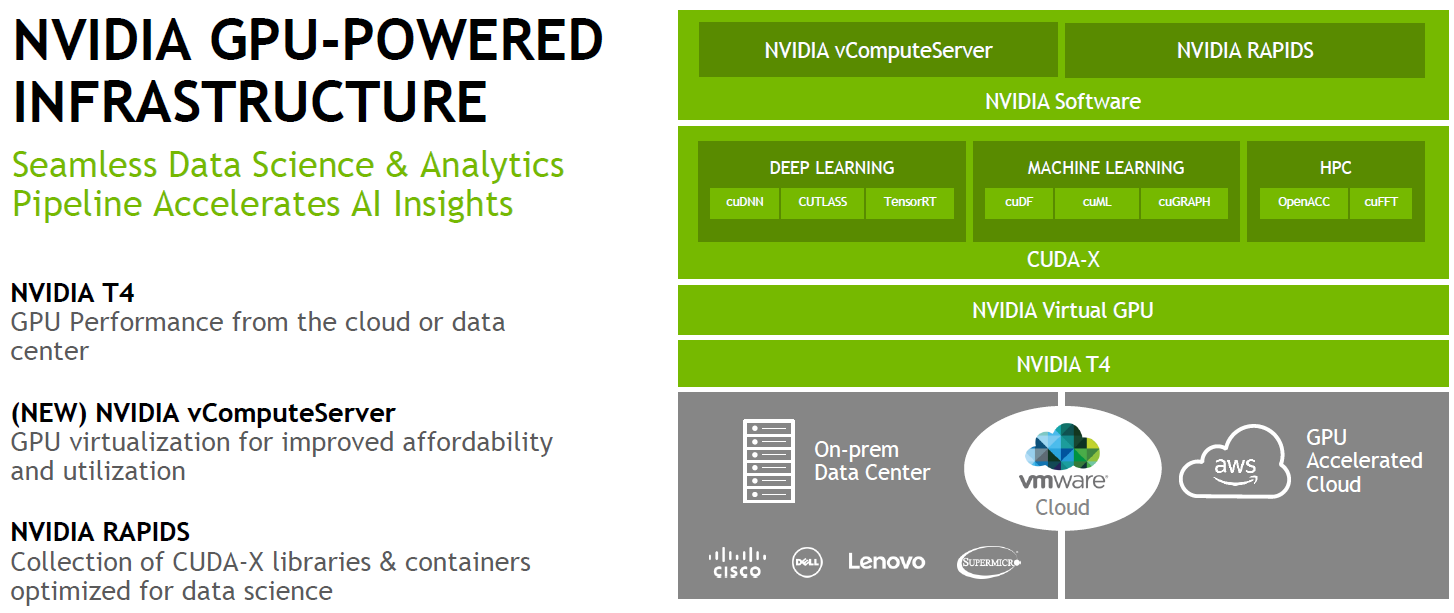

VMware’s push to leverage virtualization for accelerated AI and machine learning workloads was on display at the recent VMworld conference, where Nvidia announced it was bringing its virtual GPU capabilities to vSphere and VMware Cloud on Amazon Web Services (AWS) to accelerate AI and data science workloads. The company unveiled its Virtual Compute Server (vComputeServer) software, which works not only with VMware Cloud on AWS but also with vSphere, vCenter, vMotion and VMware Cloud. The diagram below lays out the integration that Nvidia and VMware announced:

“AI is the most powerful technology of our time,” John Fanelli, vice president of product management at Nvidia, said in a briefing ahead of VMworld. “The growth of AI and the computer requirements have completely outpaced Moore’s Law, so the only way to really be successful today is to drive GPU acceleration technologies throughout AI. AI is the most powerful technology of our time and the result is actually creating the greatest computing problem of our time due to this. This technology is so powerful that machine learning and deep learning algorithms and computers are actually writing their own software. This is allowing us to solve problems that we never thought we could solve. When we look at the market and the impact on customers, you can see that there are large enterprises moving aggressively to machine learning over the next three or four years.”

The role of the data scientist is growing in both the enterprise and academia to address the increased use of AI and analytics, Fanelli says.

“Our enterprises are looking at a much broader strategy, one that encompasses not only the datacenter but a hybrid cloud and a much shorter timeline,” he says. “When it comes to AI, Nvidia is working with hundreds of enterprises [and] thousands of startups across every industry – healthcare, transportation, retail, financial services – all of these organizations are using a desire to drive their business processes to make them more efficient. They’re adding intelligence … so they make better decisions to drive better results for customers and shareholder value.”

As we have seen at The Next Platform, VMworld was not the first place VMware has talked about GPU virtualization. In a presentation at the Stanford HPC Conference in February, the company showed how its ESXi hypervisor can run machine learning workloads on virtualized GPUs at speeds that approach those of bare metal. Virtualization plays a foundational role in public and private clouds, and Mohan Potheri, principal systems and virtualization architect at VMware, said at the time that virtualization can make GPUs more productive than they would be in a native environment.

vComputeServer includes features such as GPU sharing, which enables multiple VMs to be powered by a single GPU, and GPU aggregation, which means multiple GPUs can run a VM, driving flexibility, utilization, and cost efficiency. It is supported by an array of Nvidia GPU cards, including the Tesla T4, based on its “Turing” GPU, and the Tesla V100, based on the “Volta” GPU. The Quadro RTX 8000 and RTX 6000 cards, based on the Turing chips, can also be hooked into vComputeServer, as can earlier Tesla P40, P60, and P100 GPU accelerators based on the previous “Pascal” architecture. The software provides error correcting code and dynamic page retirement to protect against data corruption, as well as live migration and, thanks to those massively parallel banks of CUDA cores and the Tensor Core matrix math units, up to 50 times faster deep learning training than CPU-only environments, similar to what is found on bare-metal systems running GPUs.

In VMware Cloud on AWS environments with Nvidia GPUs, enterprises will be able to move workloads powered by vComputeServer software with the click of a button using VMware’s HCX service for migrating workloads between public and private clouds. They will also get automatic scaling of VMware Cloud on AWS clusters accelerated by Tesla T4 GPUs. In addition, having GPU acceleration will enable organizations to leverage Nvidia’s RAPIDS GPU acceleration libraries for data science workloads, including deep learning, machine learning and data analytics.

“In a modern datacenter, organizations are going to be using GPUs to power AI, deep learning, analytics,” Fanelli says. “Due to the scale of those types of workloads, they’re going to be doing some processing on premise in datacenters, some processing in clouds and continually iterating between them.”

The cloud was an overarching focus of VMworld for VMware, which not only rolled out its own technology enhancements but also has been pushing forward through partnerships with top-tier cloud service providers AWS, Microsoft Azure and Google Cloud Platform. Some of the news coming from the show highlighted other collaborations with OEMs such as Dell EMC and Hewlett Packard Enterprise. Underlying a lot of that was the ability to run data-intensive modern workloads and to quickly manage, store and analyze the petabytes of data that enterprises are generating. AI and machine learning will play key roles in addressing those challenges, which VMware chief executive officer Pat Gelsinger noted when talking about the partnerships with the GPU maker and AWS and the GPU acceleration services they are now bringing to the cloud.
