What goes around comes around. The HPC arena fought hard over the past twenty years to drive volume economics with relatively inexpensive X86 clusters, and now those economies of scale are running out of gas. That is why we are seeing an explosion in the diversity of compute, not just in the processor, but in adjunct computing elements that make a processor look smarter than it really is.

The desire of system architects to try everything because it is fun has to be counterbalanced by a desire to make systems that can be manufactured at a price that is set way before delivery dates. This is the fundamental challenge of HPC in its many guises, and it is the thing that Steve Scott, the once-and-future chief technology officer at Cray who has also done stints at Nvidia and Google, spends a lot of his own cycles pondering. The Next Platform sat down with Scott to talk about the Cambrian compute explosion being driven in large part by the HPC and hyperscale communities and what it might mean for the future of computing.

Timothy Prickett Morgan: People weren’t ready for the diverse kinds of compute that you were focused on in the early 2000s. With the Cambrian explosion of compute here, there seems to be more of a willingness to use it all. It is kid-in-a-candy-store time for people building systems. What do all these things mean at the higher end of computing? How do you figure out where to place bets for current and future workloads?

Steve Scott: As in most areas of computer architecture and system design, the architecture is driven by technology. My PhD advisor used to tell me, when talking about the architecture qualifying exam, that this is the one area where the questions remain the same and only the answers change. As the underlying technology changes, the right way to do things changes. Architecture is not a static field. Traditionally, this has meant that as we have gotten more transistors and could do more with them, and as relative latencies and the balance between different technologies have shifted, the optimal architecture has changed.

Over the past decade or so, with the slowing of Moore’s Law, the end of Dennard scaling, and power becoming more of a constraint, we are seeing the end of CMOS coming. As the technology stops improving at the rate it used to, that is driving architectural innovation. It is driving us toward greater levels of specialization. It used to be the case that you would never build a specialized processor, because it was extra effort and time, it only served a certain class of applications, and by the time you were done with it, the standard processors had become fast enough that the specialization was not particularly helpful.

But now, as the rate of improvement slows, everyone is looking at greater specialization. That is what pushed exploration into GPUs, which are efficient for highly parallel work, and now people are starting to say that maybe what we need are specialized accelerators that are only used for specialized applications. It is a variation of the idea of dark silicon, where the power budget does not allow us to use all the transistors on the chip at once. So the alternative is to use different transistors that are good for a particular job and to use accelerators when there is good cause for them. We see this clearly in mobile, with its high volumes and specialized tasks around software radios or various kinds of transcoders for dealing with digital signals, where it really makes sense to have specialized accelerators for those functions. But it is only just starting to make sense for servers.

TPM: With this in mind, how do the price/performance curves change? Everyone shifted to a generic processor, and now it seems the pendulum is swinging back. We rode that X86 curve down and it gave great price/performance, and MPI gave us scalable clusters. But if you go from making tens of millions of things to making 50,000 or fewer of something for a particular job, does each of those specialized processors or accelerators get more expensive, with the price/performance benefit washing out the extra cost?

Steve Scott: That is, in fact, the issue. Some people think we will start seeing specific ASICs for a narrow range of applications. I am not bullish on that idea. I do not think we will see twenty different types of specialized ASICs for a thin set of applications, for just those reasons: lower volumes and the resulting higher-cost chips.

I think what we will see instead is a moderate number of specialized processors tailored for a class of applications, with a constant balancing act over whether the advantage in that niche of applications is large enough, and the volumes high enough, to support the cost of a specialized processor. We also have to ask whether the architectural advantage is large enough that you can use an older process technology, which is cheaper to design for, and still overcome the disadvantage of using that older process. So that cost-benefit analysis will be revisited continually.
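To make the volume argument concrete, here is a minimal sketch of the amortization arithmetic behind it, assuming the simplest possible model: per-chip cost is the one-time design (NRE) cost divided by volume plus a fixed manufacturing cost, and a specialized chip competes on cost per unit of delivered performance relative to a baseline processor. The function names, the model itself, and every dollar figure are hypothetical illustrations, not Cray or vendor data.

```python
# Minimal amortized-cost sketch. The model and every number here are
# hypothetical illustrations, not data from Cray or any chip vendor.
import math


def cost_per_chip(nre: float, unit_cost: float, volume: int) -> float:
    """Spread one-time design (NRE) cost over the chips sold, plus manufacturing cost."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return nre / volume + unit_cost


def cost_per_performance(nre: float, unit_cost: float, volume: int, speedup: float) -> float:
    """Cost per unit of delivered performance, relative to a baseline processor (speedup 1.0)."""
    return cost_per_chip(nre, unit_cost, volume) / speedup


def breakeven_volume(nre: float, unit_cost: float, target_cost: float) -> float:
    """Volume at which amortized cost per chip falls to target_cost (inf if it never does)."""
    if target_cost <= unit_cost:
        return math.inf  # NRE can never be amortized below the manufacturing cost
    return nre / (target_cost - unit_cost)


if __name__ == "__main__":
    # Hypothetical specialized ASIC: $100M NRE, $500 per chip to build, 3x speedup.
    for volume in (10_000, 100_000, 1_000_000):
        per_chip = cost_per_chip(100e6, 500.0, volume)
        per_perf = cost_per_performance(100e6, 500.0, volume, speedup=3.0)
        print(f"{volume:>9,} chips: ${per_chip:,.0f}/chip, ${per_perf:,.0f} per baseline-equivalent")
```

With these made-up inputs the chip costs $10,500 apiece at 10,000 units but $600 at a million units, which is the "high enough volumes to support the cost" point in miniature. In this toy model, moving to an older process would show up as a lower `nre` traded against a smaller effective `speedup`.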

There are, however, some cases where that is clearer – in deep learning, for example. We have passed the tipping point in deep learning. It has become widely adopted among hyperscalers and proven itself to be extremely effective – and it is a high volume application.

So Google looked at that equation and realized it was worth building a specialized processor just for deep learning. That’s a case where you are clearly on one side of the specialization boundary. On the other side, there are other cases now where people are trying to build specialized processors that ultimately will not be successful. I don’t know how many neuromorphic chips there are out there now – several.

TPM: Speaking of specialization of compute, what do you think of FPGAs now? You bought OctigaBay all those years ago, so what now? Hybrid CPU-FPGA chips will be available through Intel, and you will be able to plug them into the CS Storm machines, and there is obviously a way to take FPGAs, either as distinct compute elements or as hybrid processors, and put them into XC systems. But what are you doing today?

Steve Scott: We actually do ship systems with FPGAs today, but you would not know about it because we do not offer this as a mainstream product.

We are definitely exploring them, and they do make sense for certain applications, so for the moment, FPGAs at Cray are a custom engineering solution. We have customers with problems where FPGAs work, and we can support them in our CS Storm platform. The systems that have been successful for dense GPU solutions are also good for FPGA cards, so we can ship FPGA-dense systems.

Signal processing is one of the cases; there are others that are customer specific. But they are appropriate for a wide-ranging set of applications, as long as the application is fairly stable and you want to do a lot of it, and especially if it has complex control flow or some other property that lets an FPGA give better performance (in signal processing, for example, where you do not need full precision and can get more throughput). For things with complex control flow that can be cast into an FPGA pipeline, this can save a lot of overhead compared to a conventional processor. As is well known, Microsoft deployed FPGAs to accelerate Bing search a few years ago, and who would have thought that? But they looked at the algorithm, saw that they do a lot of it and that parts of it are relatively stable, and they saw a path to FPGAs.

With all of that said, we have not gotten to the point where we see FPGAs as general purpose enough to put into our standard product map or to pitch regularly to customers. That has to do with the difficulty in programming them. Compilers have gotten better, with OpenCL and other high-level approaches, but FPGAs are still much more difficult to use than a conventional processor or a GPU.

If you think about the spectrum of processors along the lines of programming ease versus efficiency, at one end is the general purpose CPU, which has a lot of overhead and is not efficient but is easy to use. Moving to the left, there are vector processors, which are a little less general purpose and less easy to program but more efficient, and there is broader use of these today. Then you might move to GPUs on that spectrum: a bit less general purpose, harder to program, and more efficient. Then you would go to the far left, with FPGAs and custom ASICs and application-specific processors. So if you really want to do a lot of something, you can build a custom ASIC, and these can be very power efficient. In our mind, FPGAs are still not easy enough to program to make a general purpose solution. We will continue to watch them, and we expect their uptake to grow very slowly. Having Intel’s integrated FPGA in a Xeon package will help make them a bit more broadly accessible.

TPM: What about digital signal processors? Everything gets a chance in the sun, but are DSPs also coming back around or are they just done because so much focus is on GPUs and FPGAs?

Steve Scott: If you go back to the continuum of ease of programming versus efficiency, I do not really think DSPs will have much of a play in HPC because there are GPUs that are close enough in efficiency but have a lot more in the way of software infrastructure. It is more of an embedded thing inside a device with a constrained environment.

On another note, I think GPUs also represent the baseline that entrants into the machine learning space have to measure up to. If I were going to bet on a new type of specialized processor to emerge and be successful, it would be an accelerator for machine learning or deep learning. You can build a processor that is more efficient at that than GPUs, as we have seen with Google’s TPU. The market may end up being large enough to support that, but GPUs are the incumbent that needs to be overthrown. Intel will point out that if you look at the overall deep learning space, specifically on the inference side, the majority is done on conventional CPUs, and they have a pretty aggressive roadmap there too.

TPM: What about doing hybrid differently? There was a DARPA project you were involved in at the same time as Cray was developing “Cascade,” which we know as the XC line. In that project, they took different computing elements and made them different shapes, and you shook them in the chip package and they oriented themselves to interconnect. Each of these elements could be implemented using a different process tuned to boost the price/performance of each subcomponent. So you might do cores in 10 nanometers, graphics processors in 20 nanometers, and communications in 32 nanometers. You could add them all to the package and have something less complicated than an Intel Knights Landing or Nvidia Pascal interposer.

My point is, you could not only have different options for a package but different ways to plug things into a socket because the interconnects seem to be getting better. Why are we trying to put everything on one chip? Every piece of what goes into a system today could be implemented in a kind of modular socket.

Steve Scott: That makes a lot of sense, and we will start seeing more of that in the future. This is part of what happens when the Moore’s Law train slows down. It makes sense for a variety of reasons. You can change the balance, for starters. Think about CPUs and GPUs: sometimes you want different ratios of those elements, so by building “chiplets” and putting many of those on a package, you can be more successful at balancing components. You can also upgrade elements independently this way and do a new spin of one chip without needing one for the others. Optimizing the IC process for different functions is also possible; with CPUs and GPUs, you might want one to have a deeper metal stack and be optimized for latency while the other is optimized more for power efficiency and density. You can also take things that do not need to be implemented in the latest technology, implement those in slower technologies, and use them for multiple generations. The key is getting all of this to communicate on package, and there are many ways to do this.

To your point about what Sun Microsystems was doing with proximity communication in the HPCS program: they did not actually have electrical contacts between chips; they used capacitive coupling between chips and were able to get very high bandwidth. But there are also ways of doing very low energy communication over organic substrates, so you do not have to use a large interposer, which can limit package size. There are companies doing very efficient communication between chips. If you have to move data a centimeter, it is actually more power efficient to go between two chips than to move it that distance across a monolithic piece of silicon, because the wires are larger and you do not use the really thin traces on the IC. So for many reasons, all of this makes sense, and we will start seeing it from multiple vendors.

TPM: Will you as a system vendor try to do this or will you wait for other companies to put these components together? They won’t all come from the same place? There is a volume argument here though, right?

Steve Scott: We do not currently have plans to do any package-level integration. We are talking with multiple processor companies and we are talking about this particular technology with them but we will be consumers of this.

On the volume front, this approach can reduce cost, so you do not need as large a volume market to justify building custom packages when custom means a different collection of chiplets on a package. Assembling a collection of these chiplets costs a lot less than building a custom ASIC. Cray does have influence with processor companies, but it is not because of our high volumes. It is more because of our influence in HPC and with certain companies.

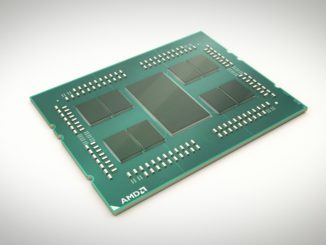

TPM: How do you carve up compute: what goes on the CPU and what comprises the rest? These things are getting more diverse. For instance, we think Radeon is a credible GPU, and AMD can now make a case for Epyc X86 processors. We can clone CUDA and emulate it on Radeon Instinct cards, and that removes some barriers. And you and I have talked about ARM in the past, too. What is really on the horizon, and what is a lot of noise?

Steve Scott: In a sense, we are in the same situation as many of our customers, looking down the road and trying to see which processor will be the optimal choice this far out. We try not to make those predictions. Instead we try to build a system infrastructure that is flexible and then to be agile and bind those decisions as late as possible.

Ideally, if you are going to put in a large machine somewhere, you should make the processor decision perhaps two years ahead of when that is delivered and revisit that decision even closer to the installation deadline.

We are trying to have flexibility, build the right hardware and software infrastructure, and bind that as late as we can. We talk to everyone; we have detailed discussions with Intel, Nvidia, ARM, and AMD, and even people building specialized processors, so we can use the best near-term projections we have to make that late-as-possible decision. End customers, of course, have their own biases or workloads that lend themselves to one processor or another. We really do not see how it is going to shake out in four years, and we want to have the flexibility to make that call. It is a very deliberate strategy on our part.

TPM: When can you start doing R&D on new technologies? When do the guys in the labs start integrating stuff? You can’t be too dependent on anything, as you know from Cray’s experience with AMD’s “Barcelona” Opterons.

Steve Scott: We did get burned, and it was because we had an infrastructure in the Cray XT and XE that was tied to a specific processor line because our interconnect ASICs were based on HyperTransport and not PCI-Express. That is why we moved to PCI-Express. It gave us an interface – from an ASIC perspective, and that is a four-year investment to build one – that was open. From a board design and software stack perspective it is possible to have a shorter time horizon of around eighteen months. It is possible to build a board for a specific processor, get it through test and then into production systems in about that timeframe.

TPM: If you decide XC50 should have alternate CPUs or GPUs, for instance, because the price/performance is far better, or if customers pull you that way, what then? From 2003 through 2005, you were out in front with Opteron, then IBM got in, then HP got in, and Dell was finally dragged kicking and screaming into the market after holding onto the railings for a long time. If the processor is decent and the price is okay, do you anticipate that, and are you positioned for it? Is AMD back in the game?

Steve Scott: Diversity is great. Look at the Department of Energy exascale program and the emphasis on diversity in processors and systems companies. Diversity is good for risk mitigation and for meeting a diverse market. It is important for the market and we are talking to everyone – and we are open to shipping multiple processors. In our next generation “Shasta” systems that come out in 2019, we will support Intel but we will also support other processors.

The XC system in the meantime has the ability to support interesting things that might come along in a PCI-Express form factor. The XC50 has the ability to put full-sized PCI-Express cards on every node, and today we ship Pascal GPUs in the XC50 in a PCI-Express form factor, so it is possible to put an FPGA card there as well, for example.

With the CS Storm line, it is quicker to plug in new things. Those eighteen months have more to do with the high-end systems. We can be nimbler in the cluster space.

TPM: Whither ARM? It is not really a power issue, because ARM chips seem just as hot as Xeons. It is about having an alternative, but with the Naples Epyc processor, you can have an alternative with the same instruction set, so why bother?

Steve Scott: I do expect ARM to make inroads into traditional high-end HPC and I do expect that one of the exascale systems will likely be an ARM-based system.

We are starting to see some credible processor companies that will be shipping good ARMv8 64-bit server processors. We have not had that to date – the market has been chips optimized for mobile and some 32-bit servers and some weak initial 64-bit servers. That will be changing, but how fast they make inroads, the market will have to determine. But I do expect them to break into HPC to some degree.
