When we think about high performance computing, it is often in the context of liquid-cooled systems deployed in facilities specifically designed to accommodate their power and thermal requirements. With artificial intelligence, things can get tricky. While the systems themselves are powered by many of the same CPUs, GPUs, and NICs, the environments in which they are deployed vary wildly.

In some facilities, direct-liquid cooling and 100 kilowatt-plus racks may be the norm. A few months back, we looked at Colovore, which houses Cerebras Systems’ “Andromeda” and “Condor Galaxy 1” AI supercomputers. But, in most cases, customers won’t have these luxuries.

Where customers choose to deploy AI compute often has more to do with where their data already resides. For the co-location providers responsible for housing those resources, this can be a real challenge, as they not only need to support conventional deployments, but denser configurations that are more demanding when it comes to thermal management and power delivery.

“It’s not just all GPUs, unless you’re in a farm,” Digital Realty chief technology officer Chris Sharp tells The Next Platform. “What we see is a mixed environment of infrastructure.”

Predicting The Next Compute Step Function

To be clear, workloads like AI, ML, deep learning, and data analytics have been around and deployed in co-location facilities for years, Sharp emphasized. What’s changed is that generative AI has proven to be a step function in the overall trend.

Generative models with billions of parameters, including large language models like GPT-4 and Falcon 40B and image generators like Stable Diffusion and DALL-E 2, require an order of magnitude more compute than your typical image classification job. To put things in perspective, training a model like GPT-3 on a 1.2 TB dataset would require something like 1,024 Nvidia A100s running at full tilt for just over a month.
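As a rough sanity check on that figure, here is a back-of-envelope sketch. Only the GPU count comes from the text above. Everything else is our assumption: the commonly cited GPT-3 size of 175 billion parameters and 300 billion training tokens, the rule of thumb of roughly 6 floating point operations per parameter per token, the A100's 312 teraflops dense BF16 peak, and a hypothetical 35 percent sustained utilization. Note that it works from token count, not the dataset size in bytes.

```python
# Back-of-envelope training time. Only NUM_GPUS comes from the article;
# every other constant is an assumption made for illustration.
PARAMS = 175e9               # assumed: GPT-3 parameter count
TOKENS = 300e9               # assumed: GPT-3 training tokens
FLOPS_PER_PARAM_TOKEN = 6    # assumed: ~6 FLOPs per parameter per token rule of thumb
A100_PEAK_FLOPS = 312e12     # assumed: A100 dense BF16 tensor peak, in FLOP/s
NUM_GPUS = 1024              # from the article
UTILIZATION = 0.35           # hypothetical fraction of peak actually sustained

SECONDS_PER_DAY = 86_400


def training_days(params, tokens, num_gpus, peak_flops, utilization):
    """Estimate wall-clock training time in days from total FLOPs and sustained throughput."""
    total_flops = FLOPS_PER_PARAM_TOKEN * params * tokens
    sustained_flops_per_s = num_gpus * peak_flops * utilization
    return total_flops / sustained_flops_per_s / SECONDS_PER_DAY


days = training_days(PARAMS, TOKENS, NUM_GPUS, A100_PEAK_FLOPS, UTILIZATION)
print(f"Estimated training time: {days:.1f} days")
```

Under those assumptions the estimate lands at roughly a month, in line with the figure above. The result is very sensitive to the utilization guess: at a theoretical 100 percent it would drop to under two weeks.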

Despite the quantities of compute required, the potential of these models to drive profits and deliver a competitive edge is tantalizing enough that many enterprises are unwilling to risk falling behind.

For Sharp, the direction things were headed was clear. Chips were getting faster, hotter, and more power dense. “The big jump that I’m working through right now is how quickly… the next step function is going to come to us,” he says. “Generative AI, and quite frankly, some of these GPU designs, is a big catalyst to expedite that.”

Weighing the evolution of compute against what customers are realistically going to deploy is a balancing act for real estate investment trusts like Digital Realty. Overbuild for power and cooling, and the facility is no longer competitive; underbuild, and you may have to turn away your most demanding customers.

“I can’t go ahead or behind our customers,” Sharp said. “I go behind, it’s not viable; if I go too far ahead, I’m straining a lot of either power or cooling technology that’s just not being utilized.”

Because of this, Sharp says building a degree of flexibility into the system is key. “We’ve been building our facilities over the years… in a very modular approach.”

Making It All Fit

As a general rule, co-location facilities have to plan for and cater to the lowest common denominator. Because of this, most co-location cabinets top out somewhere in the neighborhood of 7 kilowatts to 10 kilowatts. That’s plenty for your typical mix of enterprise storage, workloads, databases, and the like, but nothing compared to the demand of modern AI systems.

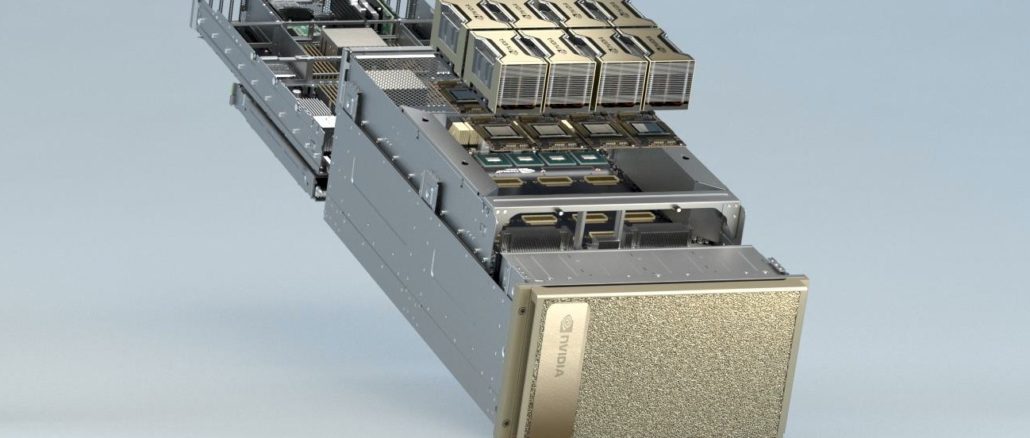

A single 8U DGX H100 from Nvidia can consume upwards of 10.2 kilowatts when fully loaded. While powerful on its own – capable of delivering on the order of 32 petaflops of peak FP8 performance – companies that want to train LLMs on a reasonable timescale are going to want more than one: perhaps hundreds or thousands or, in extreme cases, tens of thousands.

This is a challenge that Digital Realty recently tackled with its KIX13 site in Osaka, which is the company’s first bit barn equipped to handle Nvidia’s DGX H100 systems, and more specifically the 32-node SuperPOD.

Each of the eight compute racks demands in excess of 42 kilowatts of power and cooling capacity, just on the edge of what Sharp says the company’s facilities can accommodate with air alone.

“With air, we have designs that can support 50 kilowatts. There’s a lot you can do with that,” he said. “With rear-door heat exchangers, we can get up to 90 kilowatts per rack.”
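To see how those budgets translate into hardware, here is a small sketch using only the figures quoted in this article: the 10.2 kilowatt DGX H100, the 7 kilowatt to 10 kilowatt co-location cabinet, the 50 kilowatt and 90 kilowatt designs Sharp describes, and the 32-node, eight-rack SuperPOD. It counts power alone; rack space, airflow, and networking gear are deliberately ignored, and the labels and function name are ours.

```python
import math

# Figures quoted in the article.
DGX_H100_KW = 10.2
SUPERPOD_NODES = 32
SUPERPOD_COMPUTE_RACKS = 8

# Per-rack power budgets in kilowatts from the article; the labels are ours.
RACK_BUDGETS_KW = {
    "colo cabinet, low end": 7.0,
    "colo cabinet, high end": 10.0,
    "air-cooled design": 50.0,
    "rear-door heat exchanger": 90.0,
}


def systems_per_rack(budget_kw: float, system_kw: float = DGX_H100_KW) -> int:
    """Whole systems that fit within a rack's power budget (power only, no space or cooling limits)."""
    return math.floor(budget_kw / system_kw)


fit = {name: systems_per_rack(kw) for name, kw in RACK_BUDGETS_KW.items()}

# Power drawn by the DGX systems alone in one SuperPOD compute rack.
nodes_per_rack = SUPERPOD_NODES // SUPERPOD_COMPUTE_RACKS
superpod_rack_kw = nodes_per_rack * DGX_H100_KW

for name, count in fit.items():
    print(f"{name}: {count} x DGX H100")
print(f"SuperPOD rack: {nodes_per_rack} nodes, {superpod_rack_kw:.1f} kW from DGX systems alone")
```

On power alone, a conventional cabinet cannot host even one fully loaded DGX H100, while the air-cooled design has room for four, which matches the four nodes per rack implied by the SuperPOD layout. The gap between the 40.8 kilowatts computed here and the 42 kilowatt-plus figure is presumably other equipment in the rack, though that is our reading and not something Digital Realty spelled out.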

However, cooling is only part of the equation, Sharp said. “We’ve seen so many AI deployments fail because they didn’t really think through the power, the cooling, the interconnectivity.”

As it turns out, the power delivery ended up being a bigger challenge for Digital Realty than thermals. “The DGX A100 just needed an A/B source; it was kind of like your traditional redundant delivery of power,” Sharp said. “With the DGX H100, you need three discrete power sources.”

“Because of our modularity, we were able to pull from our PDUs and be able to support that with minimal to no change,” he explained, adding that this heightened level of redundancy and power is becoming increasingly common across the board, not just in Nvidia systems.

Less Dense = Broader Appeal?

While not every AI system deployed is going to be a DGX system, the design is at least representative of many of the GPU nodes available from OEMs today. However, that could be changing.

The limitations faced by enterprise and co-location datacenters with regard to power and cooling appear to have informed some of the design decisions around Nvidia’s DGX-GH200 cluster, detailed at Computex this year.

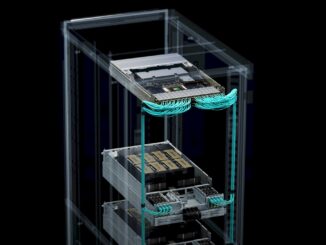

The cluster comprises 16 compute racks, each with 16 nodes equipped with a single Grace Hopper superchip, and networked using an NVLink Switch fabric. If you don’t recall, each of these frankenchips features a 72-core “Grace” Arm CPU, a GH100 GPU, 512 GB of LPDDR5X memory, 96 GB of HBM3 memory, and a TDP of around 1 kilowatt.

Like the DGX H100 SuperPOD, the cluster is capable of delivering around an exaflop of peak AI (FP8) performance. However, it’s spread out over twice the area, something that should make it easier to deploy, especially in older co-los.
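The density difference can be put in rough numbers using only the figures cited in this article. The sketch below counts chip and system power only, so NVLink switches, networking, and storage are left out, and it treats "around an exaflop" as exactly 1,000 petaflops, which is our simplification.

```python
# Figures quoted in the article.
DGX_H100_PFLOPS = 32        # peak FP8 per DGX H100
DGX_H100_KW = 10.2          # fully loaded DGX H100
SUPERPOD_NODES = 32
SUPERPOD_RACKS = 8

GH200_RACKS = 16
GH200_NODES_PER_RACK = 16
GH200_TDP_KW = 1.0          # "around 1 kilowatt" per superchip

# Assumption: treat "around an exaflop" as exactly 1,000 petaflops.
GH200_CLUSTER_PFLOPS = 1000

# DGX H100 SuperPOD: aggregate peak FP8 and per-rack system power.
superpod_pflops = SUPERPOD_NODES * DGX_H100_PFLOPS
superpod_rack_kw = (SUPERPOD_NODES // SUPERPOD_RACKS) * DGX_H100_KW

# DGX GH200: superchip count, implied per-chip FP8, and per-rack chip power.
gh200_chips = GH200_RACKS * GH200_NODES_PER_RACK
gh200_pflops_per_chip = GH200_CLUSTER_PFLOPS / gh200_chips
gh200_rack_kw = GH200_NODES_PER_RACK * GH200_TDP_KW

density_ratio = superpod_rack_kw / gh200_rack_kw

print(f"SuperPOD: {superpod_pflops} PF over {SUPERPOD_RACKS} racks, {superpod_rack_kw:.1f} kW per rack")
print(f"DGX GH200: {gh200_chips} superchips over {GH200_RACKS} racks, {gh200_rack_kw:.1f} kW per rack")
print(f"Implied FP8 per superchip: {gh200_pflops_per_chip:.2f} PF")
print(f"Per-rack power ratio: {density_ratio:.2f}x")
```

By this count, each GH200 rack draws on the order of 16 kilowatts for its superchips against roughly 41 kilowatts for the DGX systems in a SuperPOD rack. Real per-rack figures will be higher once switching and other gear are included, but the gap helps explain why the less dense design should be easier to place in older facilities.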
