Rumors have been running around for months that Hewlett Packard Enterprise was shopping around for a way to be a bigger player in the hyperconverged storage arena, and the recent scuttlebutt was that HPE was considering paying close to $4 billion for one of the larger players in server-storage hybrids. This turns out to not be true. HPE is paying only $650 million to snap up what was, until now, thought to be one of Silicon Valley’s dozen or so unicorns with over a $1 billion valuation.

It is refreshing to see that HPE is not overpaying for an acquisition, as it certainly did back in the summer of 2011 when it bought data analytics firm Autonomy for $10.2 billion, at a time when the system maker had aspirations to compete with IBM by building a much larger software and services business. You might argue that even Aruba, which HPE bought for $3 billion nearly two years ago and which represents the largest deal that the company has done since Autonomy (which was largely written off), was a bit pricey even if it did fill some holes in the company’s networking business.

With SimpliVity raking in over $276 million in four rounds of funding in the past five years – with the last round being a whopper of $175 million in March 2015 – you can bet that Accel Partners, CRV, Kleiner Perkins, DFJ Growth, and Waypoint Capital, who all kicked in the funds, wanted to either go public, like much larger rival Nutanix, or find a buyer like HPE. (Rumors were going around in June 2014, as hyperconverged storage was starting to get traction, that HPE was trying to buy SimpliVity for as much as $2 billion, but Doron Kempel, chairman and chief executive officer at the company at the time, denied to us that SimpliVity was talking to HPE about any kind of commercial deal and certainly not an acquisition, and said further that the company was not in talks with anyone about such a deal.)

With IBM not interested in controlling its own hyperconverged storage, Dell having VMware’s Virtual SAN and EMC’s ScaleIO after its massive acquisition last year, Cisco Systems pushing Springpath in its HyperFlex stacks, and both Dell and Cisco working, in varying degrees, with Nutanix, the options for SimpliVity to find a buyer were shrinking. While Huawei Technologies and Lenovo both have OEM agreements with SimpliVity to sell its OmniCube hyperconverged storage, they seemed unlikely to acquire the company for its software and the FPGA accelerator at the heart of the hyperconverged system. We are not at all surprised that HPE was able to argue the price down, even from the $1 billion valuation SimpliVity enjoyed two years ago. Times, and conditions, have changed, and honestly, even at that price, this is not bad for a company that IDC estimates had $129 million in revenues in the trailing twelve months ending in the third quarter of 2016, and it is also not a bad return on investment for the $276 million kicked in.
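To put rough numbers on that judgment, here is a minimal back-of-the-envelope sketch using only the figures quoted above. The variable names are ours, and the "gross multiple" is a crude ratio of purchase price to total venture funding: it ignores liquidation preferences, the timing of each round, and any stake held by founders and employees, so it should not be read as the investors' actual return.

```python
# Figures quoted in the text, in millions of US dollars.
purchase_price = 650.0      # what HPE is paying
trailing_revenue = 129.0    # IDC estimate, trailing twelve months to Q3 2016
venture_funding = 276.0     # total raised over four rounds

# Simple ratios; these are illustrative, not a valuation model.
price_to_sales = purchase_price / trailing_revenue
gross_multiple = purchase_price / venture_funding

print(f"Price to trailing revenue: {price_to_sales:.1f}x")
print(f"Price to venture funding:  {gross_multiple:.1f}x")
```

On these assumptions, HPE is paying roughly five times trailing revenue, and the price is a bit more than twice the venture money put in.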

Some unicorns turn out to be workhorses, and that is probably better in the long run. For readers of The Next Platform, the most important thing is that HPE is going to leverage a technology that was created for midrange datacenters and scale it up to support heftier workloads run by large enterprises and scale it out across its global network of direct and channel sales, backed by its own support. HPE will, it hopes, be able to give Nutanix and Dell a run for the hyperconverged money.

SimpliVity Sends StoreVirtual VSA Out To Pasture

The HPE deal to acquire SimpliVity is not expected to close before the end of HPE’s second quarter of fiscal 2017, which ends in April, so the company is not at liberty to say much about its plans for the product. But Paul Miller, vice president of marketing for the Software Defined and Cloud division within HPE’s Enterprise Group, gave us some hints about what the roadmap ahead looks like.

As we have pointed out before, LeftHand Networks, which HPE bought in October 2008 for $360 million, was the actual pioneer in hyperconverged storage, with a virtual SAN product that was called SAN/IQ and that is now called StoreVirtual VSA. HPE has on the order of tens of thousands of shops running StoreVirtual VSA software atop its Xeon-based ProLiant servers and VMware’s ESXi hypervisor, and through the summer of 2015 it had distributed over 1 million free licenses of this software.

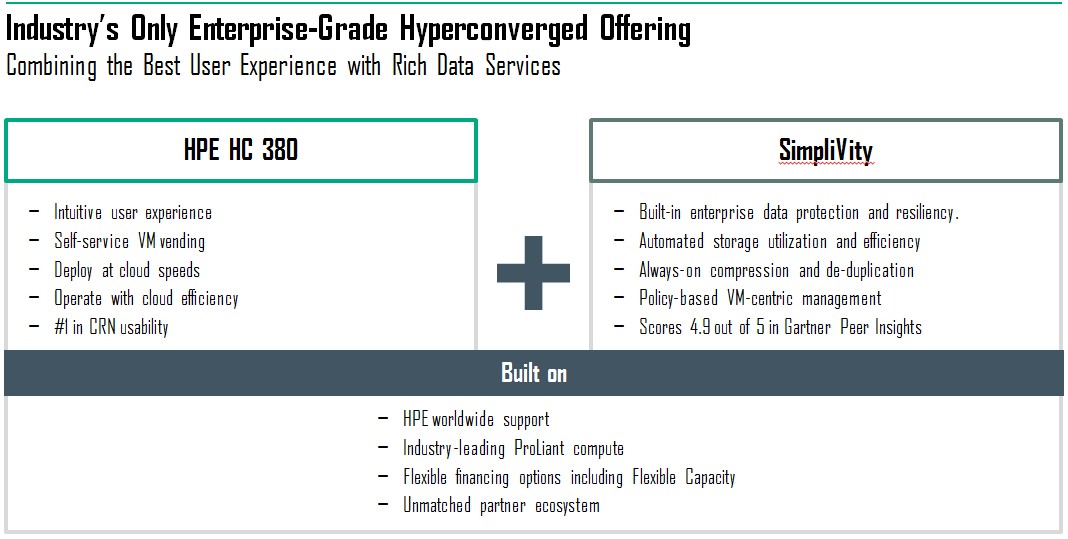

Back in 2015, HPE had married its Apollo 2000 series of servers, aimed at HPC customers, with the StoreVirtual VSA software to create an appliance to take a bigger slice of the hyperconverged pie, creating the Converged System 250 HC StoreVirtual appliance, or HC 250 for short. Three-node configurations start at north of $120,000, which is in line with pricing from Nutanix, believe it or not, and the system scaled up to 16 nodes standard with 32 nodes as a manual option. The heftier HC 380, launched in March last year, was based on ProLiant DL380 Gen9 servers using a different mix of software, scaling to only 16 nodes in a single cluster and using VMware’s ESXi hypervisor as its compute layer. The goal HPE set for the HC 380 was to be 20 percent cheaper than the equivalent Nutanix offering.

For a lot of reasons, not the least of which is that HPE has been focused on selling its 3PAR disk and flash SANs because of the higher margins it enjoyed with these products, the StoreVirtual VSA does not have the same mindshare or market share as SimpliVity, much less VSAN, ScaleIO, or Nutanix. So HPE had to do something, and that something was to buy a new foundation for its hyperconverged storage. As innovative as the StoreVirtual software was eight years ago, there is a lot of functionality that it needed. And, as is often the case with the big IT vendors, rather than start from scratch and create a new thing it is often cheaper – and certainly faster – to buy a new foundation on which to build a growing business.

This, says Miller, is precisely the plan with the OmniCube stack, which is now the main weapon that HPE will be using to attack the $2.4 billion market for hyperconverged storage, which is expected to swell at a compound annual growth rate of 25 percent between 2016 and 2020 to reach $6 billion.
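As a quick sanity check on those market figures, here is a minimal sketch of the compound growth arithmetic. It assumes four annual compounding periods between 2016 and 2020, which the forecast as quoted does not spell out, and the function name is ours.

```python
def compound(start, rate, years):
    """Value after compounding `start` at `rate` per year for `years` years."""
    return start * (1.0 + rate) ** years

# Figures quoted above: $2.4 billion in 2016, 25 percent CAGR through 2020.
# Assumption: four compounding periods (2016 -> 2020).
market_2020 = compound(2.4, 0.25, 4)
print(f"Projected 2020 market: ${market_2020:.2f} billion")
```

Under that assumption the projection works out to a little under $5.9 billion, which squares with the rounded $6 billion figure.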

The way SimpliVity has created its server-storage hybrid, it is more than just a clustered file system for running virtual machines for applications on the same iron. The OmniCube stack brings virtual servers, storage for them, plus data tiering, networking, WAN optimization, backup, and recovery software all into one stack. (It is these functions, integrated into the platform, that help SimpliVity cut the capital and operating costs of its platform compared to setting up servers and SANs and a slew of appliances to do these other functions.) One of the secret sauces in the OmniCube stack is a field programmable gate array that has been set up to run de-duplication and data compression algorithms, radically speeding up the processing for these. The OmniCube software creates a single pool for VMs to play in, and the whole shebang of 32 nodes in a cluster of machines is managed as a single instance. Initially, OmniCube supported only the VMware ESXi hypervisor, but in the past two years, support for Microsoft Hyper-V, Red Hat KVM, and Citrix XenServer hypervisors has been added. The 32-node cluster can support up to 8,000 VMs, which is a pretty sizeable number of fake servers and which is more than the 6,400 supported on 64-node Virtual SAN clusters but less than the 9,500 supported on Dell|EMC VxRAIL clusters with 64 nodes. The top-end Nutanix NX 6000 series with 64 nodes supporting ESXi can support 8,000 VMs, and Nutanix says that using its own Acropolis variant of KVM, the scalability of the cluster and therefore the number of VMs is unlimited. (We don’t believe in unlimited here at The Next Platform.)
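Because those cluster maximums are quoted at different node counts, it can help to normalize them per node. The sketch below does only that division, using the figures cited above. These are vendor-stated ceilings rather than measured results, and the comparison says nothing about how large each node or each VM is, so treat the output as a rough density indicator.

```python
# (platform, nodes per cluster, maximum VMs per cluster) as quoted in the text.
clusters = [
    ("SimpliVity OmniCube", 32, 8000),
    ("VMware Virtual SAN", 64, 6400),
    ("Dell|EMC VxRAIL", 64, 9500),
    ("Nutanix NX 6000 (ESXi)", 64, 8000),
]

def vms_per_node(nodes, vms):
    """Stated VM ceiling divided evenly across the nodes in the cluster."""
    return vms / nodes

density = {name: vms_per_node(nodes, vms) for name, nodes, vms in clusters}

# Print the platforms from densest to least dense.
for name, value in sorted(density.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:24s} {value:6.1f} VMs per node")
```

On these numbers OmniCube's stated ceiling works out to 250 VMs per node, against roughly 148 for VxRAIL, 125 for the Nutanix NX 6000 on ESXi, and 100 for Virtual SAN.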

SimpliVity, with 6,000 systems shipped to over 1,300 customers, will give HPE a much larger revenue stream than it has been able to garner with the StoreVirtual VSA software to date, regardless of how many licenses it has distributed and the percentage that have been activated.

“There were obviously a lot of companies we could buy and that we did look at,” Miller tells The Next Platform. “But what we were really looking for was what would accelerate HPE versus our existing investment in hyperconverged. The HC 380 has a great user experience, and that is where we focused on building a OneView managed platform that also allows customers to manage other machines. Where SimpliVity really focused was on building in enterprise-class data management services from the ground up into their software defined software stack, and in this regard, bar none, they have done the best, and they have always on de-dupe and compression, and they have great technology that gets higher performance and they have sophisticated policies to control VM placement on clusters to ensure that performance.”

Here’s the plan. Within 60 days of the deal closing, HPE will have the OmniCube stack, including its FPGA accelerator, up and running on ProLiant Gen9 servers. Over time, the VSA software used in the HC 250 and HC 380 will be replaced by a revamped OmniCube stack that borrows the interfaces and ease of use features of the HC 380 and marries it to the OmniCube server-storage hybrid software. In the meantime, the existing OmniCube, HC 250, and HC 380 customers will be supported, even OmniCube setups on Dell, Cisco, Huawei, and Lenovo iron. Eventually HPE will stop selling the old stuff and start selling the new converged HC-OmniCube platform. (So that is doubly converged, then. . . ) The initial combined product will be based on that FPGA accelerator, but over the long haul, Miller says that HPE will be moving to an implementation of the OmniCube stack that does not have an external FPGA card dependency, which suggests that it will be using features of Xeon processors to accelerate in-line, always-on de-duplication and compression or that it will be making use of the Xeon-FPGA hybrids that Intel is building for hyperscalers. Miller was not at liberty to be specific, but we think HPE will go to an implementation of OmniCube that does not require FPGAs in any form and therefore can be run on any ProLiant server, and indeed, any server.

It is interesting to us that HPE’s 3PAR line of SAN storage uses accelerators to manage thin provisioning, de-duplication, and other data reduction techniques (but not data compression), but in this case it is a line of homegrown, purpose-built chips called the Thin Express ASICs, which were announced in their fifth generation back in June 2015. This ASIC also couples up to eight controllers together to create a clustered SAN that can use disk, flash, or a combination of the two. It will be interesting to see if there is any cross pollination of technologies between 3PAR and OmniCube.

In the meantime, HPE absolutely expects customers to use a mix of 3PAR SAN and OmniCube hyperconverged storage in their datacenters.

“OmniCube will be complementary to 3PAR,” says Miller. “On the roadmap, we will be working on the ability to migrate VMs between 3PAR and SimpliVity systems. Think of different use cases. Customers don’t have to decide to buy a SAN or a software-defined storage stack. They can buy both. They may have really hot applications and the VMs supporting them need the fastest performance available on array-based SANs, but over time they become less interesting and less hot and can be migrated to a hyperconverged stack. Or, conversely, the VMs that need more performance can move from OmniCube to 3PAR. So we see these as enterprise-class storage devices with slightly different attributes and enable customers to use and migrate between both.”

This enterprise angle means that we can probably expect to see the OmniCube setup expand its scalability significantly. We have said for some time that companies do not necessarily want all of their storage on a single cluster or array because of the potential liability of putting all of the bits in one bucket, but that they also do not want to have small hyperconverged clusters or SAN arrays scattered all over hither and yon, either. We think that HPE needs to ramp up the capacity of OmniCube to compete with all-flash arrays that deliver high performance and up to 1 PB or 2 PB of capacity in a single domain. That is about where storage managers get uncomfortable, and if they put 500 TB on one “device” (these are clustered systems, after all), they have plenty of headroom before they have to start breaking datasets and scattering them. When we suggested this, Miller neither confirmed nor denied anything, but said, with a laugh, that our suggestion was not stupid.
