Public clouds bring a lot of advantages to enterprises, such as more flexibility and scalability for many of their workloads, a way to avoid expensive capital costs by using someone else’s infrastructure and having someone else manage it all, and the ability to pay only for the resources they use.

That said, there also are a number of headaches that come with the cloud. There is the complexity in having to manage and secure corporate applications and data that reside in different public cloud environments, which can be in different areas of the world, as well as the fact that evaluating services from one cloud provider to another is not always an apples-to-apples comparison.

There also is the issue that not all applications can be migrated to the cloud. Companies in highly regulated industries like financial services and healthcare will want to keep some workloads on premises to ensure they remain in compliance, and other applications may be too costly or difficult to rework for the cloud to be worth the effort. All of this is fueling the rise of the hybrid cloud that we at The Next Platform have been talking about over the past several years.

The hybrid cloud also has been the driver behind public cloud services providers looking to establish themselves in on-premises datacenters, essentially to play both sides of the table. That is being done through hardware (think Amazon Web Services’ Outposts on-premises infrastructure for running cloud services), software stacks (Microsoft Azure Stack, which can run on OEM hardware in datacenters) and partnerships with established datacenter players such as VMware.

For Google Cloud, the third largest public cloud provider, that push comes in the form of Anthos, an open platform announced in April that is designed to let enterprises run their unmodified applications in the public cloud – not only Google Cloud, but also AWS and Azure – or in their own datacenters, and that uses containers, microservices and Kubernetes as key foundational technologies. Anthos relies heavily on Google Kubernetes Engine (GKE), and Google sees Anthos as not only a way for enterprises to embrace hybrid clouds, but also as a platform for developers looking to leverage those cloud-native technologies in their work. Those developers are looking not only for a rich platform on which to build their applications, but also for a way to have someone else manage it, according to Jennifer Lin, director of product management at Google Cloud.

Anthos also is an important step in Google Cloud CEO Thomas Kurian’s ongoing push to attract more enterprise business as it looks to compete more closely with AWS and Azure. While it’s not the only part of Google’s hybrid cloud play – two years ago Google and Cisco partnered to accelerate the adoption of such environments – it has become central to the company’s efforts.

“When we announced this deal, we grounded it very heavily in the container environments where the developer pain in terms of managing microservices was very obvious,” Lin tells The Next Platform. “Developers really wanted the ability to essentially connect and secure and then manage and get better diagnostics telemetry from their services. More and more customers have seen how moving from sort of a monolith to microservices is a lot about how they drive developer and operational efficiency. But it does cause some complexity.”

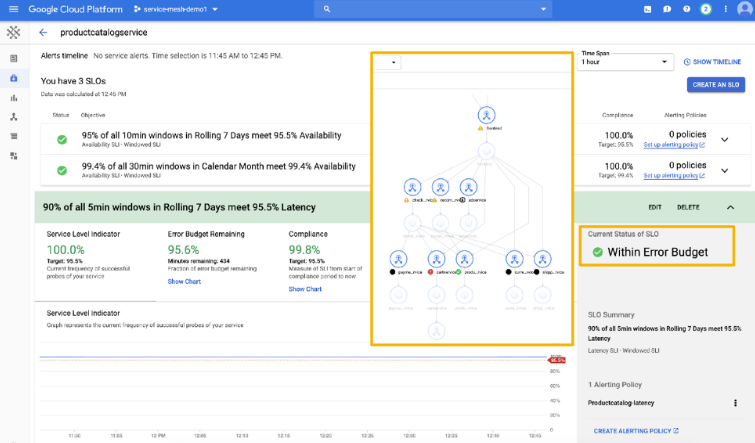

The company this week sought to ease some of that complexity with the introduction of Anthos Service Mesh, a managed service currently in beta that is built atop Google’s Istio service mesh APIs and provides a unified administrative interface for managing and securing traffic running between services. It also gives enterprises improved visibility into the application traffic to make it easier to detect and remediate problems. According to Lin, “as a service mesh, it’s all about eliminating that complexity but also increasing observability, operational agility and improving the security posture of those services themselves.”

The screenshot below gives an idea of the deep visibility from Anthos Service Mesh:

Along with Anthos Service Mesh, Google also introduced several other new features, including Cloud Run for Anthos for running stateless workloads in a fully managed Anthos environment, Config Management that now can automate and enforce company-specific policies, and Binary Authorization, which ensures that only images that are validated and verified are integrated into the managed build-and-release development process. All of these feed into the demand from organizations for an Anthos environment that can increasingly be fully managed by Google.

Lin points to KeyBank as an example of a customer that is continuing to modernize its software through the use of cloud-native technologies but that is looking for Google to manage the environment. The bank, a subsidiary of KeyCorp with $137 billion under management, announced in August that it had joined the Anthos early access program. It has been using containers and Kubernetes for the past several years, primarily with customer-facing online banking.

“They have quite deep experience with Kubernetes and alternative platforms like OpenShift from Red Hat and their priority is very much around modernization,” she says. “But obviously the governance requirements they have are actually quite stringent, so the notion of agility with governance is very important to us. And for customers like that, they had to invest in the early days with the open-source code or Intel’s managed Kubernetes environment, so they’ve learned the operational pain and how hard it is to make sure that the security updates are done in a timely fashion, that they’re producing the right level of visibility for their tenants within the cloud, that they’re making their auditors and regulators happy. All of that stuff. We’re at a level of maturity with Kubernetes. It’s been five years since [Google] open sourced it, where people are like, ‘OK I understand the value. Now I just want someone to manage it for me so I can go to the application layer and put my engineering resources into more services and applications that are business-impacting.’”

The growth of serverless computing also fits into this trend toward outsourcing the management of infrastructure. Cloud Run for Anthos also is in beta and is based on Knative, a platform designed to tie serverless with Kubernetes and pushed by Google, Red Hat, IBM (which now owns Red Hat), SAP and Dell’s Pivotal business. Cloud Run for Anthos is designed to make developers’ lives easier by letting them write code the way they always have without having to do a deep dive into Kubernetes. It integrates with Anthos, enables workloads to run in the same cluster through cloud accelerators and allows developers to run their workloads in Google Cloud or on premises and have the same experience.
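To give a sense of what "writing code the way they always have" can look like, here is a minimal sketch of a stateless HTTP service of the kind a Knative-based platform can run. It is an illustration, not Google's sample code: it assumes the platform passes the listening port through a `PORT` environment variable and falls back to 8080 locally, and the `greeting` function and the `TARGET` variable are hypothetical names chosen for this example.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def resolve_port(env):
    """Pick the listening port: assumed to arrive via PORT, else 8080 for local runs."""
    return int(env.get("PORT", "8080"))


def greeting(name):
    """Hypothetical business logic; the only part a developer would normally care about."""
    return f"Hello, {name}!"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Stateless: nothing is stored between requests, so any instance can serve any call.
        target = os.environ.get("TARGET", "World")  # TARGET is a made-up setting
        body = greeting(target).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def main():
    # Bind on all interfaces so the platform's proxy can reach the container.
    server = HTTPServer(("0.0.0.0", resolve_port(os.environ)), Handler)
    server.serve_forever()
```

Calling `main()` starts the server. Nothing in the code refers to pods, clusters or load balancers; in this model, packaging it into a container image and scaling it with demand is left to the platform.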

“A lot of folks really feel that for the past decade they’ve been mired in infrastructure complexity,” Lin says. “Developers don’t want to know anything about the infrastructure very often. They just want to write a consumer-facing function. Now how you do it essentially is not really what the developer’s passionate about, but they’re glad someone else is thinking about it.”

In addition, the business model of paying only for what is needed “is also very attractive to the developers, so we introduced per-second billing sometime back. But the ability to do that where you only pay when the function is active is an important aspect of it. From a developer perspective, they just want to deal with the user experience and the endpoint of the service itself and nothing in between. They don’t want to know about firewalls and load balancers and access controls and identity and all that stuff. That’s why what we’re trying to do is strike that balance between the developer who just wants to be optimized for moving quickly and a platform that is richer than just scheduling compute. It needs to essentially handle a lot of the linkage between what the application intent is and what the service itself is trying to offer. Within Google, we’ve got a lot of that. We have thousands of developers in a very globally distributed team and they just want to be able to check their code and move on to the next thing.”
