Memcache has become the ubiquitous way of loading objects quickly for most of the world’s largest websites and, for that matter, for plenty of smaller enterprises that need to store and retrieve objects from dense data stores.

It is therefore no surprise that all of the major cloud vendors have stepped in to provide managed Memcache services (AWS ElastiCache, Azure Cache, Google App Engine Memcache), which serve as the fast-retrieval backend for many services we rely on.

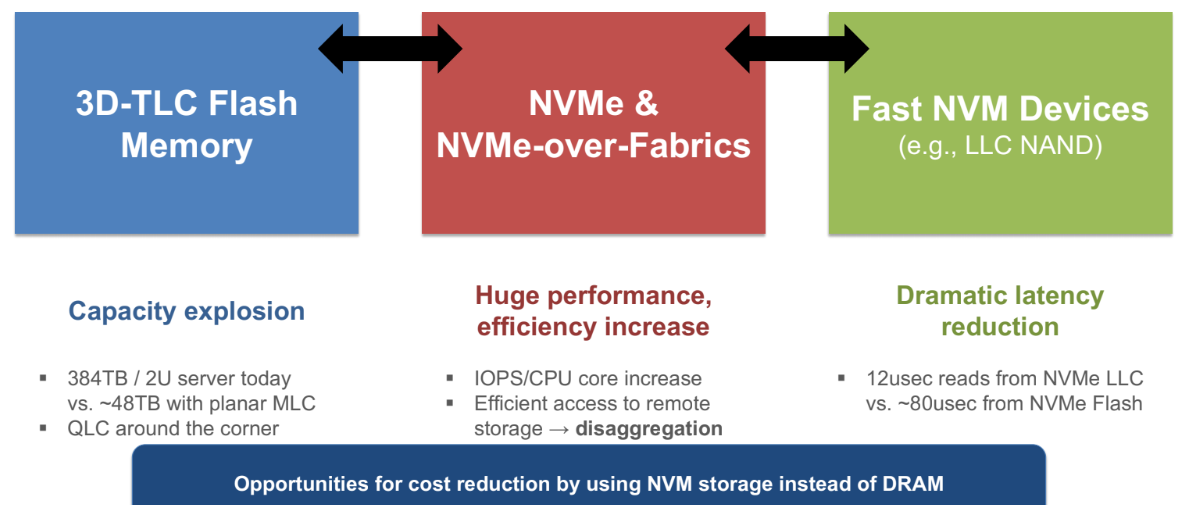

However, these services are all based on DRAM on the backend, which might be leaving some cost effectiveness on the table, especially when compared to approaches that hinge on NVMe and NVMe-over-fabrics now that the prices of these devices have settled out.

From what we can tell, IBM Research is the first to connect NVMe and Memcache, pulling this effort together into the IBM Cloud as an experimental service, which is free for now. The effort, as one of the Zurich lab researchers, Nikolas Ioannou, tells us, is based on a data store they have developed called uDepot that can tap into the performance of NVM devices.

“When compared to DRAM, users can expect roughly 20x lower costs when using Flash and 4.5x lower hardware costs when using 3DXP, without sacrificing performance. In addition, Data Store for Memcache offers higher cloud caching capacity scalability,” he adds.

On the right are NVM devices like NAND and 3D XPoint, which are valued for low latency. On the other side is ultra-dense, high-capacity flash, and in the middle is the sweet spot for boosting the cost effectiveness of Memcache: NVMe and NVMe over fabrics, for both local boosts and those over the network.

If we just think about the largest shopping and social media sites on the planet, we can see why Memcache is worth paying attention to. If you go to Facebook and request an image or any other object, and that object is in the distributed cache tier sitting behind the site’s servers, it is served by the cache directly. If there is ever a delay in those objects loading, it’s probably because the system had to go all the way back to the main persistent data service, most likely a database.
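The hit-or-miss behavior described above is commonly called the cache-aside pattern. The following is a minimal sketch of it, not the implementation used by any of the sites or services named here. `TinyCache`, `fetch`, and `slow_database_read` are hypothetical names invented for illustration, and the in-process dictionary merely stands in for a real Memcache tier.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple


class TinyCache:
    """In-process stand-in for a Memcache tier (hypothetical, illustration only)."""

    def __init__(self) -> None:
        # Maps key -> (value, expiry time on the monotonic clock).
        self._items: Dict[str, Tuple[bytes, float]] = {}

    def get(self, key: str) -> Optional[bytes]:
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            # An expired entry is treated the same as a miss.
            del self._items[key]
            return None
        return value

    def set(self, key: str, value: bytes, ttl_seconds: float) -> None:
        self._items[key] = (value, time.monotonic() + ttl_seconds)


def fetch(
    key: str,
    cache: TinyCache,
    load_from_database: Callable[[str], bytes],
    ttl_seconds: float = 60.0,
) -> Tuple[bytes, bool]:
    """Return (value, was_cache_hit) using the cache-aside pattern."""
    value = cache.get(key)
    if value is not None:
        return value, True  # served by the cache directly
    # Miss: go all the way back to the persistent store, then populate the cache.
    value = load_from_database(key)
    cache.set(key, value, ttl_seconds)
    return value, False


# Demonstration with a fake database that records how often it is read.
database_reads: List[str] = []


def slow_database_read(key: str) -> bytes:
    database_reads.append(key)
    return f"object:{key}".encode()


cache = TinyCache()
first = fetch("img-1", cache, slow_database_read)   # miss, reads the database
second = fetch("img-1", cache, slow_database_read)  # hit, database untouched
```

In this sketch the second request never reaches the database, which is the latency saving the cache tier exists to provide. A real deployment would replace `TinyCache` with a client for a Memcache service and would need to decide how to invalidate entries when the underlying data changes.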

Memcache, the technique used by developers to speed up dynamic databases on websites such as Reddit, Instagram and Wikipedia, has relied on DRAM since 2003. And while this has flourished, DRAM isn’t the best medium for the technique: it is very expensive (in 2017 its price per bit rose 47%), has limited capacity due to DRAM scaling issues, and is volatile, meaning that when it loses power it loses the data.

The other reason this might appeal to IBM Cloud users (and spark interest among other cloud providers) is security. The infamous memcached protocol hack, which used UDP port 11211 to amplify distributed denial of service (DDoS) attacks, caused widespread concern. Ioannou says that to prevent this from happening on the Data Store for Memcache, his team implemented authentication and authorization measures that secure it against DDoS and other similar attacks. “We also employ encryption in transit and avoid UDP altogether, which adds an additional layer of security since the default memcache protocol is typically run in a trusted network without any authentication method.”

Data Store for Memcache is available now in the public catalogue within the IBM Cloud as an Experimental service, with a free lite plan offering one gigabyte of cloud storage capacity for 30 days. It incorporates IAM authentication and authorization, and all data is encrypted at rest and over the wire.
