Cannibalize your own products or someone else will do it for you, as the old adage goes.

And so it is that Amazon Web Services, the largest provider of infrastructure services available on the public cloud, has been methodically building up a set of data and processing services that will allow customers to run functions against streams or lakes of data without ever setting up a server as we know it.

Just saying the words makes us a little woozy, with systems being the very foundation of the computing platforms that everyone deploys today to do the data processing that runs our modern world.

Of course, the server – meaning that physical machine that is abstracted away by hypervisors and containers – does not really go away at all, so calling this minimalist form of platform as a service, or PaaS, computing serverless is a bit of a misnomer. But from the point of view of the application programmer and the company whose operations are embodied in the functions of a serverless architecture, there is no server – virtual or physical – that they ever need to think about.

This is, perhaps, the final state of computing as we know it, with massive clouds abstracting their infrastructure services at such a high level that no one even knows there is an operating system or such things as middleware, databases, or web servers.

The heart of the serverless architecture at Amazon Web Services is a service called Lambda, which is an event-driven computational engine. The Lambda service was previewed at the re:Invent conference in November 2014 and has been gradually expanding in use and maturity, and it has spawned reactions from all of its competitors out there on the cloud, including Google Cloud Functions, which launched in February as an alpha service; Microsoft Azure Functions, which came out in March as a tech preview; and IBM Bluemix OpenWhisk, which made its debut in February as well. A number of other companies, including Iron.io and Serverless, are offering frameworks that run on private or public clouds that provide similar event-driven functionality and high-level abstraction away from the underlying infrastructure.

Here we go again, with a new computing paradigm and a slew of competing formats and ways of computing. But this time around, the incompatibilities might be relatively minor, and the resulting functions – we hesitate to call them applications – might be more portable. If so, the microservices applications composed of dozens or hundreds or thousands of such functions might be more portable than the monolithic applications encased in virtual machines that dominate the public cloud.

OK, you can stop laughing now. We just wanted to try that on to see what true portability across infrastructure might feel like. It will never happen, so best to forget it now.

But these event-driven compute services that have popped up are the logical end point in the evolution of computing, and the ultimate level of abstraction at what we will still call the infrastructure level of systems.

To get a handle on what is happening with serverless computing, we had a chat with Lowell Anderson, who is director of product marketing at AWS and the person who led the launch of the Lambda service. And as such, this is probably the easiest marketing job that Anderson has had in his many decades in the IT sector. Lambda being a compute service, you don’t provision servers or worry about capacity planning as we know it.

“All you do is write your code in whatever developer environment you have and load it into Lambda and Lambda runs that code when it is triggered by certain events,” as he succinctly put it.
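As a rough illustration of what Anderson describes, a Lambda function in Python boils down to a single entry point that receives the triggering event. The sketch below is a minimal example, not AWS sample code: the function name `handler` and the `name` field in the event are assumptions made for illustration, and the final lines simply simulate an invocation locally.

```python
import json


def handler(event, context):
    """Entry point the service would call; `event` carries the trigger payload.

    The `name` field is a hypothetical example of event data.
    """
    name = event.get("name", "world")
    # Return a small JSON response describing what was processed.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": "Hello, {}".format(name)}),
    }


if __name__ == "__main__":
    # Simulate one event locally; in Lambda, the service supplies both arguments.
    print(handler({"name": "Lambda"}, None))
```

There is no server setup, port binding, or process management in the code itself; everything outside the function body is left to the service.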

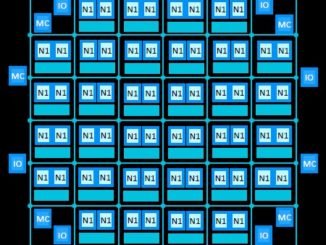

It is, of course, more complicated than that, and as we point out, a lot more complicated for AWS itself, which still needs to do capacity planning on boring things like servers, storage, and switches and provision machines with hypervisors and containers to run Lambda services. As it turns out, the Lambda service runs on top of the EC2 compute service, which has compute carved up by a homegrown variant of the Xen hypervisor, not on bare metal machines, and then has a homegrown variant of Linux containers (LXC) abstracted on top of that to host the Lambda code snippets that get activated by events. Each customer using the AWS service has its Lambda functions running in its own unique virtual machines on top of Xen to ensure isolation across customers, just like EC2 compute slices afford.

At launch time back in 2014, AWS supported Node.js, which is a server-side implementation of the JavaScript language, for code snippets. Node.js was expressly created for applications that mainly move and manipulate data, and the Node.js support for Lambda includes the V8 JavaScript engine that was initially created by Google for its Chrome browser and that significantly speeds up the processing of JavaScript. Java support was added to Lambda late last year (we presume using OpenJDK), and Python support was added earlier this year. Other languages will be added to the Lambda service over time, says Anderson, but we would not hold our breath for Google’s Go. (By comparison, Google Cloud Functions only supports Node.js at the moment, while IBM OpenWhisk does Node.js and Swift, Apple’s analog to JavaScript, and Microsoft Azure Functions supports code snippets written in Node.js, C#, F#, PHP, and Python.)

The Lambda functions can scale horizontally and automatically, with billions of operations per second possible, and do things such as serve static HTML pages directly out of S3 object storage or automatically compress images on the fly or run various kinds of calculations as data streams in from users and/or applications. The containers can be spun up very quickly, according to Anderson, and users can even “pre-warm” the functions in the containers so they start instantly.

The Price Could Be Crazy Right

Aside from not having to wrestle with buying virtual servers (whether they are EC2 instances or containers on the ECS Docker container service that AWS also sells), the other neat thing about Lambda and its serverless computing competitors is that you only pay for the compute cycles and memory used when the code is actually executing. With a virtual machine, you pay by the hour or by the minute, and even if the VM is doing nothing, you still owe the money to the cloud provider. With serverless, event-driven computing, the trend is to charge for time and sometimes memory capacity used over that time.

So, for instance, with Lambda, the smallest increment of compute when a function is running is 100 milliseconds, and the price works out to 20 cents per million requests. When Lambda launched, AWS had a timeout of 30 seconds, after which a Lambda function had to complete or it would be killed, but it has since extended that time limit to five minutes. Customers can set their own timeouts. The pricing also has a memory tiering component. So, for example, with Lambda, the free tier has 1 million free requests and an aggregate of 400,000 GB-seconds of compute time per month; after that, it is 20 cents per million requests and there are ways to scale up the memory for a higher fee if your functions are memory hogs. (You can see the detailed pricing here.) Lambda is explicitly designed to integrate with the S3 object storage, DynamoDB NoSQL data store, Kinesis streaming, and Simple Notification Service (SNS) push messaging services. (Hint: No service should have the word service in its name, but vendors do it all the time.) Microsoft’s Azure Functions pricing is very similar, and Google and IBM don’t have pricing published yet.
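To make the arithmetic concrete, here is a back-of-the-envelope sketch of a monthly bill under the figures quoted above: 100 millisecond billing increments, 20 cents per million requests, and a free tier of 1 million requests and 400,000 GB-seconds. The per-GB-second rate is not given in this article, so the default used below is an assumption and should be swapped for whatever the current price list says. The function name and parameters are hypothetical, and applying the 100 millisecond rounding to an average duration rather than to each invocation is a simplification.

```python
import math


def monthly_lambda_cost(requests, avg_duration_ms, memory_mb,
                        price_per_million_requests=0.20,
                        price_per_gb_second=0.00001667,  # ASSUMPTION: not from the article
                        free_requests=1_000_000,
                        free_gb_seconds=400_000):
    """Estimate a monthly bill in dollars from request count, duration, and memory."""
    # Duration is billed in 100 ms increments, rounded up.
    billed_ms = math.ceil(avg_duration_ms / 100) * 100
    # GB-seconds = requests x seconds per request x memory in GB.
    gb_seconds = requests * (billed_ms / 1000) * (memory_mb / 1024)
    # Only usage beyond the free tier is charged.
    billable_requests = max(0, requests - free_requests)
    billable_gb_seconds = max(0.0, gb_seconds - free_gb_seconds)
    request_cost = billable_requests / 1_000_000 * price_per_million_requests
    compute_cost = billable_gb_seconds * price_per_gb_second
    return request_cost + compute_cost


if __name__ == "__main__":
    # 5 million requests a month, 120 ms average (billed as 200 ms), 512 MB of memory.
    print(round(monthly_lambda_cost(5_000_000, 120, 512), 3))
```

In this example the function consumes 500,000 GB-seconds, of which 100,000 are billable, and 4 million of the requests are billable. A workload that stays inside the free tier comes out at zero, which is the point of paying only while code executes.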

Just like mainframes and midrange systems not running Linux or Windows Server persist in the datacenter because of legacy applications, not every application will be rewritten in a microservices approach based on containers, and not every application will be broken down into functions like those that run on Lambda. There is always a spectrum in the datacenter.

“There continues to be a set of common use cases that are well-optimized for traditional virtual servers like EC2,” says Anderson. “Applications like those from SAP, Oracle, or Microsoft need a dedicated virtual machine to execute on the cloud, and you obviously would not run SAP ERP, Microsoft SharePoint, or any of these large enterprise applications within a set of Lambda functions in a container. These applications bring a tremendous amount of value to our customers, and we see these as continuing as far as we can see into the future at this point.”

Moreover, even though AWS has offered the Elastic MapReduce (EMR) service as a hosted Hadoop setup for quite some time, there are still customers who want to set up their own Hadoop and other analytics frameworks on raw infrastructure on the AWS public cloud. As for the newer Elastic Container Service (ECS), there is increasing demand for workloads that have been migrated to Docker formats as well as batch jobs for high performance computing. So Lambda does not mean AWS is giving up on dedicated virtual machines.

“We have gotten really strong response for Lambda since it launched, in particular in two areas,” Anderson explains. “One is with data processing workloads, where we are executing code in response to changing data, whether that is files uploaded to S3 that need real-time processing or stream processing for all sorts of logs, clickstream, or ad serving data. Spark Streaming still has its advantages because it is understood and many users have already built applications for it. The other area of interest is running web back-ends to build an entirely serverless website.”
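The first use case Anderson mentions, running code when files land in S3, can be sketched as a function that walks the records in an S3 notification event and pulls out the bucket and object key. The function name `on_s3_upload` and the sample event are hypothetical; the nested `Records`/`s3`/`bucket`/`object` layout follows the S3 event notification format, in which object keys arrive URL-encoded. What happens to each file afterwards is left out.

```python
from urllib.parse import unquote_plus


def on_s3_upload(event, context):
    """Collect (bucket, key) pairs for every object named in an S3 event."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the notification, so decode before use.
        key = unquote_plus(record["s3"]["object"]["key"])
        uploaded.append((bucket, key))
    return uploaded


if __name__ == "__main__":
    # Hypothetical event with a single uploaded log file.
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "example-logs"},
                    "object": {"key": "clicks/click+stream.json"}}}
        ]
    }
    print(on_s3_upload(sample_event, None))
```

Real processing, such as parsing the log or resizing an image, would replace the simple collection step, and would have to finish within the function timeout described earlier.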

Of course, none of this is really serverless, as we said. But it could turn out, for instance, that the combination of S3 for storage, Kinesis for streaming data, and Lambda for certain kinds of processing offers the scale and ease of programming and management that, for new workloads, competes with Spark and its streaming extensions, or even the combination of Hadoop and Kafka for batch-oriented jobs. (It is telling that Amazon’s own Alexa service, which competes in a way with Apple’s Siri, is built in part on Lambda.)

The issue will be how much it costs to do work, and we are pretty sure that AWS is not only willing but able to charge a premium for making it simpler. This surely worked with EC2 and EBS and other services that are arguably more expensive than what many datacenters can do on premises. But by charging for usage rather than time in a strict sense, Amazon is giving back, too. How it all balances out remains to be seen, and we will start doing the math to try to figure that out. We do suspect that there will be elastic demand for Lambda once people figure out how and where to use it, and that means AWS can make it up in volume, keeping its server infrastructure running at even higher utilization as it adds a new type of ephemeral workload.

You can see the shape of some Lambda reference architectures here.
