Finally, we get to test out how well or poorly a well-designed Arm server chip will do in the datacenter. And we don’t have to wait for any of the traditional and upstart server chip makers to convince server partners to build and support machines, and the software partners to get on board and certify their stacks and apps to run on the chip. Amazon Web Services is an ecosystem unto itself, and it owns a lot of its own stack, so it can just mic drop the Graviton2 processor on the stage at re:Invent in Las Vegas and dare Marvell, Ampere, and anyone else who cares to try to keep up.

And that is precisely what Andy Jassy, chief executive officer of AWS, did in announcing the second generation of server-class Arm processors that the cloud computing behemoth has created with its Annapurna Labs division, making it clear to Intel and AMD alike that it doesn’t need X86 processors to run a lot of its workloads.

It’s funny to think of X86 chips as being a legacy workload that costs a premium to make and therefore costs a premium to own or rent, but this is the situation that AWS is itself setting up on its infrastructure. It is still early days, obviously, but if even half of the major hyperscalers and cloud builders follow suit and build custom (or barely custom) versions of the Arm Holdings Neoverse chip designs, which are very good indeed and on a pretty aggressive cadence and performance roadmap, then a representative portion of annual X86 server chip shipments could move from X86 to Arm in a very short time – call it two to three years.

Microsoft has made no secret that it wants to have 50 percent of its server capacity on Arm processors, and has recently started deploying Marvell’s “Vulcan” ThunderX2 processors in its “Olympus” rack servers internally. Microsoft is not talking about the extent of its deployments, but our guess is that it is on the order of tens of thousands of units, which ain’t but a speck against the millions of machines in its server fleet. Google has dabbled in Power processors for relatively big iron and has done some deployments, but again we don’t know the magnitude. Google was rumored to be the big backer that Qualcomm had for its “Amberwing” Centriq 2400 processor, and there are persistent whispers that it might be designing its own server and SmartNIC processors based on the Arm architecture, but given the licensing requirements, it seems just as likely that Google would go straight to the open source RISC-V instruction set and work to enhance that. Alibaba has dabbled with Arm servers for the past three years, and in July announced its own Xuantie 910 chip, based on RISC-V. Huawei Technology’s HiSilicon chip design subsidiary launched its 64-core Kunpeng 920, which we presume is a variant of Arm’s own “Ares” Neoverse N1 design and which we presume will be aimed at Chinese hyperscalers, cloud builders, telcos, and other service providers. We think that Amazon’s Graviton2 probably looks a lot like the Kunpeng 920, in fact, and both probably borrow heavily from the Arm Ares design. As is the case with all Arm designs, they do not include memory controllers or PCI-Express controllers, which have to be designed or licensed separately from third parties.

This time last year, AWS rolled out the original Graviton Arm server chip, which had 16 vCPUs running at 2.3 GHz; it was implemented in 16 nanometer processes from Taiwan Semiconductor Manufacturing Corp. AWS never did confirm if the Graviton processor had sixteen cores with no SMT or eight cores with two-way SMT, but we think it does not have SMT and that it is just a stock “Cosmos” core, itself a tweaked Cortex-A72 or Cortex-A75 core, depending. The A1 instances on the EC2 compute facility at AWS could support up to 32 GB of main memory and had up to 10 Gb/sec of network bandwidth coming out of its server adapter and up to 3.5 Gb/sec of Elastic Block Storage (EBS) bandwidth. We suspect that this chip had only one memory controller with two channels, something akin to an Intel Xeon D aimed at hyperscalers. This was not an impressive Arm server chip at all, and more akin to a beefy chip that would make a very powerful SmartNIC.

“In the history of AWS, a big turning point for us was when we acquired Annapurna Labs, which was a group of very talented and expert chip designers and builders in Israel, and we decided that we were going to actually design and build chips to try to give you more capabilities,” Jassy explained in his opening keynote at re:Invent. “While lots of companies, including ourselves, have been working with X86 processors for a long time – Intel is a very close partner and we have increasingly started using AMD as well – if we wanted to push the price/performance envelope for you, it meant that we had to do some innovating ourselves. We took this to the Annapurna team and we set them loose on a couple chips that we wanted to build that we thought could provide meaningful differentiation in terms of performance and things that really mattered and we thought people were really doing it in a broad way. The first chip that they started working on was an Arm-based chip that we called our Graviton chip, which we announced last year as part of our A1 instances, which were the first Arm-based instances in the cloud and these were designed to be used for scale out workflows, so containerized microservices and web-tier apps and things like that.”

The A1 instances have thousands of customers, but as we have pointed out in the past and just now, the original Graviton is not a great server chip in terms of its throughput, at least not compared to its peers. But AWS knew that, and so did the rest of us. This was a testing of the waters.

“We had three questions we were wondering about when we launched the A1 instances,” Jassy continued. “The first was: Will anybody use them? The second was: Will the partner ecosystem step up, support the tool chain required for people to use Arm-based instances? And the third was: Can we innovate enough on this first version of this Graviton chip to allow you to use Arm-based chips for a much broader array of workloads? On the first two questions, we’ve been really pleasantly surprised. You can see this on the slide, the number of logos, loads of customers are using the A1 instances in a way that we hadn’t anticipated and the partner ecosystem has really stepped up and supported our base instances in a very significant way. The third question – whether we can really innovate enough on this chip – we just weren’t sure about and it’s part of the reason why we started working a couple of years ago on the second version of Graviton, even while we were building the first version, because we just didn’t know if we’re going to be able to do it. It might take a while.”

Chips tend to, and from what little we know, the Graviton2 is much more of a throughput engine and can also, it looks like, hold its own against modern X86 chips at the core level, too, where single thread performance is the gauge.

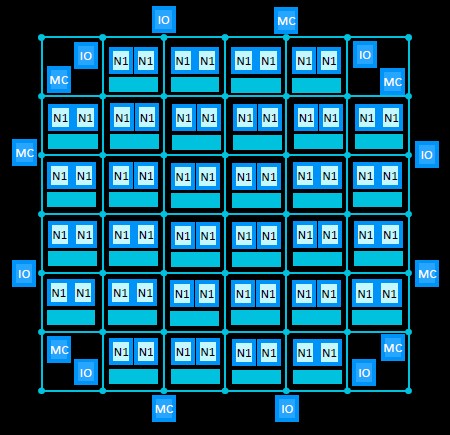

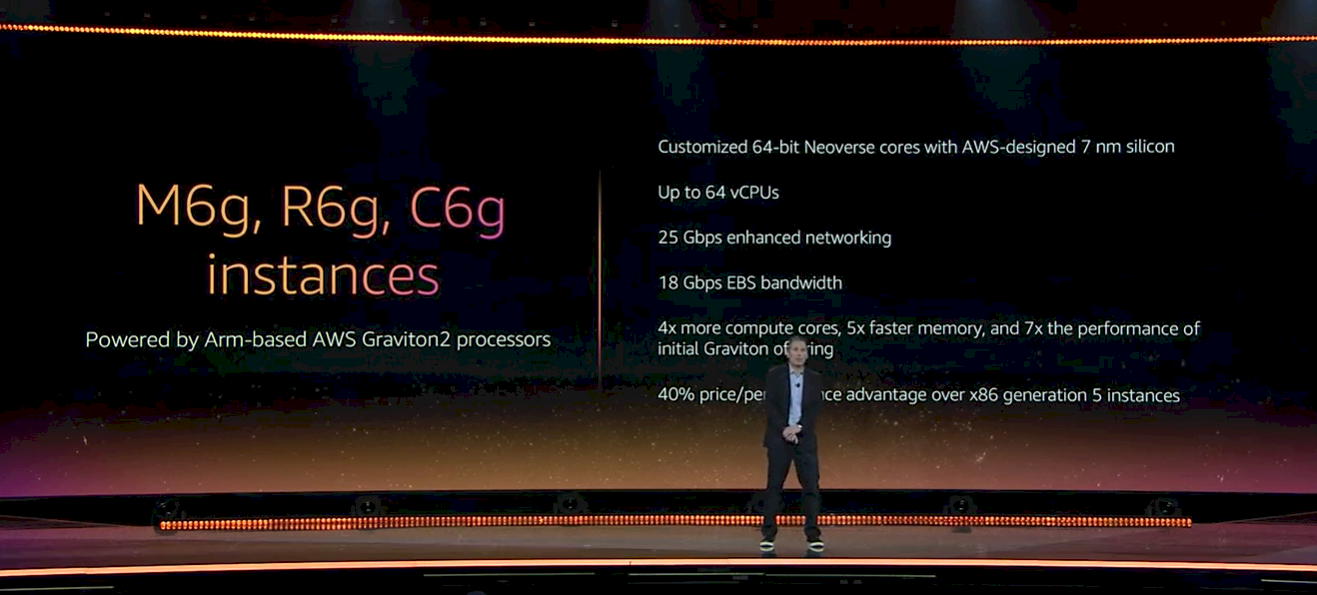

The Graviton2 chip has over 30 billion transistors and up to 64 vCPUs – and again, we think these are real cores and not the thread count in half the number of cores. We know that Graviton2 is a variant of the 7 nanometer Neoverse N1, which means it is a derivative of the “Ares” chip that Arm created to help get customers up to speed. The Ares Neoverse N1 has a top speed of 3.5 GHz, with most licensees driving the cores, which do not have simultaneous multithreading built in, at somewhere between 2.6 GHz and 3.1 GHz, according to Arm. The Ares core has 64 KB of L1 instruction cache and 64 KB of data cache, and the instruction caches across the cores are coherent on a chip. (This is cool.) The Ares design offers 512 KB or 1 MB of private L2 cache per core, and the core complex has a special high bandwidth, low latency pipe called Direct Connect that links the cores to a mesh interconnect that links all of the elements of the system on chip together. The way Arm put together Ares, it can scale up to 128 cores in a single chip or across chiplets; the 64-core variant had eight memory controllers and eight I/O controllers and 32 core pairs with their shared L2 caches.

We think Graviton2 probably looks a lot like the 64-core Ares reference design with some features added in. One of those features is memory encryption, which is done with 256-bit keys that are generated on the server at boot time and that never leave the server. (It is not clear what encryption technique is used, but it is probably AES-256.)

Amazon says that the Graviton2 chip can deliver 7X the integer performance and 2X the floating point performance of the first Graviton chip. That first stat makes sense at the chip level and the second stat must be at the core level or it makes no sense. (AWS was vague.) Going from 16 cores to 64 cores gives you 4X more integer performance, and moving from 2.3 GHz to 3.2 GHz would give you another 39 percent on top of that, or about 5.6X overall; going all the way up to 3.5 GHz would instead give you about 52 percent, yielding roughly 6X overall. The rest would be improvements in cache architecture, instructions per clock (IPC), and memory bandwidth across the hierarchy. Doubling up the width of floating point vectors is easy enough and normal enough. AWS says further that the Graviton2 chip has per-core caches that are twice as big and additional memory channels (it almost has to by definition) and that these features together allow a Graviton2 to access memory 5X faster than the original Graviton. Frankly, we are surprised that it is not more like 10X faster, particularly if Graviton2 has eight DDR4 memory channels running at 3.2 GHz, as we suspect that it does.
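
The back-of-envelope arithmetic above can be sketched in a few lines of Python. This is only an illustration under the same assumptions the paragraph makes: throughput scales linearly with core count and clock speed, and the Graviton2 clock, which AWS has not disclosed, sits somewhere between 3.2 GHz and 3.5 GHz. The function names are ours and purely illustrative.

```python
# Figures quoted in the text for the two chips.
GRAVITON_CORES = 16
GRAVITON2_CORES = 64
GRAVITON_GHZ = 2.3
CLAIMED_INTEGER_GAIN = 7.0  # AWS's "7X" integer claim


def throughput_scaling(graviton2_ghz: float) -> float:
    """Core-count ratio times clock ratio, assuming perfectly linear scaling."""
    core_ratio = GRAVITON2_CORES / GRAVITON_CORES
    clock_ratio = graviton2_ghz / GRAVITON_GHZ
    return core_ratio * clock_ratio


def residual_gain(graviton2_ghz: float) -> float:
    """Part of the claimed gain left for IPC, cache, and memory improvements."""
    return CLAIMED_INTEGER_GAIN / throughput_scaling(graviton2_ghz)


# The two clock speeds are hypothetical; the real Graviton2 clock is unknown.
for ghz in (3.2, 3.5):
    print(f"{ghz} GHz: {throughput_scaling(ghz):.2f}X from cores and clocks, "
          f"{residual_gain(ghz):.2f}X left to explain")
```

Under these assumptions, whatever cores and clocks do not cover, somewhere between roughly 1.15X and 1.26X, is what the architectural improvements would have to deliver to reach the claimed 7X.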

Here is where it gets interesting. AWS compared a vCPU running on the current M5 instances to a vCPU running on the forthcoming M6g instances based on the Graviton2 chip. AWS was not specific about what test was used on what instance configuration, so the following data could be a mixing of apples and applesauce and bowling balls. The M5 instances are based on Intel’s 24-core “Skylake” Xeon SP-8175 Platinum running at 2.5 GHz; this chip is custom made for AWS, with four fewer cores and a slightly higher clock speed (400 MHz) than the stock Xeon SP-8176 Platinum part. Here is how the Graviton2 M6g instances stacked up against the Skylake Xeon SP instances on a variety of workloads on a per-vCPU basis:

- SPECjvm 2008: +43 percent (estimated)

- SPEC CPU 2017 integer: +44 percent (estimated)

- SPEC CPU 2017 floating point: +24 percent (estimated)

- HTTPS load balancing with Nginx: +24 percent

- Memcached: +43 percent performance, at lower latency

- X.264 video encoding: +26 percent

- EDA simulation with Cadence Xcellium: +54 percent
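
One rough way to boil the list above down to a single number is a geometric mean of the per-vCPU speedup ratios. This is our own arithmetic on the figures AWS showed, not an AWS number, and it mixes estimated SPEC results with application tests, so treat it as a sketch rather than a benchmark result. The dictionary keys are just labels.

```python
from statistics import geometric_mean

# Per-vCPU gains of M6g over M5, in percent, as listed above.
uplift_percent = {
    "SPECjvm 2008": 43,
    "SPEC CPU 2017 integer": 44,
    "SPEC CPU 2017 floating point": 24,
    "HTTPS load balancing with Nginx": 24,
    "Memcached": 43,
    "X.264 video encoding": 26,
    "EDA simulation with Cadence Xcellium": 54,
}


def mean_speedup(gains_percent) -> float:
    """Geometric mean of the speedup ratios (1.43 for +43 percent, and so on)."""
    ratios = [1 + gain / 100 for gain in gains_percent]
    return geometric_mean(ratios)


overall = mean_speedup(uplift_percent.values())
print(f"Geometric mean speedup per vCPU: {overall:.2f}X")
```

A geometric mean is used because these are ratios; a plain average of the percentages would give slightly more weight to the largest gains.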

Remember: These comparisons are pitting a core on the Arm chip against a hyperthread (with the consequent reduction in single thread performance to boost the chip throughput). These are significant performance increases, but AWS was not necessarily putting its best Xeon SP foot forward in the comparisons. The EC2 C5 instances are based on “Cascade Lake” Xeon SP processors, with an all core turbo frequency of 3.6 GHz, and it looks like they have a pair of 24-core chips with HyperThreading activated to deliver 96 vCPUs in a single image. The R5 instances are based on Skylake Xeon SP-8000 series chips (precisely which one is unknown) with cores running at 3.1 GHz; it looks like these instances also have a pair of 24-core chips with HyperThreading turned on. These are both much zippier than the M5 instances on a per vCPU basis, and more scalable in terms of throughput across the vCPUs, too. It is very likely that the extra clock speed on these C5 and R5 instances would close the per vCPU performance gap. (It is hard to say for sure.)
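
The vCPU accounting behind that caveat is simple enough to sketch in Python. The Xeon configuration is the one inferred in the paragraph above; the single-socket, no-SMT Graviton2 configuration is our working assumption, since AWS has not confirmed it. The helper name is illustrative.

```python
def vcpus(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """A vCPU is one hardware thread, so count threads across all sockets."""
    return sockets * cores_per_socket * threads_per_core


# Two 24-core Xeon SPs with HyperThreading on, as inferred for C5 and R5.
xeon_cores = 2 * 24
xeon_vcpus = vcpus(sockets=2, cores_per_socket=24, threads_per_core=2)

# Assumption: one 64-core Graviton2 with no SMT behind the largest M6g instance.
graviton2_cores = 1 * 64
graviton2_vcpus = vcpus(sockets=1, cores_per_socket=64, threads_per_core=1)

# Physical cores standing behind each vCPU on the two platforms.
xeon_cores_per_vcpu = xeon_cores / xeon_vcpus
graviton2_cores_per_vcpu = graviton2_cores / graviton2_vcpus

print(f"Xeon SP: {xeon_vcpus} vCPUs, {xeon_cores_per_vcpu} cores per vCPU")
print(f"Graviton2: {graviton2_vcpus} vCPUs, {graviton2_cores_per_vcpu} cores per vCPU")
```

If the assumption holds, each Graviton2 vCPU has a whole core to itself while each Xeon vCPU shares a core with a sibling thread, which is why a per-vCPU comparison flatters the Arm chip.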

The main point here is that we suspect that AWS can make processors a lot cheaper than it can buy them from Intel – 20 percent is enough of a reason to do it, but Jassy says the price/performance advantage is around 40 percent. (Presumably that is comparing the actual cost of designing and creating a Graviton2 against what we presume is a heavily discounted custom Skylake Xeon SP used in the M5 instance type.) And because of that AWS is rolling out Graviton2 processors to sit behind Elastic MapReduce (Hadoop), Elastic Load Balancing, ElastiCache, and other platform-level services on its cloud.

For the rest of us, there will be three different configurations of the Graviton2 chips available as instances on the EC2 compute infrastructure service:

- General Purpose (M6g and M6gd): 1 to 64 vCPUs and up to 256 GB of memory

- Compute Optimized (C6g and C6gd): 1 to 64 vCPUs and up to 128 GB of memory

- Memory Optimized (R6g and R6gd): 1 to 64 vCPUs and up to 512 GB of memory

The “g” designates the Graviton2 chip and the “d” designates that it has NVM-Express flash for local storage on the instance. All of the instances will have 25 Gb/sec of network bandwidth and 18 Gb/sec of bandwidth for the Elastic Block Storage service. There will also be bare metal versions, and it will be interesting to see if AWS implemented the CCIX interconnect to create two-socket or even four-socket NUMA servers or stuck with a single-socket design.

The M6g and M6gd instances are available now, and the compute and memory optimized versions will be available in 2020. The chip and the platform and the software stack are all ready, right now, from the same single vendor. When is the last time we could say that about a server platform? The Unix Wars. . . . three decades ago.
