We hear the rumors and scuttlebutt, just like many of you probably do. This hyperscale company is testing one ARM server chip as a possible alternative to Intel Xeons, or that hyperscaler is tire kicking a different ARM chip with its applications. But for a bunch of different reasons, no ARM server chip maker has landed that big deal that will be a tipping point, that will convince the world that ARM chips can compete head-to-head and toe-to-toe in the datacenter against the Xeons and their allies, the Atoms and the Xeon Phis.

With the widest and deepest processor lineup that we have ever seen from Intel, including custom chips that hyperscalers, cloud builders, and system makers can have cooked up by the world’s largest chip maker, why do any of these chip buyers care if there is an alternative to Intel chips for compute, storage, and networking workloads? We have a fairly homogeneous datacenter, for the first time since perhaps the 1960s. Isn’t this a good thing, with the X86 architecture being ubiquitous and software able to run across a wide variety of machine types and sizes?

The reason most people want – and expect – competition for Intel’s Xeons is simple: Companies always want a second source, and they always want options for key components, and that is because competition drives innovation up and prices down.

It looks like 2016 could be the year when some of these 64-bit ARM chips finally get some traction in the datacenter beyond prognostications, science projects, and prototypes. But we have heard this before. The market has been waiting years for an ARM server chip that sells in volume and is put to the test in the real world.

The Waiting Is The Hardest Part

Since late 2011, when Calxeda made a big splash with its 32-bit EnergyCore processors and their use in Hewlett-Packard’s prototype “Redstone” hyperscale machines (the predecessors to the current “Moonshot” systems), the market has been waiting for a 64-bit ARM server chip that has the right properties and software support for deployment in datacenters. The reason is simple: Companies always want second sources and options for their key components. The world was easier for large-scale system buyers when Opterons were competing well against Xeons, but frankly, since 2009, Intel has cranked up the engineering effort and made it very tough for anyone to crack into the datacenter that was not already in there.

Calxeda went bust two years later before it got its own 64-bit ARM server chips to market. Nvidia was working on its own “Denver” ARM server chips to mash up with its Tesla GPUs to make powerful hybrid compute elements for systems back in 2011, but backed away from this to attack the datacenter in different ways (including but not limited to the OpenPower partnership with IBM and Mellanox Technologies) in the summer of 2014. (The Denver designs did make it into Tegra processors for client devices, by the way, and Nvidia is pairing its GPUs with other ARM server chips as well.) Samsung Electronics, which was widely rumored to be building a server chip in its Austin facilities in late 2012, apparently pulled the plug on its ARM server chip efforts around the same time.

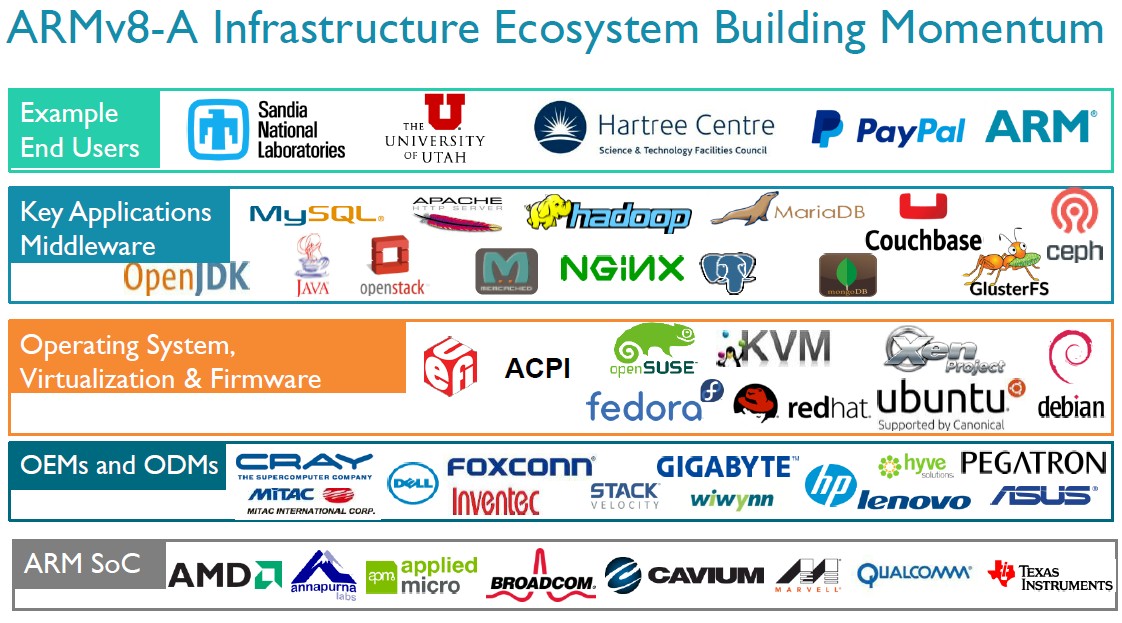

Others have stepped up and joined AMD and Applied Micro in the ARM server fray – including Broadcom, Cavium Networks, Qualcomm, HiSilicon, and Phytium Technology. Marvell and Texas Instruments are still building ARM chips that can do server duty, as are FPGA makers Xilinx and Altera (the latter of which is in the process of being acquired by Intel). Samsung is also seeing some action in HPC environments with the ARM chips it aims at client devices. Cray and Lenovo, as we have previously reported, are doing HPC prototypes based on Cavium ARM chips. One wildcard is Annapurna Labs, an ARM chip designer that was acquired by Amazon in January 2015. Another possible entrant into the ARM server fray is EZchip, which was just acquired by Mellanox last week and which had bought massively multicore chip maker Tilera. (Tilera had moved from a custom RISC core to an ARMv8 core with its latest 100-core Tile-Mx processors, aimed at network jobs but also possibly a sweet server chip.)

We will explore what each of these companies is doing in detail, but there is a larger problem here, and one that early ARM chip makers learned, along with AMD with its Opterons: You cannot give Intel years to formulate an offensive and defensive strategy against a competitive threat.

After its run-in with the AMD Opterons a decade ago, where the upstart chipmaker was able to garner a 25 percent share of the server space for a short period of time while Intel was trying to push 64-bit Itaniums and forestall 64-bit Xeons to help prop up the Itaniums, Intel shifted gears and brought to market better Xeon designs – these were the “Nehalem” generation – just as the Great Recession was building steam and just as AMD was having issues with its Opterons. And since that time, Intel has put out generation after generation of Xeons, with progressively more compute and memory capacity, sophisticated power management, and integrated controllers of various kinds that make it very hard for Opterons to compete. Which is why AMD essentially gave up on servers for a few years, as it has recently admitted.

As for the potential competitive threat from ARM processors, Intel took it very seriously from the beginning and forged server-class versions of its Atom processors aimed at microserver compute and storage and network controller jobs, and it is getting ready to ship its “Broadwell” Xeon D family of system-on-chip designs aimed at hyperscalers, which was announced back in March. And Intel still takes the ARM threat very seriously, but does not seem all that concerned.

“One of the things that the ARM camp has going for it is that it certainly has numbers of vendors,” Jason Waxman, the general manager of the Cloud Platforms Group, explained at the recent Data Center Day hosted by Intel for Wall Street analysts. “They are all jockeying for position, and we keep a close eye on them. We are now in multiple years of watching where it is that they are going to try and get early deployments. And we expect that this is happening, that there will be evaluations. Someone is always going to look and kick the tires on something new. So there are always press articles about the next deployment. I think our goal is to try and make sure that investors ask, are they real deployments or are they the proof-of-concept/eval type of thing. There are certainly a lot of evaluations. So far we have not seen any big or broad deployments.”

By the way, Intel’s hegemony in the datacenter when it comes to processors for compute and storage is not just under threat from ARM. Power chips designed by IBM and its OpenPower partners such as Suzhou PowerCore could get traction, and homegrown processors and DSP accelerators being made by the Chinese military-supercomputing establishment are something the company is keeping its eye on, too. But ARM is perhaps the biggest threat, considering how many companies are eager to take a slice out of Intel’s datacenter business. Any company that can get around 50 percent operating profits selling hardware, as Intel does, is going to be a target. The ability to customize ARM chips for myriad jobs spanning small client devices up to large systems is one reason why Intel has so many custom SKUs in its processor product line. The company is using hyperscalers and cloud builders to perfect its custom Xeon chip design and to appease these top-shelf customers, who might be the first to leave the Xeon fold for some or all of their workloads should the right ARM chips and system components be delivered reliably, in volume, and with compelling price and performance advantages.

Those are big ifs, no doubt about it.

The Hardware Is Hard, Too

It is important to remember that the three big hyperscalers – Google, Amazon, and Microsoft – all have ARM-based client devices that they sell to consumers and deep experience in creating Linux or Windows code to run atop them, as well as deep experience with the X86 instruction set running Linux or Windows. (Apple had a 64-bit chip adhering to the ARMv8 specification out the door ahead of ARM Holdings, the company that shepherds and licenses the ARM instruction set and its own chip designs. The company has not shown any desire to be in the server business after backing out years ago, but that could change, particularly if it decides to build machines for its own use.)

The hyperscalers control their own software stacks from the operating system kernel all the way up through their databases, data stores, caching layers, middleware, cluster management, workload scheduling, and application software, and they are the companies that will have the easiest time porting their code over to a new architecture if an advantage can be had.

This is one reason why Google is taking a leading role in the OpenPower Foundation and helping open up the Power architecture, something that IBM should have done long before it lost the Apple PC business to Intel and before its own Unix system business went into decline. Had IBM done OpenPower many years ago, Power chips could have been an alternative on smartphones and handhelds and carved out a bigger piece in PCs and servers, too. (IBM was considering a push into adjacent markets way back in the mid-2000s, but did not execute well.) After being cautious about revealing what it was doing with prototype Power8 servers, Urs Hölzle, senior vice president of the Technical Infrastructure team at Google, told The Next Platform back in April that the company would switch architectures from X86 to Power, even for one generation, even for a 20 percent advantage. Google has not said it has Power8 machines in production, by the way, but it has said that it tries its stack of code on various architectures to prevent “bit rot” and to make sure it is getting the best value in terms of performance per watt and raw performance.

“From an Intel strategy perspective, our goal is to make sure that we deliver the best value across every workload where we possibly can, and we just literally don’t leave any seams,” Waxman explained to the Wall Streeters. “And that’s why we have Atom-based SoCs to be competitive there. In the midmarket, we have got a whole range of Xeon E5 SKUs. And so every time I see an ARM benchmark that comes out, I look and I say you know what, I have a SKU that’s lower cost, higher performance, better power, and I think we have something that can go meet that customer’s needs.”

Waxman also contended that the hyperscalers and cloud service providers are “buying up the stack,” as Intel has talked about in the past, which means they are buying chips with higher clock speeds, more cache, or more cores to get the most oomph for their particular workloads. Waxman took a jab at the ARM suppliers, pointing out that “the original ARM hypothesis was that you could win on low power and low performance, but the reality is that is opposite of where they are buying.” True enough, Facebook open sourced its original “Group Hug” microservers with a variety of ARM and Atom processors, and found in production that they did not have enough performance to do the jobs it aimed them at. The “Yosemite” microservers based on the Xeon D processors do have what Facebook needs and are going into production, and by the way, the Yosemite server design was created explicitly to be processor agnostic and specifically has the space, power, and thermal capacity to support Xeon, ARM, and Power processors.

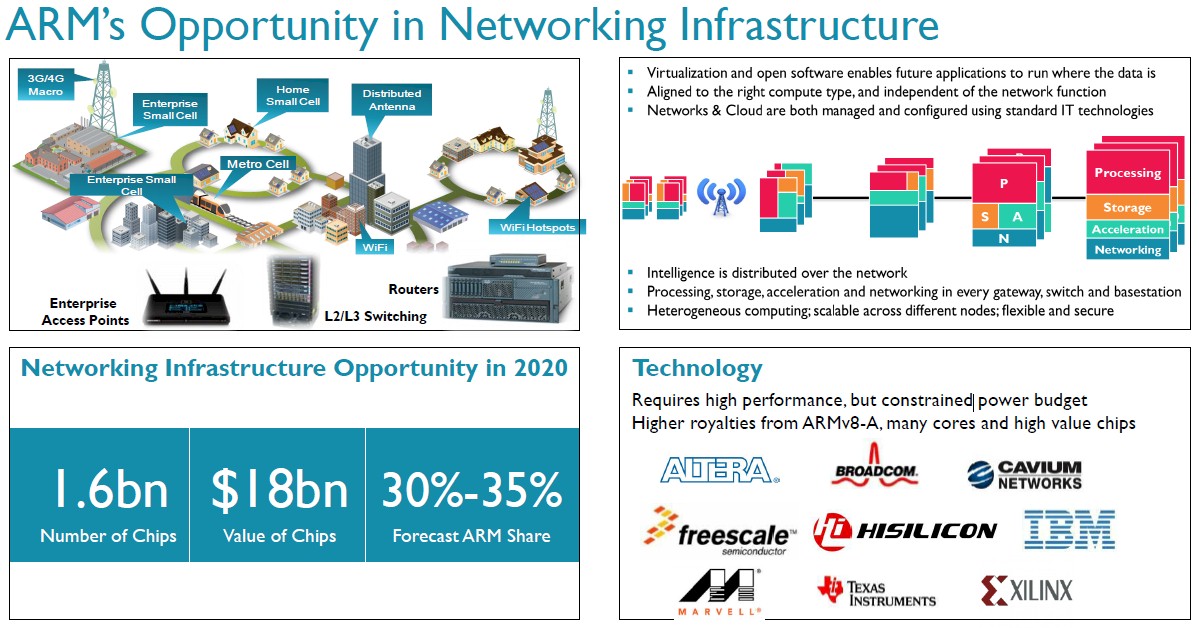

“I suspect that ARM’s initial success in the datacenter will come from switches and storage not servers running traditional operating systems and applications,” Nathan Brookwood, semiconductor analyst at Insight64, tells The Next Platform. “And if you are not Intel or AMD, and if you want to build an SoC for network or storage jobs, then your only choice is ARM.”

But as Brookwood told us, in something of a wry understatement that we both had a chuckle about, “it is really tough to crack into the server market.” Historically, the attack in the datacenter always comes from below. We started with mainframes, which were surrounded by and somewhat replaced by proprietary minicomputers. Then came the Unix revolution with open systems software and RISC-based machines, and after that came client/server, with relatively intelligent clients and collaboration between servers and clients. And in the background, Intel beefed up some desktop PC chips for servers, called the Pentium Pro, back in 1995 – after customers had been using PCs as servers for many years, mind you, but in the front office on local area networks – and started its direct assault on the datacenter.

Intel had zero percent market share in the datacenter twenty years ago, and now X86 processors account for 99 percent shipment share and 80 percent revenue share on servers (nearly all of that is Intel Xeons). Intel says it has close to 80 percent shipment share on storage arrays; we do not know what the revenue share is for X86-based storage arrays and storage servers, but it has to be pretty high. Intel is pushing its own network ASICs and X86 chips into networking devices, too, and wants to grow this business. But our larger point is that these transitions take time, and that the ARM collective has the best chance of competing against Intel in the datacenter. (That said, we think that OpenPower can win some very large installations among hyperscalers and HPC shops and that IBM can continue to sell Power machines to its AIX and IBM i enterprise customer bases.)

The Software Is Actually The Hardest Part

ARM Holdings has been working on 64-bit processing and memory addressing since 2007 and delivered the 64-bit ARMv8 specification in October 2011, which is when all of the chatter about ARM servers really started to rise to the level of cacophony. The shuttering of Calxeda in December 2013 and difficult ramps by AMD with its “Seattle” and Applied Micro with its “X-Gene” chips, and Nvidia and Samsung bowing out of ARM servers, have cooled the enthusiasm a bit, but perhaps back to sensible levels, which is a good thing. Other equally credible and more committed players – Broadcom, Cavium, and Qualcomm – have come onto the field.

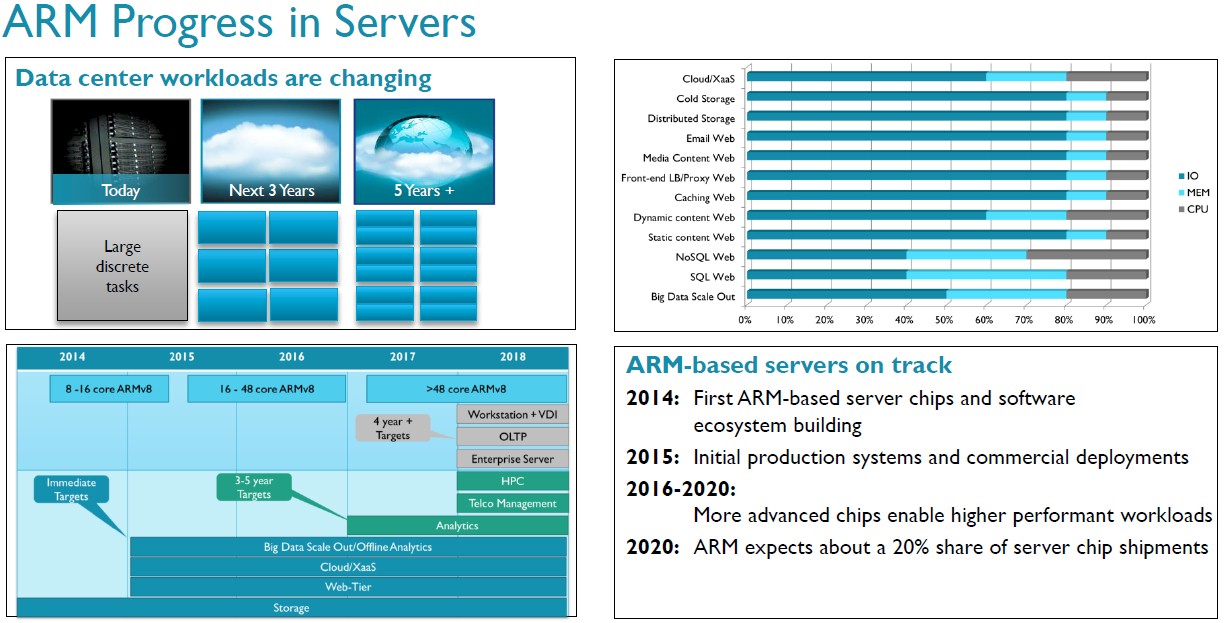

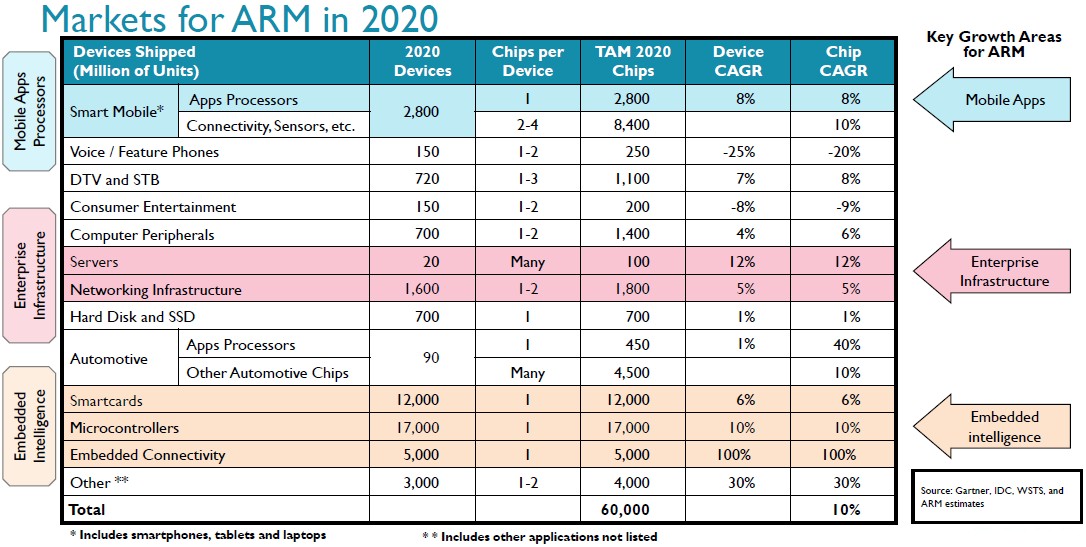

“There are initial shipments, and obviously it is relatively small,” concedes Jeff Underhill, director of server programs at ARM Holdings. “With all of these ODMs and OEMs and the software ecosystem maturing, we are right at the cusp of that knee in the curve, if you will. Next year, we will see ramps and deployments that are coming out of the pipeline from proofs of concept this year, and we are expecting ARM to be 15 percent to 20 percent of the server market in the 2020 timeframe. We are heavily invested in this area, and there is a lot of goodness going on right now.”

The biggest issue with ARM servers, once credible 64-bit chips with competitive performance and price compared to Xeon and Atom processors are out, is software. Both the systems software stack and applications need to be ported to what will end up being the popular processors, and software companies have not had to worry about anything but Xeons (more or less) for the better part of six years now. Adding ARM to the mix makes each release more expensive, and most software makers are loath to do this until they know there will be sufficient volumes to justify the software engineering effort. Of course, without such software, it is hard to get enterprises lined up. Which is why hyperscalers that control their own software are the obvious first volume customers for ARM server chips.

All of this takes time, but the ice will break once enough machines are in the ecosystem, enough commercial software is ported over to the ARMv8 architecture, and enough companies can see the benefits of an ARM architecture – should they pan out, as many anticipate.

As we have pointed out before, the IBM PC launched in 1981 with an Intel 8088 processor, and it took fourteen years for that chip to evolve into the Pentium Pro server processor. Because of the high cost of proprietary and Unix systems, the widespread adoption of the upstart Windows operating system in enterprise networks, and the rise of Linux at the end of the 1990s during the dot-com boom, Intel very quickly saw its share of the datacenter processor pie grow. But it started with a base of business on PCs and on local area networks, which gave it the fuel to break into the glass house. It looks like ARM will have to do something similar, with intellectual property, expertise, and volumes coming from client, networking, and storage devices and breaking into servers from an oblique angle. Similar to, but not the same as, the route that Intel itself took.

In the next parts in this ARM server series, we will look at the status of the 64-bit ARM server chip makers and their products, and take a deeper look at the evolving software stack for these machines as well as the potential early use cases for enterprise, hyperscale, HPC, and cloud customers.

ARM’s only chance is that we are approaching the end of the current technology S-curve in silicon-based substrates for computation. So to squeeze more performance out, people look for different wheels and knobs to turn.

But I still have doubts that their micro-architecture can actually squeeze more performance out per watt than x86. So far there hasn’t been any solid proof that this is the case.

But if someone is really keen to enter and dominate the server market in the next decade, he has to look at the next big thing that replaces the current silicon paradigm or find a totally new way of computing (reversible computing would be a good example).

https://www.scaleway.com/

Works great.

The Scaleway machines are not using 64-bit processors, but look like a cool design. When do you plan to have 64-bit, and which one do you plan to adopt?

Is it hard to guess where things will be 14 years later? I don’t think ARM can even last that many years, because you can’t last long if you are not number 1. That is what comes with being number 2.

Software is a very big problem for ARM, and hardware is a big one, too. The deeper issue is that ARM has stalled in its progress over the last two to three years, hoping that progress in fabs would bring it a small advance, and ARM can’t succeed even with a 100 percent improvement in performance per watt, seriously.

I think companies go to ARM only for a price war; no other reason can drive success, and for that they have something better in Atom. Quality? That is really a problem in servers if you want to beat the mission-critical Xeon. It is a hard job in high-performance server workloads.

Thanks!