Server maker Lenovo might have just shelled out billions of dollars to acquire IBM’s System x X86 server business, but don’t be confused. Lenovo is keeping its eye on developments in the ARM server processor market. To see what kind of potential ARM server chips have in the HPC arena, Lenovo has just teamed up with Hartree Centre, a supercomputing center in the United Kingdom, to build a prototype cluster that will marry Lenovo’s NeXtScale minimalist server designs with Cavium’s ThunderX ARM server processors.

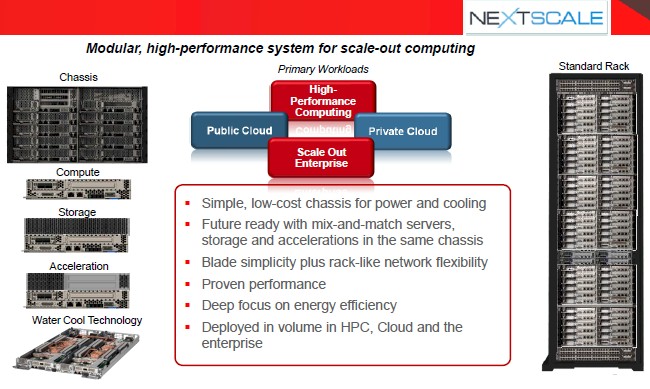

The NeXtScale machines debuted from IBM back in September 2013, and they are the follow-on machines to its iDataPlex systems. Both machines are aimed at high performance computing and hyperscale customers alike, but Big Blue got more traction with both lines among the HPC crowd than it did among the hyperscalers. IBM officially supported only Xeon processors from Intel in the iDataPlex and NeXtScale machines, and Lenovo is sticking with that plan for now, too, except for a few prototypes.

This is not the first time that the former IBM techies who now work for Lenovo have built a prototype ARM machine. A few years back, says Scott Tease, executive director of Lenovo’s HPC business, IBM put together a NeXtScale system that married 32-bit processors from now-defunct ARM chip designer Calxeda with 3 TB disk drives to create a server sled with integrated networking that could support six drives for 18 TB of capacity per sled. This storage array was “a little too early” in terms of the software stack and market acceptance, but Tease says that Lenovo is convinced that “there is real gold at the end of the tunnel” when it comes to ARM processors, “but it is a big tunnel to get through to get to the gold.”

Hartree Centre was founded in 2012 in the United Kingdom by the Science & Technology Facilities Council to, among other things, explore energy efficient HPC systems. Specifically, Hartree Centre has been working on compiler and application optimization to improve the energy efficiency of supercomputers since it was established with £37.5 million from the UK government. Hartree Centre operates in the Sci-Tech Daresbury and the Harwell Oxford national labs. The Science & Technology Facilities Council received over £170 million in funding for its various facilities, and £19 million of that has been allocated to do research on low-power computing architectures.

The supercomputing organization currently has two systems built by IBM. The first is a 114,000-core BlueGene/Q massively parallel machine with 7 TB of main memory across its nodes; this is called Blue Joule. The second machine is a 512-node System x iDataPlex cluster with 8,192 Xeon E5-2600 cores and 16 TB of main memory. Both machines share a parallel storage array running IBM’s GPFS that was built by DataDirect Networks using its SFA10K storage arrays and that has a total of 5.7 PB of storage capacity. The clusters have an IBM System Storage TS3500 tape library backing them up that has 15 PB of capacity.

Lenovo is building the prototype ARM-based NeXtScale machine now, and somewhere in the middle of this year, Hartree Centre will have it installed and will start tuning it up and putting it through its paces.

The thermal and price/performance advantages for server-class ARM processors compared to Xeon and Opteron chips are still too hard to quantify, says Tease, because many of the chips are not yet shipping and even those that are still have compilers and software stacks that will need considerable tuning to get every ounce of performance out of the chips.

What has Lenovo and Hartree Centre both excited is the system-on-chip design of ARM server chips, which include multiple cores, networking and other peripheral controllers, and various accelerators to speed up encryption, data compression, and other functions. While the X86 server node presents a solid compute foundation that most software runs on, to fully utilize it in an HPC environment companies have to add networking and storage controllers and perhaps encryption and other kinds of accelerators to have an energy efficient node as the basis of a cluster.

Lenovo and Hartree want to build a NeXtScale system that eliminates as many of those outside peripheral components as possible and exploits the functions built into an ARM-based system-on-chip. Lenovo and Hartree are experimenting to see if they can trade off some of the wide choice in peripherals available in the X86 hardware stack for an ARM-based system that has as many elements of the system on the chip as possible. This will, they hope, eliminate cost while boosting energy efficiency compared to systems that run applications without any acceleration or that accelerate them with field programmable gate arrays (FPGAs), digital signal processors, or graphics processing units (GPUs). There is only one way to find out for sure if this will work, and that is to build a system. (Incidentally, Intel is widely expected to deliver a Xeon-based SoC sometime this year based on its “Broadwell” designs, so ARM will not be the only SoC game in town.)

The NeXtScale systems are minimalist server designs that have a low-cost chassis and a mix of plain compute, accelerated compute, and storage nodes that fit into the enclosures. The prototype being developed by Lenovo and Hartree Centre is not aimed at every workload out there in the datacenter, and will not be aimed at those workloads that need the very highest single-thread or single-core performance, says Tease. Many commercial software packages will probably not run on the ARM nodes for a while, either. Hartree is looking at Canonical’s Ubuntu Server and Red Hat’s Fedora development release as potential operating systems for the ARM cluster. It is also seeking to join Red Hat’s early access program for the ARM variant of Linux that Red Hat is creating, which will sit somewhere between Red Hat Enterprise Linux and Fedora in terms of support when it comes out.

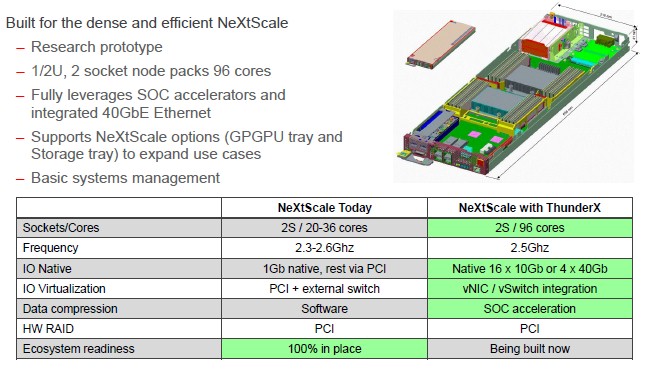

Lenovo is working with all of the ARM server chip makers – AMD, Applied Micro, Broadcom, Cavium, and Qualcomm – to keep abreast of what they are doing, but for this prototype Lenovo and Hartree Centre chose the ThunderX ARM variant from Cavium as the main engine. The ThunderX chip is interesting for a number of reasons. First, it has two-socket NUMA linking of processors and their main memories, giving it parity in this respect with a Xeon E5-2600 processor from Intel. The entry ThunderX will have eight cores and consume about 20 watts, and a top-end 48-core ThunderX chip will burn about 95 watts. Cavium has said that once you add the NUMA and peripheral chipset and a LAN-on-motherboard card to a Xeon E5-2600, the ThunderX has about a 50 percent lower power envelope at roughly equivalent performance.

Interestingly, the top-end ThunderX chips will have an integrated Layer 2/3 switch fabric that is based on chips developed by XPliant, which Cavium acquired in July 2014. The fastest XPliant Ethernet network ASIC can push 32 ports running at 100 Gb/sec speeds, 64 ports running at either 50 Gb/sec or 40 Gb/sec, and 128 ports running at 25 Gb/sec or 10 Gb/sec. All that Cavium has said to date is that the high-end ThunderX chips will have multiple 10 Gb/sec and 40 Gb/sec ports coming right off the SoC. The idea is to eliminate the need for some of the top-of-rack switches in a typical rack of server nodes in a cluster. We can see from the presentation above that Hartree Centre will be getting the top-end 48-core ThunderX chips running at 2.5 GHz, and further that these chips will have four 40 Gb/sec ports on them. Customers can use these for very thick pipes between server nodes and switches or use breakout cables to turn them into 16 ports running at 10 Gb/sec speeds. (Presumably half of that port count comes from each ThunderX chip in a two-socket node.) The upshot, says Tease, is that you can build a mesh or torus topology with a network of these ThunderX processing nodes without needing so many top-of-rack switches.
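The port arithmetic is easy to check. The short Python sketch below is only an illustration: the function name `breakout` and its parameters are hypothetical, and it assumes each 40 Gb/sec port splits into four 10 Gb/sec lanes, which is how 40 Gb/sec Ethernet breakout cabling is normally arranged. It says nothing about how Cavium actually wires the ports on the SoC.

```python
def breakout(ports_40g: int, lanes_per_port: int = 4, lane_gbps: int = 10) -> dict:
    """Split 40 Gb/sec ports into 10 Gb/sec ports via breakout cables.

    Assumption (not from Cavium): every 40 Gb/sec port carries
    `lanes_per_port` lanes running at `lane_gbps` each.
    """
    ports_10g = ports_40g * lanes_per_port
    return {
        "ports_10g": ports_10g,
        # Aggregate bandwidth is the same whether or not the ports are split.
        "aggregate_gbps": ports_10g * lane_gbps,
    }


if __name__ == "__main__":
    # Four 40 Gb/sec ports, as quoted for the Hartree Centre configuration.
    print(breakout(4))
```

Splitting the ports trades fat links for more neighbors, and a higher neighbor count is what a mesh or torus topology needs; the total bandwidth leaving the ports stays at 160 Gb/sec either way.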

The ARM-based NeXtScale machines will offer up to 1,152 cores across the dozen nodes in 6U of rack space. The Xeon-based nodes can deliver between 20 and 36 cores per node, or between 240 and 432 cores in the same 6U of space. It is anybody’s guess right now what the performance is for those ThunderX ARM cores, so it is hard to make a comparison. So much depends on the workload and how it stresses each system architecture.
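The ARM core count follows from simple multiplication, sketched here in Python. The helper name `cores_per_chassis` is hypothetical, and the two-socket node layout is inferred from the NUMA and port-count discussion above rather than from a published Lenovo specification.

```python
def cores_per_chassis(nodes: int, sockets_per_node: int, cores_per_socket: int) -> int:
    """Total cores in one enclosure: nodes x sockets x cores per socket."""
    return nodes * sockets_per_node * cores_per_socket


if __name__ == "__main__":
    # A dozen nodes in 6U, each assumed to hold two 48-core ThunderX chips.
    arm_cores = cores_per_chassis(nodes=12, sockets_per_node=2, cores_per_socket=48)
    print(f"ARM cores per 6U chassis: {arm_cores}")
```

The same function applies to the Xeon nodes by passing their per-node core count with `sockets_per_node=1`. It counts cores only and makes no claim about per-core performance.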

This lack of data is precisely why Hartree Centre and Lenovo are working together to build the prototype, and incidentally, if you want to work with Lenovo on a prototype cluster that employs GPUs as accelerators, Lenovo is looking for a partner to do that.

Lenovo is not the only HPC player that is building early ARM-GPU hybrids. At last year’s International Supercomputing Conference in Hamburg, Germany, Cirrascale took two of the “Mustang” system boards from Applied Micro, which are based on that chip maker’s initial “Storm” X-Gene 1 processor, and lashed a single Tesla GPU coprocessor to it to create a hybrid compute node. These half-width boards allow for up to 84 nodes to be stacked in a standard 42U rack, with each node consuming about 800 watts. Cirrascale is very clear that this is a development platform, and says it is aimed at various data analytics applications as well as seismic processing, computational biology and chemistry, weather and climate modeling, computational finance, computational physics, computer aided design, and computational fluid dynamics. In other words, the full HPC gamut plus any GPU-accelerated analytics, provided the code runs on Linux on ARM.

A prototype consisting of 78 of the dual-node, 1U Cirrascale ARM-GPU machines is being installed at Lawrence Livermore National Laboratory and will be tested using a variant of Red Hat’s Linux that runs on ARM chips that is available under an early access program. This early access Linux is best thought of as somewhere between the Fedora development release and the full-on Red Hat Enterprise Linux distribution. Red Hat has said it intends to deliver an ARM variant of its Linux stack that has for-fee technical support but stops short of being RHEL.

At the SC14 supercomputing conference in November, Cray announced that it is working with Cavium to deliver clusters based on the 48-core variant of the ThunderX chips to investigate the feasibility of using these chips to run HPC workloads. Cray also announced that it was awarded a contract under the FastForward 2 program, run by the US Department of Energy’s Office of Science and the National Nuclear Security Administration, to explore HPC architectures involving 64-bit ARM processors.

Eurotech, which sells dense, water-cooled systems that use both Intel Xeon Phi and Nvidia Tesla GPU accelerators married to processors for hybrid computing on supercomputers, announced its Aurora Hive hybrid machines at the SC14 event as well last fall. The Intel compute modules in the Aurora Hive combine Intel’s “Haswell” Xeon E3-1200 v3 processors with its Xeon Phi 7120x processors, with four Xeon Phis per Xeon E3; customers can also add in Nvidia Tesla K40 GPU coprocessors. Eurotech has put in a PCI-Express 3.0 switch from Avago Technologies (which now owns PLX Technology) to link the coprocessors to the CPUs; this switch also hooks out to a two-port 56 Gb/sec InfiniBand adapter from Mellanox Technologies. The compute bricks fit four across and sixteen high in the Hive rack, for a total of 64 nodes. Ignoring the performance of the four cores on each Xeon E3 processor, a rack of water-cooled Aurora Hive nodes delivers around 614 teraflops for Xeon Phi configurations and around 732 teraflops for Tesla GPU configurations. Eurotech is awaiting the “Shadowcat” X-Gene 2 chip to be delivered by Applied Micro before creating a hybrid ARM-Tesla compute node, and the plan is to ship such an option before June this year.

Interconnect details disclosed here suggest ARM is not ready for clustered computing. 40 GbE alone does not cut it: you need IB or RoCE offload to meet clustered computing performance requirements, leaving the main CPU cores for application compute cycles.