Oracle Takes The Whole Nvidia AI Stack For Its Cloud

The top hyperscalers and clouds are rich enough to build out infrastructure on a global scale and create just about any kind of platform they feel like. …

Predicting the future is hard, even with supercomputers. And maybe especially when you are talking about predicting the future of supercomputers. …

If the semiconductor business teaches us anything, it is that volumes matter more than architecture. …

Like everyone else on planet Earth, we were expecting the next generation of graphics cards based on the “Ada Lovelace” architecture to be announced at the GTC fall 2022 conference this week, but we did not expect the company to deliver a passively cooled, datacenter server friendly variant of the GeForce RTX 6000 series quite so fast. …

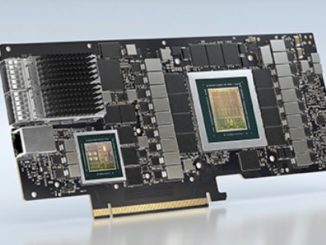

You can’t be certain about a lot of things in the world these days, but one thing you can count on is the voracious appetite for parallel compute, high bandwidth memory, and high bandwidth networking for AI training workloads. …

One of the first tenets of machine learning, which is a very precise kind of data analytics and statistical analysis, is that more data beats a better algorithm every time. …

China is the world’s second largest economy, it has the world’s largest population, and it is only a matter of time before it has a world-class technology ecosystem spanning the smallest transistors to the largest hyperscale and HPC systems. …

Databases and datastores are by far the stickiest things in the datacenter. …

Imagine, if you will, that Nvidia had launched its forthcoming “Grace” Arm server CPU three years ago instead of early next year. …

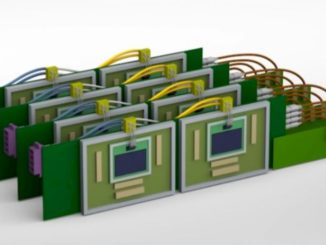

We have been talking about silicon photonics for so long that we are, probably like many of you, frustrated that it is not already ubiquitous. …

All Content Copyright The Next Platform