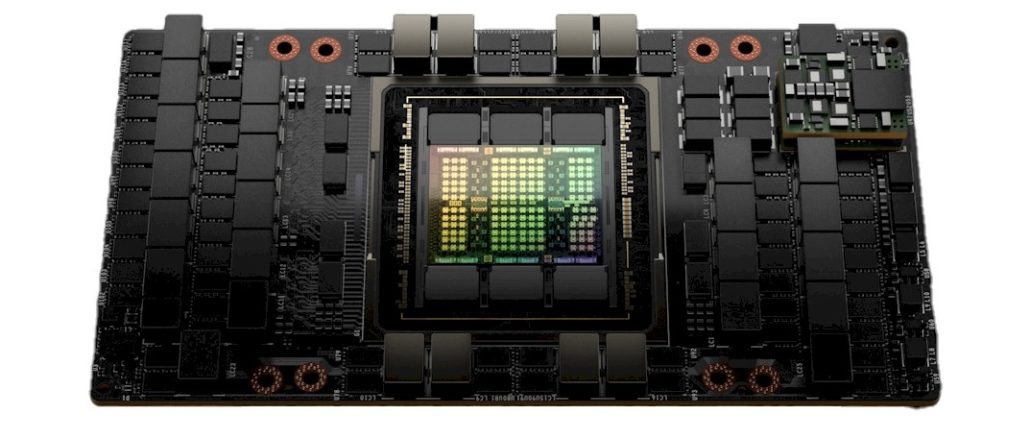

You can’t be certain about a lot of things in the world these days, but one thing you can count on is the voracious appetite for parallel compute, high bandwidth memory, and high bandwidth networking for AI training workloads. And that is why Nvidia can afford to milk its prior generation “Ampere” GA100 GPUs and take its time getting the “Hopper” GH100 follow-ons, announced back in March, into the field.

The gaming and cryptocurrency parts of the Nvidia business are taking it on the chin right now, but the datacenter business, driven largely by AI training and inference with a smattering of traditional HPC simulation and modeling, is doing just fine.

It’s not like there are any high volume alternatives to Nvidia GPUs at the moment. Intel isn’t shipping its “Ponte Vecchio” Xe HPC GPUs and probably won’t be until much later this year. And while AMD has fielded a pretty impressive “Aldebaran” Instinct MI250X GPU launched in November 2021, the company had to pour all of the manufacturing capacity of these devices into a couple of big HPC deals. And by the time AMD is able to sell the Instinct MI200 series – both PCI-Express MI210s launched in March and OAM form factor MI250Xs from nearly a year ago – in volume, Nvidia will be shipping the PCI-Express versions of its Hopper H100 GPU accelerators (which use the GH100 chip) in volume through the channel and through OEM and ODM partners starting in October. And according to Nvidia co-founder and chief executive officer Jensen Huang, the major clouds will have H100 GPU accelerators available “in just a few months.”

As you can see from the chart above, the DGX-H100 systems, which use the SXM5 socket for GH100 GPUs and using NVLink 4.0 interconnects instead of PCI-Express 5.0, are only available right now on the Nvidia Launchpad service it runs in conjunction with Equinix. Customers who want DGX-H100 systems can order now, but Nvidia will not be able to deliver them until Q1 2023.

Interestingly, we think that the HGX boards and the SXM5 socket for the GH100 GPU are being delayed because of the rumored delay by Intel in delivering the “Sapphire Rapids” Xeon SP processor, which has PCI-Express 5.0 links and support for the CXL protocol and which was chosen by Nvidia as the serial compute engine for its homegrown DGX-H100 Hopper accelerated systems. Intel has not admitted to this delay, and therefore Nvidia can’t do it on Intel’s behalf and so everyone danced around the issue.

How much does it matter that the DGX-H100 systems are delayed until Q1 2023? Not a hell of a lot over the long run and in the big picture.

First of all, the DGX servers are the Cadillac GPU accelerated system created by Nvidia for its own use and some marquee accounts; it is not a big part of the Datacenter group revenue stream – although we certainly think it could be some day if Nvidia thought otherwise about it.

Second, the hyperscalers and cloud builders who are going to be deploying HGX-H100 system boards with eight Hopper GPUs and NVLink 4.0 switch interconnect between them all can use whatever CPU they want as the serial processor. Some are going to use current “Ice Lake” Xeon SPs or current AMD “Milan” Epyc 7003s, which have only PCI-Express 4.0 links out to the GPU complexes. Some will wait for Sapphire Rapids and some will choose AMD’s forthcoming “Genoa” Epyc 7004s (we have seen that they might be called the Epyc 9000s), which have PCI-Express 5.0 support.

From what we have heard, a lot of the hyperscalers and cloud builders plan to use the PCI-Express versions of the Hopper GPU accelerators as inference engines for large language models, and they do not need PCI-Express 5.0 bandwidth back to the CPU for this work; PCI-Express 4.0 links will work fine. So the current Ice Lake Xeon SP and Milan Epyc 7003 processor can handle this work. Moreover, for big AI training runs, everybody is doing GPU Direct over InfiniBand networks to communicate between the GPUs across a cluster and they are not going back to the CPU anyway. In these cases, what they care about is having a lot of Hoppers connected locally in a node using NVLink and across nodes using InfiniBand or Ethernet with RoCE so GPU Direct keeps the CPU out of the loop. And those who have one monolithic, huge AI training job that spans thousands – and maybe up to 10,000 GPUs – for a single run. They want Hopper GPUs now because of the lower mixed precision and the higher performance compute and memory bandwidth.

“The majority of Hopper GPUs are going to the large CSPs followed by the major OEMs,” Ian Buck, general manager of Accelerated Computing at Nvidia, tells The Next Platform. “And they have all chosen slightly different CPUs in different configurations. And you will see a mix of those early offerings going that way, and then they are thinking about kickers for next year. I can’t tell you what they have chosen, but not all of them have chosen CPUs with PCI-Express 5.0.”

But there is another reason, and this might be why we can get PCI-Express versions of Hopper faster than the SXM5 versions on the HGX system boards.

“You have seen the building blocks from Computex earlier this year for Grace-Hopper,” Buck continues. “You can see where we are moving to a more modular design where is it one CPU to one GPU plus a switch chip, with everything linked by cables. Cables offer much, much higher signal integrity than PCB traces, although PCB traces have the virtue of being cheap. But they are just copper poured over fiberglass, so it doesn’t have very good dielectric capability. You can go to a more expensive PCB to get a better dielectric. But the best is a copper cable or an optical transceiver. And so many companies are doing PCI-Express cable connected systems already to improve the signal integrity. You do copper if you can, and optics after that. And not just to scale training outside of the box. We are seeing cables even inside of the box.”

Some system makers have been doing cables inside the node and fiber optics across the nodes for NUMA scale up for CPU systems for years. This is a bit like that.

As for the HGX-H100 boards, the delay is really about the qualification of these boards, and we begin to see why this might be the case, given what Buck tells us above. It is not about component delays as much as it is about testing and qualification of complex manufacturing processes.

Having said all of this, we think that Nvidia was hoping to have this all done earlier, but the coronavirus pandemic has messed up supply chains as well as design and manufacturing cycles up and down the IT ecosystem.

Which brings us to the “Grace” Arm server CPU that Nvidia is pairing with its Hopper GPUs. Nvidia never gave a hard deadline on when the Grace CPU would be delivered. When it was first announced and throughout last year and into early this year, as more was divulged about the Grace CPU, its deliver was qualified as “early 2023.” And now, the top brass at Nvidia are saying that systems will be available “in the first half of 2023.” In IT vendor speak, the former is a way to channel “no later than March or so” and the latter means “we will be kissing June 30 so hard it will think we are in love.”

In the longest of runs, this delay will not be significant, even if in the short run it is disappointing because we want to see what companies do with Grace and Hopper together, how it performs, and what they will cost as a hybrid compute and memory engine.

Long live the King.

H100 will ship for years in a multitude of configurations. Dedicated transformer hardware and the CUDA stack assures it will be SOTA for some time to come. Nvidia’s regular software performance kickers are a thing of beauty. Makes one wonder when all the AI chip startups — even AMD and Intel — exhaust their ML budgets chasing, with little business to show, and with little hope of catching up. I can’t help recalling how many competitors said emphatically Nvidia “lucked” into the ML business, and “GPUs aren’t purpose-built” enough so had little in the way of a future. Yet Grace ships next year in what’s looking likely to provide another performance boost, this time on the system level. Nvidia just keeps seeing and raising the pot. The rest of us are made to wonder when all the purpose-built parts are going to show up and provide more than a press release to try and wrestle the crown away…

Thank you for the insights. Do you anticipate NVIDIA ultimately abandoning the gaming market to focus exclusively on the data center in light of the challenges they are having there now?

It seems highly unlikely. The gaming business gives Nvidia the volume and a CUDA platform on every desktop. The pro viz business gives them render and inference engines. A lot of logic blocks are moved between the datacenter and the other two sets of customers. Nvidia would lose so much scale if it did that.

Thank for you Timothy for providing additional clarity and perspective. 🙂

Agree with TPM. Nvidia generally dominates the desktop PC Gaming market place with somewhere between 70-80% of the add-in card market share and higher than that in the ProVis segment. The “challenges” you inquire about are an over-inventory situation, which time will resolve in the near term. Long term, abandoning a very lucrative market like gaming doesn’t make sense. Streaming gaming services (via cloud or data center based gaming) will grow to dwarf Nvidia’s PC gaming add in card business (in many years). Nvidia will earn revenue in two areas, hardware sales to CSPs and software subscription sales to end users.

This makes a lot of sense and I learned a lot- thank you EC!