Nvidia: There’s A New Kid In Datacenter Town

Unless you were born sometime during World War II, you have never seen anything like this before from a computing system manufacturer. …

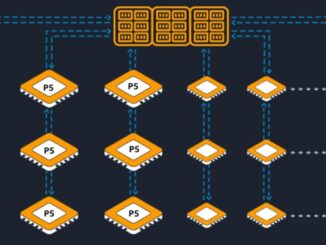

If large language models are the foundation of a new programming model, as Nvidia and many others believe they are, then the hybrid CPU-GPU compute engine is the new general purpose computing platform. …

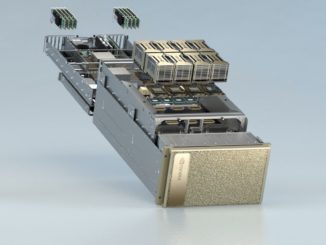

When we think about high performance computing, it is often in the context of liquid-cooled systems deployed in facilities specifically designed to accommodate their power and thermal requirements. …

UPDATED: It is funny what courses were the most fun and most useful when we look back at college. …

Here we go again. Some big hyperscalers and cloud builders and their ASIC and switch suppliers are unhappy about Ethernet, and rather than wait for the IEEE to address issues, they are taking matters into their own hands to create what will ultimately become an IEEE standard that moves Ethernet forward in a direction and speed of their choosing. …

The National Center for Supercomputing Applications at the University of Illinois fired up its Delta system back in April 2022, and now it has been given $10 million by the National Science Foundation to expand that machine with an AI partition, called DeltaAI appropriately enough, that is based on Nvidia’s “Hopper” H100 GPU accelerators. …

If you want to get the attention of server makers and compute engine providers and especially if you are going to be building GPU-laden clusters with shiny new gear to drive AI training and possibly AI inference for large language models and recommendation engines, the first thing you need is $1 billion. …

It was a fortuitous coincidence that Nvidia was already working on massively parallel GPU compute engines for doing calculations in HPC simulations and models when the machine learning tipping point happened, and similarly, it was fortunate for InfiniBand that it had the advantage of high bandwidth, low latency, and remote direct memory access across GPUs at that same moment. …

Updated With More MGX Specs: Whenever a compute engine maker also does motherboards as well as system designs, those companies that make motherboards (there are dozens who do) and create system designs (the original design manufacturers and the original equipment manufacturers) get a little bit nervous as well as a bit relieved. …

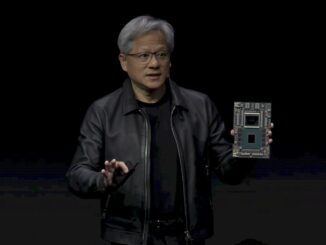

If you were hoping to get your hands on one of Nvidia’s “Grace” Arm-based CPUs, then you had better be prepared to buy a pretty big machine. …

All Content Copyright The Next Platform