Putting In-Memory Processing Through The Paces

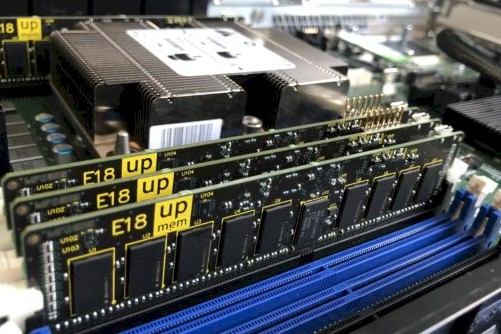

From a conceptual standpoint, embedding processing within main memory makes sense. It would eliminate many layers of latency between compute and memory in modern systems. It would also let the parallelism inherent in many workloads map elegantly onto distributed compute and storage components, which would speed up processing. …