Companies Need On-Premise HPC – And For More Than AI, Too

Generative AI, along with the various capacity and latency needs it has for compute and storage, is muscling out almost every other topic when conversations turn to HPC and enterprise. …

It is not a coincidence that the companies that got the most “Hopper” H100 allocations from Nvidia in 2023 were also the hyperscalers and cloud builders, who in many cases wear both hats and who are as interested in renting out their GPU capacity for others to build AI models as they are in innovating in the development of large language models. …

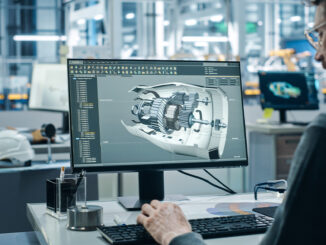

While a lot of people focus on the floating point and integer processing architectures of various kinds of compute engines, we are spending more and more of our time looking at memory hierarchies and interconnect hierarchies. …

If you want to take on Nvidia on its home turf of AI processing, then you had better bring more than your A game. …

If you handle hundreds of trillions of AI model executions per day, and expect that number to grow by one or two orders of magnitude as GenAI goes mainstream, you are going to need GPUs. …

PARTNER CONTENT: High performance computing (HPC) decision-makers are starting to prioritize energy efficiency in operations and procurement plans. …

Spoiler alert!

A lot of neat things have just been added to the Arm Neoverse datacenter compute roadmap, but one of them is not a datacenter-class, discrete GPU accelerator. …

For a lot of state universities in the United States, and for their counterparts in the regions and provinces of other nations across the globe, it is a lot easier to find extremely interested undergraduate and graduate students who want to contribute to the font of knowledge in high performance computing than it is to find the budget to build a top-notch supercomputer of reasonable scale. …

PARTNER CONTENT: Trillion-dollar industries are exploring new ways of increasing high performance computing (HPC) performance, while saving resources and reducing costs. …

In many ways, the “Grace” CG100 server processor created by Nvidia – its first true server CPU and a very useful adjunct for extending the memory space of its “Hopper” GH100 GPU accelerators – was designed perfectly for HPC simulation and modeling workloads. …

All Content Copyright The Next Platform