PARTNER CONTENT: Trillion-dollar industries are exploring new ways of increasing high-performance computing (HPC) performance while saving resources and reducing costs. Scientists and engineers are looking to build robust HPC infrastructure that runs complex workloads in the most energy-efficient way.

As a result, organizations are re-evaluating how they architect compute infrastructure for HPC workloads and are looking for new ways to improve application performance and reduce energy consumption. According to a recent study by Hyperion Research, organizations are increasingly choosing GPUs and the cloud to support their HPC workloads. Hyperion found that nearly every organization adopting HPC resources is either already using or investigating GPUs and the cloud to accelerate HPC workloads, improve network performance, and reduce costs.

Convergence Of Cloud, HPC, And AI/ML

A new category of HPC workloads is emerging. HPC users are adopting and integrating artificial intelligence (AI) and machine learning (ML) at increasing rates. Multiple methods and models exist, with large language models (LLMs) and a number of foundation models (FMs) drawing broad global interest from organizations.

Hyperion Research found that nearly 90 percent of HPC users surveyed are currently using or plan to use AI to enhance their HPC workloads. This includes hardware (processors, networking, data access), software (data management, queueing, developer tools), AI expertise (procurement strategy, maintenance, troubleshooting), and regulations (data provenance, data privacy, legal concerns).

As a result, organizations are experiencing a convergence of cloud, HPC, and AI/ML. Two simultaneous shifts are occurring: one toward workflows, ensembles, and broader integration; and another toward tightly coupled, high-performance capabilities. The outcome is closely integrated, massive-scale computing accelerating innovation across industries from automotive and financial services to healthcare, manufacturing, and beyond.

Common cross-industry HPC challenges are multiple and diverse, and include:

Rising energy costs – US consumers paid on average 14.3 percent more for electricity last year than the previous year. Organizations are looking to reduce operating costs for electricity used in cooling on-premises data centers while running HPC simulations and building AI and ML models at scale.

Limited power allocation – Energy price increases raise concerns about limited electricity supply and power allocation in on-premises data centers. Organizations need to optimize resources to ensure HPC workload continuity within the same power envelope.

Growing complexity of HPC and AI workloads – As HPC simulations grow in complexity and size, they require increasingly large computational resources that use massively parallel computing. HPC teams struggle to deliver high network performance alongside fast storage and large amounts of memory, while maintaining or reducing total power consumption.
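The power-envelope constraint described above comes down to simple arithmetic: energy is power multiplied by time, so a node that draws more power can still use less energy per job if it finishes sooner. The sketch below is purely illustrative. The function names and every number in it are hypothetical assumptions chosen for easy arithmetic, not measurements from AWS, NVIDIA, or Hyperion Research.

```python
def energy_per_job_kwh(node_power_kw: float, runtime_hours: float) -> float:
    """Energy used by one single-node job: power (kW) x time (h)."""
    return node_power_kw * runtime_hours


def max_nodes_in_envelope(envelope_kw: float, node_power_kw: float) -> int:
    """Largest whole number of nodes whose combined draw fits the envelope."""
    return int(envelope_kw // node_power_kw)


def jobs_per_day(envelope_kw: float, node_power_kw: float, runtime_hours: float) -> float:
    """Daily throughput when every node in the envelope runs jobs back to back."""
    nodes = max_nodes_in_envelope(envelope_kw, node_power_kw)
    return nodes * (24.0 / runtime_hours)


# Hypothetical inputs: a fixed 100 kW envelope and two node types.
ENVELOPE_KW = 100.0
CPU_NODE = {"power_kw": 0.5, "runtime_hours": 8.0}   # assumed values
GPU_NODE = {"power_kw": 2.0, "runtime_hours": 1.5}   # assumed values

if __name__ == "__main__":
    for name, node in (("CPU", CPU_NODE), ("GPU", GPU_NODE)):
        energy = energy_per_job_kwh(node["power_kw"], node["runtime_hours"])
        daily = jobs_per_day(ENVELOPE_KW, node["power_kw"], node["runtime_hours"])
        print(f"{name}: {energy:.1f} kWh per job, {daily:.0f} jobs per day")
```

With these assumed inputs the higher-power node comes out ahead on both energy per job and daily throughput, but only because its assumed runtime is much shorter. Real results depend entirely on measured power draw and measured speedup for the workload in question.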

Solve HPC Challenges With Accelerated Computing

With Amazon Web Services (AWS), organizations can overcome their HPC challenges by accessing flexible compute capacity and a variety of purpose-built tools. AWS service levels ensure elasticity from 100 instances to 1,000 instances and more in minutes, which reduces the time that HPC users previously spent waiting in queues to access compute infrastructure.

Scientists and engineers can dynamically scale their HPC infrastructure according to their demands and budgets, while organizations can use Amazon Elastic Compute Cloud (Amazon EC2) instances, powered by NVIDIA GPUs, to run more HPC workloads faster, typically for the same amount of energy consumed.

AWS and NVIDIA empower HPC use cases by providing flexible, scalable, high-performance file systems, batch orchestration and cluster management, low-latency and high-throughput networking, as well as accelerated computing libraries, frameworks, and SDKs.

Purpose-built tools and services enable HPC teams to build solutions that accelerate results. Teams can use cloud resources by defining jobs and environments with AWS Batch, and can run their largest HPC applications anywhere using NICE DCV for remote visualization.
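As a rough illustration of what defining a job with AWS Batch can look like, the sketch below builds the arguments for an array job that fans one simulation out into many GPU tasks. It is a minimal sketch under stated assumptions, not a recommended setup: the helper names, the queue name `hpc-gpu-queue`, the job definition name `cfd-solver`, and the command are all hypothetical, and the queue and job definition would have to exist in the account already. The only AWS call used is `submit_job` on the boto3 Batch client, and it is imported lazily so the request can be inspected without credentials.

```python
from typing import Any, Dict, List


def build_array_job_request(
    job_name: str,
    job_queue: str,
    job_definition: str,
    tasks: int,
    gpus_per_task: int,
    command: List[str],
) -> Dict[str, Any]:
    """Build keyword arguments for the boto3 Batch client's submit_job call."""
    # AWS Batch array jobs accept between 2 and 10,000 child tasks.
    if not 2 <= tasks <= 10_000:
        raise ValueError("array size must be between 2 and 10,000")
    return {
        "jobName": job_name,
        "jobQueue": job_queue,            # hypothetical queue name
        "jobDefinition": job_definition,  # hypothetical job definition name
        "arrayProperties": {"size": tasks},
        "containerOverrides": {
            "command": command,
            # Resource values are passed to the API as strings.
            "resourceRequirements": [
                {"type": "GPU", "value": str(gpus_per_task)},
            ],
        },
    }


def submit(request: Dict[str, Any]) -> str:
    """Submit the request and return the job ID (needs boto3 and AWS credentials)."""
    import boto3  # imported here so building a request works without boto3

    response = boto3.client("batch").submit_job(**request)
    return response["jobId"]


# Hypothetical example: 100 tasks, one GPU each.
request = build_array_job_request(
    job_name="cfd-sweep",
    job_queue="hpc-gpu-queue",
    job_definition="cfd-solver",
    tasks=100,
    gpus_per_task=1,
    command=["python", "run_case.py"],
)
```

Each child task of an array job receives its position in the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable, which a script such as the hypothetical `run_case.py` could use to pick its input case.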

NVIDIA GPU-powered instances on AWS are capable of running the largest models and datasets. Combined with SDKs such as the NVIDIA HPC SDK GPU-Optimized AMI and developer libraries, these instances give organizations a better foundation and support for developing highly performant HPC infrastructure.

Adding accelerated computing on AWS enables industry leaders to accelerate their HPC workloads with AI and ML tools including TensorFlow and PyTorch.

In addition, AWS and NVIDIA announced a strategic collaboration to offer new supercomputing infrastructure, software, and services to supercharge HPC, design and simulation workloads, and generative AI. This includes NVIDIA DGX Cloud coming to AWS and Amazon EC2 instances powered by the NVIDIA GH200 Grace Hopper Superchip and by H200, L40S, and L4 GPUs.

How Industries Are Benefiting From HPC In The Cloud

Healthcare and Life Sciences – Scientists and researchers can run large-scale HPC simulations and train large models for drug discovery more efficiently. NVIDIA GPU-accelerated tools and services running on AWS reduce data processing times and accelerate genomic sequencing, resulting in shorter drug development times and lower costs, while using less energy for HPC workloads run during research and development.

Financial Services – Banks, trading firms, fintechs, and other financial institutions are using HPC and AI/ML on AWS to build models and applications for risk modeling, trading, portfolio optimization, customer experience improvements, and fraud detection. Using EC2 instances powered by NVIDIA GPUs, financial organizations can process data in real time and feed it into sophisticated ML models to shorten their time to results and reduce the energy consumed by modeling and simulation workloads.

Energy – Geoscientists, geophysicists, and reservoir engineers working with high-fidelity, 3D geophysics visualizations for reservoir simulation and seismic processing can use EC2 instances powered by NVIDIA GPUs. The scalable HPC infrastructure and AI/ML tools enhance the models used for subsurface understanding, and faster simulations reduce energy consumption.

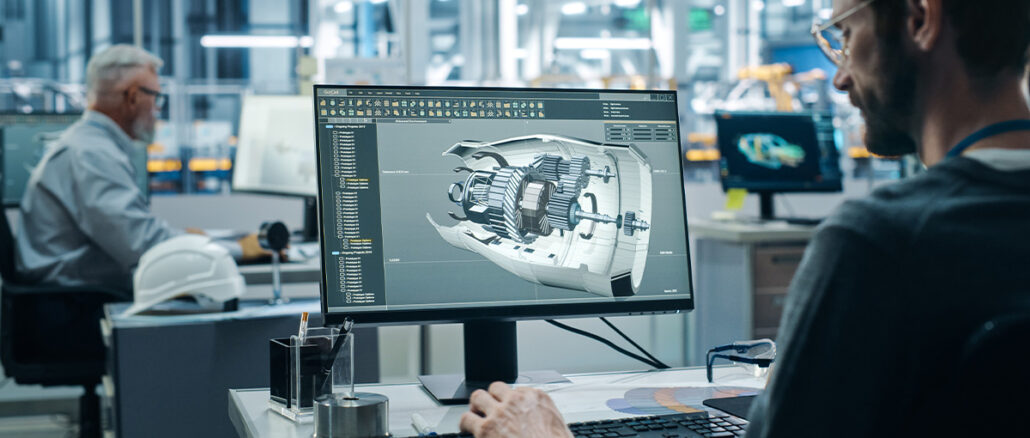

Manufacturing – Industrial engineers can run the computational fluid dynamics (CFD) simulations needed to optimize product design with high throughput and low latency. With HPC and AI/ML tools and services from AWS and NVIDIA, engineers can run high-fidelity simulations faster, typically for the same amount of energy used, reducing total compute demands and improving the overall energy efficiency of simulations.

The scalability and agility of AWS combined with high-performance NVIDIA GPU-powered instances give scientists and engineers the ability to run large HPC simulations faster at scale.

Learn more about how AWS and NVIDIA can help accelerate HPC workloads

This article was contributed by AWS and NVIDIA.

Psst.. should say in line 1 this article is being FUNDED to promote AWS and Nvidia products/services.

We all know customers are pulling back all REAL AI production workloads and datasets on premises or into colos due to regulatory data restrictions and security issues, not to mention the EXTREMELY higher TCO when deploying nearly ANYTHING in the public cloud, hence the massive repatriation that ALL customers are doing. They can buy a massive AI config for a fraction of the public cloud annual TCO.. charging you for data across regions, network egress back out, not to mention a dozen services needed .. Where has ETHICAL reporting gone?

Most companies are going to perform model training in the cloud and perform inferencing work on premises. Some work will be performed in the cloud, other work will be performed on premises. Evangelists who promote only cloud or only self-hosting are fooling themselves. For some organizations it makes sense to go fully into the cloud, and others will host on premises, but most will pursue a combination of cloud, on-premises, and co-location hosting depending on market conditions.