For a lot of state universities in the United States, and their equivalent political organizations of regions or provinces in other nations across the globe, it is a lot easier to find extremely interested undergraduate and graduate students who want to contribute to the font of knowledge in high performance computing than it is to find the budget to build a top-notch supercomputer of reasonable scale.

There is only so much state and federal money to go around, and it tends to agglomerate in fairly large pools, which in the United States have been significantly enhanced over the decades by the National Science Foundation, the Department of Energy, and the Defense Advanced Research Projects Agency. (The latter lost its ambitions in supercomputing as we know it about a decade ago.) And so, each new supercomputer that is built by a state university, or a collection of state universities, is an achievement. And getting one built these days using state-of-the-art GPUs from Nvidia or AMD is a triumph, considering how scarce they are thanks to the voracious appetites of the hyperscalers and cloud builders, who these days are at the front of the line for new silicon, a spot that HPC centers used to hold.

It is with all of this in mind that we consider the “Cardinal” system that has just been acquired by the Ohio Supercomputer Center, which was established in 1987 and which has been a hotbed for advanced computer graphics and MPI interconnects and which has had its share of big iron back in the middle years of the HPC revolution, including machines from Convex, Cray, and IBM. For instance, the $22 million Cray Y-MP8/864 system installed in the summer of 1989 was the fastest machine in the world at that time, and a whippersnapper named Steve Jobs even showed up one day to talk to techies using the NeXT systems Jobs had built after being tossed summarily out of Apple.

OSC currently has three clusters that are being augmented by the new Cardinal system, which has a mix of CPU-only and CPU-GPU hybrid nodes to try to take on the diverse HPC and now AI workloads that universities all over the world need to support for the training of future IT professionals.

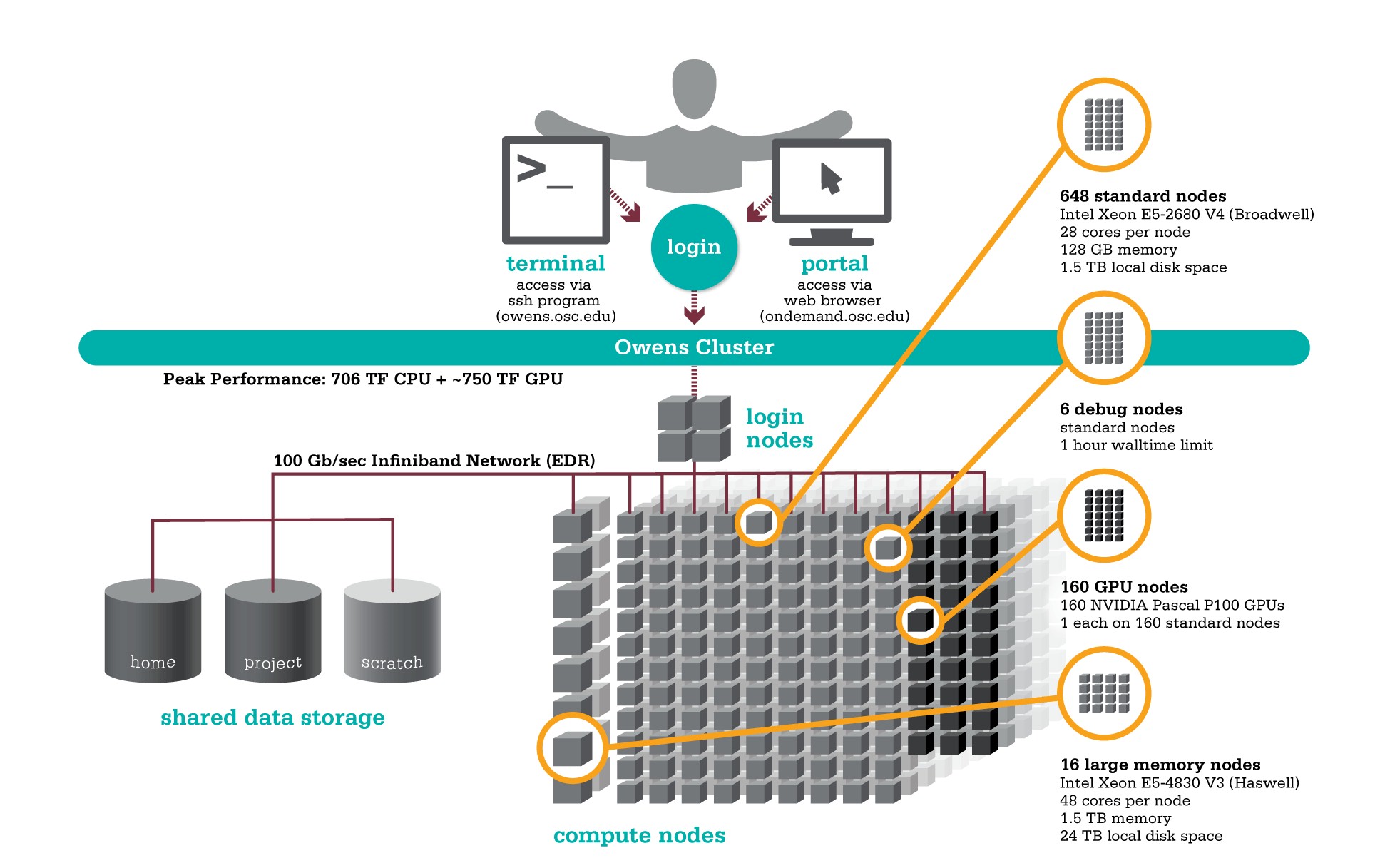

The oldest of the machines that are still running is nicknamed “Owens,” after Olympic gold medalist Jesse Owens, who was a Buckeye. The Owens supercomputer was built by Dell for $9.7 million in 2016. Given its graphics history, OSC has an excellent schematic of the machine:

The machine has 824 total nodes with 23,392 cores, mostly on “Broadwell” Xeon E5 processors, with a total of 750 teraflops peak FP64 performance; the GPUs add another 750 teraflops. Owens has a fast 100 Gb/sec InfiniBand network, which is why it is still in production on the north side of the Buckeye campus. The storage for the Owens machine was supplied by DataDirect Networks.

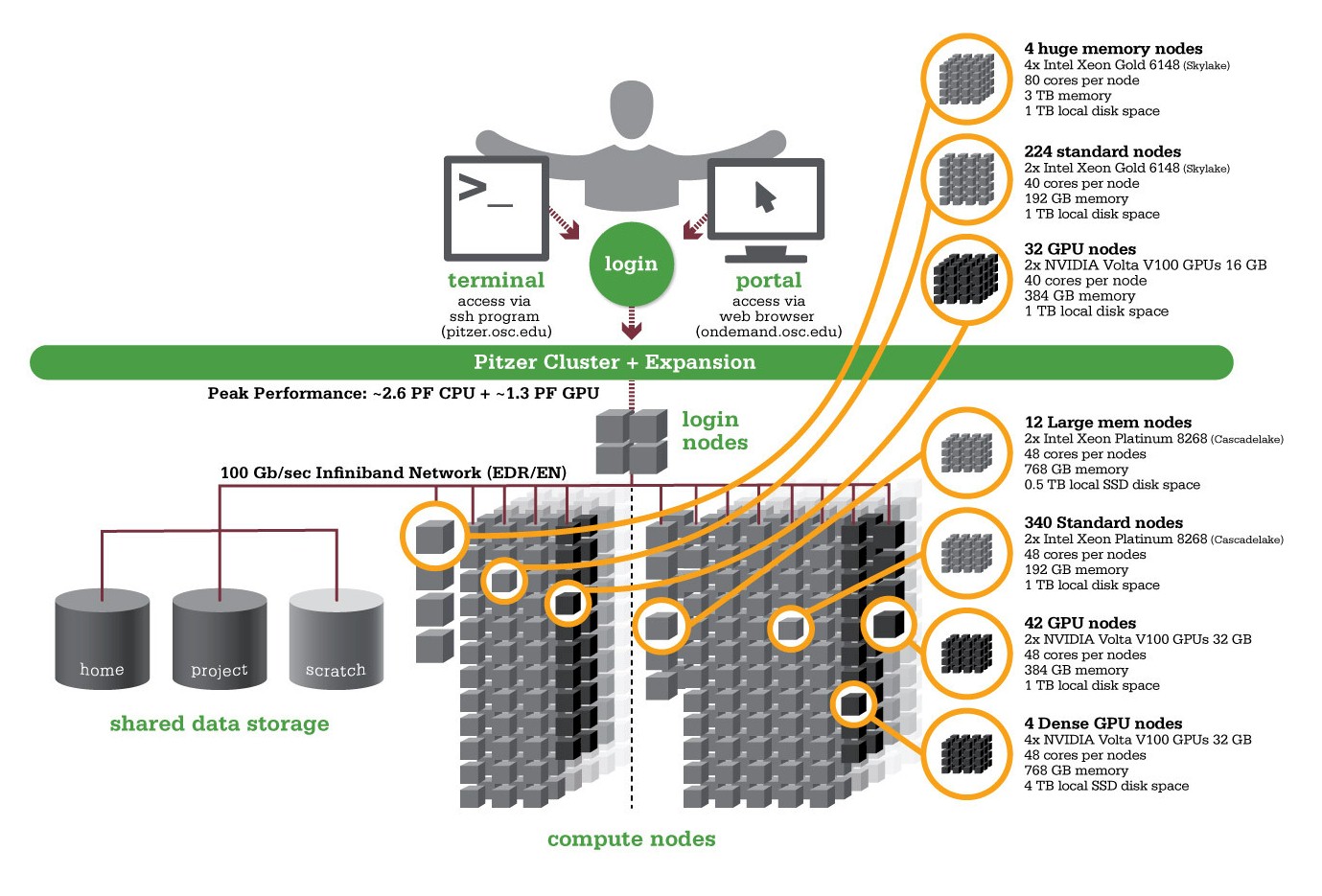

The next flagship machine installed at OSC was “Pitzer,” named after Russell Pitzer, a researcher at the Ohio State University who, like many others around the country, had to borrow time on machines running at the national labs to get the kind of HPC oomph they needed and who was one of the founders of OSC. This machine, which was built by Dell, was comprised of a mix of CPU-only and GPU accelerated nodes that was installed in 2018 and expanded in 2020. Here is the schematic for this system, which is also still in production:

There were 244 nodes installed in 2018 for the Pitzer machine, with a total of 10,560 cores, plus 32 nodes with a total of 64 Nvidia “Volta” V100 GPUs, plus a handful of experimental nodes. Another 340 CPU-only nodes were installed in 2020 with 19,104 cores plus another 46 nodes with 100 Nvidia V100 GPUs (there were four quad-GPU nodes, which is how you get to 100 there). Add it all up and Pitzer had a total of 658 nodes with 29,664 cores that provided 2.75 FP64 petaflops peak on the CPUs and 1.15 FP64 petaflops peak on the GPUs – just shy of 4 petaflops in total. Not a huge machine, but again with a 100 Gb/sec InfiniBand network, perfectly usable. (For some reason, those petaflops numbers don’t match the ones on the chart.)
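The Pitzer arithmetic is easy to lose track of across two phases, so here is a small sketch that redoes it using only the figures quoted above. One assumption is ours and not stated outright: the 42 GPU nodes from 2020 that are not quad-GPU nodes each carry two V100s, which is the only way the 100-GPU total works out.

```python
# Pitzer tally, using the figures quoted in the article.
# Assumption: the 2020 GPU nodes that are not quad-GPU nodes carry two V100s each.

PHASE_2018_CORES = 10_560
PHASE_2020_CORES = 19_104

GPUS_2018 = 64                 # 32 nodes with 64 V100s in total
GPU_NODES_2020 = 46
QUAD_GPU_NODES_2020 = 4

CPU_PEAK_PF = 2.75             # FP64 peak on the CPUs, petaflops
GPU_PEAK_PF = 1.15             # FP64 peak on the GPUs, petaflops


def pitzer_summary():
    """Recompute Pitzer's totals from the per-phase figures."""
    dual_gpu_nodes = GPU_NODES_2020 - QUAD_GPU_NODES_2020        # 42 nodes
    gpus_2020 = dual_gpu_nodes * 2 + QUAD_GPU_NODES_2020 * 4     # 84 + 16
    total_gpus = GPUS_2018 + gpus_2020
    return {
        "total_cores": PHASE_2018_CORES + PHASE_2020_CORES,
        "gpus_2020": gpus_2020,
        "total_gpus": total_gpus,
        "total_peak_pf": round(CPU_PEAK_PF + GPU_PEAK_PF, 2),
        # Implied FP64 peak per V100, in teraflops
        "tf_per_gpu": round(GPU_PEAK_PF * 1000 / total_gpus, 1),
    }


if __name__ == "__main__":
    for key, value in pitzer_summary().items():
        print(f"{key}: {value}")
```

The core count sums cleanly to 29,664, and the implied figure of roughly 7 teraflops per GPU sits in the range of Nvidia's published FP64 peaks for the V100 (7 to 7.8 teraflops, depending on the form factor).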

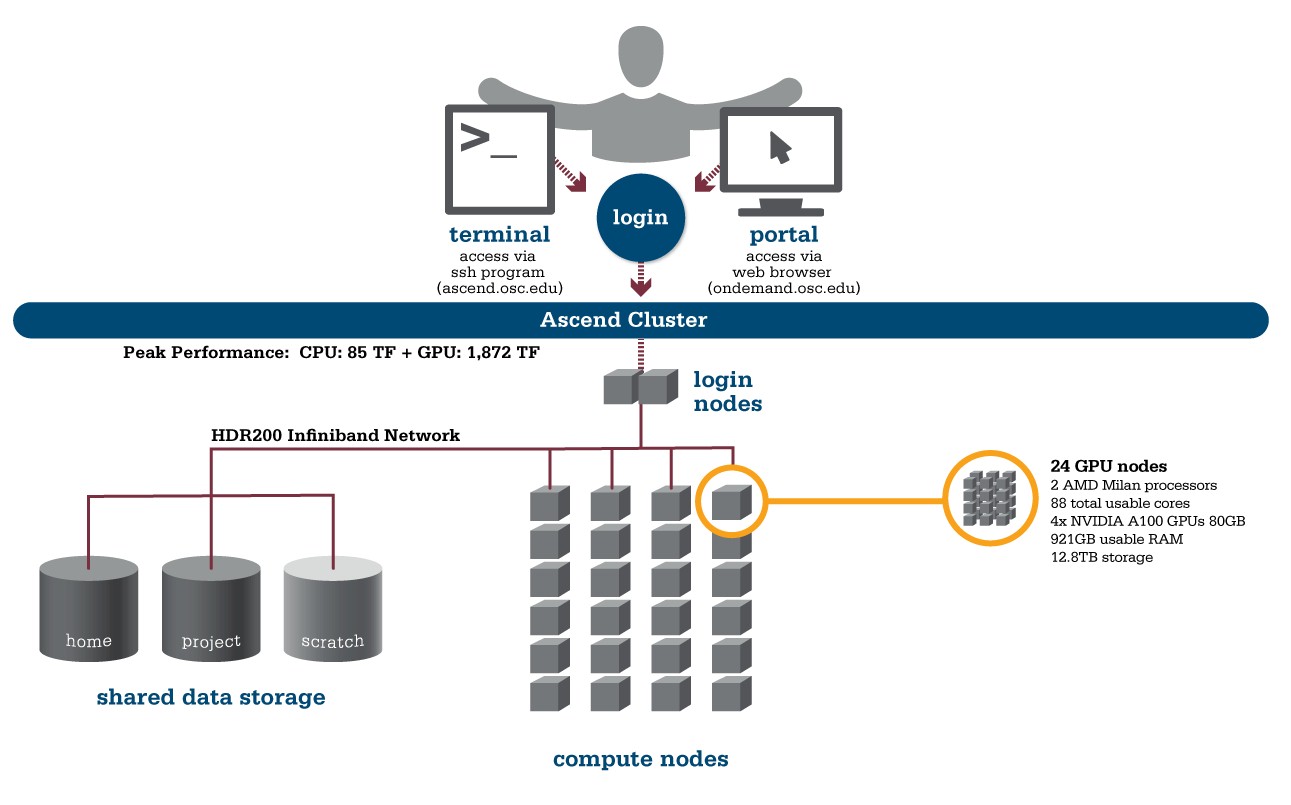

In 2022, the “Ascend” system was installed at OSC, also built by Dell. This one made no pretensions about having a mix of CPU and GPU compute, but was also not a very large system with only two dozen of Dell’s PowerEdge XE8545 nodes. Those nodes had two 44-core AMD “Milan” Epyc 7743 CPUs and a quad of Nvidia “Ampere” A100 GPUs. That was 2,112 cores rated at around 85 teraflops and 96 GPUs with 1.87 petaflops. Like this:
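A quick sanity check on the Ascend numbers, as a minimal sketch built only from the node counts and peak figure in the paragraph above:

```python
# Ascend, per the figures quoted above: 24 nodes, each with two 44-core CPUs
# and four A100 GPUs.
NODES = 24
CPUS_PER_NODE = 2
CORES_PER_CPU = 44
GPUS_PER_NODE = 4
GPU_PEAK_PF = 1.87  # quoted aggregate FP64 peak for the GPUs, petaflops


def ascend_summary():
    """Return (cores, gpus, implied FP64 teraflops per GPU)."""
    cores = NODES * CPUS_PER_NODE * CORES_PER_CPU
    gpus = NODES * GPUS_PER_NODE
    tf_per_gpu = round(GPU_PEAK_PF * 1000 / gpus, 1)
    return cores, gpus, tf_per_gpu


if __name__ == "__main__":
    cores, gpus, tf = ascend_summary()
    print(f"{cores} cores, {gpus} GPUs, ~{tf} TF FP64 per GPU")
```

The implied per-GPU figure of about 19.5 teraflops matches Nvidia's A100 peak for FP64 on the tensor cores rather than the 9.7 teraflops of plain FP64 math, so the 1.87 petaflops number appears to count tensor core throughput.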

That brings us to the new Cardinal machine, which is being built now and which does not yet have a snazzy schematic. This machine is, you guessed it, also being built by Dell, and will have 378 nodes in total with 39,132 cores and 128 GPUs. There are 326 nodes that are based on Intel’s “Sapphire Rapids” Xeon Max processors, which have 52 cores each; each two-socket node has 128 GB of HBM2e memory (64 GB per processor) as well as 512 GB of DDR5 memory. These are housed in the hyperscaler-inspired four-node PowerEdge C6620 enclosures with 1.6 TB of NVM-Express flash per node.

The Cardinal system also has 32 GPU nodes, each with a pair of Sapphire Rapids Xeon Platinum 8470 processors from Intel, off of which hang four “Hopper” H100 GPU accelerators. These GPU nodes have 1 TB of main memory on the pair of CPUs and 96 GB of HBM3 memory on each GPU. There are sixteen other nodes configured for data analytics workloads that employ a pair of the HBM-equipped Xeon Max processors and have 2 TB of memory and 12.8 TB of flash per node.
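To see how the Cardinal node types stack up, here is a rough tally of per-node memory and GPU counts. The 64 GB of HBM2e per Xeon Max package is Intel’s published spec; everything else comes from the paragraphs above, and the per-core figure is simply HBM capacity divided by the 104 cores in a two-socket node.

```python
# Rough tally of Cardinal's node memory and GPU counts.
HBM2E_GB_PER_XEON_MAX = 64       # Intel Xeon Max: 64 GB of HBM2e per package
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 52
DDR5_GB_PER_XEON_MAX_NODE = 512

GPU_NODES = 32
GPUS_PER_GPU_NODE = 4
HBM3_GB_PER_GPU = 96


def xeon_max_node_memory():
    """Memory on one CPU-only Xeon Max node, in GB."""
    hbm = HBM2E_GB_PER_XEON_MAX * SOCKETS_PER_NODE
    cores = CORES_PER_SOCKET * SOCKETS_PER_NODE
    return {
        "hbm_gb": hbm,
        "ddr5_gb": DDR5_GB_PER_XEON_MAX_NODE,
        "total_gb": hbm + DDR5_GB_PER_XEON_MAX_NODE,
        "hbm_gb_per_core": round(hbm / cores, 2),
    }


def gpu_partition():
    """GPU count across the GPU nodes and HBM3 capacity per GPU node."""
    return {
        "total_gpus": GPU_NODES * GPUS_PER_GPU_NODE,
        "hbm3_gb_per_node": GPUS_PER_GPU_NODE * HBM3_GB_PER_GPU,
    }


if __name__ == "__main__":
    print(xeon_max_node_memory())
    print(gpu_partition())
```

The 32 quad-GPU nodes account for exactly the 128 GPUs in the system total, and the HBM on a Xeon Max node works out to a bit more than 1 GB per core, which is worth keeping in mind for codes that want to live entirely in high bandwidth memory.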

Each of the standard nodes in the Cardinal machine is linked to the network with 200 Gb/sec InfiniBand, while each GPU node has four 400 Gb/sec InfiniBand adapters.
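The gap in network muscle between the two node types is easier to see as raw numbers. This is a minimal sketch that assumes a single 200 Gb/sec port per standard node (the port count is not spelled out above) and counts only nominal link rates, ignoring encoding and protocol overhead.

```python
# Nominal per-node InfiniBand injection bandwidth for Cardinal's node types.
# Assumption: one 200 Gb/sec port per standard node.
STANDARD_PORTS, STANDARD_GBPS = 1, 200
GPU_NODE_PORTS, GPU_NODE_GBPS = 4, 400
GPUS_PER_GPU_NODE = 4


def injection_gbps(ports, gbps_per_port):
    """Aggregate nominal link rate out of one node, in gigabits per second."""
    return ports * gbps_per_port


standard = injection_gbps(STANDARD_PORTS, STANDARD_GBPS)  # Gb/sec
gpu_node = injection_gbps(GPU_NODE_PORTS, GPU_NODE_GBPS)  # Gb/sec
per_gpu = gpu_node / GPUS_PER_GPU_NODE                     # Gb/sec per H100
gpu_node_gbytes = gpu_node / 8                             # GB/sec

if __name__ == "__main__":
    print(f"standard node: {standard} Gb/sec")
    print(f"GPU node: {gpu_node} Gb/sec ({gpu_node_gbytes:.0f} GB/sec), "
          f"{per_gpu:.0f} Gb/sec per GPU, {gpu_node // standard}X a standard node")
```

Under that assumption, a GPU node has eight times the injection bandwidth of a standard node, or 400 Gb/sec for each of its H100s.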

The combined nodes are expected to yield about 10.5 petaflops of peak FP64 performance, which means Cardinal alone will have about 40 percent more peak performance than OSC’s three current machines combined. When the Cardinal machine comes online in the second half of this year, OSC will retire the Owens system from 2016 and will have about 16.5 petaflops of aggregate FP64 oomph across the Pitzer, Ascend, and Cardinal systems.
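Those fleet-level claims can be checked with a back-of-the-envelope sketch that adds up the CPU and GPU peak FP64 figures quoted earlier for each machine. It uses nothing beyond those quoted numbers, so it inherits whatever rounding went into them.

```python
# Back-of-the-envelope check on OSC's fleet, using the peak FP64 figures
# quoted in this article (petaflops, CPUs + GPUs for each machine).
PEAK_PF = {
    "Owens": 0.75 + 0.75,
    "Pitzer": 2.75 + 1.15,
    "Ascend": 0.085 + 1.87,
}
CARDINAL_PF = 10.5


def current_peak():
    """Combined peak of the three machines running today."""
    return sum(PEAK_PF.values())


def cardinal_advantage():
    """Fractional lead of Cardinal over the three current machines combined."""
    return CARDINAL_PF / current_peak() - 1


def after_owens_retires():
    """Aggregate peak once Cardinal is in and Owens is switched off."""
    return current_peak() - PEAK_PF["Owens"] + CARDINAL_PF


if __name__ == "__main__":
    print(f"current fleet: {current_peak():.2f} PF")
    print(f"Cardinal lead: {cardinal_advantage():.0%}")
    print(f"after Owens retires: {after_owens_retires():.2f} PF")
```

The three current machines come to roughly 7.4 petaflops, which puts Cardinal a bit more than 40 percent ahead, and the post-Owens total lands near 16.4 petaflops, close to the roughly 16.5 petaflops quoted. The small difference is likely down to rounding and to which peak figures get counted (recall that the Pitzer chart does not match its own spec sheet).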

That is absolutely enough to get onto the Top500 list if OSC decided to run LINPACK on its iron, which it has not done since installing the Owens machine in 2016. OSC is focused on getting real science done and on teaching the next generation of HPC and AI experts, which is what academic supercomputer centers are supposed to do.