Crafting A DGX-Alike AI Server Out Of AMD GPUs And PCI Switches

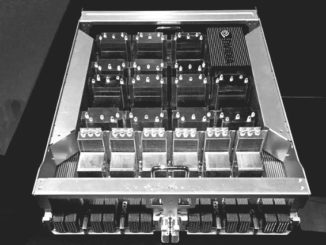

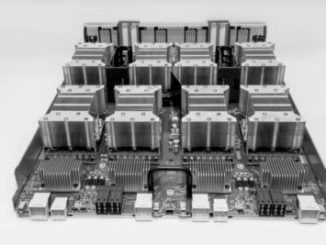

Not everybody can afford an Nvidia DGX AI server loaded up with the latest “Hopper” H100 GPU accelerators or even one of its many clones available from the OEMs and ODMs of the world. …


GPU computing platform maker Nvidia announced its financial results for its fiscal fourth quarter ended in January, which showed the same digestion of already acquired capacity by the hyperscalers and cloud builders and the same hesitation to spend by enterprises that other compute engine makers for datacenter computing are also seeing. …

Normally, when we look at a system, we start with the compute engines in very fine detail and then work our way out, across the intricacies of the nodes and then the interconnect and software stack that scales the system across those nodes into a distributed computing platform. …

A new CPU or GPU compute engine is always an exciting time for the datacenter because we get to see the results of years of work and clever thinking by hardware and software engineers who are trying to break through barriers with both their Dennard scaling and their Moore’s Law arms tied behind their backs. …

GPU chip maker Nvidia doesn’t just make the devices that end up in some of the largest supercomputers in the world. …

A research team from Nvidia has provided interesting insight into using mixed precision for deep learning training on very large training sets, and into how performance and scalability are affected when training recurrent neural networks with a batch size of 32,000. …

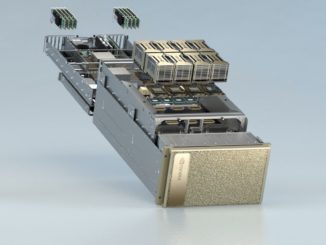

When Nvidia co-founder and chief executive officer Jensen Huang told the assembled multitudes at the keynote opening to the GPU Technology Conference that the new DGX-2 system, weighing in at 2 petaflops at half precision using the latest Tesla GPU accelerators, would cost $1.5 million when it became available in the third quarter, the audience paused for a few seconds, doing the human-speed math to try to reckon how that stacked up to the DGX-1 servers sporting eight Teslas. …

At well over $150,000 per appliance, the Volta GPU-based DGX appliances from Nvidia are set to appeal to the most bleeding-edge machine learning shops. They take aim at deep learning with framework integration and eight Volta GPUs linked with NVLink. …

One of the reasons why Nvidia has been able to quadruple revenues for its Tesla accelerators in recent quarters is that it does not just sell raw accelerators and PCI-Express cards. It has also become a system vendor in its own right through its DGX-1 server line. …

If you can’t beat the largest cloud players at economies of scale, the only option is to try to outrun them in performance, capabilities, or price. …

All Content Copyright The Next Platform